Anais do IHC'2001 - Departamento de Informática e Estatística - UFSC




Anais do IHC’2001 - IV Workshop sobre Fatores Humanos em Sistemas Computacionais

was divided into two phases. The first phase involved 27 non-expert people, without any experience in software development, web design or usability evaluations, but familiar with computers and web sites, while the second phase involved 13 software engineers and eight usability experts.

Method

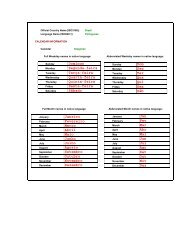

First, the non-expert evaluators were randomly assigned to five groups: a Control group (CNTL) and four other groups, each corresponding to a different inspection method. The Control group did not follow any method or document, while the others received the following documents related to these inspection methods:

• ISO - ISO 9241-10 Dialogue principles (ISO, 1996);

• EC - Ergonomic Criteria (Bastien & Scapin, 1993);

• INDEX - Usability Index Checklist for Websites (Keevil, 1998);

• HEU - Corporate Portal Heuristics (Dias, 2001).
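The random assignment to the five groups can be illustrated with a minimal sketch. The group labels come from the text; the evaluator identifiers, the seed and the round-robin dealing are assumptions made for illustration only, since the paper does not state the group sizes or the exact procedure used.

```python
import random

# Group labels taken from the text; everything else here is an assumption.
GROUPS = ["CNTL", "ISO", "EC", "INDEX", "HEU"]


def assign_at_random(evaluators, groups=GROUPS, seed=None):
    """Shuffle the evaluators, then deal them round-robin into the groups.

    Round-robin dealing keeps group sizes within one of each other.
    The paper does not say whether the real groups were balanced.
    """
    rng = random.Random(seed)
    shuffled = list(evaluators)
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for position, evaluator in enumerate(shuffled):
        assignment[groups[position % len(groups)]].append(evaluator)
    return assignment


# Hypothetical identifiers E01..E27 for the 27 non-expert participants.
evaluators = [f"E{n:02d}" for n in range(1, 28)]
assignment = assign_at_random(evaluators, seed=2001)
for group, members in assignment.items():
    print(group, len(members), members)
```

With 27 evaluators and five groups, this scheme yields two groups of six and three groups of five; a fixed seed makes the allocation reproducible.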

All evaluators were invited to read their method's document and to identify usability problems in the sample portal's interface, first by exploring it on their own and then by trying to perform three typical tasks. The researcher had identified these tasks as representative, based on a detailed analysis of the sample portal's context of use. The participants were then given an evaluation guide prepared for this study and asked to inspect, using their respective methods' documents, all web pages they walked through to complete the typical tasks.

Finally, each evaluator reported the usability problems they encountered on a common evaluation form, correlating each problem to a usability principle described in the method's document they used. On the same form, the evaluators answered 17 demographic questions and gave their opinion of the examined corporate portal and of the usability evaluation method used, by rating 13 satisfaction statements on a semantic differential scale (with two opposite adjectives for each statement).

The usability problems identified through use of the four methods were categorized using common metrics, so that data could be compared across methods on dimensions such as type of usability problem (general or recurring problems; problems of low, moderate or high severity; and problems associated with usability factors).
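As a rough illustration of comparing methods along one of these dimensions, the sketch below tallies distinct problems per method and severity level. The record layout and the sample records are hypothetical and invented only to show the shape of the tally; they are not data from the study.

```python
from collections import Counter

# Hypothetical records: (method, problem_id, severity). Not study data.
reports = [
    ("ISO", "P1", "low"),
    ("ISO", "P2", "high"),
    ("EC", "P1", "low"),
    ("INDEX", "P3", "moderate"),
    ("HEU", "P2", "high"),
    ("HEU", "P4", "moderate"),
]


def tally_by_method_and_severity(records):
    """Count distinct problems for each (method, severity) pair."""
    # A set removes repeated reports of the same problem within a method.
    distinct = set(records)
    return Counter((method, severity) for method, _problem, severity in distinct)


counts = tally_by_method_and_severity(reports)
for (method, severity), n in sorted(counts.items()):
    print(f"{method:6} {severity:9} {n}")
```

The same pattern would apply to the other dimensions (general versus recurring problems, or usability factors) by swapping the severity field for the relevant category.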

To compare the evaluation methods, the following criteria, gathered from previous comparative studies such as Jeffries et al. (1991) and Bastien, Scapin & Leulier (1996), were defined in advance: average time spent by evaluators; researcher time spent on evaluation planning, sessions and data analysis; number and type of identified usability problems; number of correct correlations between identified problems and the method's principles; size of aggregates (usability problems found by different numbers of evaluators); and evaluators' doubts and average opinion about the methods.
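The "size of aggregates" criterion can be read as counting, for each problem, how many evaluators reported it, and then counting how many problems fall into each size. The sketch below follows that reading, which is an interpretation rather than the authors' stated procedure; the evaluator and problem identifiers are hypothetical.

```python
from collections import Counter

# Hypothetical input: the set of problem identifiers each evaluator reported.
found_by = {
    "E01": {"P1", "P2"},
    "E02": {"P1"},
    "E03": {"P1", "P3"},
}


def aggregate_sizes(found_by):
    """Map each size k to the number of problems found by exactly k evaluators."""
    evaluators_per_problem = Counter(
        problem for problems in found_by.values() for problem in problems
    )
    return Counter(evaluators_per_problem.values())


sizes = aggregate_sizes(found_by)
for size in sorted(sizes):
    print(f"found by {size} evaluator(s): {sizes[size]} problem(s)")
```

In this toy input, P1 is reported by all three evaluators while P2 and P3 are each reported by one, so there are two aggregates of size 1 and one of size 3.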

Based on the preliminary results of the first phase against the comparison criteria above, two methods were selected for the second phase: the Corporate Portal Heuristics (HEU) and the Usability Index Checklist for Websites (INDEX). The software engineers were allocated to two groups, each corresponding to one of the methods, and the usability experts were divided in the same way. All four groups were invited to try to