Diploma Thesis - Bad Request - Fachhochschule Vorarlberg

Moment-based Facial Feature Tracking using Java

Diploma Thesis in the Degree Program

iTec - Information and Communication Engineering

Manuela Hutter

011 0109 038

Supervisor: Dipl.-Ing. (FH) Walter Ritter

Dornbirn, August 2005

Fachhochschule Vorarlberg GmbH

Statutory Declaration

I hereby declare on my honor that I have produced this thesis independently. Ideas taken directly or indirectly from external sources are marked as such. The thesis has not previously been submitted to any other examination board and has not yet been published.

Manuela Hutter (Dornbirn, August 2005)

Acknowledgments

Many people helped me with this work in one way or another. I especially thank Walter Ritter, my supervisor, for his patience and help in all matters. I am grateful to my introductory supervisor Miglena Dontschewa, who provided the initial idea for this thesis and sparked my enthusiasm for it; to Guido Kempter, who supported me in a difficult phase of the project and assisted with my statistical analyses; and to Avinash Manian, who gave me a helping hand with the data analysis in SPSS (thanks for your patience).

I thank Colin Gregory-Moores and Lisa Newman for helping me with the basic structure of my English writing; Regine Bolter, the head of the study program, for giving me important hints to get on the right track; and Justin Zobel for writing the most helpful book about “writing for computer science” [Zobel, 2004]. Thanks to Wolfgang Mähr for proofreading and making helpful suggestions, and to my brother Matthias Hutter for collecting statistical data. Last but not least, thanks to my parents, Christine and Josef Hutter, for their personal and financial commitment.

For scientific work with ethical awareness and without animal abuse.<br />

The use of registered names, trademarks etc. in this material does not imply, even in the absence of a specific statement, that<br />

such names are exempt from the relevant protective laws and regulations and therefore free for general use.<br />


Summary

This diploma thesis presents a platform-independent program for facial movement recognition, developed in Java. Tracking algorithms that locate and follow distinctive points in the human face are an important foundation for many different applications that build on them: 3D model animation needs points for animating a character's face; analyses of human emotions use the points for automatic classification of facial expressions; and alternative user interfaces can use facial movements as the basis of their operation. Numerous research papers describe efforts in the field of facial movement recognition. Nevertheless, practicable solutions are rare. Only one application was found on the market that solves the tracking problem in real time and without physical markers on the examined face; however, it works only on Windows platforms. The Java application developed here can be used on all platforms on which a ‘Java Virtual Machine’ is installed. It uses a tracking method that is based on image moments and a ‘Binary Space Partitioning’ data structure and that uses a Canny edge detector for data preparation. The software works with video input data, without markers on the observed face. It has a modular program structure that permits the use and exchange of external libraries. Currently, the ‘Java Media Framework’ is used for extracting the video frames, and either ‘Java2D’ or ‘Java Advanced Imaging’ for image preparation. The program can find relevant features in preselected image regions. Although the extracted points do not conform to standardized facial parameters such as the MPEG-4 ‘Facial Animation Parameters’, 2 examined sample points show remarkable correlations of up to 98% compared with manually determined points; detecting facial features on a preprocessed image takes about 5 ms. After the tracking process has finished, the points found can be saved to an output file to make them available for subsequent higher-level tasks.


Abstract

In this thesis, we present a platform-independent program for facial feature tracking, implemented in Java. Facial feature tracking algorithms, which locate and follow distinctive points in a human face, are an important basis for many different high-level tasks: 3D model animation needs feature points for moving the model’s facial features; programs that analyze human emotions use the points for automatic emotion recognition; and facial movements may provide a basis for alternative user interfaces. Numerous papers describe research efforts in the field of facial feature tracking. Nevertheless, practicable solutions are rare. We found only one application on the market that solves the tracking task in real time and without physical markers on the tracked face. However, it only works on Windows platforms. The implemented Java tracking program can be used on all platforms that have a ‘Java Virtual Machine’ installed. It uses a tracking method based on image moments and a ‘Binary Space Partitioning’ data structure; the input data is prepared by a Canny edge detection mechanism. The software works on video input, without markers on the processed face. It has a modular program structure that allows for the use and interchange of external libraries. Currently, it uses the ‘Java Media Framework’ for video frame extraction, and either ‘Java2D’ or ‘Java Advanced Imaging’ for preprocessing. The program is able to find relevant feature points in preselected image regions. While the extracted points are not in accordance with point definition standards like the MPEG-4 ‘Facial Animation Parameters’, 2 tested sample points show remarkable correlations of up to 98% in comparison to manually ascertained points; the computation of feature points on a preprocessed image region takes around 5 ms. After the tracking process, the extracted points can be saved to an output file in order to make them available for subsequent higher level tasks.


Contents

Introduction

1. State of the Art
1.1. Overview
1.2. Basic Tracking Process
1.3. Algorithms
1.3.1. Optical Flow Techniques
1.3.2. Active Contours (Snakes)
1.3.3. Image Moments
1.4. Commercial Implementations
1.5. Summary

2. Algorithms in Consideration
2.1. Overview
2.2. Testing Method
2.2.1. Testing Tool
2.2.2. Input Data
2.3. Testing Snake Algorithms
2.4. Testing Image Moments
2.5. Summary

3. Input Data and Its Preparation
3.1. Overview
3.2. Prerequisites
3.2.1. Data Format Prerequisites
3.2.2. Video Quality
3.2.3. Video Samples
3.3. Preparation
3.3.1. Overview
3.3.2. Edge Detection Algorithms
3.3.3. Edge Detector Realization
3.3.4. Further Improvements
3.4. Summary

4. Programming
4.1. Overview
4.2. Architecture
4.2.1. Structure
4.2.2. Basic Application Flow
4.2.3. Tracking Algorithm
4.3. Implementation Process
4.3.1. Working Environment
4.3.2. Difficulties
4.4. Summary

5. Evaluation
5.1. Overview
5.2. Program Abilities
5.3. Tracking Quality
5.3.1. Test Data
5.3.2. Technique
5.3.3. Statistical Evaluation
5.4. Time Usage
5.4.1. Technique
5.4.2. Statistical Evaluation
5.5. Summary

Conclusions

Bibliography

Glossary

A. Appendix
A.1. Evaluation Data: Coordinates of Corners of the Mouth
A.2. Evaluation Data: Tracking Mouth Area Selection (60x20)
A.3. Evaluation Data: Tracking of Whole Area Selection (384x288)
A.4. Evaluation Data: Canny Preprocessing
A.5. Evaluation Data: Sobel Preprocessing

Introduction

In this thesis, we develop a modular Java application that is able to detect and track facial features in video input data. The program finds distinctive points in the first frame of the input video and tracks them in subsequent video frames. Such facial feature trackers are needed in different fields of computer vision. One area of application includes facial expression recognition, classification and detection of emotional states, where feature points can be used for an automated recognition process. Another area of application is information-based encoding and compression; extracted feature information could, for example, be used for low-bandwidth video chats where only the movement information has to be transmitted. In terms of model acquisition and animation, feature points are needed for moving the character’s facial features. Facial movements may also provide a basis for alternative user interfaces that, for example, allow handicapped people to operate computers with facial expressions.

This project started because there is no freely available, platform-independent program with disclosed sources that is able to track feature points in an input video. Various papers deal with the tracking of facial movements, using different techniques to localize and follow facial features. These works describe their approach in a more or less transparent way and mostly mention that an example prototype was implemented, but they do not provide implementation details or source code. We assume that they mainly use C or C++ as programming languages; at least, we found no paper that explicitly uses Java for feature tracking. Despite the number of available approaches, only one solution, the commercial product VeeAnimator, which was developed for 3D model animation, currently solves the facial movement tracking task without the need for physical markers on the tracked face. In contrast to this application, we wanted to implement a free feature tracker that is based on a comprehensible tracking method, runs on different platforms, and comes with disclosed sources and documentation. It should help to understand tracking algorithms and to evaluate the feasibility of implementing a feature tracker in Java.

In this paper, we first investigate and evaluate various movement tracking algorithms according to cost factors and their comprehensibility and practicability. Existing tracking algorithms work with a range of different approaches. Techniques based on active contours can track deformable objects. However, they require manual initialization, and, due to their complexity, implementation effort as well as computation times may be too high. Local approaches, such as optical flow, are less complex and faster to compute than active contours. Still, they have some limitations; for instance, they may process more pixels than strictly necessary. Other algorithms designed for basic shape tracking, like the moment-based approach by Rocha et al. [2002], may not be applicable to facial movement tracking because they require clear, unfrayed objects in the input images.

Besides the comprehensibility and practicability of the algorithms, we also evaluate the quality of the obtained output. The extracted feature points may either follow defined standards, or they may not be consistent and well-positioned enough to fit into these norms. Figure 0.1 shows a possible tracking output, where the feature points are valid in the respect that they hold essential positions on the contour of the mouth. However, one feature point cannot permanently be defined as a certain facial feature point (the right corner of the mouth, for example), since its position may not be close enough to a predefined feature location, or the point’s position may unexpectedly change in subsequent video frames.

Figure 0.1.: Non-standardized output of facial feature localization. The tracked points (a) may not correlate with standardized points like the MPEG-4 Facial Animation Parameters (FAP) (b) [Antunes Abrantes and Pereira, 1999].

For evaluation and decision making, we used modified versions of existing Java code that implement the algorithms in consideration. We then selected one algorithm that scored well in the evaluation process to be translated into Java for use in the final program. The chosen approach is based on a shape tracking algorithm by Rocha et al. [2002]. It uses image moment calculations and a ‘Binary Space Partitioning’ data structure (described in Section 1.3.3) in order to find object positions and orientations. The algorithm is straightforward and has short computation times. However, the output data may not conform to standardized feature point definitions.
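As a minimal sketch of how image moments can yield an object's position and orientation, the following Java fragment computes the centroid from the raw moments and the principal-axis angle from the second-order central moments of a binary edge image. The class and method names are hypothetical and are not taken from the thesis code or from Rocha et al. [2002]; the ‘Binary Space Partitioning’ subdivision is left out entirely.

```java
/** Hypothetical helper for illustration only; not the thesis implementation. */
final class ImageMoments {

    /**
     * Returns {centroidX, centroidY, orientationInRadians} of all set pixels.
     * The image is indexed as edge[y][x].
     */
    static double[] centroidAndOrientation(boolean[][] edge) {
        // Raw moments m00 (pixel count), m10 and m01 (coordinate sums).
        double m00 = 0, m10 = 0, m01 = 0;
        for (int y = 0; y < edge.length; y++) {
            for (int x = 0; x < edge[y].length; x++) {
                if (edge[y][x]) {
                    m00++;
                    m10 += x;
                    m01 += y;
                }
            }
        }
        if (m00 == 0) {
            throw new IllegalArgumentException("image contains no set pixels");
        }
        double cx = m10 / m00;
        double cy = m01 / m00;

        // Second-order central moments, taken relative to the centroid.
        double mu20 = 0, mu02 = 0, mu11 = 0;
        for (int y = 0; y < edge.length; y++) {
            for (int x = 0; x < edge[y].length; x++) {
                if (edge[y][x]) {
                    double dx = x - cx;
                    double dy = y - cy;
                    mu20 += dx * dx;
                    mu02 += dy * dy;
                    mu11 += dx * dy;
                }
            }
        }

        // Angle of the principal axis with respect to the x axis.
        double theta = 0.5 * Math.atan2(2 * mu11, mu20 - mu02);
        return new double[] {cx, cy, theta};
    }
}
```

For a horizontal row of pixels the angle is zero; for pixels on the main diagonal of the array it is a quarter of pi, measured in image coordinates where y grows downwards.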

The quality and the condition of the input data are very important factors for the algorithm to function and produce good results. To enhance these factors, we define certain prerequisites, select an appropriate Java library for the technical realization, and describe the preparation of the data. We therefore use the Java Media Framework (JMF) for video frame acquisition, and a Java2D based Canny edge detection mechanism for the data preprocessing.

The resulting Java program is able to execute simple tracking tasks on preselected facial regions. For convenience, the users can perform the preselection, and control the video flow using a Graphical User Interface (GUI). Because this work only deals with the basic tracking process and leaves out important procedures (like the determination of face position and orientation), the problem area was reduced and special prerequisites were added (as described in Section 3.2). The architecture of the Java tracker has a modular design with 3 exchangeable components: the part responsible for the video frame acquisition, the preprocessing mechanism and the tracking implementation (see Section 4.2).
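One way to picture three exchangeable components is a set of small interfaces wired together by a pipeline class. The sketch below is an assumption for illustration: the interface names, the method signatures, and the use of plain grey-value arrays as the frame type are all hypothetical and do not reproduce the structure described in Section 4.2.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical frame acquisition component; returns null when the video ends. */
interface FrameSource {
    int[][] nextFrame();
}

/** Hypothetical preprocessing component, for example an edge detector. */
interface Preprocessor {
    int[][] process(int[][] frame);
}

/** Hypothetical tracking component; each point is an {x, y} pair. */
interface FeatureTracker {
    List<int[]> track(int[][] edgeImage);
}

/** Wires the three components together; any of them can be swapped out. */
final class TrackingPipeline {
    private final FrameSource source;
    private final Preprocessor preprocessor;
    private final FeatureTracker tracker;

    TrackingPipeline(FrameSource source, Preprocessor preprocessor, FeatureTracker tracker) {
        this.source = source;
        this.preprocessor = preprocessor;
        this.tracker = tracker;
    }

    /** Runs all frames through preprocessing and tracking; one point list per frame. */
    List<List<int[]>> run() {
        List<List<int[]>> pointsPerFrame = new ArrayList<>();
        int[][] frame;
        while ((frame = source.nextFrame()) != null) {
            int[][] edges = preprocessor.process(frame);
            pointsPerFrame.add(tracker.track(edges));
        }
        return pointsPerFrame;
    }
}
```

With such a layout, replacing one preprocessing library by another would only require a different `Preprocessor` implementation, while the pipeline and the tracker stay untouched.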

The evaluation of the implemented application shows that the bottleneck in terms of computation times is the currently used Canny preprocessing technique. Investigations on two exemplary feature points, the corners of the mouth, demonstrate that the moment-based tracking algorithm produces results similar to manually ascertained points. Variations are mainly caused by ragged edges in the preprocessed images.

This paper is split up into five chapters:

Chapter 1 presents the basic tracking process and the current state of research on face localization and movement tracking. It gives an overview of commercial solutions and established algorithms in that field and compares their range of application, their strengths and weaknesses.

Chapter 2 goes into detail on preselected algorithms and analyzes the choice of the moment-based approach to be implemented in the final program. It describes sample implementations that we used for decision making, and reports on the test runs that we made in order to come to a decision.

Chapter 3 defines the required input data format for the program, the prerequisites and the preparation of videos for the tracking process. We compare techniques to read videos and split them up into single images, and we select the most appropriate option for our aims. For that purpose, we define video information constraints, like a convenient and constant position and orientation of the face in all video frames. Once the right input data is available, with the face in the right place, we need to transform the images into edge images so that the tracking algorithm can process them.

Chapter 4 describes the code development of the feature tracker. It illustrates the program architecture with its general structure and the basic application workflow. In that context, we also describe the implementation of the tracking algorithm in detail. In the second part of this chapter, we briefly outline the working environment, and state difficulties that arose during the implementation process.

Chapter 5 shows the achieved results of the Java feature tracker and evaluates them according to the correctness of 2 calculated feature points, the corners of the mouth, and the required preprocessing and calculation time.

1. State of the Art

1.1. Overview

In order to select the most appropriate facial feature tracking method for the Java implementation, we inspect the basic tracking process and look at the way it is implemented by different algorithms. We group these algorithms into 3 categories: optical flow techniques, active contour models (snakes), and moment-based shape tracking. For every group, we first state general definitions and properties in order to explain the mode of operation. We then give examples of approaches that use an algorithm of this group for facial feature tracking, and evaluate their feasibility for the Java feature tracker. In this context, we also introduce two commercial solutions, which unfortunately do not provide technical background information.

Facial feature tracking is not to be confused with face tracking, which aims to locate the complete face inside a video sequence, characterizing its position and orientation, but often not evaluating further details inside the tracked face. Face tracking may, however, be a preparatory step for, or mixed with, facial feature tracking. Several authors have dealt with the face tracking problem [Krüger et al., 2000; Sahbi and Boujemaa, 2002; Wu et al., 1999].

Within the field of computer vision, “recognition of the facial expressions is a very complex and interesting subject where there have been numerous research efforts” [Goto et al., 1999]. Most of these works are based on a similar process workflow, with videos and/or video streams as input data (see Section 1.2). However, they differ in their complexity, the initialization strategy and output quality, and in the way they deduce facial feature points and movements. As the goal is to find a straightforward tracking algorithm, we examine existing algorithms according to cost factors, their comprehensibility and practicability. One group of techniques, based on snakes, can track deformable objects, but requires manual initialization and is computationally expensive. It may also require extensive training and implementation periods. Other approaches, such as optical flow, surpass more complex methods concerning computation speed, but “provide a low-level problem characterization and suffer from some drawbacks” [Rocha et al., 2002]: They might, for example, “integrate more pixel than strictly necessary” [Dellaert and Collins, 1999]. Basic shape tracking methods may not be applicable to facial movement tracking because they need clear, singular objects in the input images. All examined works provide a theoretical and mathematical description of the developed algorithm, but they lack an illustration of the programmatic implementation, flow diagrams, or code snippets. The commercial solutions provide very little technical background information; the tracking methodology is not disclosed. We found two products that are able to track facial features, but only one of them solves this task without the need for physical markers on the tracked face.

1.2. Basic Tracking Process

Most of the work in facial feature tracking is based on a similar basic process workflow. As illustrated in Figure 1.1, the process works on input data from standard video or web cameras. This data may be subject to some constraints, such as the distance between the tracked face and the camera, or lighting conditions (described in Section 3.2 for the Java feature tracker). The core tracking procedure consists of a number of steps. Most feature trackers have a preprocessing step, where the video data is prepared so that the tracking algorithm can process it. The image sequences may be smoothed, converted into a different color model, or object details may be accentuated (see Section 3.3). As facial feature tracking methods mostly assume a certain size or orientation of the tracked objects, the face has to be detected, located, and possibly transformed. Due to time constraints, this project does not deal with face localization and bridges this gap with input data prerequisites. Once the preprocessed facial data is in the right position in the image sequence, the feature tracking algorithm can set to work. Some algorithms, like the moment-based Java tracker or snake-based methods, require pre-selection of feature points or feature regions. In the case of the implemented Java program, this step could be automated in further development steps. After the tracking procedure, all methods provide more or less standardized facial parameters, 2D or 3D, depending on the tracker application. These parameters may then be used for facial model animation, or for high-level face processing tasks such as facial expression recognition, face classification or face identification.
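To make the preprocessing step more concrete, the sketch below converts a frame to grey values and smooths it with a 3x3 mean filter. It is an illustrative assumption, not the preprocessing used by the Java feature tracker: the class and method names are hypothetical, the grey conversion uses the common luma weights 0.299, 0.587 and 0.114, and the mean filter merely stands in for whatever smoothing a real tracker would apply.

```java
import java.awt.image.BufferedImage;

/** Hypothetical preprocessing helpers for illustration only. */
final class Preprocessing {

    /** Converts an RGB image to a grey-value array indexed as gray[y][x]. */
    static int[][] toGray(BufferedImage img) {
        int h = img.getHeight();
        int w = img.getWidth();
        int[][] gray = new int[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int rgb = img.getRGB(x, y);
                int r = (rgb >> 16) & 0xFF;
                int g = (rgb >> 8) & 0xFF;
                int b = rgb & 0xFF;
                // Weighted sum of the colour channels (common luma weights).
                gray[y][x] = (int) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
            }
        }
        return gray;
    }

    /** Smooths with a 3x3 mean filter; border pixels are copied unchanged. */
    static int[][] boxSmooth(int[][] gray) {
        int h = gray.length;
        int w = (h == 0) ? 0 : gray[0].length;
        int[][] out = new int[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                if (y == 0 || x == 0 || y == h - 1 || x == w - 1) {
                    out[y][x] = gray[y][x];
                    continue;
                }
                int sum = 0;
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        sum += gray[y + dy][x + dx];
                    }
                }
                out[y][x] = sum / 9; // integer mean of the 3x3 neighbourhood
            }
        }
        return out;
    }
}
```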


Figure 1.1.: Basic facial feature tracking workflow. All non-grey elements are part of the implemented Java feature tracker.

Gorodnichy [2003] has illustrated a similar “hierarchy of face processing tasks”. He does not state input and output data, but goes into detail with the face localization as a preliminary step, and he lists a range of higher level tasks. Facial feature tracking is not mentioned in his illustration; he seems to include this procedure in a step called “Face Localization (precise)”.

In the following section, we describe a set of well-established tracking methodologies and their use in the illustrated facial feature tracking workflow.

1.3. Algorithms

1.3.1. Optical Flow Techniques

Definitions and Properties

Optical flow is a concept for considering the motion of objects within a visual representation, where the motion is typically represented as vectors originating or terminating at pixels in a digital image sequence. Every pixel in an optical flow image is represented by a motion vector that indicates the direction and the intensity of motion at this point. The work of Beauchemin and Barron [1995] extensively describes optical flow techniques. Figure 1.2, taken from their work, illustrates the computation of optical flow.

Figure 1.2.: One frame of an image sequence (a) and its optical flow (b) [Beauchemin and Barron, 1995].

An optical flow algorithm “estimates the 2D flow field from image intensities” [Cutler and Turky, 1998]. In the survey of Cédras and Shah [1995], the methods are divided into four classes: differential methods, region-based matching, energy-based, and phase-based techniques:

“Differential methods compute the velocity from spatiotemporal derivates of image intensity. Methods for the computation of first order and second order derivates were devised, although estimates from second order approaches are usually poor and sparse. In region-based matching, the velocity is defined as the shift yielding the best fit between image regions, according to some similarity or distance measure. Energy-based (or frequency-based) methods compute optical flow using the output from the energy of velocity-tuned filters in the Fourier domain, while phase-based methods define velocity in terms of the phase behavior of band-pass filter output, for example the zero crossing techniques.”
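Of the four classes, region-based matching is the easiest to illustrate in a few lines. The sketch below is a plain exhaustive block matcher that uses the sum of squared differences (SSD) as its distance measure; this choice of measure, the class name, and the parameters are assumptions made for illustration, and the code is not taken from any of the cited works.

```java
/** Hypothetical exhaustive block matcher for illustration only. */
final class BlockMatcher {

    /**
     * Finds the shift {dx, dy} within [-radius, radius] that minimizes the SSD
     * between the window centred at (cx, cy) in prev and the shifted window in next.
     * Images are indexed as image[y][x]; windows leaving an image are skipped.
     */
    static int[] bestShift(int[][] prev, int[][] next, int cx, int cy, int half, int radius) {
        long bestSsd = Long.MAX_VALUE;
        int bestDx = 0;
        int bestDy = 0;
        for (int dy = -radius; dy <= radius; dy++) {
            for (int dx = -radius; dx <= radius; dx++) {
                long ssd = 0;
                boolean inside = true;
                for (int y = -half; y <= half && inside; y++) {
                    for (int x = -half; x <= half; x++) {
                        int py = cy + y;
                        int px = cx + x;
                        int ny = py + dy;
                        int nx = px + dx;
                        if (!inBounds(prev, px, py) || !inBounds(next, nx, ny)) {
                            inside = false;
                            break;
                        }
                        long diff = prev[py][px] - next[ny][nx];
                        ssd += diff * diff;
                    }
                }
                // Keep the first shift with the smallest distance found so far.
                if (inside && ssd < bestSsd) {
                    bestSsd = ssd;
                    bestDx = dx;
                    bestDy = dy;
                }
            }
        }
        return new int[] {bestDx, bestDy};
    }

    private static boolean inBounds(int[][] img, int x, int y) {
        return y >= 0 && y < img.length && x >= 0 && x < img[y].length;
    }
}
```

The returned shift plays the role of the velocity estimate for the window centre. The cost grows with the window area times the number of candidate shifts, which is one reason practical methods restrict the search range or work on image pyramids.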

Optical Flow for Feature Tracking

Cohn et al. An example of how optical flow techniques are used in facial feature tracking is described by Cohn et al. [1998]. In their work, they manually select feature points in the first frame. Each of these points is then defined as the center of a 13x13 pixel flow window. The position of all feature points is normalized by automatically mapping them to a standard face model based on three facial feature points: the medial canthi of both eyes and the uppermost point of the philtrum (see Figure 1.3).

Figure 1.3.: Standard face model according to Cohn et al. [1998]. Medial canthus: inner corner of the eye, philtrum: vertical groove in the upper lip.

A hierarchical optical flow method is used to automatically track feature points in the image sequence. The displacement of each feature point is calculated by subtracting its normalized position in the first frame from its current normalized position. The resulting flow vectors are concatenated to produce a 12-dimensional displacement vector in the brow region, a 16-dimensional displacement vector in the eye region, a 12-dimensional displacement vector in the nose region, and a 20-dimensional vector in the mouth region (see Figure 1.4). The technique is based on the Facial Action Coding System (FACS), a widespread method for measuring and describing facial behaviors developed by Ekman and Friesen [1978] in the 1970s. Facial activities are described in terms of a set of small, basic actions, each called an Action Unit (AU). The AUs are based on the anatomy of the face and occur as the result of one or more muscle actions.
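The displacement computation described above amounts to a per-point subtraction followed by concatenation. The following sketch shows this for one facial region; the class and method names are hypothetical. A 12-dimensional vector would correspond to six two-dimensional points, which is an inference from the stated dimension rather than a point count given in the text.

```java
/** Hypothetical helper for illustration only; not code from Cohn et al. */
final class Displacement {

    /**
     * Builds the concatenated displacement vector (dx0, dy0, dx1, dy1, ...)
     * from normalized {x, y} positions in the first and the current frame.
     */
    static double[] displacementVector(double[][] firstFrame, double[][] currentFrame) {
        if (firstFrame.length != currentFrame.length) {
            throw new IllegalArgumentException("point counts differ");
        }
        double[] vector = new double[2 * firstFrame.length];
        for (int i = 0; i < firstFrame.length; i++) {
            // Current normalized position minus normalized position in the first frame.
            vector[2 * i] = currentFrame[i][0] - firstFrame[i][0];
            vector[2 * i + 1] = currentFrame[i][1] - firstFrame[i][1];
        }
        return vector;
    }
}
```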

Figure 1.4.: Feature point displacements. Change from neutral expression (AU 0) to brow raise, eye widening, and mouth stretched wide open (AU 1+2+5+27). Lines trailing from the feature points represent displacement vectors due to expression [Cohn et al., 1998].

Essa and Pentland The work of Essa and Pentland [1997] describes another facial feature tracking method based on optical flow. They base their work on a self-developed encoding system “extending FACS”. They analyzed image sequences of facial expressions and probabilistically characterized the facial muscle activation associated with each expression. This is achieved using a detailed physics-based dynamic model of the skin and muscles coupled with optical flow in a feedback-controlled framework. They call this analysis a control-theoretic approach; it produces muscle-based representations of facial motion (Figure 1.5 shows an example).

Figure 1.5.: A motion field for the expression of smile from optical flow computation (a) mapped to a face model using the control-theoretic approach (b) [Essa and Pentland, 1997].

Evaluation

The approach by Cohn et al. uses the FACS for feature tracking. This system has been widely used for controlling computer animation, but was not originally developed for this purpose. The intended goal was to “create a reliable means for skilled human scorers to determine the category or categories in which to fit each facial behavior” (http://face-and-emotion.com/dataface/facs/description.jsp). Essa and Pentland [1997] state in their work that

“it is widely recognized that the lack of temporal and detailed spatial in-<br />

formation (both local and global) is a significant limitation to the FACS<br />

model. [...] Additionally, the heuristic ‘dictionary’ of facial actions origi-<br />

nally developed for FACS-based coding of emotion has proven to be difficult<br />

to adapt to machine recognition of facial expression”.<br />

The results of Cohn et al. show an accuracy between 83% and 92%, depending on the facial region, when compared with previous tests and with the codes of human testers. The authors name as one reason for the lack of 100% agreement “the inherent subjectivity of human FACS coding, which attenuates the reliability of human FACS codes” [Cohn et al., 1998]. Two other possible reasons are the “restricted number of optical flow feature windows and the reliance on a single computer vision method”. In contrast to the approach by Cohn et al., the solution by Essa and Pentland deals specifically with facial expression recognition. Their work describes a complete tracking framework, which includes a physics-based dynamic model of skin and muscles, something that is not intended for the Java tracker. Neither paper reports the complexity or the computation time of the tracking process.


1.3.2. Active Contours (Snakes)<br />

Definitions and Properties<br />


Active contour models, commonly called snakes, are energy-minimizing curves that<br />

deform to fit image features. Snakes, first introduced by Kass et al. [1988], “lock on to<br />

nearby minima in the potential energy generated by processing an image. (This energy<br />

is minimized by iterative gradient descent [...]) In addition, internal (smoothing) forces<br />

produce tension and stiffness that constrain the behavior of the models; external forces<br />

may be specified by a supervising process or a human user” [Ivins and Porrill, 1993].<br />

Figure 1.6 shows the basic functionality of a closed snake.<br />

Figure 1.6.: A closed snake. The snake’s ends are joined so that it forms a closed<br />

loop. Over a series of time steps the snake moves into alignment with the<br />

nearest salient feature [Ivins and Porrill, 1993].<br />

Snakes are applied to a range of different image processing problems. They sup-<br />

port the detection of lines and edges, but can also be used for stereo matching or for<br />

segmenting image sequences. Snakes have often been used in medical research appli-<br />

cations, and motion tracking systems use them to model moving objects. The main<br />

limitations of the models are that they “usually only incorporate edge information<br />

(ignoring other image characteristics) possibly combined with some prior expectation<br />

of shape; and that they must be initialized close to the feature of interest if they are<br />

to avoid being trapped by other local minima”[Ivins and Porrill, 1993] 1 .<br />

1 An overview of John Ivins’ publications about snakes is available at http://www.computing.edu.au/~jim/snakes.html


A snake $V$ is an ordered collection of $n$ points in the image plane:

\[ V = \{v_1, \ldots, v_n\}, \qquad v_i = (x_i, y_i), \quad i = 1, \ldots, n \tag{1.1} \]

The points in the contour iteratively approach the boundary of an object by solving an energy minimization problem. For each point in the neighborhood of $v_i$, an energy term is computed:

\[ E_i = \alpha E_{\mathrm{int}}(v_i) + \beta E_{\mathrm{ext}}(v_i) \tag{1.2} \]

where $E_{\mathrm{int}}(v_i)$ is an energy function dependent on the shape of the contour, and $E_{\mathrm{ext}}(v_i)$ is an energy function dependent on the image properties, such as the gradient, near point $v_i$. $\alpha$ and $\beta$ are constants providing the relative weighting of the energy terms. $E_i$, $E_{\mathrm{int}}$, and $E_{\mathrm{ext}}$ are calculated using matrices. The value at the center of each matrix corresponds to the contour energy at point $v_i$. The other values in the matrices correspond (spatially) to the energy at each point in the neighborhood of $v_i$. Each point $v_i$ is moved to the point $v'_i$, which corresponds to the location of the minimum value in $E_i$. This process is illustrated in Figure 1.7. If the energy functions are chosen correctly, the contour $V$ should approach the object boundary and stop when it reaches it.

Figure 1.7.: An example of the movement of a point vi in a snake. The point vi is<br />

the location of minimum energy due to a large gradient at that point<br />

[Mackiewich, 1995].<br />
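The point update described above can be sketched in Java as follows. This is only an illustration under stated assumptions, not the implementation of any of the cited works: the class name `GreedySnakeSketch`, the 3x3 neighborhood, the choice of internal energy (squared distance to the midpoint of the two neighboring contour points) and the choice of external energy (negative gradient magnitude) are all hypothetical. All contour points are assumed to lie inside the image.

```java
/** Sketch of one greedy snake iteration based on equation 1.2 (energy terms are assumptions). */
final class GreedySnakeSketch {

    /** Energy of candidate position (x, y) for a point whose contour neighbors are prev and next. */
    static double energy(int x, int y, int[] prev, int[] next,
                         double[][] gradient, double alpha, double beta) {
        // Assumed internal energy: squared distance to the midpoint of the two neighbors.
        double mx = (prev[0] + next[0]) / 2.0;
        double my = (prev[1] + next[1]) / 2.0;
        double eInt = (x - mx) * (x - mx) + (y - my) * (y - my);
        // Assumed external energy: negative gradient magnitude, so strong edges have low energy.
        double eExt = -gradient[y][x];
        return alpha * eInt + beta * eExt;
    }

    /** Moves each point {x, y} of a closed snake to the lowest-energy pixel in its 3x3 neighborhood. */
    static int[][] step(int[][] pts, double[][] gradient, double alpha, double beta) {
        int n = pts.length;
        int h = gradient.length;
        int w = gradient[0].length;
        int[][] moved = new int[n][];
        for (int i = 0; i < n; i++) {
            int[] prev = pts[(i + n - 1) % n]; // closed contour: indices wrap around
            int[] next = pts[(i + 1) % n];
            int bestX = pts[i][0];
            int bestY = pts[i][1];
            // Start from the current position so that a point only moves to a strictly better pixel.
            double best = energy(bestX, bestY, prev, next, gradient, alpha, beta);
            for (int dy = -1; dy <= 1; dy++) {
                for (int dx = -1; dx <= 1; dx++) {
                    int x = pts[i][0] + dx;
                    int y = pts[i][1] + dy;
                    if (x < 0 || y < 0 || x >= w || y >= h) continue; // skip pixels outside the image
                    double e = energy(x, y, prev, next, gradient, alpha, beta);
                    if (e < best) { best = e; bestX = x; bestY = y; }
                }
            }
            moved[i] = new int[] {bestX, bestY};
        }
        return moved;
    }
}
```

Calling `step` repeatedly until no point moves gives the iterative behavior described in the text. The sketch also shows why initialization matters: each point only sees its direct neighborhood, so it can settle on any nearby local minimum.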


Snakes for Feature Tracking<br />


The work of Terzopoulos and Waters [1993] describes a hybrid method in which both shape models and snakes take part in the tracking process. Face models are set up, which are then tracked by snakes. The approach incorporates many complex procedures, described by the authors as follows:

“An approach to the analysis of dynamic facial images for the purposes<br />

of estimating and resynthesizing dynamic facial expressions is presented.<br />

The approach exploits a sophisticated generative model of the human face<br />

originally developed for realistic facial animation. The face model which<br />

may be simulated and rendered at interactive rates on a graphics work-<br />

station, incorporates a physics-based synthetic facial tissue and a set of<br />

anatomically motivated facial muscle actuators. The estimation of dynam-<br />

ical facial muscle contractions from video sequences of expressive human<br />

faces is considered. An estimation technique that uses deformable contour<br />

models (snakes) to track the nonrigid motions of facial features in video<br />

images is developed. The technique estimates muscle actuator controls<br />

with sufficient accuracy to permit the face model to resynthesize transient<br />

expressions.”<br />

Figure 1.8 illustrates how snakes are used in this work.<br />

(a) (b)<br />

Figure 1.8.: Snakes and fiducial points used for muscle contraction estimation: neutral<br />

expression (a) and surprise expression (b)<br />


Evaluation<br />


Snakes are mostly used in combination with other methods, as they require initialization close to the feature of interest. A big disadvantage of the snake algorithm is that it is easily misled if the edge is discontinuous. Xie and Mirmehdi [2003] call this characteristic a weak edge:

“Despite their significant advantages, geometric snakes only use local in-<br />

formation and suffer from sensitivity to local minima. Hence, they are<br />

attracted to noisy pixels and also fail to recognize weaker edges for lack<br />

of a better global view of the image. The constant flow term can speed<br />

up convergence and push the snake into concavities easily when gradient<br />

values at object boundaries are large. But when the object boundary is<br />

indistinct or has gaps, it can also force the snake to pass through the<br />

boundary.”<br />

They developed an improved algorithm, called RAGS, that is able to overcome this problem. It works with an “extra diffused region force which delivers useful global information about the object boundary and helps prevent the snake from stepping through” [Xie and Mirmehdi, 2003]. Figure 1.9 shows the improvement achieved with RAGS.

(a) (b)<br />

Figure 1.9.: Weak-edge leakage. A regular snake leaks out of a weak edge (a); RAGS<br />

snake converges properly using its extra region force (b).<br />

Snakes have great potential to work well in a tracking environment. However, the weak-edge leakage problem and the complexity of the algorithm argue against the use of snakes.


1.3.3. Image Moments<br />

Definitions and Properties<br />


In order to describe its basic position, size, and orientation, a binary or grayscale image object can be approximated by a best-fitting ellipse. This ellipse is defined by the centroid, the major and minor axes, and the angle between the major axis and the x-axis. These values are calculated using image moment functions. Figure 1.10 shows example moment calculations for a binary image object (the black pixels in the illustration). The semi-axes a and b, the angle θ, and the resulting ellipse are illustrated in the image. The following paragraphs derive and explain the functions necessary for the calculation of the best-fitting ellipse.

m00 = 5<br />

m10 = 15, m01 = 15<br />

m20 = 49, m02 = 47, m11 = 43<br />

c = (3, 3)<br />

θ = 31.7°, a = 1.84, b = 0.70

Figure 1.10.: Example for moment calculations and shape representation.<br />

The image ellipse is represented by the semi-major axis a, the semi-minor<br />

axis b and the orientation angle θ.<br />

General Moment Definition A grayscale image can be seen as a two-dimensional<br />

density distribution function, written in the form of f(x, y), where the function value<br />

represents the intensity of a pixel at the position (x, y). A general definition of two-<br />

dimensional (p + q) order moments is then given by the following equation:<br />

\[ \Phi_{pq} = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} \Psi_{pq}(x, y)\, f(x, y)\, dx\, dy, \qquad p, q = 0, 1, 2, 3, \ldots \tag{1.3} \]

where Ψpq is a continuous function of (x, y), known as the moment weighting kernel<br />

or the basis set. The indices p, q usually denote the degrees of the coordinates (x, y),<br />

as defined inside the function Ψ. For example, a zeroth order moment is given by<br />


p = 0 and q = 0. Applied to an image, the intensity function f(x, y) is bounded and non-zero only within a finite region, and therefore the integrals in equation 1.3 are finite. In consequence, the general two-dimensional moment function can also be written in the form

\[ \Phi_{pq} = \iint_{\zeta} \Psi_{pq}(x, y)\, f(x, y)\, dx\, dy, \qquad p, q = 0, 1, 2, 3, \ldots \tag{1.4} \]

where $\zeta$ represents the image region, that is, the set of foreground pixels in the image. Detailed moment function descriptions can be found in the book “Moment Functions in Image Analysis” [Mukundan and Ramakrishnan, 1998].

Geometric Moments “Geometric moments are the simplest among moment functions, with the kernel function defined as a product of the pixel coordinates” [Mukundan and Ramakrishnan, 1998, p. 9]. Compared with moments based on more complex weighting kernels, geometric moments are easy to compute and implement. They are also called Cartesian moments, or regular moments. Equation 1.5 shows the two-dimensional geometric moment function, referred to as $m_{pq}$.

\[ m_{pq} = \iint_{\zeta} x^p y^q f(x, y)\, dx\, dy, \qquad p, q = 0, 1, 2, 3, \ldots \tag{1.5} \]

In this equation, the basis set is defined as $x^p y^q$ (compare to equation 1.3).

As the number of pixels in the image region is discrete and finite, the integral can be replaced by a summation, which is easier to compute. The equation can then be written as

\[ m_{pq} = \sum_{A} x^p y^q f(x, y), \qquad p, q = 0, 1, 2, 3, \ldots \tag{1.6} \]

where the sum runs over all $A$ pixels in the image region.
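The summation in equation 1.6 translates almost directly into code. The following Java sketch is an illustration only; the class name `GeometricMoments` and the array layout `f[y][x]` are assumptions made for this example.

```java
/** Sketch of the discrete geometric moment of equation 1.6. */
final class GeometricMoments {

    /** Returns m_pq for an image stored as f[y][x]; background pixels are assumed to be 0. */
    static double moment(double[][] f, int p, int q) {
        double sum = 0.0;
        for (int y = 0; y < f.length; y++) {
            for (int x = 0; x < f[y].length; x++) {
                if (f[y][x] != 0.0) {
                    // Math.pow(x, 0) is 1 for every x, so m_00 sums the plain intensities.
                    sum += Math.pow(x, p) * Math.pow(y, q) * f[y][x];
                }
            }
        }
        return sum;
    }
}
```

As a check, a binary image with foreground pixels at (2,3), (2,4), (3,3), (4,2) and (4,3) yields m00 = 5, m10 = 15, m01 = 15, m20 = 49, m02 = 47 and m11 = 43. This pixel layout is a hypothetical one, chosen so that it reproduces the moment values listed in Figure 1.10; whether it matches the layout drawn in the figure is an assumption.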

Moments that are calculated from a binary (or silhouette) image are called silhouette<br />

moments. The pixels of a binary image can only adopt the values 0 and 1. If a pixel<br />

is part of an image region, it is set to 1. If it belongs to the background, its value is<br />

0. For silhouette moments, the image region ζ only contains the pixels with value 1,<br />


and the equation can be written in the form

\[ m_{pq} = \iint_{\zeta} x^p y^q\, dx\, dy, \qquad p, q = 0, 1, 2, 3, \ldots \tag{1.7} \]

Shape Representation Using Moments

A set of low order moments can be used to describe the shape of image regions.<br />

Geometrical properties like the image area, the center of mass and the orientation<br />

can be defined by using moments of zeroth, first and second order. The moment<br />

of zeroth order (m00) represents the total intensity of an image. If the image is<br />

binary, m00 represents the image area, that is the number of foreground pixels. The<br />

intensity centroid can be calculated by combining first order moments m10, m01 with<br />

the moment of order zero. The first order moments “provide the intensity moment<br />

about the y-axis and x-axis of the image” [Mukundan and Ramakrishnan, 1998, p.<br />

12]. For example, m10 on a silhouette image sums up all the x-coordinates of the<br />

image region. The centroid c = (xc, yc) is given by<br />

\[ x_c = \frac{m_{10}}{m_{00}}, \qquad y_c = \frac{m_{01}}{m_{00}}. \tag{1.8} \]

For a silhouette image, c represents the geometrical center of the image region, also called the center of mass.

Central moments shift the reference system to the centroid to make the moment calculations independent of the position of the image region. They are defined as

\[ \mu_{pq} = \iint_{\zeta} (x - x_c)^p (y - y_c)^q f(x, y)\, dx\, dy, \qquad p, q = 0, 1, 2, 3, \ldots \tag{1.9} \]

As the image region remains unchanged by this shift, and the coordinate offsets on either side of the centroid cancel each other, we have

\[ \mu_{00} = m_{00}; \qquad \mu_{10} = \mu_{01} = 0. \tag{1.10} \]
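Equation 1.10 can be verified in a few lines. The following derivation is a sketch that assumes the discrete summation form of equation 1.6 and the centroid definition of equation 1.8:

```latex
\begin{align*}
\mu_{00} &= \sum_{A} f(x, y) = m_{00}, \\
\mu_{10} &= \sum_{A} (x - x_c)\, f(x, y) = m_{10} - x_c\, m_{00}
          = m_{10} - \frac{m_{10}}{m_{00}}\, m_{00} = 0, \\
\mu_{01} &= \sum_{A} (y - y_c)\, f(x, y) = m_{01} - y_c\, m_{00}
          = m_{01} - \frac{m_{01}}{m_{00}}\, m_{00} = 0.
\end{align*}
```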

According to equation 1.9, the image area is traversed twice for central moment calculations, as the centroid has to be determined before $\mu_{pq}$ can be calculated. The work of Rocha et al. [2002] avoids the double traversal. It uses the following equations for the calculation of the second order central moments:

\[ \mu_{20} = \frac{m_{20}}{m_{00}} - x_c^2 \tag{1.11} \]

\[ \mu_{11} = \frac{m_{11}}{m_{00}} - x_c y_c \tag{1.12} \]

\[ \mu_{02} = \frac{m_{02}}{m_{00}} - y_c^2 \tag{1.13} \]

These expressions equal the second order central moments of equation 1.9 divided by $m_{00}$.
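Equations 1.11 to 1.13 need only the raw moments, which can all be accumulated in a single pass over the image. A minimal Java sketch of this step follows; the class and method names are hypothetical and chosen for this example only.

```java
/** Sketch of equations 1.8 and 1.11 to 1.13: second order central moments from raw moments. */
final class CentralMoments {

    /** Returns {mu20, mu11, mu02}, each normalized by m00 as in equations 1.11 to 1.13. */
    static double[] secondOrder(double m00, double m10, double m01,
                                double m20, double m11, double m02) {
        if (m00 == 0.0) {
            throw new IllegalArgumentException("empty image region");
        }
        double xc = m10 / m00; // centroid, equation 1.8
        double yc = m01 / m00;
        return new double[] {
            m20 / m00 - xc * xc, // equation 1.11
            m11 / m00 - xc * yc, // equation 1.12
            m02 / m00 - yc * yc  // equation 1.13
        };
    }
}
```

For the raw moments listed in Figure 1.10 (m00 = 5, m10 = m01 = 15, m20 = 49, m11 = 43, m02 = 47), `secondOrder` returns approximately 0.8, -0.4 and 0.4.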

The second order moments are “a measure of variance of the image intensity distri-<br />

bution about the origin. The central moments µ20, µ02 give the variances about the<br />

mean (centroid). The covariance is given by µ11.” [Mukundan and Ramakrishnan,<br />

1998, p. 12]. The second order central moments can also be seen as moments of inertia<br />

with the coordinate axes moved to have the intensity centroid as their origin. If these<br />

so-called principal axes of inertia are used as the reference system, they make the<br />

product of inertia component (µ11) vanish. The moments of inertia (µ20, µ02) of the<br />

image about this reference system are then called the principal moments of inertia.<br />

We can use these moments to provide useful descriptors of shape. The work of Morse<br />

[2004] gives a good description of these techniques:<br />

“Suppose that for a binary shape we let the pixels outside the shape have<br />

value 0 and the pixels inside the shape value 1. The moments µ20 and<br />

µ02 are thus the variances of x and y respectively. The moment µ11 is the<br />

covariance between x and y [...] . You can use the covariance to determine<br />

the orientation of the shape.”<br />

The covariance matrix C is

\[ C = \begin{pmatrix} \mu_{20} & \mu_{11} \\ \mu_{11} & \mu_{02} \end{pmatrix} \tag{1.14} \]

By finding the eigenvalues and eigenvectors of C and looking at the ratio of the eigenvalues, we can determine the eccentricity, or elongation, of the shape. The direction of elongation can then be derived from the direction of the eigenvector whose corresponding eigenvalue has the largest absolute value.

The eigenvalues of C are given by

\[ I_1 = \frac{(\mu_{20} + \mu_{02}) + \sqrt{(\mu_{20} - \mu_{02})^2 + 4\mu_{11}^2}}{2}, \qquad I_2 = \frac{(\mu_{20} + \mu_{02}) - \sqrt{(\mu_{20} - \mu_{02})^2 + 4\mu_{11}^2}}{2} \tag{1.15} \]

The semi-major axis a and the semi-minor axis b can then be calculated as

\[ a = \sqrt{3 I_1}; \qquad b = \sqrt{3 I_2}. \tag{1.16} \]

These axis calculations are derived from the paper by Rocha et al. [2002]. Other authors define a and b differently ([Mukundan and Ramakrishnan, 1998, p. 14], [Sonka et al., 1999, p. 258]). During the implementation phase, the formulas stated above produced the most appropriate test results.
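The eigenvalue and axis formulas (equations 1.15 and 1.16) can be sketched in Java as follows. The class name `EllipseAxes` is hypothetical, the square roots follow the reading of equation 1.16 given above, and the clamping of the smaller eigenvalue to zero is an added safeguard against rounding errors rather than part of the cited formulas.

```java
/** Sketch of equations 1.15 and 1.16: eigenvalues of C and the resulting semi-axes. */
final class EllipseAxes {

    /** Returns {I1, I2, a, b} for the second order central moments mu20, mu11, mu02. */
    static double[] fromCentralMoments(double mu20, double mu11, double mu02) {
        double sum = mu20 + mu02;
        double diff = mu20 - mu02;
        double root = Math.sqrt(diff * diff + 4.0 * mu11 * mu11);
        double i1 = (sum + root) / 2.0;
        // Rounding can push the smaller eigenvalue slightly below zero for thin shapes.
        double i2 = Math.max(0.0, (sum - root) / 2.0);
        double a = Math.sqrt(3.0 * i1); // semi-major axis
        double b = Math.sqrt(3.0 * i2); // semi-minor axis
        return new double[] {i1, i2, a, b};
    }
}
```

For three pixels on a diagonal, (0,0), (1,1) and (2,2), all three central moments equal 2/3. The sketch then gives I1 = 4/3, a semi-major axis of about 2 and a semi-minor axis of about 0, which matches the intuition of a one-pixel-wide line.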

The orientation angle θ between one of the principal axes of inertia and the x-axis is given by

\[ \theta = \frac{1}{2} \tan^{-1}\left( \frac{2\mu_{11}}{\mu_{20} - \mu_{02}} \right). \tag{1.17} \]

Image Moments for Feature Tracking

The work of Rocha et al. [2002] introduces a moment-based object tracking method<br />

where the object in the binary image is approximated by best-fitting ellipses. Binary<br />

Space Partitioning (BSP), a method for recursively subdividing a space into convex sets<br />

by hyperplanes, is used for the approximation. Each node of the BSP tree represents<br />

a part of the image object, described by its best-fitting ellipse.<br />

(a) (b) (c)<br />

Figure 1.11.: Object fitting by $2^k$ ellipses at each level. Construction of the BSP tree at level 0 (a), level 1 (b), and the result of level 3 (c) [Rocha et al., 2002].


As illustrated in Figure 1.11, the algorithm starts by calculating the ellipse of the<br />

root node (level 0). Then, the image region is divided along the minor axis, and the<br />

child nodes are created, each incorporating the pixels on one side of the splitting axis.<br />

This subdivision is repeated until a certain predefined tree depth is reached where the<br />

ellipses sufficiently approximate the image shape (see (c) in Figure 1.11).<br />
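One subdivision step of this procedure can be sketched in Java as shown below. This is a simplified illustration, not the code of Rocha et al.: the class name `EllipseSplitSketch` and the pixel representation `{x, y}` are assumptions, the region is treated as binary, and `Math.atan2` is used in place of the plain arctangent of equation 1.17 so that the returned angle always belongs to the major axis and the case µ20 = µ02 causes no division by zero.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of one BSP subdivision step: split a region at the minor axis of its ellipse. */
final class EllipseSplitSketch {

    /** Major-axis angle; atan2 is used here instead of the plain arctangent of equation 1.17. */
    static double orientation(double mu20, double mu11, double mu02) {
        return 0.5 * Math.atan2(2.0 * mu11, mu20 - mu02);
    }

    /** Splits region pixels {x, y} at the minor axis through the centroid into two child sets. */
    static List<List<int[]>> split(List<int[]> pixels) {
        if (pixels.isEmpty()) {
            throw new IllegalArgumentException("empty region");
        }
        double n = pixels.size();
        double sx = 0, sy = 0, sxx = 0, sxy = 0, syy = 0;
        for (int[] p : pixels) { // single pass: raw moments of a binary region
            sx += p[0];
            sy += p[1];
            sxx += (double) p[0] * p[0];
            sxy += (double) p[0] * p[1];
            syy += (double) p[1] * p[1];
        }
        double xc = sx / n;
        double yc = sy / n;
        double theta = orientation(sxx / n - xc * xc, sxy / n - xc * yc, syy / n - yc * yc);
        double ux = Math.cos(theta); // unit vector along the major axis
        double uy = Math.sin(theta);
        List<int[]> first = new ArrayList<>();
        List<int[]> second = new ArrayList<>();
        for (int[] p : pixels) {
            // The sign of the projection onto the major axis tells on which side
            // of the minor axis the pixel lies.
            double projection = (p[0] - xc) * ux + (p[1] - yc) * uy;
            if (projection < 0) first.add(p); else second.add(p);
        }
        List<List<int[]>> children = new ArrayList<>();
        children.add(first);
        children.add(second);
        return children;
    }
}
```

Applying `split` recursively to each child until the desired tree depth is reached yields the $2^k$ regions per level shown in Figure 1.11; each region would then be described by its own best-fitting ellipse.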

The approach by Rocha et al. [2002] was designed for basic shape tracking purposes, with only one simple object in the image region. It has not yet been used or evaluated for more complex tasks, such as facial feature tracking. As stated by the authors, “problems that we did not address in this paper are occlusion, tracking of multiple objects and motion discontinuities. Future work will go in these directions” [Rocha et al., 2002]. We did not find any further papers that base their work on this moment tracking algorithm.

Evaluation<br />

Despite its simple approach, the ellipse approximation method proposed by Rocha et al. [2002] surprises with the quality of the achieved results. The paper is written clearly, and the results are illustrated graphically. The work therefore promises a straightforward implementation. The algorithm has not yet been tested on multiple objects, but with an appropriate region selection on the preprocessed face images, we can simplify the object structures in order to make them applicable to the tracking procedure. The paper does not state processing times, but the design of the algorithm suggests short processing times.


1.4. Commercial Implementations<br />

Overview<br />


The number of facial movement tracking products on the market is still very limited. During our inquiry, we found two products: X-IST FaceTracker by the German company noDNA (http://www.nodna.com/FaceTracker.26.0.html) and VeeAnimator by Vidiator Technology (USA, http://www.vidiator.com/facestation.php). Both vendors keep the technical specification short and do not provide information on which tracking methods and algorithms are used.

The basic operating sequence is the same for the two systems, even though they differ in some key factors. Both take video streams as input data, are able to process and transfer data in realtime, and provide data for proprietary 3D animation software. However, only VeeAnimator can operate without physical markers on the tracked person. The products also differ in the scope of supply, the hardware requirements and the integration with proprietary 3D animation software, where the German product is ahead. Surprisingly, VeeAnimator, which gets by without any physical markers, costs only about a fourth of the price of the X-IST FaceTracker.

X-IST FaceTracker<br />

The X-IST FaceTracker is characterized by a head-mounted video camera, required facial markers and lighting conditions, and support for a range of different 3D animation formats. In contrast to VeeAnimator, X-IST FaceTracker uses its own proprietary headset for video recording (see Figure 1.12). The camera on this headset is near-infrared sensitive, with PAL or NTSC video output and adjustable camera focus. It has a built-in dimmable near-infrared light source. Currently, X-IST works on Microsoft Windows 2000; it will be available for Windows XP Professional in the future.


It works with infrared-reflective markers on the face of the tracked person, which are then recognized by the tracking software. To detect these markers correctly, the studio environment has to be lit with fluorescent (cold) light, without daylight or other warm light sources such as halogen lamps or light bulbs. The product provides drivers for 3D animation programs (Alias Mocap, Famous3D, 3ds Max, FBX), a Portable Control Unit (PCU) and a Software Development Kit (SDK) for 3rd party integration. The package with the headset system and the provided software costs € 6,999, without the required additional hardware and drivers.

Figure 1.12.: The X-IST FaceTracker. With the provided headset (on the left) it is<br />

possible to create facial animations.<br />

VeeAnimator (formerly FaceStation)<br />

VeeAnimator stands out with the ability to track in realtime, without the use of<br />

physical markers and with standard hardware components, which makes the tracking<br />

process simple in execution.<br />

It is “a suite of software applications that allow you to animate heads and faces<br />

in Discreet’s 3ds max or Alias|Wavefront Maya” [vidiator, 2004] that uses a normal<br />

video camera. The camera does not have to be head mounted and, in contrast to<br />

the X-IST FaceTracker, whole head movements are recorded. The software places 22<br />

virtual markers at key positions on the face. The movement of these markers is then<br />

‘tracked’ from each video frame to the next to generate facial animation data. This<br />

data is used to animate a model in the 3D animation package.<br />


At any given video frame, the face is analyzed into a mixture of 16 different facial<br />

expression elements (including smile, frown, lip pucker, vowel sounds, raised eyebrows,<br />

closed eyelids). These facial expression elements can then be used for animation, for<br />

example to drive a set of morph targets with the defined expressions. The software additionally provides audio (speech) analysis tools that can be used to refine lip movements. The big advantage of VeeAnimator is that it does not need any additional hardware or special lighting. Soft diffused illumination on the actor’s face, from whatever light source, is sufficient for the program to work satisfactorily. Figure 1.13 shows a tracking example with this software, taken from the VeeAnimator demonstration video 2 .

(a) (b)<br />

Figure 1.13.: VeeAnimator in action. The tracked feature points (a), the real-life per-<br />

son (right) and its virtual reality equivalent during realtime tracking (b).<br />

VeeAnimator consists of four parts: FaceLifter tracks prerecorded computer video files, FaceTracker does realtime tracking on video streams, FaceDriver is the 3ds Max or Maya plug-in component, and the Avatar Editor creates fully textured head models.

Comparison<br />

Table 1.1 gives a summarizing overview of the two programs. They differ in many points, especially in their prerequisites and the required hardware. The number of supported feature points is a particularly interesting point of comparison.

2 http://www.vidiator.com/demos/facestation/FSDemoFinal_small.wmv<br />

24

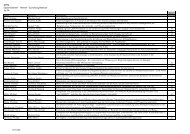

Components<br />

1. State of the Art<br />

X-IST FaceTracker V 4.5 VeeAnimator<br />

Included Software package, headset<br />

system, cables and marker<br />

tape<br />

Software package<br />

Required PCI Framegrabber Card Ordinary digital video<br />

Optional Drivers/Converters for 3rd<br />

Requirements<br />

party animation software;<br />

PCU; SDK<br />

Software Windows 2000<br />

(XP in progress)<br />

camera, ‘Alias Maya 3D’ or<br />

‘Autodesk 3ds Max’<br />

Windows 2000/XP;<br />

Maya 4.5 or 5.0 /<br />

3ds Max 4.26, 4.3, 5.0, 6.0<br />

Clock rate ≥800 MHz prerecorded: ≥700 MHz,<br />

Hardware 20 GB HD, 128 MB RAM,<br />

Specification<br />

2D Graphics Card XGA, 1<br />

PCI Slot<br />

Feature Points up to 36 (typically 15) 22<br />

Physical Markers Yes No<br />

Environment no daylight/warm light;<br />

fluorescent (cold) light only<br />

Tracking Rates 25/50 fps (PAL),<br />

30/60 fps (NTSC)<br />

realtime: ≥2.0 GHz<br />

200 MB HD,<br />

Maya 3D / 3ds Max<br />

requirements<br />

soft defused illumination<br />

30/60 fps (NTSC)<br />

Price e 6,999.00 �1,995.00<br />

Table 1.1.: Comparison of commercial products<br />


1.5. Summary<br />


Many researchers have already developed facial feature tracking algorithms, describing their work at different levels of detail. The examined approaches based on optical flow use the FACS, which suffers from major drawbacks because it lacks detailed spatial information. Methods that work with snakes combine various tracking and location techniques and are hence more complex. Moreover, snakes can suffer from the weak-edge leakage problem, which degrades the result considerably. The investigated image moment technique is straightforward, but may not be applicable to complex tracking tasks. None of the papers states which programming language was used, and source code is not freely available on the Internet. We therefore assume that all works are implemented in a platform-dependent language such as C++, which may have advantages in processing time, but limits portability and ease of use.


2. Algorithms in Consideration<br />

2.1. Overview<br />

In Chapter 1 we summarized different approaches to facial feature tracking. We showed that FACS-based methods have difficulties, as FACS was not originally developed for machine recognition. The moment-based approach turned out to be straightforward and comprehensible. Other algorithms are computationally expensive or do not seem straightforward to implement. Based on their description in the papers and their expected practicability, we selected two tracking procedures for closer examination: active contour models (snakes) and moment-based tracking. Both approaches need manual or automated initialization in the first video frame. Snakes need an initial contour and therefore exact feature points for processing; the moment-based solution works on the complete picture, but needs initialization of feature regions because it can only recognize single objects. The required preprocessing steps for the two methods are also similar. Both work on binary edge images, but will presumably produce better results on grayscale edge images, where the edge intensity varies and weaker edges can therefore also be handled. The two algorithms mainly differ in their implementation complexity and processing time. These factors are investigated in this chapter.

2.2. Testing Method<br />

2.2.1. Testing Tool<br />

To test the practicability and performance of the algorithms under consideration, we used and extended Java code examples that already implement the required functionality. These examples are programmed as plugins for the Java-based image processing tool ImageJ, a public domain program available at http://rsb.info.nih.gov/ij/.


On the homepage, the program is described as follows:

“ImageJ is [...] inspired by NIH Image for the Macintosh. It runs, either as<br />

an online applet or as a downloadable application, on any computer with a<br />

Java 1.1 or later virtual machine. Downloadable distributions are available<br />

for Windows, Mac OS, Mac OS X and Linux. [...]<br />

ImageJ was designed with an open architecture that provides extensibil-<br />

ity via Java plugins. Custom acquisition, analysis and processing plugins<br />

can be developed using ImageJ’s built in editor and Java compiler. User-<br />

written plugins make it possible to solve almost any image processing or<br />

analysis problem.”<br />

At the time of inquiry, ImageJ was available in version 1.33, which had errors in<br />

working with Java 1.5 on Linux 1 and was therefore used with Java 1.4.2. The recent<br />

ImageJ version 1.34 works fine with Java 1.5.<br />

2.2.2. Input Data<br />

For the following tests we used a binary edge image of a human face. For this purpose, we extracted a video frame that shows a face in neutral position in the middle of the image. This gives us clearly identifiable facial features, represented by edges, which eases the selection of feature regions and therefore the correct comparison of the output. Moreover, it brings the test situation close to the conditions of the final Java tracking program. In order to transform the video frame into the correct format, we converted the color image into a grayscale image and processed it with a Canny edge detector.
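The two preprocessing steps can be illustrated with a short Java sketch. It is deliberately simplified: the grayscale conversion uses the common luma weights 0.299, 0.587 and 0.114, which are an assumption about the conversion, and a thresholded Sobel gradient stands in for the Canny detector, leaving out Canny's smoothing, non-maximum suppression and hysteresis steps. The class name `EdgePreprocessSketch` and the packed `0xRRGGBB` pixel layout are hypothetical.

```java
/** Sketch of the preprocessing: color to grayscale, then a simple binary edge image. */
final class EdgePreprocessSketch {

    /** Converts packed 0xRRGGBB pixels, stored as rgb[y][x], to gray levels 0..255. */
    static int[][] toGray(int[][] rgb) {
        int[][] gray = new int[rgb.length][];
        for (int y = 0; y < rgb.length; y++) {
            gray[y] = new int[rgb[y].length];
            for (int x = 0; x < rgb[y].length; x++) {
                int r = (rgb[y][x] >> 16) & 0xFF;
                int g = (rgb[y][x] >> 8) & 0xFF;
                int b = rgb[y][x] & 0xFF;
                gray[y][x] = (int) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
            }
        }
        return gray;
    }

    /** Marks a pixel as edge (1) if its Sobel gradient magnitude exceeds the threshold. */
    static int[][] sobelEdges(int[][] gray, double threshold) {
        int h = gray.length;
        int w = gray[0].length;
        int[][] edges = new int[h][w]; // border pixels stay 0
        for (int y = 1; y < h - 1; y++) {
            for (int x = 1; x < w - 1; x++) {
                int gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1])
                       - (gray[y - 1][x - 1] + 2 * gray[y][x - 1] + gray[y + 1][x - 1]);
                int gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1])
                       - (gray[y - 1][x - 1] + 2 * gray[y - 1][x] + gray[y - 1][x + 1]);
                if (Math.hypot(gx, gy) > threshold) {
                    edges[y][x] = 1;
                }
            }
        }
        return edges;
    }
}
```

Keeping the gradient magnitude instead of the thresholded value would give a grayscale edge image in which the edge intensity varies.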

In the following sections we describe the results of the ImageJ feature tracking<br />

plugins.<br />

1 java.lang.NullPointerException is thrown during image window initialization.<br />


2.3. Testing Snake Algorithms<br />


We have found two ImageJ plugins that implement Snake algorithms, which both<br />

work on grayscale images: Jacob’s SplineSnake implementation, and the snake plugin<br />

by Boudier.<br />

SplineSnake The SplineSnake implementation of Jacob et al. [2004] allows the user to select any required image region by drawing a path onto the source image. Points on this path, which are spaced at a preset distance, are called knots and serve as the initialization for the snake algorithm. Additionally, the user can specify constraint knots that the final snake has to pass through. All adjustable parameters are described at http://ip.beckman.uiuc.edu/Software/SplineSnake/usage.html; the values in Table 2.1 are used directly by the snake algorithm:

| Parameter | Default |
|---|---|
| Image energy: proper linear combination of gradient and region energies can result in better convergence. The right combination depends on the image. | “100% Region” |
| Maximum number of iterations. | 2000 |
| Size of one step during optimization. | 2.0 |
| Accuracy to which the snake is optimized. | 0.01 |
| Smoothing radius of the image smoothing procedure that is computed before running the snake algorithm. | 1.5 |
| Spring weight: specifies how the constraint knots are weighted. | 0.75 |

Table 2.1.: SplineSnake parameters.

For testing, we drew a nearly rectangular path around the mouth, with a knot distance of 5 pixels. During the testing process, we varied the step size and the number of iterations. Satisfactory results were obtained with a step size of 10 and 200 iterations. With 50 iterations (as in panels (b) and (c) of Figure 2.1), the snake did not approximate the mouth contour closely enough. A step size of more than 10 did not improve the result. Results are illustrated in Figure 2.1.


Figure 2.1.: Overview of SplineSnake results. The initial selection (a), SplineSnake with
step size 1.0 and 50 iterations (b), step size 10.0 and 50 iterations (c), and step size
10.0 and 200 iterations (d).

SplineSnake cannot ignore small sources of interference during processing. A tracking
example with distracting pixels is illustrated in Figure 2.2.

Figure 2.2.: SplineSnake interference. The final snake (the inner red line) is not able to
ignore single interfering pixels on the right side of the upper lip contour.

The plugin delivers information about the processing time and the resulting snake knots.
Table 2.2 shows the results of the SplineSnake test cycles. For these results, we tested
with different manually drawn mouth selections, 200 snake iterations and a step size of
10.0. All other values were left at their defaults. Over 20 cycles, the average processing
time was 2.42 seconds, with an average of 26.3 resulting knots and 4.35 curve-describing
samples per knot.


For a tracking program that has to work close to real time, these tracking times are not
acceptable. However, the tracking times of subsequent video frames could be reduced by
initializing the snake with the parameters of the preceding frame. The initial snake would
then be close to the final snake, and therefore fewer cycles (presumably < 10) would have
to be processed.

no.    knots   samples/knot   time (s)
 1     29      4              2.280
 2     27      4              2.587
 3     29      4              3.846
 4     25      4              2.674
 5     32      3              4.133
 6     27      5              2.844
 7     24      5              3.684
 8     17      6              0.616
 9     23      5              1.353
10     27      4              2.207
11     25      4              1.579
12     25      5              0.849
13     25      3              2.027
14     27      5              2.051
15     25      4              1.543
16     25      4              2.662
17     22      4              1.233
18     35      5              4.131
19     29      4              3.263
20     28      5              2.833
mean   26.3    4.35           2.420

Table 2.2.: SplineSnake: Results


Boudier Snake Plugin. The second ImageJ plugin for snakes was written by Thomas Boudier. It
is available at http://www.snv.jussieu.fr/~wboudier/softs/snake.html. For testing, we used
the default parameters listed in Table 2.3.

Parameter               Value
Gradient threshold      20
Regularization          0.10
Number of iterations    200
Step result show        5
Alpha-Canny-Deriche     1.00

Table 2.3.: Boudier snake parameters

For comparison with the SplineSnake plugin, we chose a rectangular initial selection. As
illustrated in Figure 2.3, the success of the snake procedure depends greatly on this
initial selection. During testing, changing the selection by a single pixel resulted in
extreme outgrowths of the resulting snake.

Figure 2.3.: Overview of snake results. 1: a selection of (231, 208, 59, 16) (a) delivers
good results (b). 2: an enlargement of the region width by 1 pixel (a) has significant
negative effects (b).

Table 2.4 shows the test results obtained with this snake plugin. The values specify the
rectangular selection on the edge image around the mouth region (position on the x- and
y-axes, width and height). A check mark in the last column indicates that the result is
satisfactory (that is, the snake bounds the mouth region); a cross indicates that the snake
leaked out over a large part of the displayed face.

As the results show, the plugin delivers a successful result in only 3 of the 15 test
cases. In the last row we took the average of all selections as snake initialization
values, which also led to a negative outcome. If the region selection is closer to the
mouth contour, for example with an elliptical selection, the algorithm works more reliably.

no.    x     y     w    h    result
 1     236   215   54    9   ✗
 2     236   210   54   16   ✗
 3     235   210   55   15   ✗
 4     234   207   56   20   ✗
 5     233   211   57   17   ✗
 6     233   211   54   18   ✗
 7     233   208   60   18   ✗
 8     233   208   58   17   ✗
 9     232   209   61   21   ✗
10     232   209   61   19   ✗
11     232   209   60   17   ✓
12     231   208   64   21   ✗
13     231   208   62   19   ✓
14     231   208   60   16   ✗
15     231   208   59   16   ✓
avg.   233   209   58   17   ✗

Table 2.4.: Snake: Results
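The averaged selection in the last row of Table 2.4 can be recomputed from the 15 test selections, as the following sketch illustrates. The class name `SelectionStats` and the rounding to the nearest integer are assumptions; the text does not state how the averages were rounded.

```java
/** Hypothetical helper that recomputes the summary row of Table 2.4. */
final class SelectionStats {

    // Each row: x, y, width, height, and 1 for a check mark (snake bounds the mouth), else 0.
    static final int[][] ROWS = {
        {236, 215, 54,  9, 0}, {236, 210, 54, 16, 0}, {235, 210, 55, 15, 0},
        {234, 207, 56, 20, 0}, {233, 211, 57, 17, 0}, {233, 211, 54, 18, 0},
        {233, 208, 60, 18, 0}, {233, 208, 58, 17, 0}, {232, 209, 61, 21, 0},
        {232, 209, 61, 19, 0}, {232, 209, 60, 17, 1}, {231, 208, 64, 21, 0},
        {231, 208, 62, 19, 1}, {231, 208, 60, 16, 0}, {231, 208, 59, 16, 1},
    };

    /** Returns the mean x, y, width and height, rounded to the nearest integer (assumed). */
    static int[] averageSelection() {
        int[] avg = new int[4];
        for (int col = 0; col < 4; col++) {
            double sum = 0;
            for (int[] row : ROWS) {
                sum += row[col];
            }
            avg[col] = (int) Math.round(sum / ROWS.length);
        }
        return avg;
    }

    /** Counts the selections for which the snake bounded the mouth region. */
    static int successCount() {
        int count = 0;
        for (int[] row : ROWS) {
            count += row[4];
        }
        return count;
    }
}
```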


2.4. Testing Image Moments<br />


We found an ImageJ implementation of moment calculations at
http://rsb.info.nih.gov/ij/plugins/moments.html, which was apparently integrated into
ImageJ version 1.34.² The plugin calculates image moments up to the 4th order from
rectangular image selections, and calculates the elongation and orientation of objects. The
implementation allows a mapping of image intensity values before the moments are
calculated. For that purpose, it uses the equation

$$ p_{i,j} = f \cdot (p_{i,j} - c) \tag{2.1} $$

where $p_{i,j}$ is the intensity value of the pixel. Factor $f$ and cutoff $c$ can be
specified manually in the user interface. This mapping allows the user to specify a
background color other than black (by setting the cutoff accordingly) and to process images
with a different color range (by changing the factor). The plugin provides tabular output of
the moment calculations; the results are not drawn into the image. Nevertheless, the
calculations and the provided source code give a good overview of how the moment
calculations work. The implementation is straightforward and easy to follow. It executes the
following steps:

Step 1: Compute moments of order 0 and 1.
Step 2: Compute coordinates of the centroid.
Step 3: Compute moments of orders 2, 3, and 4.
Step 4: Normalize 2nd moments and compute the variance around the centroid.
Step 5: Normalize 3rd and 4th order moments and compute the skewness (symmetry) and
        kurtosis (peakedness) around the centroid.
Step 6: Compute orientation and eccentricity.

Source: Awcock [1995, pp. 162–165]
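Steps 1 to 4 can be sketched as follows, restricted to moments of order 2. This is an illustration under stated assumptions, not the plugin's source code: the class name `RegionMoments`, the row-major `int[]` image layout, and the absence of clamping after the intensity mapping are all assumptions. The mapping of Equation 2.1 is applied on the fly in both passes.

```java
/** Hypothetical two-pass computation of moments up to order 2 for a rectangular selection. */
final class RegionMoments {
    final double m00;         // zeroth moment (total mapped intensity)
    final double xc, yc;      // centroid coordinates
    final double varX, varY;  // normalized central moments mu20/m00 and mu02/m00
    final double covXY;       // normalized mixed central moment mu11/m00

    private RegionMoments(double m00, double xc, double yc,
                          double varX, double varY, double covXY) {
        this.m00 = m00; this.xc = xc; this.yc = yc;
        this.varX = varX; this.varY = varY; this.covXY = covXY;
    }

    /**
     * @param pixels row-major intensity values of the whole image
     * @param width  image width in pixels
     * @param rx,ry,rw,rh  rectangular selection (position, width, height)
     * @param f,c    factor and cutoff of the intensity mapping p = f * (p - c)
     */
    static RegionMoments compute(int[] pixels, int width,
                                 int rx, int ry, int rw, int rh,
                                 double f, double c) {
        // Pass 1 (steps 1 and 2): moments of order 0 and 1, then the centroid.
        double m00 = 0, m10 = 0, m01 = 0;
        for (int y = ry; y < ry + rh; y++) {
            for (int x = rx; x < rx + rw; x++) {
                double p = f * (pixels[y * width + x] - c);
                m00 += p;
                m10 += x * p;
                m01 += y * p;
            }
        }
        if (m00 == 0) {
            throw new IllegalArgumentException("selection has zero total intensity");
        }
        double xc = m10 / m00;
        double yc = m01 / m00;

        // Pass 2 (steps 3 and 4): central moments of order 2, normalized by m00.
        double mu20 = 0, mu02 = 0, mu11 = 0;
        for (int y = ry; y < ry + rh; y++) {
            for (int x = rx; x < rx + rw; x++) {
                double p = f * (pixels[y * width + x] - c);
                double dx = x - xc;
                double dy = y - yc;
                mu20 += dx * dx * p;
                mu02 += dy * dy * p;
                mu11 += dx * dy * p;
            }
        }
        return new RegionMoments(m00, xc, yc, mu20 / m00, mu02 / m00, mu11 / m00);
    }
}
```

For a binary edge image with a black background, a factor of 1 and a cutoff of 0 leave the intensities unchanged, so only edge pixels contribute to the sums.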

In the case of a moment-based facial feature tracker, moment calculations above the 2nd
order are not necessary. Steps 5 and 6 can therefore be left out. Note that the image pixels
have to be traversed twice (in step 1 and step 3), which doubles the work for a region with
n foreground pixels; the complexity remains g(n) = O(n).

2 Measurements in ImageJ (‘Analyze→Set Measurements...’ and ‘Analyze→Measure’)<br />


For testing purposes, we changed the plugin code so that it displays processing time
information. The measured calculation times for a 60×20 pixel selection range from 10 ms
down to less than 1 ms. The more often the plugin is executed, the less calculation time is
needed; JVM warm-up effects (for example, just-in-time compilation) may be responsible for
that behavior. These times cannot be compared one-to-one with the data produced by the snake
code. The plugin also calculates moments of higher orders, which are not necessary for a
feature tracker. Still, this does not influence the complexity of the algorithm, as no
additional traversal of the image pixels is necessary. The complexity will change with the
implementation of the BSP tree structure, as the moment information has to be calculated for
every tree node. It is then g(n) = O(log(d) ∗ n) for a tree depth of d. Even so, the
processing times are far shorter than those of the snake plugin, and we assume that this
will also be the case if the Java tracker is based on moments.
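A measurement of this kind can be sketched as follows. The class name `WarmupTiming` and the placeholder workload are assumptions for illustration; the changes made to the plugin code are not reproduced here. Recording every run separately makes the decreasing times of repeated executions visible.

```java
/** Hypothetical timing helper that records the duration of each repeated run. */
final class WarmupTiming {

    /** Runs the task the given number of times and returns each duration in nanoseconds. */
    static long[] time(Runnable task, int runs) {
        long[] nanos = new long[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            task.run();
            nanos[i] = System.nanoTime() - start;
        }
        return nanos;
    }

    public static void main(String[] args) {
        // Placeholder workload: summing the intensities of a 60x20 pixel selection.
        final int[] region = new int[60 * 20];
        java.util.Arrays.fill(region, 255);
        final long[] sink = new long[1]; // keeps the computed sum observable

        long[] nanos = time(() -> {
            long sum = 0;
            for (int p : region) {
                sum += p;
            }
            sink[0] = sum;
        }, 10);

        for (int i = 0; i < nanos.length; i++) {
            System.out.println("run " + (i + 1) + ": " + (nanos[i] / 1000) + " us");
        }
    }
}
```

The first runs typically take longer than later ones, so a comparison between algorithms should state which runs were measured.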

2.5. Summary<br />

We have shown that the performance and reliability of snake algorithms depend strongly on
the adjustment of their parameters and the initialization of the snake knots. The accurate
selection of the image area and snake parameters was problematic and challenging during the
test phase. Small changes to the region selection caused inexplicably large differences in
the processing results. Calculation times of about 2.5 seconds per execution seem too high
for an application that aims to work close to real time. The time could be reduced in
subsequent frames by initializing the snake knots with the knots of the previous frame; the
major execution time would then only accrue in the first video frame. In contrast, moment
calculations proved to be straightforward, fast and comprehensible. In addition to the
results of our tests, the paper about tracking with moment calculations [Rocha et al., 2002]
describes an exact course of action, which gives a clear path on how to proceed and
therefore eases future work. For these reasons we decided to implement a moment-based Java
feature tracker.

The next chapter describes the prerequisites and preparations that are necessary for a
moment-based tracking algorithm to work satisfactorily.


3. Input Data and Its Preparation<br />

3.1. Overview<br />

In order to be applicable to the selected tracking algorithm, the input video has to<br />

be read and transformed into a proper format and quality. In this chapter, we divide<br />