Automated Marketing Research Using Online Customer Reviews

Automated Marketing Research Using Online Customer Reviews

Automated Marketing Research Using Online Customer Reviews

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

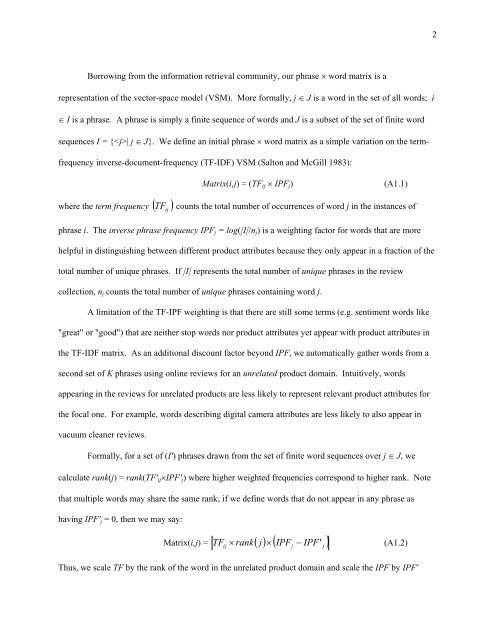

Borrowing from the information retrieval community, our phrase × word matrix is a representation of the vector-space model (VSM). More formally, $j \in J$ is a word in the set of all words and $i \in I$ is a phrase. A phrase is simply a finite sequence of words, and $I$ is a subset of the set of finite word sequences, $I \subseteq \{\langle j_1, \ldots, j_n \rangle \mid n \ge 1,\ j_k \in J\}$. We define an initial phrase × word matrix as a simple variation on the term-frequency inverse-document-frequency (TF-IDF) VSM (Salton and McGill 1983):

$$\mathrm{Matrix}(i,j) = \mathrm{TF}_{ij} \times \mathrm{IPF}_j \qquad \text{(A1.1)}$$

where the term frequency $\mathrm{TF}_{ij}$ counts the total number of occurrences of word $j$ in the instances of phrase $i$. The inverse phrase frequency $\mathrm{IPF}_j = \log(|I|/n_j)$, where $|I|$ is the total number of unique phrases in the review collection and $n_j$ is the number of unique phrases containing word $j$, gives more weight to words that help distinguish between product attributes because they appear in only a fraction of the unique phrases.
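A minimal sketch of Eq. (A1.1) may make the counting concrete. It assumes each phrase instance is a tuple of words, that repeated tuples are repeated instances of the same unique phrase, and that "log" is the natural logarithm (the base only rescales the weights). The function name `tf_ipf_matrix` and the sparse dict representation are illustrative choices, not the authors' implementation.

```python
import math
from collections import Counter


def tf_ipf_matrix(phrase_instances):
    """Hypothetical helper: phrase x word TF-IPF weights per Eq. (A1.1).

    phrase_instances: phrases as they occur in the review collection, each a
    tuple of words; a phrase that occurs k times appears k times in the list.
    Returns a sparse dict mapping (phrase, word) -> TF_ij * IPF_j.
    """
    # Unique phrases form the set I; keep how many instances each one has.
    instance_counts = Counter(phrase_instances)
    num_unique = len(instance_counts)

    # n_j: number of unique phrases that contain word j at least once.
    n = Counter()
    for phrase in instance_counts:
        n.update(set(phrase))

    # IPF_j = log(|I| / n_j); a word found in every unique phrase gets 0.
    ipf = {word: math.log(num_unique / count) for word, count in n.items()}

    matrix = {}
    for phrase, k in instance_counts.items():
        # TF_ij: occurrences of word j summed over all k instances of phrase i.
        for word, c in Counter(phrase).items():
            matrix[(phrase, word)] = c * k * ipf[word]
    return matrix


m = tf_ipf_matrix([("battery", "life"), ("battery", "life"),
                   ("great", "zoom"), ("great", "battery")])
print(m[(("battery", "life"), "life")])  # 2 * log(3 / 1)
```

Storing only nonzero cells in a dict mirrors how sparse the phrase × word matrix is in practice; a scipy sparse matrix would be a natural swap for large review collections.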

A limitation of the TF-IPF weighting is that some terms that are neither stop words nor product attributes (e.g., sentiment words like "great" or "good") still appear alongside product attributes in the TF-IPF matrix. As an additional discount factor beyond IPF, we automatically gather words from a second set of K phrases drawn from online reviews for an unrelated product domain. Intuitively, words appearing in reviews for unrelated products are less likely to represent relevant attributes of the focal product. For example, words describing digital camera attributes are unlikely to also appear in vacuum cleaner reviews, whereas generic sentiment words such as "great" appear in both.

Formally, for a set $I'$ of phrases drawn from the set of finite word sequences over $j \in J$, we calculate $\mathrm{rank}(j) = \mathrm{rank}(\mathrm{TF}'_{ij} \times \mathrm{IPF}'_j)$, where higher weighted frequencies correspond to higher rank. Note that multiple words may share the same rank. If we define words that do not appear in any phrase as having $\mathrm{IPF}'_j = 0$, then we may say:

$$\mathrm{Matrix}(i,j) = \mathrm{TF}_{ij} \times \mathrm{rank}(j) \times \mathrm{IPF}_j \times \mathrm{IPF}'_j \qquad \text{(A1.2)}$$

Thus, we scale $\mathrm{TF}_{ij}$ by the rank of word $j$ in the unrelated product domain and scale $\mathrm{IPF}_j$ by $\mathrm{IPF}'_j$.
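The two unrelated-domain quantities that enter Eq. (A1.2), $\mathrm{rank}(j)$ and $\mathrm{IPF}'_j$, can be sketched as below. Several details are assumptions the text does not pin down: $\mathrm{TF}'_j$ is taken as the total count of word $j$ over all unrelated phrase instances; rank 1 goes to the highest weighted frequency (so that scaling by rank shrinks words common in the unrelated domain); ties share a rank (dense ranking); and "log" is the natural logarithm. The function name `unrelated_domain_factors` is hypothetical.

```python
import math
from collections import Counter


def unrelated_domain_factors(focal_vocabulary, unrelated_instances):
    """Hypothetical helper: rank(j) and IPF'_j from an unrelated domain.

    focal_vocabulary: words j of the focal product's phrase x word matrix.
    unrelated_instances: phrase instances (tuples of words) from reviews of
    an unrelated product; repeated tuples are repeated instances.
    """
    instance_counts = Counter(unrelated_instances)
    num_unique = len(instance_counts)

    # n'_j: number of unique unrelated phrases containing word j.
    n_prime = Counter()
    for phrase in instance_counts:
        n_prime.update(set(phrase))

    # TF'_j (assumed aggregation): total occurrences over all instances.
    tf_prime = Counter()
    for phrase, k in instance_counts.items():
        for word in phrase:
            tf_prime[word] += k

    ipf_prime, score = {}, {}
    for word in focal_vocabulary:
        if n_prime[word] == 0:
            ipf_prime[word] = 0.0  # absent words: IPF'_j = 0, as in the text
        else:
            ipf_prime[word] = math.log(num_unique / n_prime[word])
        score[word] = tf_prime[word] * ipf_prime[word]

    # Dense ranking: rank 1 = highest weighted frequency; ties share a rank.
    distinct = sorted(set(score.values()), reverse=True)
    rank_of_score = {s: r for r, s in enumerate(distinct, start=1)}
    rank = {word: rank_of_score[s] for word, s in score.items()}
    return rank, ipf_prime


rank, ipf_prime = unrelated_domain_factors(
    ["battery", "zoom", "great", "lens"],
    [("great", "suction"), ("great", "suction"),
     ("good", "hose"), ("great", "hose")],
)
print(rank)  # "great" ranks first; absent camera words tie at the bottom
```

Because every focal word missing from the unrelated reviews gets a weighted frequency of zero, all such words share the last rank, which is the tie the text anticipates. These values would then be combined with $\mathrm{TF}_{ij}$ and $\mathrm{IPF}_j$ from Eq. (A1.1).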
