al - Adobe Acrobat Engineering

Proceedings of the IEEE Visualization '94 Conference

Copyright © 1994, Institute of Electrical and Electronics Engineers. All rights reserved. No part of this book may be reproduced in any form, nor may it be stored in a retrieval system or transmitted in any form without written permission from the publisher.

Personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE. For information on obtaining permission, send a blank email message to info.pub.permission@ieee.org.

By choosing to view this document, you agree to all provisions of the copyright laws protecting it.

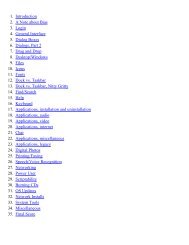

Contents

Preface
Reviewers
Conference Committee
Program Committee

Honorary Chair Address

Interactive Visualization via 3D User Interfaces
A. van Dam

Keynote Panel

Introduction: Visualization in the Information Highway
N. Gershon

Information Workspaces for Large Scale Cognition
S.K. Card

A Visualization System on Every Desk — Keeping it Simple
S.F. Roth

The Future of Graphic User Interfaces
B. Shneiderman

Capstone Address

The Crucial Difference between Human and Machine Vision: Focal Attention
B. Julesz

PAPERS

Volume Visualization Systems

Integrated Control of Distributed Volume Visualization Through the World-Wide-Web
C.S. Ang, D.C. Martin, and M.D. Doyle

A Distributed, Parallel, Interactive Volume Rendering Package
J.S. Rowlan, G.E. Lent, N. Gokhale, and S. Bradshaw

VolVis: A Diversified Volume Visualization System
R. Avila, T. He, L. Hong, A. Kaufman, H. Pfister, C. Silva, L. Sobierajski, and S. Wang

Applications

Implicit Modeling of Swept Surfaces and Volumes
W.J. Schroeder, W.E. Lorensen, and S. Linthicum

Visualizing Polycrystalline Orientation Microstructures with Spherical Color Maps
B. Yamrom, J.A. Sutliff, and A.P. Woodfield

Introducing Alpha Shapes for the Analysis of Path Integral Monte Carlo Results
P.J. Moran and M. Wagner

Surfaces

Piecewise-Linear Surface Approximation from Noisy Scattered Samples
M. Margaliot and C. Gotsman

Triangulation and Display of Rational Parametric Surfaces
C.L. Bajaj and A. Royappa

Isosurface Generation by Using Extrema Graphs
T. Itoh and K. Koyamada

Visualization Techniques

Wavelet-Based Volume Morphing
T. He, S. Wang, and A. Kaufman

Progressive Transmission of Scientific Data Using Biorthogonal Wavelet Transform
H. Tao and R.J. Moorhead

An Evaluation of Reconstruction Filters for Volume Rendering
S.R. Marschner and R.J. Lobb

Visualizing Flow with Quaternion Frames
A.J. Hanson and H. Ma

Flow Features and Topology

Feature Detection from Vector Quantities in a Numerically Simulated Hypersonic Flow Field in Combination with Experimental Flow Visualization
H.-G. Pagendarm and B. Walter

3D Visualization of Unsteady 2D Airplane Wake Vortices
K.-L. Ma and Z.C. Zheng

Vortex Tubes in Turbulent Flows: Identification, Representation, Reconstruction
D.C. Banks and B.A. Singer

The Topology of Second-Order Tensor Fields
T. Delmarcelle and L. Hesselink

Visualizing Geometry and Algorithms

GASP - A System for Visualizing Geometric Algorithms
A. Tal and D. Dobkin

Virtual Reality Performance for Virtual Geometry
R.A. Cross and A.J. Hanson

A Library for Visualizing Combinatorial Structures
M.A. Najork and M.H. Brown

Strata-Various: Multi-Layer Visualization of Dynamics in Software System Behavior
D. Kimelman, B. Rosenberg, and T. Roth

Volume Visualization Techniques

Differential Volume Rendering: A Fast Volume Visualization Technique for Flow Animation
H.-W. Shen and C.R. Johnson

Fast Surface Rendering from Raster Data by Voxel Traversal Using Chessboard Distance
M. Šrámek

Parallel Performance Measures for Volume Ray Casting
C.T. Silva and A.E. Kaufman

User Interfaces and Techniques

Spiders: A New User Interface for Rotation and Visualization of N-Dimensional Point Sets
K.L. Duffin and W.A. Barrett

Restorer: A Visualization Technique for Handling Missing Data
R. Twiddy, J. Cavallo, and S.M. Shiri

User Modeling for Adaptive Visualization Systems
G.O. Domik and B. Gutkauf

Flow Visualization Techniques

Streamball Techniques for Flow Visualization
M. Brill, H. Hagen, H.-C. Rodrian, W. Djatschin, and S.V. Klimenko

Volume Rendering Methods for Computational Fluid Dynamics Visualization
D.S. Ebert, R. Yagel, J. Scott, and Y. Kurzion

Visualizing Flow over Curvilinear Grid Surfaces Using Line Integral Convolution
L.K. Forssell

Visualizing 3D Velocity Fields Near Contour Surfaces
N. Max, R. Crawfis, and C. Grant

Flow Visualization Systems

UFAT — A Particle Tracer for Time-Dependent Flow Fields
D.A. Lane

The Design and Implementation of the Cortex Visualization System
D. Banerjee, C. Morley, and W. Smith

An Annotation System for 3D Fluid Flow Visualization
M.M. Loughlin and J.F. Hughes

Surface Extraction

Discretized Marching Cubes
C. Montani, R. Scateni, and R. Scopigno

Approximation of Isosurface in the Marching Cube: Ambiguity Problem
S.V. Matveyev

Nonpolygonal Isosurface Rendering for Large Volume Datasets
J.W. Durkin and J.F. Hughes

Visualization Systems

Mix&Match: A Construction Kit for Visualization
A. Pang and N. Alper

A Lattice Model for Data Display
W.L. Hibbard, C.R. Dyer, and B.E. Paul

An Object Oriented Design for the Visualization of Multi-Variable Data Objects
J.M. Favre and J. Hahn

XmdvTool: Integrating Multiple Methods for Visualizing Multivariate Data
M.O. Ward

CASE STUDIES

Magnetohydrodynamics and Mathematics

Tokamak Plasma Turbulence Visualization
S.E. Parker and R. Samtaney

Visualizing Magnetohydrodynamic Turbulence and Vortex Streets *
A. Roberts

Visualization and Data Analysis in Space and Atmospheric Science
A. Mankofsky, E.P. Szuszczewicz, P. Blanchard, C. Goodrich, D. McNabb, R. Kulkarni, and D. Kamins

Visualization for Boundary Value Problems
G. Domokos and R. Paffenroth

Environment

Severe Rainfall Events in Northwestern Peru: Visualization of Scattered Meteorological Data
L.A. Treinish

Visualization of Mesoscale Flow Features in Ocean Basins
A. Johannsen and R. Moorhead

Integrating Spatial Data Display with Virtual Reconstruction
P. Peterson, B. Hayden, and F.D. Fracchia

Medical Applications

Observing a Volume Rendered Fetus within a Pregnant Patient
A. State, D.T. Chen, C. Tector, A. Brandt, H. Chen, R. Ohbuchi, M. Bajura, and H. Fuchs

Visualization of 3D Ultrasonic Data
G. Sakas, L.-A. Schreyer, and M. Grimm

New Techniques in the Design of Healthcare Facilities
T. Alameldin and M. Shepley

Fire and Brimstone

Visualization of an Electric Power Transmission System
P.M. Mahadev and R.D. Christie

Volume Rendering of Pool Fire Data
H.E. Rushmeier, A. Hamins, and M.-Y. Choi

Visualization of Volcanic Ash Clouds
M. Roth and R. Guritz

* Paper not received in time for publication

PANELS

Challenges and Opportunities in Visualization for NASA’s EOS Mission to Planet Earth
Chair: M. Botts
Panelists: J.D. Dykstra, L.S. Elson, S.J. Goodman, and M. Lee

Visualization in Medicine: VIRTUAL Reality or ACTUAL Reality?
Co-Chairs: C. Roux and J.-L. Coatrieux
Panelists: J.-L. Dillenseger, E.K. Fishman, M. Loew, H.-P. Meinzer, and J.D. Pearlman

Visualization and Geographic Information Systems Integration: What Are the Needs and the Requirements, If Any??
Chair: T.M. Rhyne
Panelists: W. Ivey, L. Knapp, P. Kochevar, and T. Mace

Visualization of Multivariate (Multidimensional) Data and Relations
Chair: A. Inselberg
Panelists: H. Hinterberger, T. Mihalisin, and G. Grinstein

Visualizing Data: Is Virtual Reality the Key?
Chair: L.M. Stone
Panelists: T. Erickson, B.B. Bederson, P. Rothman, and R. Muzzy

Validation, Verification and Evaluation
Chair: S. Uselton
Panelists: G. Dorn, C. Farhat, M. Vannier, K. Esbensen, and A. Globus

Color Plates
Author Index

Integrated Control of Distributed Volume Visualization Through the World-Wide-Web

Cheong S. Ang, M.S.
David C. Martin, M.S.
Michael D. Doyle, Ph.D.

University of California, San Francisco
Library and Center for Knowledge Management
San Francisco, California 94143-0840

The World-Wide-Web (WWW) has created a new paradigm for online information retrieval by providing immediate and ubiquitous access to digital information of any type from data repositories located throughout the world. The web’s development enables not only effective access for the generic user, but also more efficient and timely information exchange among scientists and researchers. We have extended the capabilities of the web to include access to three-dimensional volume data sets with integrated control of a distributed client-server volume visualization system. This paper provides a brief background on the World-Wide-Web, an overview of the extensions necessary to support these new data types, and a description of an implementation of this approach in a WWW-compliant distributed visualization system.

1. Introduction

Advanced scanning devices, such as magnetic resonance imaging (MRI) and computed tomography (CT), have been widely used in the fields of medicine, quality assurance and meteorology [Pommert, Zandt, Hibbard]. The need to visualize the resulting data has given rise to a wide variety of volume visualization techniques, and computer graphics research groups have implemented a number of systems to provide volume visualization (e.g. AVS, ApE, Sunvision Voxel and 3D Viewnix) [Gerleg, Mercurio, VandeWettering]. Previously these systems have depended upon specialized graphics hardware for rendering and significant local secondary storage for the data. The expense of these requirements has limited the ability of researchers to exchange findings. To overcome the barrier of cost, and to provide additional means for researchers to exchange and examine three-dimensional volume data, we have implemented a distributed volume visualization tool for general purpose hardware. We have further integrated that visualization service with the distributed hypermedia [Flanders, Broering, Kiong, Robison, Story] system provided by the World-Wide-Web [Nickerson].

Our distributed volume visualization tool, VIS, utilizes a pool of general purpose workstations to generate three-dimensional representations of volume data. The VIS tool provides integrated load-balancing across any number of heterogeneous UNIX workstations (e.g. SGI, Sun, DEC) [Giertsen], taking advantage of the unused cycles that are generally available in academic and research environments. In addition, VIS supports specialized graphics hardware (e.g. the RealityEngine from Silicon Graphics), when available, for real-time visualization.

Distributing information that includes volume data requires the integration of visualization with a document delivery mechanism. We have integrated VIS and volume data into the WWW, taking advantage of the client-server architecture of WWW and its ability to access hypertext documents stored anywhere on the Internet [Obraczka, Nickerson]. We have enhanced the capabilities of the most popular WWW client, Mosaic [Andreessen] from the National Center for Supercomputing Applications (NCSA), to support volume data and have defined an inter-client protocol for communication between VIS and Mosaic for volume visualization. Other types of interactive applications could be "embedded" within HTML documents as well. Our approach can be generalized to allow the implementation of object linking and embedding over the Internet, similar to the features that OLE 2.0 provides to users of Microsoft Windows on an individual machine.

1.1 The World-Wide-Web

The World-Wide-Web is a combination of a transfer protocol for hypertext documents (HTTP) and a hypertext mark-up language (HTML) [Nickerson]. The basic functionality of HTTP allows a client application to request a wide variety of data objects from a server. Objects are identified by a universal resource locator (URL) [Obraczka] that contains information sufficient to both locate and query a remote server. HTML documents are defined by a document type definition (DTD) of the Standard Generalized Mark-up Language (SGML). These documents are returned to WWW clients and are presented to the user. Users are able to interact with the document presentation, following hyperlinks that lead to other HTML documents or data objects. The client application may also directly support other Internet services, such as FTP, Gopher, and WAIS [Andreessen], or may utilize gateways that convert HTTP protocol requests and return HTML documents. In all interactions, however, the user is presented with a common resulting data format (HTML) and all links are accessible via URLs.
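As a small illustration of how a URL carries both the location of a server and the request to make of it, the following Python sketch splits one into its parts with the standard library. The URL is hypothetical: the host name www.library.ucsf.edu and the file name Embryo.hdf appear later in this paper, but the path is an assumption made for the example, as is the helper name `describe_url`.

```python
from urllib.parse import urlsplit

def describe_url(url):
    """Split a URL into the parts a client needs to locate and query a server."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,   # protocol used to contact the server
        "host": parts.hostname,   # which server to contact
        "path": parts.path,       # which object to request from that server
    }

# Hypothetical URL: the host and file name come from the paper, the path is assumed.
info = describe_url("http://www.library.ucsf.edu/volumes/Embryo.hdf")
print(info)
```

A client would use the scheme to choose a protocol handler (HTTP, FTP, Gopher and so on), the host to open a connection, and the path to name the object it wants.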

1.2 Mosaic

The National Center for Supercomputing Applications (NCSA) has developed one of the most functional and popular World-Wide-Web clients: Mosaic. This client is available via public FTP for the most popular computer interfaces (Motif, Windows and Macintosh). Mosaic interprets a majority of the HTML DTD elements and presents the encoded information with page formatting, type-face specification, image display, fill-in forms, and graphical widgets. In addition, Mosaic provides inherent access to FTP, Gopher, WAIS and other network services [Andreessen].

Figure 1: VIS client/server model. (Diagram labels: the Internet, session manager, visualization servers, data center, high speed local network (1 Gbps).)

1.3 VIS

VIS is a simple but complete volume visualizer. VIS provides arbitrary three-dimensional transformation (e.g. rotation and scaling), specification of six axial clipping planes (which together bound a cuboid), one arbitrary clipping plane, and control of opacity and intensity. VIS interactively transforms the cuboid and texture-maps the volume data onto the transformed geometry. It supports distributed volume rendering [Argrio, Drebin, Kaufman] with run-time selection of computation servers, and isosurface generation (marching cubes) [Lorensen, Levoy] with software Gouraud shading for surface-based model extraction and rendering. It reads NCSA Hierarchical Data Format (HDF) volume data files, and has a graphical interface utility to import volume data stored in other formats.

2. VIS: A Distributed Volume Visualization Tool

VIS is a highly modular distributed visualization tool that follows the principles of client/server architecture (figure 1) and consists of three cooperating processes: VIS, Panel, and VRServer(s). The VIS module handles transformation, texture-mapping, isosurface extraction, and Gouraud shading, and manages load distribution in volume rendering. VIS produces images that are drawn either to its own top-level window (when running stand-alone) or to a shared window system buffer (when running as a cooperative process). The Panel module provides a graphical user interface for all VIS functionality and communicates state changes to VIS. The VRServer processes execute on a heterogeneous pool of general purpose workstations and perform volume rendering at the request of the VIS process. The three modules are integrated as shown in figure 3 when cooperating with another process. A simple output window is displayed when no cooperating process is specified.

2.1 Distributed Volume Rendering

Volume rendering algorithms require a significant amount of computational resources. However, these algorithms are excellent candidates for parallelization. VIS distributes the volume rendering among workstations with a “greedy” algorithm that allocates larger portions of the work to faster machines [Bloomer]. VIS segments the task of volume rendering based on scan-lines, with segments sized to balance computational effort against network transmission time. Each of the user-selected computation servers fetches a segment for rendering via remote procedure calls (RPC), returns the results, and fetches another segment. The servers effectively compete for segments, with faster servers processing more segments per unit time, which keeps the load relatively balanced across the pool. Analysis of this distribution algorithm [Giertsen, 93] shows that the performance improvement is a function of both the number of segments and the number of computational servers, with the optimal number of segments increasing directly with the number of available servers. Test results indicate that the performance improvement flattens out between 10 and 20 segments distributed across an available pool of four servers. Although this algorithm may not be perfect, it achieves acceptable results.
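The pull-based distribution described above can be illustrated with a small discrete-event simulation in Python. This is a sketch under stated assumptions rather than the VIS implementation: the function names, the server speeds, and the image height are hypothetical, and a priority queue of idle times stands in for real servers fetching segments over RPC.

```python
import heapq

def split_scanlines(height, n_segments):
    """Split `height` scan-lines into n_segments contiguous (start, end) ranges."""
    bounds = [round(i * height / n_segments) for i in range(n_segments + 1)]
    return [(bounds[i], bounds[i + 1]) for i in range(n_segments)]

def simulate_greedy(height, n_segments, speeds):
    """Simulate servers that each fetch the next segment as soon as they are idle.

    `speeds` gives each server's throughput in scan-lines per time unit
    (hypothetical values). Returns (finish_time, segments_done_per_server).
    """
    pending = split_scanlines(height, n_segments)
    # Each heap entry is (time at which the server becomes idle, server index).
    idle = [(0.0, s) for s in range(len(speeds))]
    heapq.heapify(idle)
    done = [0] * len(speeds)
    finish = 0.0
    for start, end in pending:
        t, s = heapq.heappop(idle)              # next server to become idle
        t_done = t + (end - start) / speeds[s]  # time to render this segment
        done[s] += 1
        finish = max(finish, t_done)
        heapq.heappush(idle, (t_done, s))
    return finish, done

# Four servers with assumed relative speeds; 400 scan-lines in 16 segments.
finish, done = simulate_greedy(height=400, n_segments=16, speeds=[4.0, 2.0, 1.0, 1.0])
print(finish, done)
```

Because a server only asks for more work when it has finished its current segment, the faster servers end up rendering more segments without any central estimate of machine speed. Network transmission time, which the paper weighs against segment size, is not modeled here.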

2.2 Cooperative Visualization

The VIS client, together with its volume rendering servers, may be launched by another application to act collectively as a visualization server. The two requirements of cooperation are a shared window system buffer for the rendered image and support for a limited number of inter-process messages. VIS and the initiating application communicate via the ToolTalk service, passing messages that specify the data object to visualize as well as options for visualization, and maintaining state regarding image display. The VIS Panel application appears as a new top-level window and allows the user to control the visualization tool.

3. Visualization with Mosaic

We have enhanced the Mosaic WWW browser to support both a three-dimensional data object and communication with VIS as a cooperating application (figure 2). HTTP servers respond to requests from clients, e.g. Mosaic, by transferring hypertext documents to the client. Those documents may contain text and images as intrinsic elements and may also contain external links to any arbitrary data object (e.g. audio or video). Mosaic may also communicate with other Internet servers, e.g. FTP, either directly, translating request results into HTML on demand, or via a gateway that provides translation services. As a WWW client, Mosaic communicates with the server(s) of interest in response to user actions (e.g. selecting a hyperlink), initiating a connection and requesting the document specified by the URL. The server delivers the file specified in the URL, which may be an HTML document or one of a variety of multimedia data files (for example, images, audio files, and MPEG movies), and Mosaic uses the predefined SGML DTD for HTML to parse and present the information. Data types not directly supported by Mosaic are displayed via user-specifiable external applications. We have extended that paradigm both to include three-dimensional volume data and to integrate the external applications more completely with Mosaic.

3.1 Mosaic 3D image support

We have extended the HTML DTD to support three-dimensional data via the introduction of a new SGML element: EMBED. This element provides information to the presentation system (i.e. Mosaic) about the content that is referenced in the document. The EMBED element is defined in the HTML DTD as shown in Example 1, which is translated as “SGML document instance element tag EMBED containing no content; four required attributes: TYPE, the type of the external application, in the MIME-type format; HREF, the location/URL of the datafile; WIDTH, the window width; and HEIGHT, the window height”. The TYPE attribute gives this specification the flexibility to accommodate different types of external applications. In an HTML document, a 3D image element would be represented as shown in Example 2, which may be interpreted as “create a drawing-area window of width 400 pixels, height 400 pixels, and use the application associated with the hdf/volume MIME content-type to visualize the data Embryo.hdf located at the HTTP server site www.library.ucsf.edu”.
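To make the role of the four attributes concrete, the Python sketch below parses a hypothetical EMBED tag built from the values in the prose description (hdf/volume, Embryo.hdf, www.library.ucsf.edu, 400 by 400 pixels). The exact tag syntax and the URL path are assumptions and should not be read as the authors' Example 2; the standard-library `html.parser` module stands in for Mosaic's own SGML handling, and the class name `EmbedFinder` is invented for this example.

```python
from html.parser import HTMLParser

REQUIRED = ("type", "href", "width", "height")

class EmbedFinder(HTMLParser):
    """Collect the attributes of every EMBED element in a document."""

    def __init__(self):
        super().__init__()
        self.embeds = []

    def handle_starttag(self, tag, attrs):
        if tag != "embed":          # HTMLParser lower-cases tag names
            return
        found = dict(attrs)         # attribute names are lower-cased as well
        missing = [name for name in REQUIRED if name not in found]
        if missing:
            raise ValueError("EMBED is missing attributes: %s" % missing)
        self.embeds.append({
            "type": found["type"],            # MIME type selecting the application
            "href": found["href"],            # location of the data file
            "width": int(found["width"]),     # drawing-area width in pixels
            "height": int(found["height"]),   # drawing-area height in pixels
        })

# Hypothetical markup; attribute values follow the description in the text.
page = ('<P>Embryo data set</P>'
        '<EMBED TYPE="hdf/volume" HREF="http://www.library.ucsf.edu/Embryo.hdf" '
        'WIDTH=400 HEIGHT=400>')
finder = EmbedFinder()
finder.feed(page)
finder.close()
embed = finder.embeds[0]
print(embed)
```

A browser following this scheme would look up the TYPE value in its table of external applications, reserve a WIDTH by HEIGHT drawing area, and hand the HREF to the application it launches.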

3.2 Interface with Mosaic<br />

The VIS/Mosaic software system consists of three elements:<br />

VIS, Mosaic, and Panel. Currently, the VIS application<br />

communicates with Mosaic via ToolT<strong>al</strong>k, but the<br />

system will work with any interclient communication protocol.<br />

When Mosaic interprets the HTML tag EMBED, it<br />

creates a drawing area widget in the document page presentation<br />

and requests a shared buffer or pixmap from the windowing<br />

system to receive visu<strong>al</strong>ization results. In addition, Mosaic<br />

launches the Panel process, specifying the location of the data<br />

object to render and identifying the shared image buffer. The<br />

Panel process begins execution by first verifying its operating<br />

parameters, then launching the VIS process. The Panel process<br />

<strong>al</strong>so presents the user with the control elements for data<br />

manipulation and manages the communication between the<br />

whole VIS application and Mosaic.<br />

The VIS process, on the other hand, serves as a rendering<br />

engine. It executes the visu<strong>al</strong>ization commands from the Panel<br />

process, integrates the image data segments from various<br />

VRServers, and presents the complete array of image data to<br />

the Panel.<br />

Thus the scenario following a user’s action on the Panel will be: (1) Panel issues visualization commands to the VIS rendering engine, (2) VIS sends rendering requests to the VRServer(s), then gathers the resulting image segments, (3) Panel fetches the returned image data, then writes it to the pixmap, (4) Panel notifies Mosaic upon completion, and (5) Mosaic bit-blits the pixmap contents into its corresponding DrawingArea widget. Interprocess communication is addressed in more detail in Section 3.3. The configuration of this software system is depicted in Figure 3.

Figure 2: VIS embedded within Mosaic for interactive visualization in an HTML document.

3.3 Interclient communication<br />

We identified a minimum set of messages to be exchanged between Mosaic and a particular Panel process:

(a) Messages from Mosaic to a Panel process include the following:

(i) ExitNotify - requesting the Panel to terminate itself when Mosaic exits.

(ii) MapNotify - requesting the Panel to map itself to the screen when the HTML document containing the DrawingArea corresponding to the above Panel is visible.

(iii) UnmapNotify - requesting the Panel to unmap/iconify itself when the HTML page containing the DrawingArea corresponding to the above Panel is cached.

(b) Messages from a Panel process to Mosaic may be one of the following:

(i) RefreshNotify - informing Mosaic of an update in the shared pixmap, and requesting Mosaic to update the corresponding DrawingArea.

(ii) PanelStartNotify - informing Mosaic that the Panel has started successfully and is ready to receive messages.

(iii) PanelExitNotify - informing Mosaic that the Panel is exiting, and that Mosaic should not send any more messages to the Panel.

We have packaged the above protocols and all the required messaging functions into a library. Modifying an existing external application merely involves registering the external application’s messaging window (the window that receives Mosaic’s messages), installing callback functions corresponding to the messages from Mosaic, and adding calls to the message-sending routines. The protocol is summarized in Table 1.

| Message | Description |
|---|---|
| ExitNotify | Mosaic exiting |
| MapNotify | DrawingArea visible |
| UnmapNotify | DrawingArea cached |
| RefreshNotify | DrawingArea update |
| PanelStartNotify | Panel starting |
| PanelExitNotify | Panel exiting |

Table 1: Mosaic/VIS IPC communication.
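The integration steps described above (installing callbacks for Mosaic's messages and adding message-sending calls) can be sketched as follows. This is only an in-process illustration and not the authors' library: the class `PanelEndpoint` and its method names are hypothetical, and the actual transport (ToolTalk or another inter-client mechanism) is replaced by a plain Python callable.

```python
from enum import Enum


class Message(Enum):
    """The six message names listed in Table 1."""
    EXIT_NOTIFY = "ExitNotify"
    MAP_NOTIFY = "MapNotify"
    UNMAP_NOTIFY = "UnmapNotify"
    REFRESH_NOTIFY = "RefreshNotify"
    PANEL_START_NOTIFY = "PanelStartNotify"
    PANEL_EXIT_NOTIFY = "PanelExitNotify"


# Direction of each message, as described in the text.
MOSAIC_TO_PANEL = frozenset(
    {Message.EXIT_NOTIFY, Message.MAP_NOTIFY, Message.UNMAP_NOTIFY})
PANEL_TO_MOSAIC = frozenset(
    {Message.REFRESH_NOTIFY, Message.PANEL_START_NOTIFY, Message.PANEL_EXIT_NOTIFY})


class PanelEndpoint:
    """Panel-side view of the protocol (hypothetical helper, not the real library)."""

    def __init__(self, send_to_mosaic):
        # send_to_mosaic stands in for the real inter-client transport.
        self._send_to_mosaic = send_to_mosaic
        self._callbacks = {}

    def install_callback(self, message, callback):
        """Register the handler to run when Mosaic sends `message`."""
        if message not in MOSAIC_TO_PANEL:
            raise ValueError(f"{message.value} is not sent by Mosaic")
        self._callbacks[message] = callback

    def receive(self, message):
        """Dispatch an incoming message; return False if no handler is installed."""
        callback = self._callbacks.get(message)
        if callback is None:
            return False
        callback()
        return True

    def send(self, message):
        """Send one of the Panel-to-Mosaic notifications."""
        if message not in PANEL_TO_MOSAIC:
            raise ValueError(f"{message.value} is not sent by a Panel")
        self._send_to_mosaic(message)
```

A Panel built this way would typically send `PANEL_START_NOTIFY` once its callbacks are installed, and `REFRESH_NOTIFY` after each write to the shared pixmap.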

4. Results<br />

The results of the above implementation are very encouraging. Mosaic/VIS successfully allows users to visualize HDF volume datasets from various HTTP server sites. Figure 2 shows a snapshot of the WWW visualizer. Distributing the volume rendering load results in a remarkable speedup in image computation. Our performance analysis with a homogeneous pool of Sun SPARCstation 2s on a relatively calm network produced reasonable results (Figures 4a, 4b, and 4c; three trials per plot). The time-versus-number-of-workstations curve decreases as more servers participate, and plateaus when the number of SPARCstations reaches 11 for the 256x256 image (9 for the 192x192 image, and 7 for the 128x128 image). The speed increases at the plateaus are very significant: about 10 times for the 256x256 image, 8 times for the 192x192 image, and 5 times for the 128x128 image. The outcomes suggest that performance improvement is a function of the number of volume rendering servers. Furthermore, the optimal number of workstations and the speed increase are larger when the image size is bigger. This is in complete agreement with Giertsen’s analysis. We have also successfully tested the software system in an environment consisting of heterogeneous workstations: an SGI Indigo2 R4400/150MHz, two SGI Indy R4000PC/100MHz, a DEC Alpha 3000/500 with a 133MHz Alpha processor, two Sun SPARCstation 10s, and two Sun SPARCstation 2s, which were located arbitrarily on an Ethernet network. To our knowledge this is the first demonstration of the embedding of interactive control of a client/server visualization application within a multimedia document in a distributed hypermedia environment, such as the World Wide Web.
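One way to read the plateau figures quoted above is through parallel efficiency, defined here in the usual way as speedup divided by the number of servers. The short calculation below uses only the approximate numbers reported in the text. The efficiency values are derived for illustration and are not measurements from the paper.

```python
# (servers at the plateau, approximate speedup), as quoted in the text above.
REPORTED = {
    "256x256": (11, 10.0),
    "192x192": (9, 8.0),
    "128x128": (7, 5.0),
}


def parallel_efficiency(speedup, servers):
    """Speedup per server; 1.0 would mean perfectly linear scaling."""
    if servers <= 0:
        raise ValueError("servers must be positive")
    return speedup / servers


def efficiency_table(reported):
    """Map each image size to its efficiency at the plateau, rounded to 2 places."""
    return {
        size: round(parallel_efficiency(speedup, servers), 2)
        for size, (servers, speedup) in reported.items()
    }


print(efficiency_table(REPORTED))
# {'256x256': 0.91, '192x192': 0.89, '128x128': 0.71}
```

Under these rounded inputs, the larger images keep the servers better utilized at the plateau. This is consistent with the observation that the optimal number of workstations grows with image size.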

5. Ongoing/Future work<br />

We have begun working on several extensions and improvements to the above software system:

Example 1: SGML definition for EMBED element.

TYPE="hdf/volume" WIDTH=400 HEIGHT=400>

Example 2: EMBED element usage.

5.1 MPEG Data Compression<br />

Figure 3: Communication among Mosaic, VIS and distributed rendering servers. (Diagram labels: Mosaic, inter-client communication, Panel, visualization commands, image data, VIS, rendering requests, image segments, VRServer(s).)

The data transferred between the visualization servers and the clients consists of the exact byte streams computed by the servers, packaged in the machine-independent XDR format. One way to reduce network transfer time would be to compress the data before delivery. We propose to use the MPEG compression technique, which performs not only redundancy reduction but also quality-adjustable entropy reduction. Furthermore, the MPEG algorithm performs interframe as well as intraframe compression. Consequently, only the compressed difference between the current and the previous frames is shipped to the client.
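The idea of shipping only the difference between consecutive frames can be illustrated with a much simpler stand-in than MPEG. The sketch below takes a byte-wise difference against the previous frame and compresses it losslessly with `zlib`. It is not MPEG: it has no motion compensation and no quality-adjustable (lossy) stage. The function names are hypothetical.

```python
import zlib


def encode_delta(previous, current):
    """Compress the byte-wise difference between two equally sized frames."""
    if len(previous) != len(current):
        raise ValueError("frames must have the same size")
    # Modulo-256 difference keeps every value in the byte range.
    delta = bytes((c - p) % 256 for p, c in zip(previous, current))
    return zlib.compress(delta)


def decode_delta(previous, payload):
    """Rebuild the current frame from the previous frame and a delta payload."""
    delta = zlib.decompress(payload)
    if len(delta) != len(previous):
        raise ValueError("payload does not match the frame size")
    return bytes((p + d) % 256 for p, d in zip(previous, delta))
```

When successive renderings change only slightly, most of the difference bytes are zero and compress well, which is the effect the interframe stage of a real video codec exploits.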

5.2 Generalized External-Application-to-Mosaic-Document-Page Display Interface<br />

The protocols specified in Table 1 are simple, and general enough to allow most image-producing programs to be modified to display in the Mosaic document page. We have successfully incorporated an in-house CAD model rendering program into Mosaic. Our next undertakings will be to extend the protein database (PDB) displaying program and the xv 2D image processing program, to create a Mosaic PDB visualization server and a Mosaic 2D image processing server.

5.3 Multiple Users<br />

Figure 4: Volume rendering performance for 128², 192², and 256² data sets. (Plots of time in seconds versus number of servers, with one curve per volume size: 128x128, 192x192, and 256x256.)

With multiple users, the VIS/Mosaic distributed visualization system will need to manage the server resources, since multiple users utilizing the same computational servers will slow the servers down significantly. The proposed solution is depicted in Figure 5. The server resource manager will allocate servers per VIS client request only if those servers are not overloaded. Otherwise, negotiation between the resource manager and the VIS client will be necessary, and the resource manager may allocate less busy alternatives to the client.
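A minimal sketch of such a resource manager is given below, under stated assumptions. A server counts as overloaded once it serves a fixed number of clients (the `capacity` argument), and "negotiation" is reduced to substituting the least busy server that still has room. Neither rule comes from the paper, and the class and method names are hypothetical.

```python
class ServerResourceManager:
    """Tracks per-server load and grants servers that are not overloaded."""

    def __init__(self, capacity):
        # capacity: {host: maximum number of concurrent clients} (assumed policy)
        self._capacity = dict(capacity)
        self._load = {host: 0 for host in self._capacity}

    def _has_room(self, host):
        return self._load[host] < self._capacity[host]

    def _least_busy(self, excluded):
        # Sorted for a deterministic choice when several hosts are equally loaded.
        candidates = [host for host in sorted(self._load)
                      if host not in excluded and self._has_room(host)]
        return min(candidates, key=lambda host: self._load[host], default=None)

    def allocate(self, requested):
        """Grant each requested host, or a less busy alternative if it is full."""
        requested = list(requested)
        granted = []
        for host in requested:
            if host not in self._load:
                raise KeyError(host)
            if self._has_room(host):
                choice = host
            else:
                choice = self._least_busy(excluded=set(requested) | set(granted))
            if choice is not None:
                self._load[choice] += 1
                granted.append(choice)
        return granted

    def release(self, hosts):
        """Return previously granted hosts to the pool."""
        for host in hosts:
            if self._load[host] > 0:
                self._load[host] -= 1
```

A client that receives fewer servers than it asked for could either proceed with the smaller set or retry later. That choice is left open here.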

5.4 Load Distributing Algorithm<br />

Since the load distributing algorithm in the current VIS implementation is not optimal, we expect to see some improvement in a future implementation, which will use the sender-initiated algorithms described in [Shivaratri].
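For orientation, the sketch below shows one commonly described sender-initiated scheme, a threshold policy with random polling: an overloaded node polls a limited number of randomly chosen peers and transfers work to the first one that would stay at or below the threshold. The text does not say which variant VIS would adopt, so the function name, parameters, and the choice to poll without repetition are all assumptions.

```python
import random


def choose_receiver(queue_lengths, sender, threshold, poll_limit, rng=None):
    """Pick a peer to receive one task from `sender`, or None to run it locally.

    queue_lengths maps node name to its current queue length; the sender's
    entry is assumed to already include the newly arrived task.
    """
    if sender not in queue_lengths:
        raise KeyError(sender)
    rng = rng or random.Random()
    # Transfer policy: only a node above the threshold acts as a sender.
    if queue_lengths[sender] <= threshold:
        return None
    # Location policy: poll up to poll_limit random peers, never the same one twice.
    peers = [node for node in queue_lengths if node != sender]
    rng.shuffle(peers)
    for peer in peers[:poll_limit]:
        # Accept only if the transfer would not push the peer over the threshold.
        if queue_lengths[peer] + 1 <= threshold:
            return peer
    return None
```

The poll limit bounds the messaging overhead: when every polled peer is busy, the sender simply processes the task itself.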

Figure 5: Server Resource Management. (Diagram labels: client visualization processes, request for service, server resource manager, process allocation, visualization server process pool, visualization commands, encoded image data, network boundary.)

6. Conclusions<br />

Our system takes the technology of networked multimedia systems (especially the World Wide Web) a step further by proving the possibility of adding new interactive data types to both the WWW servers and clients. The addition of the 3D volume data object in the form of an HDF file to the WWW has been welcomed by many medical researchers, for it is now possible for them to view volume datasets without a high-cost workstation. Furthermore, these visualizations can be accessed via the WWW, through hypertext and hypergraphics links within an HTML page. Future implementations of this approach using other types of embedded applications will allow the creation of a new paradigm for the online distribution of multimedia information via the Internet.

7. References<br />

Argiro, V., “Seeing in Volume”, Pixel, July/August 1990, 35-39.

Avila, R., Sobierajski, L. and Kaufman, A., “Towards a Comprehensive Volume Visualization System”, Visualization ’92 Proceedings, IEEE Computer Society Press, October 1992, 13-20.

Andreessen, M., “NCSA Mosaic Technical Summary”, from FTP site ftp.ncsa.uiuc.edu, 8 May 1993.

Bloomer, J., “Power Programming with RPC”, O’Reilly & Associates, September 1992, 401-451.

Brinkley, J.F., Eno, K., Sundsten, J.W., “Knowledge-based client-server approach to structural information retrieval: the Digital Anatomist Browser”, Computer Methods and Programs in Biomedicine, Vol. 40, No. 2, June 1993, 131-145.

Broering, N. C., “Georgetown University, The Virtual Medical Library,” Computers in Libraries, Vol. 13, No. 2, February 1993, 13.

Drebin, R. A., Carpenter, L. and Hanrahan, P., “Volume Rendering”, Computer Graphics, Vol. 22, No. 4, August 1988, 64-75.

Flanders, B., “Hypertext Multimedia Software: Bell Atlantic DocuSource”, Computers in Libraries, Vol. 13, No. 1, January 1993, 35-39.

Gelerg, L., “Volume Rendering in AVS5”, AVS Network news, Vol. 1, Issue 4, 11-14.

Giertsen, C. and Petersen, J., “Parallel Volume Rendering on a Network of Workstations”, IEEE Computer Graphics and Applications, November 1993, 16-23.

Jäger, M., Osterfeld, U., Ackermann, H., and Hornung, C., “Building a Multimedia ISDN PC”, IEEE Computer Graphics and Applications, September 1993, 24-33.

Kaufman, A., Cohen, D., and Yagel, R., “Volume Graphics”, Computer, July 1993, 51-64.

Kiong B., and Tan, T., “A hypertext-like approach to navigating through the GCG sequence analysis package”, Computer Applications in the Biosciences, Vol. 9, No. 2, 1993, 211-214.

Levoy, M., “Display of Surfaces from Volume Data”, IEEE Computer Graphics and Applications, Vol. 8, No. 5, May 1988, 29-37.

Lorensen, W., Cline, H.E., “Marching Cubes: A High Resolution 3D Surface Construction Algorithm”, Computer Graphics, Vol. 21, No. 4, July 1987, 163-169.

Mercurio, F., “Khoros”, Pixel, March/April 1992, 28-33.

Narayan, S., Sensharrma D., Santori, E.M., Lee, A.A., Sabherwal, A., Toga, A.W., “Animated visualization of a high resolution color three dimensional digital computer model of the whole human head”, International Journal of Bio-Medical Computing, Vol. 32, No. 1, January 1993, 7-17.

Nickerson, G., “WorldWideWeb Hypertext from CERN”, Computers in Libraries, Vol. 12, No. 11, December 1992, 75-77.

Obraczka, K., Danzig, P., and Li, S., “Internet Resource Discovery Services”, Computer, Vol. 26, No. 9, September 1993, 8-22.

Pommert, A., Riemer, M., Schiemann, T., Schubert, R., Tiede, U., Hoehne, K-H, “Methods and Applications of Medical 3D-Imaging”, SIGGRAPH 93 course notes for volume visualization, 68-97.

Robison, D., “The Changing States of Current Cites: The Evolution of an Electronic Journal”, Computers in Libraries, Vol. 13, No. 6, June 1993, 21-26.

Shivaratri, N.G., Krueger, P., and Singhal, M., “Load Distributing for Locally Distributed Systems”, Computer, December 1992, 33-44.

Singh, J., Hennessy, J. and Gupta, A., “Scaling Parallel Programs for Multiprocessors: Methodology and Examples”, Computer, July 1993, 42-49.

Story, G., O’Gorman, L., Fox, D., Schaper, L. and Jagadish, H.V., “The RightPages Image-Based Electronic Library for Alerting and Browsing”, Computer, September 1992, 17-26.

VandeWettering, M., “apE 2.0”, Pixel, November/December 1990, 30-35.

Woodward, P., “Interactive Scientific Visualization of Fluid Flow”, Computer, Vol. 26, No. 10, June 1993, 13-25.

Zandt, W.V., “A New ‘Inlook’ On Life”, UNIX Review, Vol. 7, No. 3, March 1989, 52-57.

Please reference the following QuickTime movie located in the MOV<br />

directory:<br />

CHEONG.MOV<br />

Copyright © 1994 by Cheong S. Ang, M.S.<br />

QuickTime is a trademark of Apple Computer, Inc.

VolVis: A Diversified Volume Visualization System

Ricardo Avila‡, Taosong He*, Lichan Hong*, Arie Kaufman*, Hanspeter Pfister*, Claudio Silva*, Lisa Sobierajski*, Sidney Wang*

‡Howard Hughes Medical Institute, State University of New York at Stony Brook, Stony Brook, NY 11794-5230

*Department of Computer Science, State University of New York at Stony Brook, Stony Brook, NY 11794-4400

Abstract<br />

VolVis is a diversified, easy to use, extensible, high performance, and portable volume visualization system for scientists and engineers as well as for visualization developers and researchers. VolVis accepts as input 3D scalar volumetric data as well as 3D volume-sampled and classical geometric models. Interaction with the data is controlled by a variety of 3D input devices in an input device-independent environment. VolVis output includes navigation preview, static images, and animation sequences. A variety of volume rendering algorithms are supported, ranging from fast rough approximations, to compression-domain rendering, to accurate volumetric ray tracing and radiosity, and irregular grid rendering.

1. Introduction<br />

The visualization of volumetric data has aided many scientific disciplines ranging from geophysics to the biomedical sciences. The diversity of these fields coupled with a growing reliance on visualization has spawned the creation of a number of specialized visualization systems. These systems are usually limited by machine and data dependencies and are typically not flexible or extensible. A few visualization systems have attempted to overcome these dependencies (e.g., AVS, SGI Explorer, Khoros) by taking a data-flow approach. However, the added computational costs associated with data-flow systems result in poor performance. In addition, these systems require that the scientist or engineer invest a large amount of time understanding the capabilities of each of the computational modules and how to effectively link them together.

VolVis is a volume visualization system that unites numerous visualization methods within a comprehensive visualization system, providing a flexible tool for the scientist and engineer as well as the visualization developer and researcher. The VolVis system has been designed to meet the following key objectives:

Diversity: VolVis supplies a wide range of functionality with numerous methods provided within each functional component. For example, VolVis provides various projection methods including ray casting, ray tracing, radiosity, Marching Cubes, and splatting.

Ease of use: The VolVis user interface is organized into functional components, providing an easy to use visualization system. One advantage of this approach over data-flow systems is that the user does not have to learn how to link numerous modules in order to perform a task.

Extensibility: The structure of the VolVis system is designed to allow a visualization programmer to easily add new representations and algorithms. For this purpose, an extensible and hierarchical abstract model was developed [1] which contains definitions for all objects in the system.

Portability: The VolVis system, written in C, is highly portable, running on most Unix workstations supporting X/Motif. The system has been tested on Silicon Graphics, Sun, Hewlett-Packard, Digital Equipment Corporation, and IBM workstations and PCs.

Freely available: The high cost of most visualization systems and difficulties in obtaining their source code often lead researchers to write their own tools for specific visualization tasks. VolVis is freely available as source code.

2. System Overview<br />

Figure 1 shows the VolVis pipeline, indicating some<br />

paths that input data could take through the VolVis system<br />

in order to produce visu<strong>al</strong>ization output. Two of the basic<br />

input data classes of VolVis are volumetric data and 3D<br />

geometric data. The input data is processed by the<br />

Modeling and Filtering components of the system to<br />

produce either a 3D volume model or a 3D geometric<br />

surface model of the data. For example, geometric data can<br />

be converted into a volume model by the Modeling<br />

component of the system, as described in Section 3, to<br />

allow for volumetric graphic operations. A geometric<br />

surface model can be created from a volume model by the<br />

process of surface extraction.

Figure 1: The VolVis pipeline. (Diagram labels: 3D Scalar Field, 3D Geometric Objects, Modeling & Filtering, Volumetric Model, Geometric Surface Model, Surface Extraction, Measurement, Manipulation, Rendering, Environment, Navigation Preview, Image, Animation, Physical Input Device, Input Device Abstraction, Virtual Input Device. Key: Input Data, Internal Data, Output Visualization, Action.)

The Measurement component can be used to obtain quantitative information from the data models. Surface area, volume, histogram and distance information can be extracted from volumes using one of several methods. Isosurface volume and surface area measurements can be taken either on an entire volume or on a surface-tracked section. Additionally, surface areas and volumes can be computed using either a simple non-interpolated voxel counting method or a Marching Cubes [8] based measurement method. For geometric surface models, surface area, volume, and distance measurements can be performed.
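A non-interpolated voxel counting measurement can be sketched as follows. The assumptions here are illustrative and are not taken from the VolVis source: a voxel counts as inside when its value is at or above the isovalue, the volume is a nested list indexed `volume[z][y][x]`, and surface area is estimated by summing the faces of inside voxels that border an outside voxel or the edge of the volume.

```python
def _inside(volume, iso_value, x, y, z):
    """True if (x, y, z) lies within the volume and is at or above the isovalue."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    if not (0 <= x < nx and 0 <= y < ny and 0 <= z < nz):
        return False
    return volume[z][y][x] >= iso_value


def voxel_count_volume(volume, iso_value, spacing=(1.0, 1.0, 1.0)):
    """Volume estimate: number of inside voxels times the volume of one voxel."""
    dx, dy, dz = spacing
    count = sum(1 for plane in volume for row in plane
                for value in row if value >= iso_value)
    return count * dx * dy * dz


def voxel_count_surface_area(volume, iso_value, spacing=(1.0, 1.0, 1.0)):
    """Area estimate: total area of voxel faces separating inside from outside."""
    dx, dy, dz = spacing
    neighbours = (
        ((1, 0, 0), dy * dz), ((-1, 0, 0), dy * dz),
        ((0, 1, 0), dx * dz), ((0, -1, 0), dx * dz),
        ((0, 0, 1), dx * dy), ((0, 0, -1), dx * dy),
    )
    area = 0.0
    for z, plane in enumerate(volume):
        for y, row in enumerate(plane):
            for x, value in enumerate(row):
                if value < iso_value:
                    continue
                for (ox, oy, oz), face_area in neighbours:
                    if not _inside(volume, iso_value, x + ox, y + oy, z + oz):
                        area += face_area
    return area
```

Because the faces are axis-aligned, this kind of estimate overstates the area of slanted or curved surfaces. That is one reason an interpolating method such as a Marching Cubes based measurement can be preferable.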

Most of the interaction in VolVis occurs within the<br />

Manipulation component of the system. This part of the<br />

system allows the user to modify object parameters such as<br />

color, texture, and segmentation, and viewing parameters<br />

such as image size and field of view. Within the<br />

Navigation section of the Manipulation component, the<br />

user can interactively modify the position and orientation<br />

of the volumes, the light sources, and the view. This is<br />

closely connected to the Animation section of the<br />

Manipulation component, which allows the user to specify<br />

animation sequences either interactively or with a set of<br />

transformations to be applied to objects in the scene. The<br />

Manipulation component is described in Section 4.<br />

The Rendering component encompasses several different rendering algorithms, including geometry-based techniques such as Marching Cubes, global illumination methods such as ray tracing and radiosity, and direct volume rendering algorithms such as splatting. The Rendering component is described in Section 5.

The Input Device component of the system maps physical input device data into a device-independent representation that is used by various algorithms requiring user interaction. As a result, the VolVis system is input device independent, as described in Section 6.

3. Modeling<br />

A primary responsibility of the Modeling component is the voxelization of geometric data into volumetric model representations. Voxelizing a continuous model into a volume raster of voxels requires a geometrical sampling process which determines the values to be assigned to voxels of the volume raster. To reduce object space aliasing, we adopt a volume sampling technique [14] that estimates the density contribution of the geometric objects to the voxels. The density of a voxel is determined by a filter weight function which is proportional to the distance between the center of the voxel and the geometric primitive. In our implementation, precomputed tables of densities for a predefined set of geometric primitives are used to assign the density value of each voxel. For each voxel visited by the voxelization algorithm, the distance to the predefined primitive is used as an index into the tables.
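The table lookup described above can be sketched for a single primitive, a sphere. The filter shape (a linear ramp over the signed distance to the surface), the table size, and all names are assumptions made for this illustration. The actual VolVis filter and tables are not given in the text.

```python
import math


def build_density_table(filter_radius, entries=64):
    """Precompute densities for signed distances in [-filter_radius, +filter_radius].

    Assumed filter: a linear ramp from 1.0 (well inside) to 0.0 (well outside).
    """
    table = []
    for i in range(entries):
        distance = -filter_radius + 2.0 * filter_radius * i / (entries - 1)
        table.append(0.5 - distance / (2.0 * filter_radius))
    return table


def lookup_density(table, filter_radius, distance):
    """Use the signed distance to the primitive as an index into the table."""
    t = (distance + filter_radius) / (2.0 * filter_radius)
    t = min(1.0, max(0.0, t))  # clamp distances that fall outside the filter support
    return table[round(t * (len(table) - 1))]


def voxelize_sphere(size, center, radius, filter_radius=1.5, entries=64):
    """Return a size^3 nested list volume[z][y][x] of densities for a sphere."""
    table = build_density_table(filter_radius, entries)
    cx, cy, cz = center
    volume = []
    for z in range(size):
        plane = []
        for y in range(size):
            row = []
            for x in range(size):
                to_center = math.sqrt((x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2)
                # Signed distance to the surface: negative inside, positive outside.
                row.append(lookup_density(table, filter_radius, to_center - radius))
            plane.append(row)
        volume.append(plane)
    return volume
```

Voxels near the surface receive intermediate densities rather than a hard 0 or 1, which gives the smooth transition that reduces object space aliasing.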

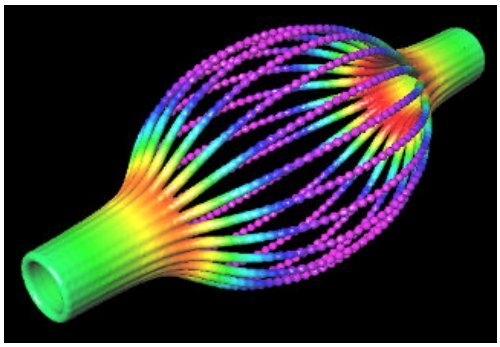

Figure 2: A volumetric ray traced image of a volume-sampled geometric wine bottle and glasses.

Since the voxelized geometric objects are represented as volume rasters of density values, we can essentially treat them as sampled or simulated volume data sets, such as 3D medical imaging data sets, and employ one of many volume rendering techniques for image generation. One advantage of this approach is that volume rendering carries the smoothness of the volume-sampled objects from object space over into image space. Hence, the silhouette of the objects, reflections, and shadows are smooth. Furthermore, by not performing any geometric ray-object intersections or geometric surface normal calculations, a large amount of rendering time is saved. In addition, CSG operations between two volume-sampled geometric models are accomplished at the voxel level during voxelization, thereby reducing the original problem of evaluating a CSG tree of such operations down to a Boolean operation between pairs of voxels. Figure 2 shows a ray traced image of a wine bottle and glasses that were modeled by CSG operations on volume-sampled geometric objects. The upper right window in Figure 3 shows a ray traced image of a nut and bolt that were also modeled by CSG operations.
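To make the voxel-level formulation concrete, the sketch below combines two density volumes one voxel pair at a time. The particular operators (maximum for union, minimum for intersection, and minimum with the complement for difference, on densities in [0, 1]) are a common choice for density-valued voxels and are assumed here. The text does not state which operators VolVis uses.

```python
def combine(a, b, voxel_op):
    """Apply voxel_op to corresponding voxels of two equally shaped nested lists."""
    if isinstance(a, list) != isinstance(b, list):
        raise ValueError("volumes have different shapes")
    if isinstance(a, list):
        if len(a) != len(b):
            raise ValueError("volumes have different shapes")
        return [combine(x, y, voxel_op) for x, y in zip(a, b)]
    return voxel_op(a, b)


# Assumed per-voxel operators for densities in the range [0, 1].
VOXEL_OPS = {
    "union": max,
    "intersection": min,
    "difference": lambda p, q: min(p, 1.0 - q),
}


def csg(a, b, operation):
    """Evaluate one CSG operation between two volume-sampled models."""
    return combine(a, b, VOXEL_OPS[operation])
```

A CSG tree then reduces to repeated calls of `csg` on intermediate volumes, with no geometric intersection tests needed.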

Figure 3: An example VolVis session. The nut and bolt are volume-sampled geometric models.

4. Manipulation<br />

The Manipulation component of VolVis consists of three sections: the Object Control section, the Navigation section, and the Animation section. The Navigation and Animation sections are also referred to as the Navigator and Animator, respectively. Both the Navigator and Animator produce output visualization, shown in Figure 1 as Navigation Preview and Animation, respectively.

The Object Control section of the system is extensive, allowing the user to manipulate parameters of the objects in the scene. This includes modifications to the color, texture, and shading parameters of each volume, as well as more complex operations such as positioning of cut planes and data segmentation. The color and position of all light sources can be interactively manipulated by the user. Also, viewing parameters, such as the final image size, and global parameters, such as ambient lighting and the background color, can be modified.

The Navigator allows the user to interactively manipulate objects within the system. The user can translate, scale and rotate all volumes and light sources, as well as the view itself. The Navigator can also be used to interactively manipulate the view in a manner similar to a flight simulator. To provide interactive navigation speed, a fast rendering algorithm was developed which involves projecting reduced resolution representations of all objects in the scene. This task is relatively simple for geometric objects, where calculating, storing, and projecting a polygonal approximation requires little overhead. However, when considering a volumetric isosurface, the cost of an additional representation increases considerably. A simple and memory efficient method available within the Navigator creates a reduced resolution representation of an isosurface by uniformly subdividing the volume into boxes and projecting the outer faces of all the boxes that contain a portion of the isosurface. These subvolumes serve a dual purpose in that they are also used by the PARC (Polygon Assisted Ray Casting) acceleration method [1] during ray casting and ray tracing.
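The uniform subdivision step can be sketched as a search for boxes whose samples straddle the isovalue. Several details are assumptions for illustration rather than the VolVis or PARC implementation: the volume is a nested list `volume[z][y][x]`, each box spans `box_size` cells and shares its boundary samples with its neighbours, and a box contains the isosurface when it holds at least one sample below the isovalue and one at or above it.

```python
def isosurface_boxes(volume, iso_value, box_size):
    """Return (bx, by, bz) indices of the boxes that contain part of the isosurface."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])

    def box_count(samples):
        cells = samples - 1  # n samples along an axis bound n - 1 cells
        return (cells + box_size - 1) // box_size

    hits = []
    for bz in range(box_count(nz)):
        for by in range(box_count(ny)):
            for bx in range(box_count(nx)):
                # Include the far boundary sample so cells at the box edge are covered.
                values = [
                    volume[z][y][x]
                    for z in range(bz * box_size, min((bz + 1) * box_size, nz - 1) + 1)
                    for y in range(by * box_size, min((by + 1) * box_size, ny - 1) + 1)
                    for x in range(bx * box_size, min((bx + 1) * box_size, nx - 1) + 1)
                ]
                if min(values) < iso_value <= max(values):
                    hits.append((bx, by, bz))
    return hits
```

The result is just a compact list of box indices. A preview renderer would project the outer faces of these boxes, and a ray caster could use the same list to skip empty regions.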

Although the PARC subvolume representation can be stored as a compact list of subvolume indices, the resulting images are boxy and uninformative for many data sets. To overcome this problem, another method is provided which utilizes a reduced resolution Marching Cubes representation of an isosurface. In order to reduce the amount of data required for this representation, edge intersections used to compute triangle vertices are restricted to one of four possible locations. This results in much smoother images which are typically more informative than the uniform subdivision method. The Navigator also supports the other VolVis rendering techniques that are described in Section 5, although interactive projection rates with these methods can be achieved only on high-end workstations.

The Animator also allows the user to specify transformations to be applied to objects within the scene, but as opposed to the Navigator, which is used to apply a single transformation at a time, the Animator can be used to specify a sequence of transformations to produce an animation. The user can preview the animation using one of the fast rendering techniques within the Navigator. The user can then select a more accurate and time consuming rendering technique, such as volumetric ray tracing, to create a high quality animation. In addition to simple rotation, translation and scaling animations, the Navigator can be used to interactively specify a ‘‘flight path’’, which can then be passed to the Animator, and rendered to create an animation.

An example session of the VolVis system is shown in Figure 3. The long window on the left is the main VolVis interface window, with buttons for each of the major components of the system. The current scene is displayed in the Navigator window on the left, and in the Rendering image window on the right. A low resolution Marching Cubes technique was used in the Navigator, while a ray casting technique using the PARC acceleration method was employed during rendering.

5. Rendering<br />

Rendering is one of the most important and extensive components of the VolVis system. For the user, speed and accuracy are both important, yet often conflicting aspects of the rendering process. For this reason, a variety of rendering techniques have been implemented within the VolVis system, ranging from the fast, rough approximation of the final image, to the comparatively slow, accurate rendering within a global illumination model. Also, each rendering algorithm itself supports several levels of accuracy, giving the user an even greater amount of control. In this section, a few of the rendering techniques developed for the VolVis system are discussed.

Two of the VolVis rendering techniques, volumetric ray tracing and volumetric radiosity, are built upon global illumination models. Standard volume rendering techniques, which are also supported by VolVis, typically employ only a local illumination model for shading, and therefore produce images without global effects. Including a global illumination model within a visualization system has several advantages. First, global effects can often be desirable in scientific applications. For example, by placing mirrors in the scene, a single image can show several views of an object in a natural, intuitive manner, leading to a better understanding of the 3D nature of the scene. Also, complex surfaces are often easier to render when represented volumetrically than when represented by high-order functions or geometric primitives, as described in Section 3. Volumetric ray tracing is described in Section 5.1 and volumetric radiosity is discussed in Section 5.2.

In order to reduce the large storage and transmission overhead as well as the volume rendering time for volumetric data sets, a data compression technique is incorporated into the VolVis system. This technique allows volume rendering to be directly performed on the compressed data and is described in Section 5.3.

Although many scanning devices create data sets that are inherently rectilinear, this restriction poses problems for fields in which an irregular data representation is necessary. These fields include computational fluid dynamics, finite element analysis, and meteorology. Therefore, support was added for irregularly gridded data formats in the VolVis system, as discussed in Section 5.4.

5.1. Volumetric Ray Tracing<br />

The volumetric ray tracer provided within the VolVis system is intended to produce accurate, informative images [11]. In classical ray tracing, the rendering algorithm is designed to generate images that are accurate according to the laws of optics. In VolVis, the ray tracer must handle classical geometric objects as well as volumetric data, and strict adherence to the laws of optics is not always desirable. For example, a scientist may wish to view the maximum value along the segment of a ray passing through a volume, instead of the optically correct composited value. Figure 4 illustrates the importance of including global effects in a maximum-value projection of a hippocampal pyramidal neuron data set, which was obtained using a laser-scanning confocal microscope. Since maximum-value projections do not give depth information, a floor is placed below the cell, and a light source above the cell. This results in a shadow of the cell on the floor, adding back the depth information lost by the maximum-value projection.
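The loss of depth information can be seen in a small sketch: a maximum-value projection keeps only the largest sample along a ray, so reversing the order of the samples (that is, viewing the same material from the opposite side) gives the same result. The function name `max_value_projection` is hypothetical, and the sample values are made up for illustration.

```python
from typing import Sequence


def max_value_projection(samples: Sequence[float]) -> float:
    """Return the largest sample value found along one ray (0.0 if empty)."""
    return max(samples, default=0.0)


# Samples along a ray, ordered front to back (illustrative values only).
front_to_back = [0.2, 0.9, 0.4]
back_to_front = list(reversed(front_to_back))

# Both orderings project to the same value, so the pixel carries no
# information about where along the ray the maximum was found.
same_pixel = max_value_projection(front_to_back) == max_value_projection(back_to_front)
```

This order independence is why an extra cue, such as a shadow cast on a floor, is useful for recovering depth.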

In order to incorporate both geometric and volumetric objects into one scene, the classical ray tracing intensity equation, which is evaluated only at surface locations, must be extended to include volumetric effects. The intensity of light, $I_\lambda(x, \vec{\omega})$, for a given wavelength $\lambda$, arriving at a position $x$ from the direction $\vec{\omega}$, can be computed by:

$$I_\lambda(x, \vec{\omega}) = I_{v\lambda}(x, x') + \tau_\lambda(x, x')\, I_{s\lambda}(x', \vec{\omega}) \tag{1}$$

where $x'$ is the first surface intersection point encountered along the ray $\vec{\omega}$ originating at $x$. $I_{s\lambda}(x', \vec{\omega})$ is the intensity of light at this surface location, and can be computed with a standard ray tracing illumination equation [15]. $I_{v\lambda}(x, x')$ is the volumetric contribution to the intensity along the ray from $x$ to $x'$, and $\tau_\lambda(x, x')$ is the attenuation of $I_{s\lambda}(x', \vec{\omega})$ by any intervening volumes. These values are determined using volume rendering techniques, based on a transport theory model of light propagation [7]. The basic idea is similar to classical ray tracing, in that rays are cast from the eye into the scene, and surface shading is performed on the closest surface intersection point. The difference is that shading must be performed for all volumetric data that are encountered along the ray while traveling to the closest surface intersection point.
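The structure of the intensity equation can be illustrated with a minimal sketch for a single wavelength. It assumes the volume between $x$ and $x'$ has already been sampled into per-sample emission and opacity values, and it uses discrete front-to-back compositing to approximate the volumetric term and the attenuation. That discretization and the names `march_volume` and `ray_intensity` are assumptions made here, not the VolVis implementation.

```python
from typing import Sequence, Tuple


def march_volume(emissions: Sequence[float],
                 opacities: Sequence[float]) -> Tuple[float, float]:
    """Return (I_v, tau) for samples ordered from x toward x'.

    I_v is the accumulated volumetric contribution and tau is the fraction
    of light from the surface at x' that survives the intervening volume.
    """
    i_v = 0.0
    tau = 1.0
    for emission, alpha in zip(emissions, opacities):
        i_v += tau * alpha * emission   # contribution seen through samples in front
        tau *= 1.0 - alpha              # each sample blocks part of what lies behind
    return i_v, tau


def ray_intensity(emissions: Sequence[float],
                  opacities: Sequence[float],
                  surface_intensity: float) -> float:
    """Combine the terms as I = I_v + tau * I_s."""
    i_v, tau = march_volume(emissions, opacities)
    return i_v + tau * surface_intensity
```

With no volume samples the result reduces to the surface intensity alone, and a fully opaque sample hides the surface entirely, which matches the role of the attenuation term.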

Figure 4: A volumetric ray traced image of a cell using a maximum-value projection.

For photo-realistic rendering, the user typically wants to include all of the shading effects that can be calculated within a given time limit. However, visualization users may find it necessary to view volumetric data with no shading effects, such as when using a maximum-value projection. In VolVis, the user has control over the illumination equations for both volumetric and geometric objects, and can specify, for each object in the scene, which shading effects should be computed. For example, in Figure 4 no shading effects were included for the maximum-value projection of the cell, while all parts of the illumination equation were considered when shading the geometric polygon. In another example, the user may place a mirror behind a volumetric object in a scene in order to capture two views in one image, but may not want the volumetric object to cast a shadow on the mirror, as shown in Figure 5. The head was obtained using magnetic resonance imaging, with the brain segmented from the same data set. The mirror is a volume-sampled polygon that was created using the modeling technique described in Section 3.

5.2. Volumetric Radiosity<br />

The ray tracing algorithm described in the previous section can be used to capture specular interactions between objects in a scene. In reality, most scenes are dominated by diffuse interactions, which are not accounted for in the standard ray tracing illumination model. For this reason, VolVis also contains a radiosity algorithm for volumetric data. Volumetric radiosity includes the classical surface "patch" element as well as a "voxel" element. As opposed to previous methods that use participating media to augment geometric scenes [10], this method is intended to render scenes that may consist solely of volumetric data. Each patch or voxel element can emit, absorb, scatter, and transmit light. Both isotropic and diffuse emission and scattering of light are allowed, where "isotropic" implies directional independence, and "diffuse" implies Lambertian reflection (i.e., dependent on normal or gradient). Light entering an element that is not absorbed or scattered by the element is transmitted unchanged.

Figure 5: A volumetric ray traced image of a human head.

In order to cope with the high number of voxel interactions required, a hierarchical technique similar to [5] is used. An iterative algorithm [2] is then used to shoot voxel radiosities, where several factors govern the highest level in the hierarchy at which two voxels can interact. These factors include the distance between the two voxels, the radiosity of the shooting voxel, and the reflectance and scattering coefficients of the voxel receiving the radiosity. This hierarchical technique can reduce the number of interactions required to converge on a solution by more than four orders of magnitude.
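The idea of shooting radiosity can be sketched with a plain, non-hierarchical progressive-refinement loop. This is a simplification, not the hierarchical volumetric method described above: it assumes equal-sized elements, precomputed form factors, and purely reflective elements with no scattering or transmission. The function name `shoot_radiosity` and its parameters are hypothetical.

```python
from typing import List, Sequence


def shoot_radiosity(emission: Sequence[float],
                    reflectance: Sequence[float],
                    form_factors: Sequence[Sequence[float]],
                    tolerance: float = 1e-6,
                    max_steps: int = 10_000) -> List[float]:
    """Iteratively shoot unshot radiosity from the brightest element.

    form_factors[j][i] is the assumed fraction of energy exchanged between
    elements j and i (symmetric here because the elements have equal size).
    """
    n = len(emission)
    radiosity = list(emission)
    unshot = list(emission)
    for _ in range(max_steps):
        shooter = max(range(n), key=lambda i: unshot[i])
        energy = unshot[shooter]
        if energy <= tolerance:
            break  # nothing significant left to distribute
        unshot[shooter] = 0.0
        for receiver in range(n):
            if receiver == shooter:
                continue
            gained = reflectance[receiver] * form_factors[receiver][shooter] * energy
            radiosity[receiver] += gained
            unshot[receiver] += gained  # the receiver will re-shoot this later
    return radiosity
```

Every shooting step in this flat version visits all other elements, which is the cost a hierarchical scheme avoids by letting distant or weakly coupled elements interact at coarser levels.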

After the view-independent radiosities have been calculated, a view-dependent image is generated using a ray casting technique, where the final pixel value is determined by compositing radiosity values along the ray. Figure 6 shows a scene containing a volumetric sphere, polygon, and light source. The light source isotropically emits light, and both the sphere and the polygon diffusely reflect light. The light source is above the sphere and directly illuminates the top half of the sphere. The bottom half of the sphere is indirectly illuminated by light diffusely reflected from the red polygon.

5.3. Compression Domain Volume Rendering<br />

Another rendering method incorporated in VolVis is<br />

a data compression technique for volume rendering. Our<br />