Prime Numbers


9.5 Large-integer multiplication

know that the complexity must be

O(D ln D)

operations, and as we have said, these are usually, in practice, floating-point operations (both adds and multiplies are bounded in this fashion).

Now the bit complexity is not O((n/b) ln(n/b)); that is, we cannot just substitute D = n/b in the operation-complexity estimate, because floating-point arithmetic on larger digits must, of course, be more expensive. When these notions are properly analyzed we obtain the Strassen bound of

O(n(C ln n)(C ln ln n)(C ln ln ln n) ···)

bit operations for the basic FFT multiply, where C is a constant and the ln ln ··· chain is understood to terminate when it falls below 1. Before we move ahead with other estimates, we must point out that even though this bit complexity is not asymptotically optimal, some of the greatest achievements in the general domain of large-integer arithmetic have been accomplished with this basic Schönhage–Strassen FFT, and yes, using floating-point operations.

Now, the Schönhage Algorithm 9.5.23 gets neatly around the problem that for a fixed number of signal digits D, the digit operations (small multiplications) must get more complex for larger operands. Analysis of the recursion within the algorithm starts with the observation that at the top recursion level, there are two DFTs (but very simple ones, in which only shifting and adding occur) and the dyadic multiply. Detailed analysis yields the best-known complexity bound of

O(n(ln n)(ln ln n))

bit operations, although the Nussbaumer method's complexity, which we discuss next, is asymptotically equivalent.

As seen in Exercise 9.67, the complexity of Nussbaumer convolution is

O(D ln D)

operations in the R ring. This is equivalent to the complexity of floating-point FFT methods, if ring operations are thought of as equivalent to floating-point operations. However, with the Nussbaumer method there is a difference: One may choose the digit base B with impunity. Consider a base B ∼ n, so that b ∼ ln n, in which case one is effectively using D = n/ln n digits. It turns out that the Nussbaumer method for integer multiplication then takes O(n ln ln n) additions and O(n) multiplications of numbers each having O(ln n) bits. It follows that the complexity of the Nussbaumer method is asymptotically that of the Schönhage method, i.e., O(n ln n ln ln n) bit operations. Such complexity issues for both Nussbaumer and the original Schönhage–Strassen algorithm are discussed in [Bernstein 1997].
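To make the digit-splitting picture concrete, here is a minimal Python sketch of a basic floating-point FFT multiply: each n-bit operand is split into D = n/b digits of b bits, the digit sequences are convolved with a complex FFT, and the rounded result is reassembled. The function names (`fft`, `to_digits`, `fft_multiply`) and the default b = 8 are assumptions chosen for illustration, not the book's pseudocode, and this is the plain fixed-base approach rather than the Schönhage or Nussbaumer method. The rounding step is only trustworthy while b and D are small enough for double precision to resolve every convolution entry.

```python
import cmath

def fft(a, invert=False):
    """Recursive radix-2 FFT; len(a) must be a power of two.
    The inverse transform is left unscaled (caller divides by len(a))."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        w = cmath.exp(sign * 2j * cmath.pi * k / n)  # twiddle factor
        t = w * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def to_digits(x, b):
    """Split a nonnegative integer into base-2**b digits, least significant first."""
    mask = (1 << b) - 1
    digits = []
    while x:
        digits.append(x & mask)
        x >>= b
    return digits or [0]

def fft_multiply(x, y, b=8):
    """Multiply nonnegative integers x and y using b-bit digits and a floating-point FFT."""
    dx, dy = to_digits(x, b), to_digits(y, b)
    size = 1
    while size < len(dx) + len(dy):  # zero-pad so the cyclic convolution is acyclic
        size *= 2
    fx = fft(dx + [0] * (size - len(dx)))
    fy = fft(dy + [0] * (size - len(dy)))
    # Dyadic (elementwise) multiply in the transform domain, then invert.
    conv = fft([u * v for u, v in zip(fx, fy)], invert=True)
    coeffs = [round(c.real / size) for c in conv]
    # Carry release: Python's big integers absorb the overlapping digits.
    return sum(c << (b * i) for i, c in enumerate(coeffs))
```

For example, `fft_multiply(3**1000, 7**800)` can be compared against Python's built-in product. Raising b shortens the transform but makes each floating-point digit carry more bits, which is the trade-off behind the variable-base bound quoted above.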

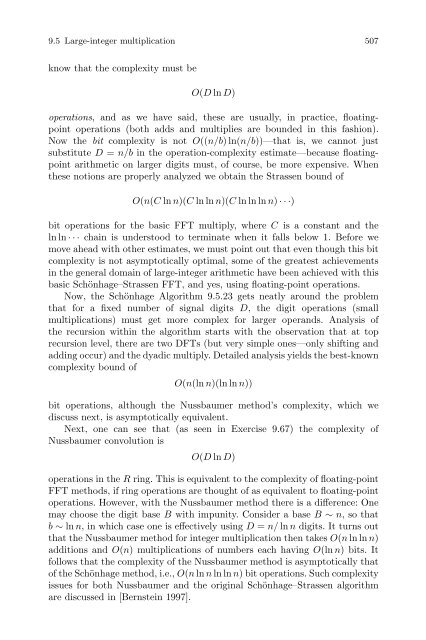

| Algorithm | optimal B | complexity |
|---|---|---|
| Basic FFT, fixed-base | ... | O_op(D ln D) |
| Basic FFT, variable-base | O(ln n) | O(n(C ln n)(C ln ln n) ...) |
| Schönhage | O(n^(1/2)) | O(n ln n ln ln n) |
| Nussbaumer | O(n/ln n) | O(n ln n ln ln n) |

Table 9.1 Complexities for fast multiplication algorithms. Operands to be multiplied have n bits each, which during top recursion level are split into D = n/b digits of b bits each, so the digit size (the base) is B = 2^b. All bounds are for bit complexity, except that O_op means operation complexity.

9.5.9 Application to the Chinese remainder theorem

We described the Chinese remainder theorem in Section 2.1.3, and there gave a method, Algorithm 2.1.7, for reassembling CRT data given some precomputation. We now describe a method that takes advantage not only of preconditioning, but also of fast multiplication methods.

Algorithm 9.5.26 (Fast CRT reconstruction with preconditioning). Using the nomenclature of Theorem 2.1.6, we assume fixed moduli m_0, ..., m_{r−1} whose product is M, but with r = 2^k for computational convenience. The goal of the algorithm is to reconstruct n from its given residues (n_i). Along the way, tableaux (q_ij) of partial products and (n_ij) of partial residues are calculated. The algorithm may be reentered with a new n if the m_i remain fixed.

1. [Precomputation] for(0 ≤ i
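As a rough illustration of the general idea only (a precomputed tableau of partial products over r = 2^k fixed moduli, reused for each new set of residues), here is a minimal Python sketch of a standard divide-and-conquer CRT reconstruction. It is not a transcription of Algorithm 9.5.26; the names `build_product_tree`, `crt_weights`, and `crt_reconstruct` are hypothetical, the moduli are assumed pairwise coprime, and `pow(x, -1, m)` requires Python 3.8 or later.

```python
def build_product_tree(moduli):
    """Precompute levels of pairwise products.
    Level 0 is the moduli themselves; the last level is [M].
    Assumes len(moduli) is a power of two."""
    tree = [list(moduli)]
    while len(tree[-1]) > 1:
        prev = tree[-1]
        tree.append([prev[i] * prev[i + 1] for i in range(0, len(prev), 2)])
    return tree

def crt_weights(moduli, tree):
    """Precompute v_i = (M / m_i)^(-1) mod m_i for each modulus."""
    M = tree[-1][0]
    return [pow(M // m, -1, m) for m in moduli]

def crt_reconstruct(residues, moduli, tree, weights):
    """Rebuild n mod M from its residues using the precomputed tree and weights."""
    # Leaf values c_i = n_i * v_i mod m_i.
    vals = [(n_i * v_i) % m_i
            for n_i, v_i, m_i in zip(residues, weights, moduli)]
    # Combine pairs upward: each parent holds the sum of c_i * (node product / m_i)
    # over the leaves beneath it.
    for level in tree[:-1]:
        vals = [vals[i] * level[i + 1] + vals[i + 1] * level[i]
                for i in range(0, len(vals), 2)]
    return vals[0] % tree[-1][0]

# The precomputation is done once; reconstruction can be repeated for new residues.
moduli = [3, 5, 7, 11]
tree = build_product_tree(moduli)
weights = crt_weights(moduli, tree)
n = crt_reconstruct([1000 % m for m in moduli], moduli, tree, weights)
```

The multiplications near the root of the tree involve the largest operands, which is where a fast multiplication method would pay off in a serious implementation; this sketch simply relies on Python's built-in integer product.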

