Display and Interface Design

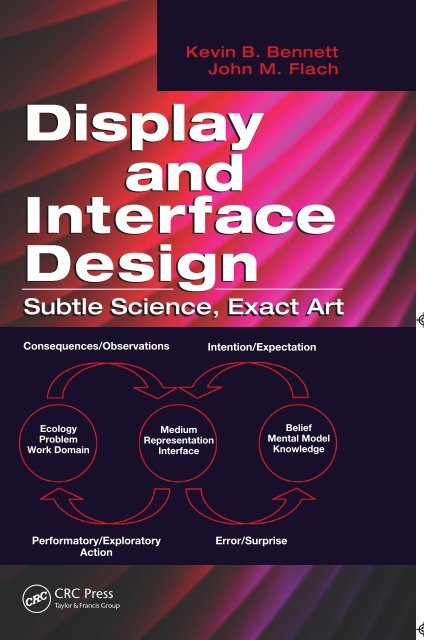



Kevin B. Bennett<br />

John M. Flach<br />

Display and Interface Design<br />

Subtle Science, Exact Art<br />

Consequences/Observations<br />

Intention/Expectation<br />

Ecology<br />

Problem<br />

Work Domain<br />

Medium<br />

Representation<br />

Interface<br />

Belief<br />

Mental Model<br />

Knowledge<br />

Performatory/Exploratory<br />

Action<br />

Error/Surprise

Display and Interface Design<br />

Subtle Science, Exact Art<br />

Kevin B. Bennett<br />

John M. Flach<br />

Boca Raton London New York<br />

CRC Press is an imprint of the Taylor & Francis Group, an informa business

CRC Press<br />

Taylor & Francis Group<br />

6000 Broken Sound Parkway NW, Suite 300<br />

Boca Raton, FL 33487-2742<br />

© 2011 by Taylor and Francis Group, LLC<br />

CRC Press is an imprint of Taylor & Francis Group, an Informa business<br />

No claim to original U.S. Government works<br />

Printed in the United States of America on acid-free paper<br />

10 9 8 7 6 5 4 3 2 1<br />

International Standard Book Number-13: 978-1-4200-6439-1 (Ebook-PDF)<br />

This book contains information obtained from authentic and highly regarded sources. Reasonable efforts have been made to publish reliable data and information, but the author and publisher cannot assume responsibility for the validity of all materials or the consequences of their use. The authors and publishers have attempted to trace the copyright holders of all material reproduced in this publication and apologize to copyright holders if permission to publish in this form has not been obtained. If any copyright material has not been acknowledged please write and let us know so we may rectify in any future reprint.<br />

Except as permitted under U.S. Copyright Law, no part of this book may be reprinted, reproduced, transmitted, or utilized in any form by any electronic, mechanical, or other means, now known or hereafter invented, including photocopying, microfilming, and recording, or in any information storage or retrieval system, without written permission from the publishers.<br />

For permission to photocopy or use material electronically from this work, please access www.copyright.com (http://www.copyright.com/) or contact the Copyright Clearance Center, Inc. (CCC), 222 Rosewood Drive, Danvers, MA 01923, 978-750-8400. CCC is a not-for-profit organization that provides licenses and registration for a variety of users. For organizations that have been granted a photocopy license by the CCC, a separate system of payment has been arranged.<br />

Trademark Notice: Product or corporate names may be trademarks or registered trademarks, and are used only for identification and explanation without intent to infringe.<br />

Visit the Taylor & Francis Web site at<br />

http://www.taylorandfrancis.com<br />

and the CRC Press Web site at<br />

http://www.crcpress.com

To Jens Rasmussen for his inspiration and guidance.

Contents<br />

Preface<br />
Acknowledgments<br />
The Authors<br />

1. Introduction to Subtle Science, Exact Art<br />
1.1 Introduction<br />
1.2 Theoretical Orientation<br />
1.2.1 Cognitive Systems Engineering: Ecological Interface Design<br />
1.2.2 With a Psychological Twist<br />
1.3 Basic versus Applied Science<br />
1.3.1 Too Theoretical!<br />
1.3.2 Too Applied!<br />
1.4 Pasteur’s Quadrant<br />
1.4.1 The Wright Brothers in the Quadrant<br />
1.4.2 This Book and the Quadrant<br />
1.5 Overview<br />
1.6 Summary<br />
References<br />

2. A Meaning Processing Approach<br />
2.1 Introduction<br />
2.2 Two Alternative Paradigms for Interface Design<br />
2.2.1 The Dyadic Paradigm<br />
2.2.2 The Triadic Paradigm<br />
2.2.3 Implications for Interface Design<br />
2.3 Two Paths to Meaning<br />
2.3.1 Conventional Wisdom: Meaning = Interpretation<br />
2.3.2 An Ecological or Situated Perspective: Meaning = Affordance<br />
2.3.3 Information versus Meaning<br />
2.4 The Dynamics of Meaning Processing<br />
2.4.1 The Regulator Paradox<br />
2.4.2 Perception and Action in Meaning Processing<br />
2.4.3 Inductive, Deductive, and Abductive Forms of Knowing<br />
2.5 Conclusion<br />
2.6 Summary<br />
References<br />

3. The Dynamics of Situations<br />
3.1 Introduction<br />
3.2 The Problem State Space<br />
3.2.1 The State Space for the Game of Fifteen<br />
3.2.2 The State Space for a Simple Manual Control Task<br />
3.2.3 Implications for Interface Design<br />
3.3 Levels of Abstraction<br />
3.3.1 Two Analytical Frameworks for Modeling Abstraction<br />
3.4 Computational Level of Abstraction<br />
3.4.1 Functional Purpose<br />
3.4.2 Abstract Function<br />
3.4.3 Summary<br />
3.5 Algorithm or General Function Level of Abstraction<br />
3.6 Hardware Implementation<br />
3.6.1 Physical Function<br />
3.6.2 Physical Form<br />
3.7 Putting It All Together<br />
3.7.1 Abstraction, Aggregation, and Progressive Deepening<br />
3.7.2 Implications for Work Domain Analyses<br />
3.7.3 Summary<br />
3.8 Conclusion<br />
References<br />

4. The Dynamics of Awareness<br />
4.1 Introduction<br />
4.1.1 Zeroing In as a Form of Abduction<br />
4.1.2 Chunking of Domain Structure<br />
4.1.3 Implications for Interface Design<br />
4.2 A Model of Information Processing<br />
4.2.1 Decision Ladder: Shortcuts in Information Processing<br />
4.3 Automatic Processing<br />
4.3.1 Varied and Consistent Mappings<br />
4.3.2 Two Modes of Processing<br />
4.3.3 Relationship to Decision Ladder<br />
4.3.4 Implications for Interface Design<br />
4.4 Direct Perception<br />
4.4.1 Invariance as a Form of Consistent Mapping<br />
4.4.2 Perception and the Role of Inferential Processes<br />
4.4.3 Implications for Interface Design<br />
4.5 Heuristic Decision Making<br />
4.5.1 Optimization under Constraints<br />
4.5.2 Cognitive Illusions<br />
4.5.3 Ecological Rationality<br />
4.5.3.1 Less Is More<br />
4.5.3.2 Take the Best<br />
4.5.3.3 One-Reason Decision Making<br />
4.6 Summary and Conclusions<br />
References<br />

5. The Dynamics of Situation Awareness<br />
5.1 Introduction<br />
5.2 Representation Systems and Modes of Behavior<br />
5.2.1 Signal Representations/Skill-Based Behavior<br />
5.2.2 Sign Representations/Rule-Based Behavior<br />
5.2.3 Symbol Representations/Knowledge-Based Behaviors<br />
5.3 Representations, Modes, and the Decision Ladder<br />
5.3.1 Skill-Based Synchronization<br />
5.3.2 Rule-Based Shortcuts<br />
5.3.3 Knowledge-Based Reasoning<br />
5.3.4 Summary<br />
5.4 Ecological Interface Design (EID)<br />
5.4.1 Complementary Perspectives on EID<br />
5.4.2 Qualifications and Potential Misunderstandings<br />
5.5 Summary<br />
References<br />

6. A Framework for Ecological Interface Design (EID)<br />
6.1 Introduction<br />
6.2 Fundamental Principles<br />
6.2.1 Direct Manipulation/Direct Perception<br />
6.3 General Domain Constraints<br />
6.3.1 Source of Regularity: Correspondence-Driven Domains<br />
6.3.2 Source of Regularity: Coherence-Driven Domains<br />
6.3.3 Summary<br />
6.4 General Interface Constraints<br />
6.4.1 Propositional Representations<br />
6.4.2 Metaphors<br />
6.4.3 Analogies<br />
6.4.4 Metaphor versus Analogy<br />
6.4.5 Analog (versus Digital)<br />
6.5 Interface Design Strategies<br />
6.6 Ecological Interface Design: Correspondence-Driven Domains<br />
6.6.1 Nested Hierarchies<br />
6.6.2 Nested Hierarchies in the Interface: Analogical Representations<br />
6.7 Ecological Interface Design: Coherence-Driven Domains<br />
6.7.1 Objective Properties: Effectivities<br />
6.7.2 Nested Hierarchies in the Interface: Metaphorical Representations<br />
6.8 Summary<br />
References<br />

7. Display Design: Building a Conceptual Base<br />
7.1 Introduction<br />
7.2 Psychophysical Approach<br />
7.2.1 Elementary Graphical Perception Tasks<br />
7.2.2 Limitations<br />
7.3 Aesthetic, Graphic Arts Approach<br />
7.3.1 Quantitative Graphs<br />
7.3.2 Visualizing Information<br />
7.3.3 Limitations<br />
7.4 Visual Attention<br />
7.5 Naturalistic Decision Making<br />
7.5.1 Recognition-Primed Decisions<br />
7.5.1.1 Stages in RPD<br />
7.5.1.2 Implications for Computer-Mediated Decision Support<br />
7.6 Problem Solving<br />
7.6.1 Gestalt Perspective<br />
7.6.1.1 Gestalts and Problem Solving<br />
7.6.1.2 Problem Solving as Transformation of Gestalt<br />
7.6.2 The Power of Representations<br />
7.6.3 Functional Fixedness<br />
7.6.4 The Double-Edged Sword of Representations<br />
7.7 Summary<br />
References<br />

8. Visual Attention and Form Perception<br />
8.1 Introduction<br />
8.2 Experimental Tasks and Representative Results<br />
8.2.1 Control Condition<br />
8.2.2 Selective Attention<br />
8.2.3 Divided Attention<br />
8.2.4 Redundant Condition<br />
8.2.5 A Representative Experiment<br />
8.3 An Interpretation Based on Perceptual Objects and Perceptual Glue<br />
8.3.1 Gestalt Laws of Grouping<br />
8.3.2 Benefits and Costs for Perceptual Objects<br />
8.4 Configural Stimulus Dimensions and Emergent Features<br />
8.4.1 The Dimensional Structure of Perceptual Input<br />
8.4.1.1 Separable Dimensions<br />
8.4.1.2 Integral Dimensions<br />
8.4.1.3 Configural Dimensions<br />
8.4.2 Emergent Features; Perceptual Salience; Nested Hierarchies<br />
8.4.3 Configural Superiority Effect<br />
8.4.3.1 Salient Emergent Features<br />
8.4.3.2 Inconspicuous Emergent Features<br />
8.4.4 The Importance of Being Dynamic<br />
8.5 An Interpretation Based on Configurality and Emergent Features<br />
8.5.1 Divided Attention<br />
8.5.2 Control Condition<br />
8.5.3 Selective Condition<br />
8.6 Summary<br />
References<br />

9. Semantic Mapping versus Proximity Compatibility<br />
9.1 Introduction<br />
9.2 Proximity Compatibility Principle<br />
9.2.1 PCP and Divided Attention<br />
9.2.2 PCP and Focused Attention<br />
9.2.3 Representative PCP Study<br />
9.2.4 Summary of PCP<br />
9.3 Comparative Literature Review<br />
9.3.1 Pattern for Divided Attention<br />
9.3.2 Pattern for Focused Attention<br />
9.4 Semantic Mapping<br />
9.4.1 Semantic Mapping and Divided Attention<br />
9.4.1.1 Mappings Matter!<br />
9.4.1.2 Configurality, Not Object Integrality: I<br />
9.4.1.3 Configurality, Not Object Integrality: II<br />
9.4.1.4 Summary<br />
9.4.2 Semantic Mapping and Focused Attention<br />
9.4.2.1 Design Techniques to Offset Potential Costs<br />
9.4.2.2 Visual Structure in Focused Attention<br />
9.4.2.3 Revised Perspective on Focused Attention<br />
9.5 Design Strategies in Supporting Divided and Focused Attention<br />
9.6 PCP Revisited<br />
References<br />

10. Design Tutorial: Configural Graphics for Process Control<br />
10.1 Introduction<br />
10.2 A Simple Domain from Process Control<br />
10.2.1 Low-Level Data (Process Variables)<br />
10.2.2 High-Level Properties (Process Constraints)<br />
10.3 An Abstraction Hierarchy Analysis<br />
10.4 Direct Perception<br />
10.4.1 Mapping Domain Constraints into Geometrical Constraints<br />
10.4.1.1 General Work Activities and Functions<br />
10.4.1.2 Priority Measures and Abstract Functions<br />
10.4.1.3 Goals and Purposes<br />
10.4.1.4 Physical Processes<br />
10.4.1.5 Physical Appearance, Location, and Configuration<br />
10.4.2 Support for Skill-, Rule-, and Knowledge-Based Behaviors<br />
10.4.2.1 Skill-Based Behavior/Signals<br />
10.4.2.2 Rule-Based Behavior/Signs<br />
10.4.2.3 Knowledge-Based Behavior/Symbols<br />
10.4.3 Alternative Mappings<br />
10.4.3.1 Separable Displays<br />
10.4.3.2 Configural Displays<br />
10.4.3.3 Integral Displays<br />
10.4.3.4 Summary<br />
10.4.4 Temporal Information<br />
10.4.4.1 The Time Tunnels Technique<br />
10.5 Direct Manipulation<br />
10.6 Summary<br />
References<br />

11. Design Tutorial: Flying within the Field of Safe Travel<br />
11.1 Introduction<br />
11.2 The Challenge of Blind Flight<br />
11.3 The Wright Configural Attitude Display (WrightCAD)<br />
11.4 The Total Energy Path Display<br />
11.5 Summary<br />
References<br />

12. Metaphor: Leveraging Experience<br />
12.1 Introduction<br />
12.2 Spatial Metaphors and Iconic Objects<br />
12.2.1 The Power of Metaphors<br />
12.2.2 The Trouble with Metaphors<br />
12.3 Skill Development<br />
12.3.1 Assimilation<br />
12.3.2 Accommodation<br />
12.3.3 Interface Support for Learning by Doing<br />
12.4 Shaping Expectations<br />
12.4.1 Know Thy User<br />
12.5 Abduction: The Dark Side<br />
12.5.1 Forms of Abductive Error<br />
12.5.2 Minimizing Negative Transfer<br />
12.6 Categories of Metaphors<br />
12.7 Summary<br />
References<br />

13. <strong>Design</strong> Tutorial: Mobile Phones <strong>and</strong> PDAs........................................... 311<br />

13.1 Introduction........................................................................................ 311<br />

13.2 Abstraction Hierarchy Analysis...................................................... 314<br />

13.3 The iPhone <strong>Interface</strong>.......................................................................... 316<br />

13.3.1 Direct Perception.................................................................. 316<br />

13.3.1.1 Forms Level........................................................... 316<br />

13.3.1.2 Views Level............................................................ 317<br />

13.3.1.3 Work Space Level.................................................. 318<br />

13.3.2 Direct Manipulation............................................................. 320<br />

13.3.2.1 Tap........................................................................... 321<br />

13.3.2.2 Flick......................................................................... 321<br />

13.3.2.3 Double Tap.............................................................322<br />

13.3.2.4 Drag........................................................................322<br />

13.3.2.5 Pinch In/Pinch Out.............................................. 324<br />

13.4 Support for Various Modes of Behavior......................................... 324<br />

13.4.1 Skill-Based Behavior............................................................ 324<br />

13.4.2 Rule-Based Behavior............................................................ 326<br />

13.4.3 Knowledge-Based Behavior................................................ 328<br />

13.5 Broader Implications for <strong>Interface</strong> <strong>Design</strong>..................................... 329<br />

13.5.1 To Menu or Not to Menu? (Is That the Question? Is There a Rub?).........................................................................330<br />

13.6 Summary............................................................................................333<br />

References......................................................................................................333<br />

© 2011 by Taylor & Francis Group, LLC


14. <strong>Design</strong> Tutorial: Command <strong>and</strong> Control................................................335<br />

14.1 Introduction........................................................................................335<br />

14.2 Abstraction Hierarchy Analysis of Military Command <strong>and</strong> Control......................................................................................... 337<br />

14.2.1 Goals, Purposes, <strong>and</strong> Constraints...................................... 337<br />

14.2.2 Priority Measures <strong>and</strong> Abstract Functions.......................339<br />

14.2.3 General Work Activities <strong>and</strong> Functions............................339<br />

14.2.4 Physical Activities in Work; Physical Processes of Equipment.........................................................................339<br />

14.2.5 Appearance, Location, <strong>and</strong> Configuration of Material Objects....................................................................340<br />

14.2.6 Aggregation Hierarchy........................................................340<br />

14.3 Decision Making................................................................................340<br />

14.3.1 Military Decision-Making Process (or Analytical Process).........................................................341<br />

14.3.1.1 Situation Analysis.................................................341<br />

14.3.1.2 Develop Courses of Action..................................341<br />

14.3.1.3 Planning <strong>and</strong> Execution.......................................343<br />

14.3.2 Intuitive Decision Making (or Naturalistic Decision Making).................................................................344<br />

14.4 Direct Perception...............................................................................345<br />

14.4.1 Friendly Combat Resources <strong>Display</strong>.................................347<br />

14.4.2 Enemy Combat Resources <strong>Display</strong>....................................348<br />

14.4.3 Force Ratio <strong>Display</strong>..............................................................350<br />

14.4.4 Force Ratio Trend <strong>Display</strong>................................................... 352<br />

14.4.4.1 A Brief Digression.................................................354<br />

14.4.5 Spatial Synchronization Matrix <strong>Display</strong>...........................355<br />

14.4.6 Temporal Synchronization Matrix <strong>Display</strong>......................356<br />

14.4.7 Plan Review Mode <strong>and</strong> <strong>Display</strong>s.......................................358<br />

14.4.8 Alternative Course of Action <strong>Display</strong>...............................360<br />

14.5 Direct Manipulation.......................................................................... 361<br />

14.5.1 Synchronization Points........................................................ 362<br />

14.5.2 Levels of Aggregation.......................................................... 362<br />

14.5.2.1 Aggregation Control/<strong>Display</strong>............................. 362<br />

14.5.2.2 Control of Force Icons..........................................363<br />

14.6 Skill-Based Behavior.........................................................................364<br />

14.7 Rule-Based Behavior.........................................................................365<br />

14.8 Knowledge-Based Behavior.............................................................365<br />

14.9 Evaluation........................................................................................... 367<br />

14.9.1 Direct Perception.................................................................. 367<br />

14.9.2 Situation Assessment; Decision Making...........................368<br />

14.10 Summary............................................................................................ 370<br />

References...................................................................................................... 371<br />


15. <strong>Design</strong> Principles: Visual Momentum................................................... 375<br />

15.1 Introduction........................................................................................ 375<br />

15.2 Visual Momentum.............................................................................377<br />

15.2.1 <strong>Design</strong> Strategies.................................................................. 378<br />

15.2.1.1 Fixed Format Data Replacement......................... 378<br />

15.2.1.2 Long Shot............................................................... 379<br />

15.2.1.3 Perceptual Landmarks......................................... 379<br />

15.2.1.4 <strong>Display</strong> Overlap....................................................380<br />

15.2.1.5 Spatial Structure (Spatial Representation/Cognition)................... 381<br />

15.2.2 <strong>Interface</strong> Levels..................................................................... 381<br />

15.3 VM in the Work Space...................................................................... 382<br />

15.3.1 The BookHouse <strong>Interface</strong>....................................................383<br />

15.3.1.1 Spatial Structure...................................................384<br />

15.3.1.2 <strong>Display</strong> Overlap....................................................385<br />

15.3.1.3 Perceptual Landmarks.........................................386<br />

15.3.1.4 Fixed Format Data Replacement.........................386<br />

15.3.2 The iPhone <strong>Interface</strong>............................................................ 387<br />

15.3.2.1 Spatial Structure................................................... 387<br />

15.3.2.2 Long Shot...............................................................389<br />

15.3.2.3 Fixed Format Data Replacement.........................389<br />

15.3.2.4 Perceptual Landmarks.........................................390<br />

15.3.3 Spatial Cognition/Way-Finding.........................................390<br />

15.3.3.1 Landmark Knowledge......................................... 391<br />

15.3.3.2 Route Knowledge.................................................. 392<br />

15.3.3.3 Survey Knowledge................................................ 392<br />

15.4 VM in Views....................................................................................... 394<br />

15.4.1 The RAPTOR <strong>Interface</strong>........................................................ 395<br />

15.4.1.1 Spatial Dedication; Layering <strong>and</strong> Separation.............................................................. 395<br />

15.4.1.2 Long Shot............................................................... 395<br />

15.4.1.3 Perceptual Landmarks......................................... 397<br />

15.4.1.4 Fixed Format Data Replacement......................... 398<br />

15.4.1.5 Overlap................................................................... 399<br />

15.5 VM in Forms.......................................................................................400<br />

15.5.1 A Representative Configural <strong>Display</strong>................................ 401<br />

15.5.1.1 Fixed Format Data Replacement.........................402<br />

15.5.1.2 Perceptual Landmarks.........................................402<br />

15.5.1.3 Long Shot...............................................................403<br />

15.5.1.4 Overlap...................................................................403<br />

15.5.1.5 Section Summary..................................................403<br />

15.6 Summary............................................................................................404<br />

References......................................................................................................405<br />


16. Measurement................................................................................................407<br />

16.1 Introduction........................................................................................407<br />

16.2 Paradigmatic Commitments: Control versus Generalization.....408<br />

16.2.1 Dyadic Commitments..........................................................408<br />

16.2.2 Triadic Commitments..........................................................408<br />

16.2.3 Parsing Complexity.............................................................. 410<br />

16.3 What Is the System?........................................................................... 411<br />

16.3.1 Measuring Situations........................................................... 413<br />

16.3.1.1 The Abstraction (Measurement) Hierarchy...... 414<br />

16.3.2 Measuring Awareness......................................................... 416<br />

16.3.2.1 Decision Ladder; SRK........................................... 417<br />

16.3.3 Measuring Performance...................................................... 417<br />

16.3.3.1 Multiple, Context-Dependent Indices................ 418<br />

16.3.3.2 Process <strong>and</strong> Outcome........................................... 419<br />

16.3.3.3 Hierarchically Nested..........................................420<br />

16.4 Synthetic Task Environments..........................................................420<br />

16.4.1 Not Just Simulation..............................................................420<br />

16.4.2 Coupling between Measurement Levels.......................... 421<br />

16.4.3 Fidelity...................................................................................422<br />

16.5 Conclusion..........................................................................................423<br />

References......................................................................................................425<br />

17. <strong>Interface</strong> Evaluation....................................................................................427<br />

17.1 Introduction........................................................................................427<br />

17.2 Cognitive Systems Engineering Evaluative Framework..............428<br />

17.2.1 Boundary 1: Controlled Mental Processes........................429<br />

17.2.2 Boundary 2: Controlled Cognitive Tasks..........................430<br />

17.2.3 Boundary 3: Controlled Task Situation.............................430<br />

17.2.4 Boundary 4: Complex Work Environments..................... 431<br />

17.2.5 Boundary 5: Experiments in Actual Work Environment......................................................................... 432<br />

17.2.6 Summary............................................................................... 432<br />

17.3 Representative Research Program; Multiboundary Results.......432<br />

17.3.1 Synthetic Task Environment for Process Control............433<br />

17.3.2 Boundary Level 1 Evaluation.............................................434<br />

17.3.3 Boundary Levels 2 <strong>and</strong> 3.....................................................436<br />

17.3.4 Boundary Levels 1 <strong>and</strong> 3.....................................................438<br />

17.4 Conventional Rules <strong>and</strong> Experimental Results.............................441<br />

17.4.1 Match with Dyadic Conventional Rules...........................441<br />

17.4.2 Match with Triadic Conventional Rules...........................442<br />

17.5 Broader Concerns Regarding Generalization...............................444<br />

17.6 Conclusion..........................................................................................446<br />

References......................................................................................................448<br />


18. A New Way of Seeing?............................................................................... 451<br />

18.1 Introduction........................................................................................ 451<br />

18.2 A Paradigm Shift <strong>and</strong> Its Implications........................................... 451<br />

18.2.1 Chunking: A Representative Example of Reinterpretation....................................................................453<br />

18.2.2 Summary...............................................................................454<br />

18.3 <strong>Interface</strong> <strong>Design</strong>.................................................................................454<br />

18.3.1 Constraint Mappings for Correspondence-Driven Domains.................................................................................455<br />

18.3.2 Constraint Mappings for Coherence-Driven Domains.................................................................................456<br />

18.3.3 The Eco-Logic of Work Domains.......................................458<br />

18.3.4 Productive Thinking in Work Domains...........................458<br />

18.4 Looking over the Horizon................................................................459<br />

18.4.1 Mind, Matter, <strong>and</strong> What Matters.......................................460<br />

18.4.2 Information Processing Systems........................................ 461<br />

18.4.3 Meaning Processing Systems............................................. 461<br />

References...................................................................................................... 462<br />

Index..............................................................................................................465<br />


Preface<br />

This book is about display <strong>and</strong> interface design. It is the product of over<br />

60 years of combined experience studying, implementing, <strong>and</strong> teaching<br />

about performance in human–technology systems. Great strides have been<br />

made in interface design since the early 1980s, when we first began thinking<br />

about the associated challenges. Technological advances in hardware <strong>and</strong><br />

software now provide the potential to design interfaces that are both powerful<br />

<strong>and</strong> easy to use. Yet, the frustrations <strong>and</strong> convoluted “work-arounds”<br />

that are often still encountered make it clear that there is substantial room<br />

for improvement. Over the years, we have acquired a deep appreciation for<br />

the complexity <strong>and</strong> difficulty of building effective interfaces; it is reflected in<br />

the content of this book. As a result, you are likely to find it to be decidedly<br />

different from most books on the topic.<br />

We view the interface as a tool that will help an individual accomplish<br />

his or her work efficiently <strong>and</strong> pleasurably; it is a form of decision-making<br />

<strong>and</strong> problem-solving support. As such, a recurring question concerns the<br />

relation between the structure of problem representations <strong>and</strong> the quality of<br />

performance. A change in how a problem is represented can have a marked<br />

effect on the quality of performance. This relation is interesting to us as cognitive<br />

psychologists <strong>and</strong> as cognitive systems engineers.<br />

As cognitive psychologists, we believe that the relationship between the<br />

structure of representations <strong>and</strong> the quality of performance has important<br />

implications for understanding the basic dynamics of cognition. It suggests<br />

that there is an intimate, circular coupling between perception–action <strong>and</strong><br />

between situation–awareness that contrasts with conventional approaches<br />

to cognition (where performance is viewed as a series of effectively independent<br />

stages of general, context-independent information processes). We<br />

believe that the coupling between perception <strong>and</strong> action (i.e., the ability to<br />

“see” the world in relation to constraints on action) <strong>and</strong> between situation<br />

<strong>and</strong> awareness (i.e., the ability to make sense of complex situations) depends<br />

critically on the structure of representations. Thus, in exploring the nature<br />

of representations, we believe that we are gaining important insight into<br />

human cognition.<br />

As cognitive engineers, we believe that the relation between representations<br />

<strong>and</strong> the quality of performance has obvious implications for the design<br />

of interfaces to support human work. The design challenge is to enhance<br />

perspicuity <strong>and</strong> awareness so that action <strong>and</strong> situation constraints are well-specified<br />

relative to the demands of a work ecology. The approach is to design<br />

representations so that there is an explicit mapping between the patterns in<br />

the representation <strong>and</strong> the action <strong>and</strong> situation constraints. Of course, this<br />

implies an analysis of work domain situations to identify these constraints,<br />


as well as an understanding of awareness to know what patterns are likely<br />

to be salient. We believe that the quality of representing the work domain<br />

constraints will ultimately determine effectivity <strong>and</strong> efficiency; that is, it will<br />

determine the quality of performance <strong>and</strong> the level of effort required.<br />

Intended Audience<br />

The primary target audience for this book is students in human factors <strong>and</strong><br />

related disciplines (including psychology, engineering, computer science,<br />

industrial design, <strong>and</strong> industrial/organizational psychology). This book is<br />

an integration of our notes for the courses that we teach in interface design<br />

<strong>and</strong> cognitive systems engineering. Our goal is to train students to appreciate<br />

basic theory in cognitive science <strong>and</strong> to apply that theory in the design<br />

of technology <strong>and</strong> work organizations. We begin by constructing a theoretical<br />

foundation for approaching cognitive systems that integrates across<br />

situations, representations, <strong>and</strong> awareness—emphasizing the intimate interactions<br />

between them. The initial chapters of the book lay this foundation.<br />

We also hope to foster in our students a healthy appreciation for the value<br />

of basic empirical research in addressing questions about human performance.<br />

For example, in the middle chapters we provide extensive reviews of<br />

the research literature associated with visual attention in relation to the integral,<br />

separable, <strong>and</strong> configural properties of representations. Additionally,<br />

we try to prepare our students to immerse themselves in the complexity of<br />

practical design problems. Thus, we include several tutorial chapters that<br />

recount explorations of design in specific work domains.<br />

Finally, we try to impress on our students the intimate relation between<br />

basic <strong>and</strong> applied science. In fact, we emphasize that basic theory provides<br />

the strongest basis for generalization. The practical world <strong>and</strong> the scientific/<br />

academic world move at very different paces. <strong>Design</strong>ers cannot wait for<br />

research programs to provide clear empirical answers to their questions. In<br />

order to participate in <strong>and</strong> influence design, we must be able to extrapolate our<br />

research to keep up with the demands of changing technologies <strong>and</strong> changing<br />

work domains. Theory is the most reliable basis for these extrapolations.<br />

Conversely, application can be the ultimate test of theory. It is typically<br />

a much stronger test than the laboratory, where the assumptions guiding<br />

a theory are often reified in the experimental methodology. Thus, laboratory<br />

research often ends up being demonstrations of plausibility, rather than<br />

strong tests of a theory.<br />

We believe this work will also be of interest to a much broader audience<br />

concerned with applying cognitive science to design technologies that<br />

enhance the quality of human work. This broader audience might identify<br />

with other labels for this enterprise: ergonomics, human factors engineering,<br />


human–computer interaction (HCI), semantic computing, resilience engineering,<br />

industrial design, user-experience design, interaction design, etc.<br />

Our goals for this audience parallel the goals for our students: We want to<br />

provide a theoretical basis, an empirical basis, <strong>and</strong> a practical basis for framing<br />

the design questions.<br />

Note that this is not a “how to” book with recipes in answer to specific<br />

design questions (i.e., <strong>Interface</strong>s for Dummies). Rather, our goal is simply to help<br />

people to frame the questions well, with the optimism that a well-framed<br />

question is nearly answered. It is important for people to appreciate that we<br />

are dealing with complex problems <strong>and</strong> that there are no easy answers. Our<br />

goal is to help people to appreciate this complexity <strong>and</strong> to provide a theoretical<br />

context for parsing this complexity in ways that might lead to productive<br />

insights. As suggested by the title, our goal is to enhance the subtlety of the<br />

science <strong>and</strong> to enhance the exactness of the art with respect to designing<br />

effective cognitive systems.<br />

Kevin Bennett<br />

John Flach<br />


Acknowledgments<br />

We would like to thank Don Norman, Jens Rasmussen, James Pomerantz,<br />

<strong>and</strong> a host of graduate students for their comments on earlier drafts. Larry<br />

Shattuck, Christopher Talcott, Silas Martinez, <strong>and</strong> Dan Hall were co-developers<br />

<strong>and</strong> co-investigators in the research projects for the RAPTOR<br />

military command <strong>and</strong> control interface. Darby Patrick <strong>and</strong> Paul Jacques<br />

collaborated in the development <strong>and</strong> evaluation of the WrightCAD display<br />

concept. Matthijs Amelink was the designer of the Total Energy Path<br />

landing display in collaboration with Professor Max Mulder <strong>and</strong> Dr. Rene<br />

van Paassen in the faculty of Aeronautical Engineering at the Technical<br />

University of Delft.<br />

A special thanks from the first author to David Woods for his mentorship,<br />

collaborations, <strong>and</strong> incredible insights through the years. Thanks as well<br />

to Raja Parasuraman, who was as much friend as adviser during graduate<br />

school. Thanks to Jim Howard who introduced me to computers <strong>and</strong> taught<br />

me the importance of being precise. Thanks to Herb Colle, who created a<br />

great academic program <strong>and</strong> an environment that fosters success. Finally,<br />

thanks to my family <strong>and</strong> their patience during the long process of writing.<br />

The second author wants especially to thank Rich Jagacinski <strong>and</strong> Dean<br />

Owen, two mentors <strong>and</strong> teachers who set standards for quality far above<br />

my reach, who gave me the tools I needed to take the initial steps in that<br />

direction, <strong>and</strong> who gave me the confidence to persist even though the goal<br />

remains a distant target. Also, special thanks to Kim Vicente, the student<br />

who became my teacher. I also wish to acknowledge interactions with Jens<br />

Rasmussen, Max Mulder, Rene van Paassen, Penelope Sanderson, Neville<br />

Moray, Alex Kirlik, P. J. Stappers, <strong>and</strong> Chris Wickens, whose friendship<br />

along the journey has challenged me when I tended toward complacency<br />

<strong>and</strong> who have encouraged me when I tended toward despair. Finally, I want<br />

to thank my wife <strong>and</strong> sons, who constantly remind me not to take my ideas<br />

or myself too seriously.<br />

This effort was made possible by funding from a number of organizations.<br />

The Ohio Board of Regents <strong>and</strong> the Wright State University Research<br />

Council awarded several research incentive <strong>and</strong> research challenge grants<br />

that funded some of the research projects described in the book <strong>and</strong> provided<br />

a sabbatical for intensive writing. The army project was supported<br />

through participation in the Advanced Decision Architectures Collaborative<br />

Technology Alliance Consortium, sponsored by the U.S. Army Research<br />

Laboratory under cooperative agreement DAAD19-01-2-0009. Development<br />

of the WrightCAD was supported through funding from the Japan Atomic<br />

Energy Research Institute; the basic research on optical control of locomotion<br />


that inspired it was funded by a series of grants from the Air Force Office of<br />

Scientific Research (AFOSR).<br />

Finally, thanks to the software specialists. Daniel Serfaty <strong>and</strong> Aptima<br />

kindly provided us with their DDD® simulation software <strong>and</strong> Kevin Gildea<br />

helped us use it effectively. Randy Green implemented (<strong>and</strong> often reimplemented)<br />

many of the displays, interfaces, <strong>and</strong> environments that are<br />

described here.<br />

Macintosh, iPhone, iPod, <strong>and</strong> Safari are registered trademarks of Apple Inc.<br />


The Authors<br />

Kevin B. Bennett is a professor of psychology at Wright State University,<br />

Dayton, Ohio. He received a PhD in applied experimental psychology from<br />

the Catholic University of America in 1984.<br />

John M. Flach is a professor <strong>and</strong> chair of psychology at Wright State<br />

University, Dayton, Ohio. He received a PhD in human experimental psychology<br />

from the Ohio State University in 1984.<br />

Drs. Flach <strong>and</strong> Bennett are co-directors of the Joint Cognitive Systems<br />

Laboratory at Wright State University. They share interests in human performance<br />

theory <strong>and</strong> cognitive systems engineering, particularly in relation to<br />

ecological display <strong>and</strong> interface design. Their independent <strong>and</strong> collaborative<br />

efforts in these areas have spanned three decades.<br />


1. Introduction to Subtle Science, Exact Art<br />

1.1 Introduction<br />

Science <strong>and</strong> art have in common intense seeing, the wide-eyed observing<br />

that generates empirical information. (Tufte 2006, p. 9; emphasis added)<br />

The purpose of an evidence presentation is to assist thinking. Thus<br />

presentations should be constructed so as to assist with the fundamental<br />

intellectual tasks in reasoning about evidence: describing the data, making<br />

multivariate comparisons, understanding causality, integrating a<br />

diversity of evidence, <strong>and</strong> documenting the analysis. (Tufte 2006, p. 137)<br />

The topic of this book is display <strong>and</strong> interface design or, in more conventional<br />

terms, human–computer interaction. Given its popularity <strong>and</strong> the fact<br />

that it impacts the majority of us on a daily basis, it is not surprising that<br />

many books have been written on the subject. A search on Amazon.com<br />

for the term “human–computer interaction” confirms this intuition, since<br />

over 10,000 books are identified. Given this number, even those who are not<br />

inherently skeptical may be inclined to ask a simple question: “Do we really<br />

need yet another one?” Since we have taken the time <strong>and</strong> effort to write this<br />

book, our answer is obviously “yes.”<br />

One reason underlying our affirmative response is a relatively pragmatic<br />

one. Consider the extent to which the interfaces with which you interact (a)<br />

are intuitive to learn <strong>and</strong> (b) subsequently allow you to work efficiently. If<br />

your experience is anything like ours, the list of interfaces that meet these<br />

two simple criteria is a short one. To state the case more bluntly, there are a<br />

lot of bad displays and interfaces out there. We own scores of applications<br />

that we have purchased, attempted to learn how to use, and essentially given<br />

up because the learning curve was so steep. This is despite the fact that we<br />

are computer literate, the applications are often the industry standard, and<br />

we know that they would be useful if we could bring ourselves to invest the<br />

time. For other applications, we have invested the time to learn them, but are<br />

constantly frustrated by the ways in which interface design has made it difficult<br />

to accomplish relatively simple and straightforward tasks.<br />

There is no reason for this situation to exist. Advances in computational<br />

technology have provided powerful tools with the potential to build effective<br />

interfaces for the workplace. However, this potential is rarely realized; interfaces<br />

that are both intuitive and efficient (and therefore pleasurable to use) are<br />

the exception, rather than the rule. The organizations responsible for building<br />

these interfaces did not begin with the goal of making them unintuitive<br />

and inefficient. There are profits to be made when applications have effective<br />

interfaces; there are costs to be avoided in terms of decreased productivity<br />

and safety. All things considered, only one logical conclusion can be drawn:<br />

Display and interface design is a surprisingly complicated endeavor; the difficulty<br />

in getting it right is grossly underestimated, even by researchers and<br />

practitioners who are experts in the field.<br />

Given the sheer number of books written on the topic and the current state<br />

of affairs described earlier, one might be tempted to conclude that something<br />

very important is missing in these books. This conclusion is consistent with<br />

our experiences in teaching courses on display and interface design. Over<br />

the last 20 years we have searched for, but never found, a single book that<br />

addresses the topic in a way that meets our needs. Although researchers and<br />

practitioners from various disciplines have treated some pieces of the puzzle<br />

quite admirably, no one has yet synthesized and integrated these puzzle pieces<br />

into a single coherent treatment. As a result, the syllabi<br />

of our courses contain an assortment of book chapters and articles rather than<br />

a primary text.<br />

In the end, we have arrived at the conclusion that our perspective on display<br />

and interface design may well be a unique one. Our goal in writing<br />

this book is to share that perspective with you: to describe those pieces of the<br />

puzzle that have been treated well, to fill in the gaps, and to<br />

provide a coherent synthesis and integration of the topic. To accomplish this<br />

goal we have drawn upon a wealth of experience accrued over decades; this<br />

includes thinking about the issues, conducting research on various topics in<br />

the field, implementing a large number of displays and interfaces in a variety<br />

of work domains, and conveying the resulting insights to our students and<br />

colleagues. We will now convey why we feel this perspective is both unique<br />

and useful. In the process we will describe how this book is positioned relative<br />

to others that have been written on the topic.<br />

1.2 Theoretical Orientation<br />

[C]omputer scientists and engineers should have no problem in understanding<br />

the nature of interface design as science and art; … the contingent<br />

nature of each interface, is a reflex of design’s dual nature as science<br />

(in respect to scientific principles of design applied to computers) and art<br />

(in respect to a particular, original way of designing). (Nadin 1988, p. 53,<br />

emphasis original and added)<br />

One broad category of books written on display and interface design<br />

addresses the problem from what might be referred to as a “user-driven”<br />

approach. These books are typically written by social scientists (e.g., psychologists,<br />

anthropologists, educators, etc.). The primary emphasis is on<br />

the contributions of the user: understanding broad capabilities and limitations<br />

(e.g., memory, perception), how users think about their work domain<br />

(e.g., mental models), situated evaluations of display and interface concepts<br />

(usability studies), and user preferences and opinions about design interventions<br />

(iterative design). These books also consider interface technology<br />

(e.g., widgets, menus, form-filling, etc.), although it is typically a secondary<br />

emphasis. Important insights can be gained from this perspective, particularly<br />

when considered in light of the historical backdrop where users were<br />

not considered as an integral part of computer system design.<br />

A second broad category of books addresses the problem from what<br />

might be considered a “technology-driven” approach. These books are typically<br />

written by computer scientists and engineers. The primary emphasis<br />

is on understanding interface technology (e.g., menus, forms, dialog boxes,<br />

keyboards, pointing devices, display size, refresh rate, system response<br />

time, error messages, documentation, etc.) and how it can be used in the<br />

interface. These books also typically consider the user (see topics outlined<br />

in the previous paragraph), although it is a secondary emphasis. Once<br />

again, it is necessary to think about interface technology when considering<br />

human–computer interaction, and important insights can be gained from<br />

this perspective.<br />

Collectively, these two complementary perspectives constitute conventional<br />

wisdom about how the process of the design and evaluation of displays<br />

and interfaces should proceed. This emphasis is readily apparent in the<br />

label that is typically applied to this endeavor: human–computer interaction.<br />

The vast majority of books written on the topic share one of these two orientations<br />

or a combination of the two.<br />

1.2.1 Cognitive Systems Engineering: Ecological Interface Design<br />

In our opinion, although these two perspectives are certainly necessary, they<br />

are not sufficient. The human is interacting with the computer for a reason,<br />

and that reason is to complete work in a domain. This is true whether the<br />

work is defined in a traditional sense (e.g., controlling a process, flying an<br />

airplane) or the work is more broadly defined (e.g., surfing the Internet, making<br />

a phone call, finding a book of fiction).<br />

This represents one fundamental dimension that differentiates our book<br />

from others written on the topic. Our approach is a problem-driven (as<br />

opposed to user- or technology-driven) approach to the design and evaluation<br />

of interfaces. By this we mean that the primary purpose of an interface is to<br />

provide decision-making and problem-solving support for a user who is completing<br />

work in a domain. The goal is to design interfaces that (1) are tailored<br />

to specific work demands, (2) leverage the powerful perception-action skills<br />

of the human, and (3) use powerful interface technologies wisely.<br />

This can be conceptualized as a “triadic” approach (domain/ecology,<br />

human/awareness, interface/representation) to human–computer interaction<br />

that stands in sharp contrast to the traditional “dyadic” (human, interface)<br />

approaches described before. Ultimately, the success or failure of an<br />

interface is determined by the interactions that occur between all three components<br />

of the triad; any approach that fails to consider all of these components<br />

and their interactions (i.e., dyadic approaches) will be inherently and<br />

severely limited.<br />

The specific approach that guides our efforts has been referred to as cognitive<br />

systems engineering (CSE; Rasmussen, Pejtersen, and Goodstein 1994;<br />

Vicente 1999; Flach et al. 1995; Rasmussen 1986; Norman 1986). CSE provides<br />

an overarching framework for analysis, design, and evaluation of complex<br />

sociotechnical systems. In terms of interface design, this framework provides<br />

analytical tools that can be applied to identify important characteristics of<br />

domains, the activities that need to be accomplished within a domain, and<br />

the information that is needed to do so effectively. In short, it allows decisions<br />

to be made about display and interface design that are informed by the<br />

characteristics of the underlying work domain.<br />

We also believe that this book is uniquely positioned relative to other<br />

books written from the CSE perspective. Three classic books written on<br />

CSE (Rasmussen et al. 1994; Vicente 1999; Rasmussen 1986) have focused on<br />

descriptions of the framework as a whole. It is a complicated framework,<br />

developed to meet complicated challenges, and this was a necessary step.<br />

Display and interface design were not ignored; general principles were discussed<br />

and excellent case studies were described. However, the clear focus<br />

is on the framework, the tools, and the analyses; display and interface design<br />

is a secondary topic.<br />

Our book reverses the emphasis: display and interface design is the primary<br />

focus while CSE provides the orienting underlying framework. It was specifically<br />

designed to complement and build upon these classic texts and related<br />