TECHNOLOGY AT WORK

February 2015

Citi GPS: Global Perspectives & Solutions

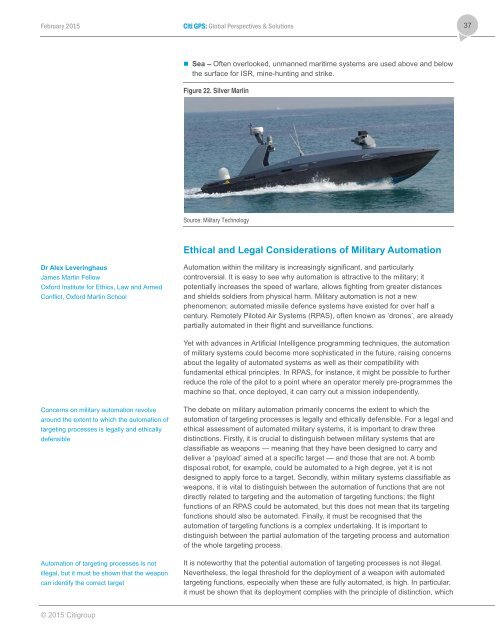

• Sea – Often overlooked, unmanned maritime systems are used above and below the surface for intelligence, surveillance and reconnaissance (ISR), mine-hunting and strike.

Figure 22. Silver Marlin

Source: Military Technology

Dr Alex Leveringhaus<br />

James Martin Fellow<br />

Oxford Institute for Ethics, Law and Armed Conflict, Oxford Martin School

Concerns about military automation revolve around the extent to which the automation of targeting processes is legally and ethically defensible

Automation of targeting processes is not illegal, but it must be shown that the weapon can identify the correct target

Ethical and Legal Considerations of Military Automation<br />

Automation within the military is increasingly significant and particularly controversial. It is easy to see why automation is attractive to the military: it potentially increases the speed of warfare, allows fighting from greater distances and shields soldiers from physical harm. Military automation is not a new phenomenon; automated missile defence systems have existed for over half a century. Remotely Piloted Air Systems (RPAS), often known as ‘drones’, are already partially automated in their flight and surveillance functions.

Yet with advances in Artificial Intelligence programming techniques, the automation of military systems could become more sophisticated in the future, raising concerns about the legality of automated systems as well as their compatibility with fundamental ethical principles. In RPAS, for instance, it might be possible to further reduce the role of the pilot to a point where an operator merely pre-programmes the machine so that, once deployed, it can carry out a mission independently.

The debate on military automation primarily concerns the extent to which the automation of targeting processes is legally and ethically defensible. For a legal and ethical assessment of automated military systems, it is important to draw three distinctions. Firstly, it is crucial to distinguish between military systems that are classifiable as weapons (meaning that they have been designed to carry and deliver a ‘payload’ aimed at a specific target) and those that are not. A bomb disposal robot, for example, could be automated to a high degree, yet it is not designed to apply force to a target. Secondly, within military systems classifiable as weapons, it is vital to distinguish between the automation of functions that are not directly related to targeting and the automation of targeting functions; the flight functions of an RPAS could be automated, but this does not mean that its targeting functions should also be automated. Finally, it must be recognised that automating targeting functions is a complex undertaking, so it is important to distinguish between the partial automation of the targeting process and the automation of the whole targeting process.

Notably, automating targeting processes is not illegal as such. Nevertheless, the legal threshold for deploying a weapon with automated targeting functions, especially when these are fully automated, is high. In particular, it must be shown that its deployment complies with the principle of distinction, which

© 2015 Citigroup