download LR pdf - Kabk


AR[t]
Magazine about Augmented Reality, art and technology
April 2012

Colophon

ISSN Number
2213-2481

Contact
The Augmented Reality Lab (AR Lab)
Royal Academy of Art, The Hague
(Koninklijke Academie van Beeldende Kunsten)
Prinsessegracht 4
2514 AN The Hague
The Netherlands
+31 (0)70 3154795
www.arlab.nl
info@arlab.nl

Editorial team
Yolande Kolstee, Hanna Schraffenberger, Esmé Vahrmeijer (graphic design) and Jouke Verlinden.

Contributors
Wim van Eck, Jeroen van Erp, Pieter Jonker, Maarten Lamers, Stephan Lukosch, Ferenc Molnár (photography) and Robert Prevel.

Cover
‘George’, an augmented reality headset designed by Niels Mulder during his Post Graduate Course Industrial Design (KABK), 2008

Table of contents

Welcome to AR[t]
Introducing added worlds, Yolande Kolstee
Interview with Helen Papagiannis, Hanna Schraffenberger
The Technology behind AR, Pieter Jonker
Re-introducing Mosquitos, Maarten Lamers
Lieven van Velthoven: The Racing Star, Hanna Schraffenberger
How Did We Do It, Wim van Eck
Pixels want to be freed! Introducing Augmented Reality enabling hardware technologies, Jouke Verlinden
Artist in Residence Portrait: Marina de Haas, Hanna Schraffenberger
A magical leverage: in search of the killer application, Jeroen van Erp
The Positioning of Virtual Objects, Robert Prevel
Mediated Reality for Crime Scene Investigation, Stephan Lukosch
Die Walküre, Wim van Eck, AR Lab Student Project

Welcome...

to the first issue of AR[t], the magazine about Augmented Reality, art and technology!

Starting with this issue, AR[t] is an aspiring magazine series for the emerging AR community inside and outside the Netherlands. The magazine is run by a small and dedicated team of researchers, artists and lecturers of the AR Lab (based at the Royal Academy of Arts, The Hague), Delft University of Technology (TU Delft), Leiden University and SMEs. In AR[t], we share our interest in Augmented Reality (AR), discuss its applications in the arts and provide insight into the underlying technology.

At the AR Lab, we aim to understand, develop, refine and improve the amalgamation of the physical world with the virtual. We do this through a project-based approach and with the help of research funding from RAAK-Pro. In the magazine series, we invite writers from the industry, interview artists working with Augmented Reality and discuss the latest technological developments.

It is our belief that AR and its associated technologies are important to the field of new media: media artists experiment with the intersection of the physical and the virtual and probe the limits of our sensory perception in order to create new experiences. Managers of cultural heritage are seeking new possibilities for worldwide access to their collections. Designers, developers, architects and urban planners are looking for new ways to better communicate their designs to clients. Designers of games and theme parks want to create immersive experiences that integrate both the physical and the virtual world. Marketing specialists are working with new interactive forms of communication. For all of them, AR can serve as a powerful tool to realize their visions.

Media artists and designers who want to acquire an interesting position within the domain of new media have to gain knowledge about and experience with AR.
This magazine series is intended to provide both theoretical knowledge and a guide towards first practical experiences with AR. Our special focus lies on the diversity of contributions. Consequently, everybody who wants to know more about AR should be able to find something of interest in this magazine, be they art and design students, students from technical backgrounds, engineers, developers, inventors, philosophers or readers who just happened to hear about AR and got curious.

We hope you enjoy the first issue and invite you to check out the website www.arlab.nl to learn more about Augmented Reality in the arts and the work of the AR Lab.

Artist: KAROLINA SOBECKA | http://www.gravitytrap.com

add information to a book, by looking at the book and the screen at the same time.

Display type II: AR glasses (off-screen)

A far more sophisticated but not yet consumer-friendly method uses AR glasses or a head-mounted display (HMD), also called a head-up display. With this device the extra information is mixed with one’s own perception of the world. The virtual images appear in the air, in the real world, around you, and are not projected on a screen. In type II there are two ways of mixing the real world with the virtual world:

Video see-through: a camera captures the real world. The virtual images are mixed with the captured video images of the real world, and this mix creates an Augmented Reality.

Optical see-through: the real world is perceived directly with one’s own eyes in real time. Via small translucent mirrors in goggles, virtual images are displayed on top of the perceived reality.

Display type III: Projection-based Augmented Reality

With projection-based AR we project virtual 3D scenes or objects on the surface of a building or an object (or a person). To do this, we need to know the exact dimensions of the object we project AR information onto. The projection is seen on the object or building with remarkable precision. This can generate very sophisticated or wild projections on buildings. The Augmented Matter in Context group, led by Jouke Verlinden at the Faculty of Industrial Design Engineering, TU Delft, uses projection-based AR for manipulating the appearance of products.

Connecting art and technology

The 2011 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) was held in Basel, Switzerland.
In the track Arts, Media, and Humanities, 40 articles were offered discussing the connection of ‘hard’ physics and ‘soft’ art.

There are several ways in which art and Augmented Reality technology can be connected: we can, for example, make art with Augmented Reality technology, create Augmented Reality artworks or use Augmented Reality technology to show and explain existing art (such as a monument like the Greek Parthenon or paintings from the grottos of Lascaux). Most of the contributions to the conference concerned Augmented Reality as a tool to present, explain or augment existing art. However, some visual artists use AR as a medium to create art.

The role of the artist in working with the emerging technology of Augmented Reality has been discussed by Helen Papagiannis in her ISMAR paper The Role of the Artist in Evolving AR as a New Medium (2011). In her paper, Helen Papagiannis reviews how the use of technology as a creative medium has been discussed in recent years. She points out that in 1988 John Pearson wrote about how the computer offers artists “new means for expressing their ideas” (p. 73, cited in Papagiannis, 2011, p. 61). According to Pearson, “Technology has always been, the handmaiden of the visual arts, as is obvious, a technical means is always necessary for the visual communication of ideas, of expression or the development of works of art - tools and materials are required.” (p. 73) However, he points out that new technologies “were not developed by the artistic community for artistic purposes, but by science and industry to serve the pragmatic or utilitarian needs of society.” (p. 73, cited in Papagiannis, 2011, p. 61) As Helen Papagiannis concludes, it is then up to the artist “to act as a pioneer, pushing forward a new aesthetic that exploits the unique materials of the novel technology” (2011, p. 61).
Like Helen, we believe this also holds for the emerging field of AR technologies, and we hope artists will set out to create exciting new Augmented Reality art and thereby contribute to the interplay between art and technology. An interview with Helen Papagiannis can be found on page 12 of this magazine. A portrait of the artist Marina de Haas, who did a residency at the AR Lab, can be found on page 60.

REFERENCES

■ Milgram, P. and Kishino, F., “A Taxonomy of Mixed Reality Visual Displays,” IEICE Trans. Information Systems, vol. E77-D, no. 12, 1994, pp. 1321-1329.
■ Azuma, Ronald T., “A Survey of Augmented Reality,” Presence: Teleoperators and Virtual Environments 6, 4 (August 1997), pp. 355-385.
■ Papagiannis, H., “The Role of the Artist in Evolving AR as a New Medium,” 2011 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) - Arts, Media, and Humanities (ISMAR-AMH), Basel, Switzerland, pp. 61-65.
■ Pearson, J., “The Computer: Liberator or Jailer of the Creative Spirit,” Leonardo, Supplemental Issue, Electronic Art, 1 (1988), pp. 73-80.

Interview with Helen Papagiannis
By Hanna Schraffenberger

Biography - Helen Papagiannis

Helen Papagiannis is a designer, artist, and PhD researcher specializing in Augmented Reality (AR) in Toronto, Canada. Helen has been working with AR since 2005, exploring the creative possibilities for AR with a focus on content development and storytelling. She is a Senior Research Associate at the Augmented Reality Lab at York University, in the Department of Film, Faculty of Fine Arts. Helen has presented her interactive artwork and research at global juried conferences and events including TEDx (Technology, Entertainment, Design), ISMAR (International Symposium on Mixed and Augmented Reality) and ISEA (International Symposium on Electronic Art). Prior to her Augmented life, Helen was a member of the internationally renowned Bruce Mau Design studio, where she was project lead on “Massive Change: The Future of Global Design.” Read more about Helen’s work on her blog and follow her on Twitter: @ARstories.
www.augmentedstories.com

What is Augmented Reality?

Augmented Reality (AR) is a real-time layering of virtual digital elements including text, images, video and 3D animations on top of our existing reality, made visible through AR-enabled devices such as smartphones or tablets equipped with a camera. I often compare AR to cinema when it was first new, for we are at a similar moment in AR’s evolution where there are currently no conventions or set aesthetics; this is a time ripe with possibilities for AR’s creative advancement. Like cinema when it first emerged, AR has commenced with a focus on the technology with little consideration to content. AR content needs to catch up with AR technology.
As a community of designers, artists, researchers and commercial industry, we need to advance content in AR and not stop with the technology, but look at what unique stories and utility AR can present.

So far, AR technologies are still new to many people and often AR works cause a magical experience. Do you think AR will lose its magic once people get used to the technology and have developed an understanding of how AR works? How have you worked with this ‘magical element’ in your work ‘The Amazing Cinemagician’?

I wholeheartedly agree that AR can create a magical experience. In my TEDx 2010 talk, “How Does Wonderment Guide the Creative Process” (http://youtu.be/ScLgtkVTHDc), I discuss how AR enables a sense of wonder, allowing us to see our environments anew. I often feel like a magician when presenting demos of my AR work live; astonishment fills the eyes of the beholder questioning, “How did you do that?” So what happens when the magic trick is revealed, as you ask, when the illusion loses its novelty and becomes habitual? In Virtual Art: Illusion to Immersion (2004), new media art historian Oliver Grau discusses how audiences are first overwhelmed by new and unaccustomed visual experiences, but later, once “habituation chips away at the illusion”, the new medium no longer possesses “the power to captivate” (p. 152). Grau writes that at this stage the medium becomes “stale and the audience is hardened to its attempts at illusion”; however, he notes that it is at this stage that “the observers are receptive to content and media competence” (p. 152).

When the initial wonder and novelty of the technology wear off, will it be then that AR is explored as a possible media format for various content and receives a wider public reception as a mass medium? Or is there an element of wonder that need exist in the technology for it to be effective and flourish?

Picture: Helen Papagiannis

I discuss how as a designer/artist/PhD researcher I am both a practitioner and a researcher, a maker and a believer. As a practitioner, I do, create, design; as a researcher I dream, aspire, hope. I am a make-believer working with a technology that is about make-believe, about imagining possibilities atop actualities. Now, more than ever, we need more creative adventurers and make-believers to help AR continue to evolve and become a wondrous new medium, unlike anything we’ve ever seen before! I spoke to the importance and power of imagination and make-believe, and how they pertain to AR at this critical junction in the medium’s evolution. When we make-believe and when we imagine, we are in two places simultaneously; make-believe is about projecting or layering our imagination on top of a current situation or circumstance. In many ways, this is what AR is too: layering imagined worlds on top of our existing reality.

You’ve had quite a success with your AR pop-up book ‘Who’s Afraid of Bugs?’ In your blog you talk about your inspiration for the story behind the book: it was inspired by AR psychotherapy studies for the treatment of phobias such as arachnophobia. Can you tell us more?

Who’s Afraid of Bugs? was the world’s first Augmented Reality (AR) pop-up book designed for iPad 2 and iPhone 4. The book combines hand-crafted paper engineering and AR on mobile devices to create a tactile and hands-on storybook that explores the fear of bugs through narrative and play. Because it integrates image tracking in the design, as opposed to the black and white glyphs commonly seen in AR, the book can be enjoyed alone as a regular pop-up book, or supplemented with Augmented digital content when viewed through a mobile device equipped with a camera. The book is a playful exploration of fears using AR in a meaningful and fun way.
Rhyming text takes the reader through the storybook where various ‘creepy crawlies’ (spider, ant, and butterfly) are waiting to be discovered, appearing virtually as 3D models you can interact with. A tarantula attacks when you touch it, an ant hyperlinks to educational content with images and diagrams, and a butterfly appears flapping its wings atop a flower in a meadow. Hands are integrated throughout the book design, whether it’s placing one’s hand down to have the tarantula crawl over you virtually, the hand holding the magnifying lens that sees the ant, or the hands that pop up holding the flower upon which the butterfly appears. It’s a method to involve the reader in the narrative, but it also comments on the unique tactility AR presents, bridging the digital with the physical. Further, the story for the AR pop-up book was inspired by AR psychotherapy studies for the treatment of phobias such as arachnophobia. AR provides a safe, controlled environment to conduct exposure therapy within a patient’s physical surroundings, creating a more believable scenario with heightened presence (defined as the sense of really being in an imagined or perceived place or scenario), and provides greater immediacy than Virtual Reality (VR). A video of the book may be watched at http://vimeo.com/25608606.

In your work, technology serves as an inspiration. For example, rather than starting with a story which is then adapted to a certain technology, you start out with AR technology, investigate its strengths and weaknesses and so the story evolves. However, this does not limit you to only using the strengths of a medium. On the contrary, weaknesses such as accidents and glitches have, for example, influenced your work ‘Hallucinatory AR’. Can you tell us a bit more about this work?

Hallucinatory Augmented Reality (AR), 2007, was an experiment which investigated the possibility of images which were not glyphs/AR trackables to generate AR imagery.
The project evolved out of accidents: incidents in earlier experiments in which the AR software mistook non-marker imagery for AR glyphs and attempted to generate AR imagery. This confusion by the software resulted in unexpected and random flickering AR imagery. I decided to explore the creative and artistic possibilities of this effect further and conduct experiments with non-traditional marker-based tracking. The process entailed a study of what types of non-marker images might generate such ‘hallucinations’ and a search for imagery that would evoke or call upon multiple AR imagery/videos from a single image/non-marker.

Upon multiple image searches, one image emerged which proved to be quite extraordinary. A cathedral stained glass window was able to evoke four different AR videos, the only instance, from among many other images, in which multiple AR imagery appeared. Upon close examination of the image, focusing in and out with a web camera, a face began to emerge in the black and white pattern. A fantastical image of a man was encountered. Interestingly, it was when the image was blurred into this face using the web camera that the AR hallucinatory imagery worked best, rapidly multiplying and appearing more prominently. Although numerous attempts were made with similar images, no other such instances occurred; this image appeared to be unique.

The challenge now rested in the choice of what types of imagery to curate into this hallucinatory viewing: what imagery would be best suited to this phantasmagoric and dream-like form? My criteria for imagery/videos were like form and shape, in an attempt to create a collage-like set of visuals. As the sequence or duration of the imagery in Hallucinatory AR could not be predetermined, the goal was to identify imagery that possessed similarities, through which the possibility for visual synchronicities existed.

Themes of intrusions and chance encounters are at play in Hallucinatory AR, inspired in part by Surrealist artist Max Ernst. In What is the Mechanism of Collage? (1936), Ernst writes:

One rainy day in 1919, finding myself in a village on the Rhine, I was struck by the obsession which held under my gaze the pages of an illustrated catalogue showing objects designed for anthropologic, microscopic, psychologic, mineralogic, and paleontologic demonstration. There I found brought together elements of figuration so remote that the sheer absurdity of that collection provoked a sudden intensification of the visionary faculties in me and brought forth an illusive succession of contradictory images, double, triple, and multiple images, piling up on each other with the persistence and rapidity which are particular to love memories and visions of half-sleep (p. 427).

Of particular interest to my work in exploring and experimenting with Hallucinatory AR was Ernst’s description of an “illusive succession of contradictory images” that were “brought forth” (as though independent of the artist), rapidly multiplying and “piling up” in a state of “half-sleep”. Similarities can be drawn to the process of the seemingly disparate AR images jarringly coming in and out of view, layered atop one another.

One wonders if these visual accidents are what the future of AR might hold: unwelcome glitches in software systems, as Bruce Sterling describes on Beyond the Beyond in 2009; or perhaps we might come to delight in the visual poetry of these Augmented hallucinations that are “As beautiful as the chance encounter of a sewing machine and an umbrella on an operating table.” [1]

To a computer scientist, these ‘glitches’, as applied in Hallucinatory AR, could potentially be viewed or interpreted as a disaster, as an example of the technology failing. To the artist, however, there is poetry in these glitches, with new possibilities of expression and new visual forms emerging.

On the topic of glitches and accidents, I’d like to return to Méliès. Méliès became famous for the stop trick, or double exposure special effect, a technique which evolved from an accident: Méliès’ camera jammed while filming the streets of Paris; upon playing back the film, he observed an omnibus transforming into a hearse. Rather than discounting this as a technical failure, or glitch, he utilized it as a technique in his films. Hallucinatory AR also evolved from an accident, which was embraced and applied in an attempt to evolve a potentially new visual mode in the medium of AR. Méliès introduced new formal styles, conventions and techniques that were specific to the medium of film; novel styles and new conventions will also emerge from AR artists and creative adventurers who fully embrace the medium.

[1] Comte de Lautréamont’s often quoted allegory, famous for inspiring both Max Ernst and André Breton, qtd. in: Williams, Robert. “Art Theory: An Historical Introduction.” Malden, MA: Blackwell Publishing, 2004: 197

Picture: Pippin Lee

The Technology Behind Augmented Reality

Augmented Reality (AR) is a field that is primarily concerned with realistically adding computer-generated images to the image one perceives from the real world.

AR comes in several flavors. Best known is the practice of using flat screens or projectors, but nowadays AR can be experienced even on smartphones and tablet PCs. The crux is that 3D digital data from another source is added to the ordinary physical world, which is, for example, seen through a camera. We can create this additional data ourselves, e.g. using 3D drawing programs such as 3D Studio Max, but we can also add CT and MRI data or even live TV images to the real world. Likewise, animated three-dimensional objects (avatars), which can then be displayed in the real world, can be made using a visualization program like Cinema 4D.

Instead of displaying information on conventional monitors, the data can also be added to the vision of the user by means of a head-mounted display (HMD) or Head-Up Display. This is a second, lesser-known form of Augmented Reality. It is already known to fighter pilots, among others. We distinguish two types of HMDs: Optical See Through (OST) headsets and Video See Through (VST) headsets. OST headsets use semi-transparent mirrors or prisms, through which one can keep seeing the real world. At the same time, virtual objects can be added to this view using small displays that are placed on top of the prisms. VSTs are in essence Virtual Reality goggles, so the displays are placed directly in front of your eyes. In order to see the real world, there are two cameras attached on the other side of the little displays. You can then see the Augmented Reality by mixing the video signal coming from the cameras with the video signal containing the virtual objects.
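The mixing step of a video see-through headset described above can be illustrated with a small sketch. It assumes, purely for illustration, that the camera frame is a grid of RGB pixels and that the virtual objects are rendered into an RGBA layer whose alpha channel says how opaque each virtual pixel is; the function name `composite` and the data layout are hypothetical and not taken from any particular headset or library.

```python
def composite(camera_frame, virtual_layer):
    """Blend an RGBA virtual layer over an RGB camera frame, pixel by pixel.

    camera_frame:  rows of (r, g, b) tuples, values 0..255
    virtual_layer: rows of (r, g, b, a) tuples, a = 0 (transparent) .. 255 (opaque)
    """
    mixed = []
    for cam_row, virt_row in zip(camera_frame, virtual_layer):
        mixed_row = []
        for (cr, cg, cb), (vr, vg, vb, va) in zip(cam_row, virt_row):
            alpha = va / 255.0  # weight of the virtual pixel
            mixed_row.append((
                round(vr * alpha + cr * (1.0 - alpha)),
                round(vg * alpha + cg * (1.0 - alpha)),
                round(vb * alpha + cb * (1.0 - alpha)),
            ))
        mixed.append(mixed_row)
    return mixed


# A 1x2 "frame": the left pixel is covered by an opaque red virtual object,
# the right pixel has no virtual content and shows the camera image unchanged.
camera = [[(100, 100, 100), (100, 100, 100)]]
virtual = [[(255, 0, 0, 255), (0, 0, 0, 0)]]
result = composite(camera, virtual)
```

Where the virtual layer is fully transparent the user simply sees the camera image; where it is opaque the virtual object hides the real world behind it.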

Underlying Technology

Screens and Glasses

Unlike screen-based AR, HMDs provide depth perception as both eyes receive an image. When objects are projected on a 2D screen, one can convey an experience of depth by letting the objects move. Recent 3D screens allow you to view stationary objects in depth. 3D televisions that work with glasses quickly alternate the right and left image; in sync with this, the glasses use active shutters which let the image in turn reach the left or the right eye. This happens so fast that it looks as if you view both the left and the right image simultaneously. 3D television displays that work without glasses make use of little lenses which are placed directly on the screen. Those refract the left and right image, so that each eye can only see the corresponding image. See for example www.dimenco.eu/display-technology. This is essentially the same method as used on the well-known 3D postcards on which a beautiful lady winks when the card is slightly turned. 3D film makes use of two projectors that show the left and right images simultaneously; however, each of them is polarized in a different way. The left and right lenses of the glasses have matching polarizations and only let through the light of the corresponding projector. The important point with screens is that you are always bound to the physical location of the display, while headset-based techniques allow you to roam freely. This is called immersive visualization: you are immersed in a virtual world. You can walk around in the 3D world and move around and enter virtual 3D objects.

Video-See-Through AR will become popular within a very short time and ultimately become an extension of the smartphone. This is because both display technology and camera technology have made great strides with the advent of smartphones. What currently still might stand in the way of smartphone models is computing power and energy consumption. Companies such as Microsoft, Google, Sony, Zeiss, ...
will enter the consumer market soon with AR technology.

Tracking Technology

A current obstacle for major applications, which will soon be resolved, is the tracking technology. The problem with AR is embedding the virtual objects in the real world. You can compare this with color printing: the colors, e.g. cyan, magenta, yellow and black, have to be printed properly aligned to each other. What you often see in prints which are not cut yet are so-called fiducial markers on the edge of the printing plates that serve as a reference for the alignment of the colors. These are also necessary in AR. Often, you see that markers are used onto which a 3D virtual object is projected. Moving and rotating the marker lets you move and rotate the virtual object. Such a marker is comparable to the fiducial marker in color printing. With the help of computer vision technology, the camera of the headset can identify the marker and, based on its size, shape and position, conclude the relative position of the camera. If you move your head relative to the marker (with the virtual object), the computer knows how the image on the display must be transformed so that the virtual object remains stationary. And conversely, if your head is stationary and you rotate the marker, it knows how the virtual object should rotate so that it remains on top of the marker.

AR smartphone applications such as Layar use the built-in GPS and compass for the tracking. This has an accuracy of meters and measures angles to within 5-10 degrees. Camera-based tracking, however, is accurate to the centimetre and can measure angles to within several degrees. Nowadays, using markers for the tracking is already out of date and we use so-called “natural feature tracking”, also called “keypoint tracking”. Here, the computer searches for conspicuous (salient) keypoints in the left and right camera image. If, for example, you twist your head, this shift is determined on the basis of those keypoints at more than 30 frames per second.
This way, a 3D map of these keypoints can be built and the computer knows the relationship (distance and angle) between the keypoints and the stereo camera. This method is more robust than marker-based tracking because you have many keypoints, widely spread in the scene, and not just the four corners of the marker close together in the scene. If someone walks in front of the camera and blocks some of the keypoints, there will still be enough keypoints left and the tracking is not lost. Moreover, you do not have to stick markers all over the world.

Collaboration with the Royal Academy of Arts (KABK) in The Hague in the AR Lab (Royal Academy, TU Delft, Leiden University, various SMEs) in the realization of applications

TU Delft has done research on AR since 1999. Since 2006, the university has worked with the art academy in The Hague. The idea is that AR is a new technology with its own merits. Artists are very good at finding out what is possible with the new technology. Here are some pictures of realized projects.

Fig 1. The current technology that replaces the markers with natural feature tracking, or so-called keypoint tracking. Instead of the four corners of the marker, the computer itself determines which points in the left and right images can be used as anchor points for calculating the 3D pose of the camera in 3D space. From top:
1: you can use all points in the left and right images to slowly build a complete 3D map. Such a map can, for example, be used to relive your past experience because you can again walk in the now virtual space.
2: the 3D keypoint space and the trace of the camera position within it.
3: keypoints (the color indicates the suitability)
4: you can place virtual objects (eyes) on an existing surface
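Whether the camera pose comes from a marker or from keypoints, the tracking text above relies on the same final step: once the pose is known, the computer can work out where a virtual object must be drawn so that it appears to stay put. The sketch below illustrates that step under simplifying assumptions that are not from the article: an ideal pinhole camera, rotation only around the vertical axis, and made-up values for the focal length and image centre. The function name `project` is hypothetical.

```python
import math


def project(point_world, cam_pos, cam_yaw_deg,
            focal_px=800.0, cx=320.0, cy=240.0):
    """Return the pixel (u, v) at which a fixed 3D world point appears.

    point_world, cam_pos: (x, y, z) in metres; x right, y up, z forward.
    cam_yaw_deg: camera rotation around the vertical axis; 0 looks along +z,
                 positive values turn the camera to the right.
    focal_px, cx, cy: assumed pinhole camera parameters (illustrative values).
    Returns None if the point lies behind the camera.
    """
    yaw = math.radians(cam_yaw_deg)
    dx = point_world[0] - cam_pos[0]
    dy = point_world[1] - cam_pos[1]
    dz = point_world[2] - cam_pos[2]
    # Express the offset in the camera's own axes (right, up, forward).
    x_cam = math.cos(yaw) * dx - math.sin(yaw) * dz
    y_cam = dy
    z_cam = math.sin(yaw) * dx + math.cos(yaw) * dz
    if z_cam <= 0:
        return None
    u = cx + focal_px * x_cam / z_cam
    v = cy - focal_px * y_cam / z_cam  # image rows grow downwards
    return (u, v)


virtual_object = (0.0, 0.0, 2.0)  # two metres in front of the starting pose

centred = project(virtual_object, (0.0, 0.0, 0.0), 0.0)
after_step_right = project(virtual_object, (0.5, 0.0, 0.0), 0.0)
after_turn_right = project(virtual_object, (0.0, 0.0, 0.0), 10.0)
```

When the viewer steps or turns to the right, the object is drawn further to the left in the image, which is exactly what makes it look stationary in the room. A real tracker estimates a full 3D rotation and translation for every frame; this sketch only shows how such a pose is used once it is available.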

There are many applications that can be realized using AR; they will find their way in the coming decades:

1. Head-Up Displays have already been used for many years in the Air Force for fighter pilots; this can be extended to other vehicles and civil applications.
2. The billboards during the broadcast of a football game are essentially also AR; more can be done by also involving the game itself and allowing interaction by the user, such as off-side line projection.
3. In the professional sphere, you can, for example, visualize where pipes under the street lie or should lie. Ditto for designing ships, houses, planes, trucks and cars. What’s outlined in a CAD drawing could be drawn in the real world, allowing you to see in 3D if and where there is a mismatch.
4. You can easily find books you are looking for in the library.
5. You can find out where restaurants are in a city...
6. You can pimp theater / musical / opera / pop concerts with (immersive) AR decor.
7. You can arrange virtual furniture or curtains from the IKEA catalog and see how they look in your home.
8. Maintenance of complex devices will become easier, e.g. you can virtually see where the paper in the copier is jammed.
9. If you enter a restaurant or the hardware store, a virtual avatar can show you the place to find that special bolt or table.

showing the Serra room in Museum Boijmans van Beuningen during the exhibition Sgraffito in 3D
Picture: Joachim Rotteveel

Lieven van Velthoven: The Racing Star

“It ain’t fun if it ain’t real time”

By Hanna Schraffenberger

When I enter Lieven van Velthoven’s room, the people from the Efteling have just left. They are interested in his ‘virtual growth’ installation. And they are not the only ones interested in Lieven’s work. In the last year, he has won the Jury Award for Best New Media Production 2011 of the international Cinekid Youth Media Festival as well as the Dutch Game Award 2011 for the Best Student Game. The winning mixed reality game ‘Room Racers’ has been shown at the Discovery Festival, Mediamatic, the STRP festival and the ZKM in Karlsruhe. His virtual growth installation has embellished the streets of Amsterdam at night. Now, he is going to show Room Racers to me, in his living room, where it all started.

The room is packed with stuff and at first sight it seems rather chaotic, with a lot of random things lying on the floor. There are a few plants, which probably don’t get enough light, because Lieven likes the dark (that’s when his projections look best). It is only when he turns on the projector that I realize that his room is actually not chaotic at all. The shoe, magnifying glass, video games, tape and stapler which cover the floor are all part of the game.

“You create your own race game tracks by placing real stuff on the floor”

Lieven tells me. He hands me a controller and soon we are racing the little projected cars around the chocolate spread, marbles, a remote control and a flashlight. Trying not to crash the car into a belt, I tell him what I remember about when I first met him a few years ago at a Media Technology course at Leiden University. Back then, he was programming a virtual bird, which would fly from one room to another, preferring the room in which it was quiet. Loud and sudden sounds would scare the bird away into another room. The course for which he developed it was called sound space interaction, and his installation was solely based on sound.
I ask him whether the virtual bird was his first contact with Augmented Reality. Lieven laughs.

“It’s interesting that you call it AR, as it only uses sound!”

Indeed, most of Lieven’s work is based on interactive projections and plays with visual augmentations of our real environment. But like the bird, all of them are interactive and work in real time. Looking back, the bird was not his first AR work.

“My first encounter with AR was during our first Media Technology course, a visit to the Ars Electronica festival in 2007, where I saw Pablo Valbuena’s Augmented Sculpture. It was amazing. I was asking myself, can I do something like this but interactive instead?”

Armed with a bachelor’s degree in technical computer science from TU Delft and the newfound possibility to bring in his own curiosity and ideas at the Media Technology Master program at Leiden University, he set out to build his own interactive projection-based works.

Room Racers

Up to four players race their virtual cars around real objects which are lying on the floor. Players can drop in or out of the game at any time. Everything you can find can be placed on the floor to change the route.

Room Racers makes use of projection-based mixed reality. The structure of the floor is analysed in real time using a modified camera and self-written software. Virtual cars are projected onto the real environment and interact with the detected objects that are lying on the floor.

The game has won the Jury Award for Best New Media Production 2011 of the international Cinekid Youth Media Festival, and the Dutch Game Award 2011 for Best Student Game. Room Racers has been shown at several international media festivals. You can play Room Racers at the 'Car Culture' exposition at the Lentos Kunstmuseum in Linz, Austria until 4th of July 2012.

Picture: Lieven van Velthoven, Room Racers at ZKM | Center for Art and Media in Karlsruhe, Germany, on June 19th, 2011

“The first time I experimented with the combination of the real and the virtual myself was in a piece called Shadow Creatures, which I made with Lisa Dalhuijsen during our first semester in 2007.”

More interactive projections followed in the next semester and in 2008, the idea for Room Racers was born. A first prototype was built in a week: a projected car bumping into real-world things. After that followed months and months of optimizations. Everything is done by Lieven himself, mostly at night in front of the computer.

“My projects are never really finished, they are always work in progress, but if something works fine in my room, it’s time to take it out in the world.”

After having friends over and playing with the cars until six o’clock in the morning, Lieven knows it’s time to steer the cars out of his room and show them to the outside world.

“I wanted to present Room Racers but I didn’t know anyone, and no one knew me. There was no network I was part of.”

Undeterred by this, Lieven took the initiative and asked the Discovery Festival if they were interested in his work. Luckily, they were, and they showed two of his interactive games at the Discovery Festival 2010. After the festival, requests started coming and the cars kept rolling. When I ask him about this continuing success he is divided:

“It’s fun, but it takes a lot of time. I have not been able to program as much as I used to.”

His success does surprise him and he especially did not expect the attention it gets in an art context.

“I knew it was fun. That became clear when I had friends over and we played with it all night. But I did not expect the awards. And I did not expect it to be relevant in the art scene. I do not think it’s art, it’s just a game. I don’t consider myself an artist. I am a developer and I like to do interactive projections.
Room Racers is my least arty project, nevertheless it got a lot of response in the art context.”

A piece which he actually considers more of an artwork is Virtual Growth: a mobile installation which projects autonomous growing structures onto any environment you place it in, be it buildings, people or nature.

“For me AR has to take place in the real world. I don’t like screens. I want to get away from them. I have always been interested in other ways of interacting with computers, without mice, without screens. There is a lot of screen-based AR, but for me AR is really about projecting into the real world. Put it in the real world, identify real-world objects, do it in real time, that’s my philosophy. It ain’t fun if it ain’t real-time. One day, I want to go through a city with a van and do projections on buildings, trees, people and whatever else I pass.”

For now, he is bound to a bike but that does not stop him. Virtual Growth works fast and stable, even on a bike. That has been witnessed in Amsterdam, where the audiovisual bicycle project ‘Volle Band’ put projectors on bikes and invited Lieven to augment the city with his mobile installation. People who experienced Virtual Growth on his journeys around Amsterdam, at festivals and parties, are enthusiastic about his (‘smashing!’) entertainment-art. As the virtual structure grows, the audience members not only start to interact with the piece but also with each other.

“They put themselves in front of the projector, have it projecting onto themselves and pass on the projection to other people by touching them. I don’t explain anything. I believe in simple ideas, not complicated concepts. The piece has to speak for itself. If people try it, immediately get it, enjoy it and tell other people about it, it works!”

Virtual Growth works; that becomes clear from the many happy smiling faces the projection grows upon. And that’s also what counts for Lieven.

“At first it was hard, I didn’t get paid for doing these projects.
But when people see them and are enthusiastic, that makes me happy. If I see people enjoying my work, and playing with it, that’s what really counts.”

I wonder where he gets the energy to work that much alongside being a student. He tells me that what drives him is that he enjoys it. He likes to spend the evenings with the programming language C#. But the fact that he enjoys working on his ideas not only keeps him motivated, it has also caused him to postpone a few courses at university. While talking, he smokes his cigarette and takes the ashtray from the floor. With the road no longer blocked by it, the cars take a different route now. Lieven might take a different route soon as well. I ask him if he will still be working from his living room, realizing his own ideas, once he has graduated.

“It’s actually funny. It all started to fill my portfolio in order to get a cool job. I wanted to have some things to show besides a diploma. That’s why I started realizing my ideas. It got out of control and soon I was realizing one idea after the other. And maybe, I’ll just continue doing it. But also, there are quite a few companies and jobs I’d enjoy working for. First I have to graduate anyway.”

If I have learned anything about Lieven and his work, I am sure his graduation project will be placed in the real world and work in real time. More than that, it will be fun. It ain’t Lieven, if it ain’t fun.

Name: Lieven van Velthoven
Born: 1984
Study: Media Technology MSc, Leiden University
Background: Computer Science, TU Delft
Selected AR Works: Room Racers, Virtual Growth
Watch: http://www.youtube.com/user/lievenvv

How did we do it: adding virtual sculptures at the Kröller-Müller Museum

By Wim van Eck

Always wanted to create your own augmented reality projects but never knew how? Don’t worry, AR[t] is going to help you! However, there are many hurdles to take when realizing an augmented reality project. Ideally you should be a skillful 3D animator to create your own virtual objects, and a great programmer to make the project work technically. And provided you don’t just want to make a fancy tech demo, you also need to come up with a great concept!

My name is Wim van Eck and I work at the AR Lab, based at the Royal Academy of Art. One of my tasks is to help art students realize their Augmented Reality projects. These students have great concepts, but often lack experience in 3D animation and programming. Logically I should tell them to follow animation and programming courses, but since the average deadline for their projects is counted in weeks instead of months or years, there is seldom time for that. In the coming issues of AR[t] I will explain how the AR Lab helps students to realize their projects and how we try to overcome technical boundaries, using actual projects we worked on as examples. Since this is the first issue of our magazine, I will give a short overview of recommendable programs for Augmented Reality development.

We will start with 3D animation programs, which we need to create our 3D models. There are many 3D animation packages; the better-known ones include 3ds Max, Maya, Cinema 4D, Softimage, Lightwave, Modo and the open source Blender (www.blender.org). These are all great programs; however, at the AR Lab we mostly use Cinema 4D (image 1) since it is very user-friendly and therefore easier to learn.
It is a shame that the free Blender still has a steep learning curve, since it is otherwise an excellent program. You can download a demo of Cinema 4D at http://www.maxon.net/downloads/demo-version.html. These are some good tutorial sites to get you started:

http://www.cineversity.com
http://www.c4dcafe.com
http://greyscalegorilla.com

[Images 1 to 4. Image 3: picture by Klaas A. Mulder]

In case you don’t want to create your own 3D models you can also download them from various websites. Turbosquid (http://www.turbosquid.com), for example, offers good quality but often at a high price, while free sites such as Artist-3d (http://artist-3d.com) vary more in quality. When a 3D model is not constructed properly it might cause problems when you import or visualize it. In coming issues of AR[t] we will talk more about optimizing 3D models for Augmented Reality usage.

To actually add these 3D models to the real world you need Augmented Reality software. Again there are many options, with new software being added continuously. Probably the easiest software to use is BuildAR (http://www.buildar.co.nz), which is available for Windows and OS X. It is easy to import 3D models, video and sound, and there is a demo available. There are excellent tutorials on their site to get you started. In case you want to develop for iOS or Android, the free Junaio (http://www.junaio.com) is a good option. Their online GLUE application is easy to use, though their preferred .m2d format for 3D models is not the most common. In my opinion the most powerful Augmented Reality software right now is Vuforia (https://developer.qualcomm.com/develop/mobile-technologies/Augmented-reality) in combination with the excellent game engine Unity (www.unity3d.com).
This combination offers high-quality visuals with easy-to-script interaction on iOS and Android devices.

Sweet summer nights at the Kröller-Müller Museum

As mentioned in the introduction, we will show the workflow of AR Lab projects with these ‘How did we do it’ articles. In 2009 the AR Lab was invited by the Kröller-Müller Museum to present during the ‘Sweet Summer Nights’, an evening full of cultural activities in the famous sculpture garden of the museum. We were asked to develop an Augmented Reality installation aimed at the whole family and found a diverse group of students to work on the project. Now the most important part of the project started: brainstorming!

Our location in the sculpture garden was in between two sculptures: ‘Man and woman’, a stone sculpture of a couple by Eugène Dodeigne (image 2), and ‘Igloo di pietra’, a dome-shaped sculpture by Mario Merz (image 3). We decided to read more about these works, and learned that Dodeigne had originally intended to create two couples instead of one, placed together in a wild natural environment. We decided to virtually add the second couple and also add a wilder environment, just as Dodeigne initially had in mind. To be able to see these additions we placed a screen which can rotate 360 degrees between the two sculptures (image 4).

A webcam was placed on top of the screen, and a laptop running ARToolkit (http://www.hitl.washington.edu/artoolkit) was mounted on the back of the screen. A large marker was placed near the sculpture as a reference point for ARToolkit.

Now it was time to create the 3D models of the extra couple and environment. The students working on this part of the project didn’t have much experience with 3D animation, and there wasn’t much time to teach them, so manually modeling the sculptures would be a difficult task. Options such as 3D scanning the sculpture were soon suggested, but it still takes quite some skill to actually prepare a 3D scan for Augmented Reality usage. We will talk more about that in a coming issue of this magazine.

But when we look carefully at our setup (image 5) we can draw some interesting conclusions. Our screen can rotate but stays in the same spot, so we will always see our added 3D model from the same angle. Since we will never be able to see the back of the 3D model, there is no need to actually model this part. This is common practice when making 3D models; you can compare it with set construction for Hollywood movies, where they also only build what the camera will see. This already saves us quite some work. We can also see that the screen is positioned quite far away from the sculpture, and when an object is viewed from a distance it optically loses its depth. When you are one meter away from an object and take one step aside you will see the side of the object, but if the same object is a hundred meters away you will hardly see a change in perspective when changing your position (see image 6). From that distance people will hardly see the difference between an actual 3D model and a plain 2D image. This means we could actually use photographs or drawings instead of a complex 3D model, making the whole process easier again. We decided to follow this route.

[Images 5 to 11]
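The distance argument above can be checked with a little trigonometry. The sketch below is only an illustration: the function name and the assumed step length of 0.75 m are not from the article. It computes how much the viewing direction towards an object changes when the viewer takes one sideways step at 1 m and at 100 m.

```python
import math


def view_angle_change_deg(step_m: float, distance_m: float) -> float:
    """Angle in degrees by which the viewing direction to an object changes
    when the viewer moves step_m sideways at distance_m from the object."""
    return math.degrees(math.atan2(step_m, distance_m))


STEP = 0.75  # assumed length of one sideways step in metres (illustrative value)

for distance in (1.0, 100.0):
    angle = view_angle_change_deg(STEP, distance)
    print(f"{distance:6.1f} m away: viewing direction shifts by {angle:.2f} degrees")
```

Under these assumptions the shift is roughly 37 degrees at one meter and less than half a degree at a hundred meters, which is why a flat photograph can stand in for a full 3D model when the viewer is far away.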

The Lab collaborated in this project with students from different departments of the KABK: Ferenc Molnar, Mit Koevoets, Jing Foon Yu, Marcel Kerkmans and Alrik Stelling. The AR Lab team consisted of Yolande Kolstee, Wim van Eck, Melissa Coleman and Pawel Pokutycki, supported by Martin Sjardijn and Joachim Rotteveel.

[Image 12. Original photograph by Klaas A. Mulder]

To be able to place the photograph of the sculpture in our 3D scene we have to assign it to a placeholder, a single polygon. Image 7 shows how this could look.

This actually looks quite awful: we see the statue but also all the white around it from the image. To solve this we need to make use of something called an alpha channel, an option you can find in every 3D animation package (image 8 shows where it is located in the material editor of Cinema 4D). An alpha channel is a grayscale image which declares which parts of an image are visible: white is opaque, black is transparent. Detailed tutorials about alpha channels are easily found on the internet.

As you can see, this looks much better (image 9). We followed the same procedure for the second statue and the grass (image 10), using many separate polygons to create enough randomness for the grass. As long as you see these models from the right angle they look quite realistic (image 11). In this case this 2.5D approach probably gives even better results than a ‘normal’ 3D model, and it is much easier to create. Another advantage is that the 2.5D approach is very cheap to compute since it uses few polygons, so you don’t need a very powerful computer to run it, or you can have many models on screen at the same time. Image 12 shows the final setup.

For the igloo sculpture by Mario Merz we used a similar approach. A graphic design student imagined what could be living inside the igloo, and started drawing a variety of plants and creatures.
Using the same 2.5D approach as described before, we placed these drawings around the igloo, and an animation was shown of a plant growing out of the igloo (image 12).

We can conclude that it is good practice to analyze your scene before you start making your 3D models. You don’t always need to model all the detail, and using photographs or drawings can be a very good alternative. The next issue of AR[t] will feature a new ‘How did we do it’. In case you have any questions you can contact me at w.vaneck@kabk.nl
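The alpha channel described in this article can be illustrated with a few lines of code. This is a minimal sketch, not what Cinema 4D or ARToolkit do internally: the function name `composite` and the tiny two-pixel "images" are made up for the example. It blends a photograph over a background using a grayscale mask in which white (255) is opaque and black (0) is transparent.

```python
def composite(foreground, background, alpha_mask):
    """Blend two equally sized RGB images using a grayscale alpha mask.

    Images are lists of rows of (r, g, b) tuples; the mask is a list of rows
    of integers from 0 to 255. White (255) keeps the foreground pixel,
    black (0) lets the background show through.
    """
    result = []
    for fg_row, bg_row, mask_row in zip(foreground, background, alpha_mask):
        out_row = []
        for fg, bg, alpha in zip(fg_row, bg_row, mask_row):
            t = alpha / 255.0  # 1.0 = fully opaque, 0.0 = fully transparent
            out_row.append(tuple(round(t * f + (1.0 - t) * b) for f, b in zip(fg, bg)))
        result.append(out_row)
    return result


# Hypothetical one-row example: a statue pixel next to the white photo border.
statue_photo = [[(120, 110, 100), (255, 255, 255)]]
garden_view = [[(30, 90, 40), (30, 90, 40)]]
mask = [[255, 0]]  # keep the statue pixel, hide the white border

print(composite(statue_photo, garden_view, mask))
# prints [[(120, 110, 100), (30, 90, 40)]]
```

Gray values between black and white give partially transparent pixels, which is useful for soft edges such as blades of grass.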

Pixels want to be freed! Introducing Augmented Reality enabling hardware technologies

By Jouke Verlinden

1. Introduction

From the early head-up display in the movie “Robocop” to the present, Augmented Reality (AR) has evolved into a manageable ICT environment that must be considered by product designers of the 21st century.

Instead of focusing on a variety of applications and software solutions, this article will discuss the essential hardware of Augmented Reality (AR): display techniques and tracking techniques. We argue that these two fields differentiate AR from regular human-user interfaces and that tuning these is essential in realizing an AR experience. As often, there is a vast body of knowledge behind each of the principles discussed below, hence a large variety of literature references is given.

Furthermore, the first author of this article found it important to include his own preferences and experiences throughout this discussion. We hope that this material strikes a chord and makes you consider employing AR in your designs. After all, why should digital information always be confined to a dull, rectangular screen?

2. Display Technologies

To categorise AR display technologies, two important characteristics should be identified: imaging generation principle and physical layout.

Generic AR technology surveys describe a large variety of display technologies that support imaging generation (Azuma, 1997; Azuma et al., 2001); these principles can be categorised into:

1. Video-mixing. A camera is mounted somewhere on the product; computer graphics are combined with captured video frames in real time. The result is displayed on an opaque surface, for example, an immersive Head-Mounted Display (HMD).
2. See-through. Augmentation by this principle typically employs half-silvered mirrors to superimpose computer graphics onto the user’s view, as found in head-up displays of modern fighter jets.
3. Projector-based systems. One or more projectors cast digital imagery directly on the physical environment.

As Raskar and Bimber (2004, p. 72) argued, an important consideration in deploying an Augmented system is the physical layout of the image generation. For each imaging generation principle mentioned above, the imaging display can be arranged between user and physical object in three distinct ways:

a) head-attached, which presents digital images directly in front of the viewer’s eyes, establishing a personal information display;
b) hand-held, which is carried by the user and does not cover the whole field of view;
c) spatial, which is fixed to the environment.

The resulting imaging and arrangement combinations are summarised in Table 1.

| | 1. Video-mixing | 2. See-through | 3. Projection-based |
|---|---|---|---|
| A. Head-attached | Head-mounted display (HMD) | Head-mounted display (HMD) | Head-mounted display (HMD) |
| B. Hand-held | Handheld devices | See-through boards | Spatial projection-based |
| C. Spatial | Embedded display | See-through boards | Spatial projection-based |

Table 1. Image generation principles for Augmented Reality

When the AR image generation and layout principles are combined, the following collection of display technologies is identified: HMD, handheld devices, embedded screens, see-through boards and spatial projection-based AR. These are briefly discussed in the following sections.

2.1 Head-mounted display

Head-attached systems refer to HMD solutions, which can employ any of the three image generation technologies.
Even the first head-mounted displays developed for Virtual Reality already used a see-through system with half-silvered mirrors to merge virtual line drawings with the physical environment (Sutherland, 1967). Since then, the variety of head-attached imaging systems has expanded and now encompasses all three principles for AR: video-mixing, see-through and direct projection on the physical world (Azuma et al., 2001). A benefit of this approach is its hands-free nature. Secondly, it offers personalised content, enabling each user to have a private view of the scene with customised and sensitive data that does not have to be shared. For most applications, HMDs have been considered inadequate, both in the case of see-through and video-mixing imaging. According to Klinker et al. (2002), HMDs introduce a large barrier between the user and the object and their resolution is insufficient for IAP: typically 800 × 600 pixels for the complete field of view (rendering the user “legally blind” by American standards). Similar reasoning was found in Bochenek et al. (2001), in which both the objective and subjective assessments of HMDs were lower than those of hand-held or spatial imaging devices. However, new developments (specifically high-resolution OLED displays) show promising new devices, specifically for the professional market (Carl Zeiss) and entertainment (Sony); see Figure 1.

Figure 1. Recent head-mounted displays (top: KABK The Hague; bottom: Carl Zeiss).

2.2 Handheld display

Hand-held video-mixing solutions are based on smartphones, PDAs or other mobile devices equipped with a screen and camera.
With the advent of powerful mobile electronics, handheld Augmented Reality technologies are emerging. By employing built-in cameras on smartphones or PDAs, video mixing is enabled, while concurrent use is supported by communication through wireless networks (Schmalstieg and Wagner, 2008). The resulting device acts as a hand-held window on a mixed reality. An example of such a solution is shown in Figure 2, which is a combination of an Ultra Mobile Personal Computer (UMPC), a Global Positioning System (GPS) antenna for global position tracking, and a camera for local position and orientation sensing along with video mixing. As of today, such systems are found in each modern smartphone, and apps such as Layar (www.layar.com) and Junaio (www.junaio.com) offer such functions to the user for free, allowing different layers of content (often social-media based).

Figure 2. The Vesp’R device for underground infrastructure visualization (Schall et al., 2008). Labelled parts: GPS antenna, camera + IMU, joystick handles, UMPC.

The advantage of using a video-mixing approach is that lag in processing is less influential than with see-through or projector-based systems: the live video feed is delayed as well, so the combined image stays consistent. This hand-held solution works well for occasional, mobile use. Long-term use can cause strain in the arms. The challenges in employing this principle are the limited screen coverage and resolution (typically a 4-inch diagonal and a resolution of 320 × 240 pixels). Furthermore, memory, processing power and graphics processing limit rendering to relatively simple 3D scenes, although these capabilities are rapidly improving with the upcoming dual-core and quad-core mobile CPUs.

2.3 Embedded display

Another AR display option is to include a number of small LCD screens in the observed object in order to display the virtual elements directly on the physical object. Although it is arguable whether this counts as an augmentation solution, embedded screens do add digital information to product surfaces.

This practice is found in the later stages of prototyping mobile phones and similar information appliances. Such screens typically have a resolution similar to that of PDAs and mobile phones, which is QVGA: 320 × 240 pixels. Such devices are connected to a workstation by a specialised cable, which can be omitted if autonomous components are used, such as a smartphone. Regular embedded screens can only be used on planar surfaces and their size is limited, while their weight impedes larger use. With the advent of novel, flexible e-Paper and Organic Light-Emitting Diode (OLED) technologies, it might be possible to cover a part of a physical model with such screens. To our knowledge, no such systems have been developed or commercialised so far. Although it does not support changing light effects, the Luminex material approximates this by using an LED/fibreglass-based fabric (see Figure 3). A Dutch company recently presented a fully interactive light-emitting fabric based on integrated RGB LEDs, labelled ‘lumalive’. These initiatives can manifest as new ways to support prototyping scenarios that require a high local resolution and complete unobstructedness. However, the fit to the underlying geometry remains a challenge, as does embedding the associated control electronics and wiring. An elegant solution to the second challenge was given by Saakes et al. (2010), entitled “the slow display”: temporarily changing the color of photochromic paint by UV laser projection.
This effect lasts for a couple of minutes and demonstrates how fashion and AR could meet.

Figure 3. Impression of the Luminex material

2.4 See-through board

See-through boards vary in size between desktop and hand-held versions. The Augmented engineering system (Bimber et al., 2001) and the AR extension of the haptic sculpting project (Bordegoni and Covarrubias, 2007) are examples of the use of see-through technologies, which typically employ a half-silvered mirror to mix virtual models with a physical object (Figure 4). Similar to the Pepper’s ghost phenomenon, standard stereoscopic Virtual Reality (VR) workbench systems such as the Barco Baron are used to project the virtual information. In addition to the need to wear shutter glasses to view stereoscopic graphics, head tracking is required to align the virtual image between the object and the viewer. An advantage of this approach is that digital images are not occluded by the user’s hand or environment and that graphics can be displayed outside the physical object (i.e., to display the environment or annotations and tools). Furthermore, the user does not have to wear heavy equipment and the resolution of the projection can be extremely high, enabling a compelling display system for exhibits and trade fairs. However, see-through boards obstruct user interaction with the physical object. Multiple viewers cannot share the same device, although a limited solution is offered by the virtual showcase, which establishes a faceted and curved mirroring surface (Bimber, 2002).

Figure 4. The Augmented engineering see-through display (Bimber et al., 2001).

2.5 Spatial projection-based displays

This technique is also known as Shader Lamps (Raskar et al., 2001) and was extended by Raskar and Bimber (2004) to a variety of imaging solutions, including projections on irregular surface textures and combinations of projections with (static) holograms. In the field of advertising and performance arts, this technique recently gained popularity under the label Projection Mapping: projecting on buildings, cars or other large objects, replacing traditional screens as display means (cf. Figure 5).
In such cases, theatre projector systems are used that are prohibitively expensive (> 30,000 euros).

Figure 5. Two projections on a church chapel in Utrecht (Hoeben, 2010).

The principle of spatial projection-based technologies is shown in Figure 6. Casting an image onto a physical object is considered complementary to constructing a perspective image of a virtual object with a pinhole camera. If the physical object is of the same geometry as the virtual object, a straightforward 3D perspective transformation (described by a 4 × 4 matrix) is sufficient to predistort the digital image. To obtain this transformation, it suffices to indicate six corresponding points in the physical world and the virtual world: an algorithm entitled Linear Camera Calibration can then be applied (see Appendix). If the physical and virtual shapes differ, the projection is viewpoint-dependent and the head position needs to be tracked. Important projector characteristics involve weight and size versus the power (in lumens) of the projector.
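The six-point calibration mentioned above can be sketched in code. The article's Appendix is not reproduced here, so the following is a generic linear (DLT-style) formulation and not necessarily the author's: it estimates the 3 × 4 part of the perspective transformation that maps model coordinates to projector image coordinates, fixing the last matrix entry to 1. All names (`solve_linear`, `calibrate`, `project`) and the example matrix and points are hypothetical.

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def calibrate(points_3d, points_2d):
    """Estimate a 3x4 projection matrix (last entry fixed to 1) from at least
    six 3D-to-2D point correspondences, using linear least squares."""
    rows, rhs = [], []
    for (x, y, z), (u, v) in zip(points_3d, points_2d):
        # Two linear equations per correspondence, one for u and one for v.
        rows.append([x, y, z, 1.0, 0.0, 0.0, 0.0, 0.0, -u * x, -u * y, -u * z])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, 0.0, x, y, z, 1.0, -v * x, -v * y, -v * z])
        rhs.append(v)
    n = 11  # unknown matrix entries
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * t for r, t in zip(rows, rhs)) for i in range(n)]
    p = solve_linear(ata, atb) + [1.0]  # normal equations, then append the fixed entry
    return [p[0:4], p[4:8], p[8:12]]


def project(matrix, point):
    """Apply a 3x4 projection matrix to a 3D point and return image coordinates."""
    x, y, z = point
    h = [row[0] * x + row[1] * y + row[2] * z + row[3] for row in matrix]
    return (h[0] / h[2], h[1] / h[2])


# Hypothetical ground truth, used only to generate consistent test data.
TRUE_P = [[1.0, 0.0, 0.2, 0.1], [0.0, 1.0, 0.1, 0.05], [0.0, 0.0, 0.5, 1.0]]
MODEL_POINTS = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 0.5), (1, 0.5, 1)]
IMAGE_POINTS = [project(TRUE_P, p) for p in MODEL_POINTS]

estimated = calibrate(MODEL_POINTS, IMAGE_POINTS)
check_point = (0.5, 0.25, 0.75)  # not used during calibration
error = max(abs(a - b) for a, b in
            zip(project(estimated, check_point), project(TRUE_P, check_point)))
print(f"reprojection error on a held-out point: {error:.2e}")
```

In practice the six model points should not all lie in one plane, and measured points contain noise, so using more than six correspondences generally gives a more stable estimate.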

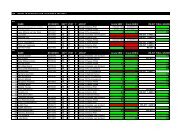

Figure 6. Projection-based display principle (adapted from Raskar and Low, 2001); on the right, the dynamic shader lamps demonstration (Bandyopadhyay et al., 2001).

There are initiatives to employ LED lasers for direct holographic projection, which also decreases power consumption compared to traditional video projectors and ensures that the projection is always in focus without requiring optics (Eisenberg, 2004). Both fixed and hand-held spatial projection-based systems have been demonstrated. At present, hand-held projectors measure 10 × 5 × 2 cm and weigh 150 g, including the processing unit and battery. However, the light output is low (15–45 lumens).

The advantage of spatial projection-based technologies is that they support the perception of all visual and tactile/haptic depth cues without the need for shutter glasses or HMDs. Furthermore, the display can be shared by multiple co-located users. It requires less expensive equipment, which is often already available at design studios. Challenges to projector-based AR approaches include optics and occlusion. First, only a limited field of view and focus depth can be achieved. To reduce these problems, multiple video projectors can be used. An alternative solution is to employ a portable projector, as proposed in the iLamps and the I/O Pad concepts (Raskar et al., 2003; Verlinden et al., 2008). Other issues include occlusion and shadows, which are cast on the surface by the user or other parts of the system. Projection on non-convex geometries depends on the granularity and orientation of the projector. The perceived quality is sensitive to projection errors (also known as registration errors), especially projection overshoot (Verlinden et al., 2003b). A solution for this problem is either to include an offset (dilatation) of the physical model or to introduce pixel masking in the rendering pipeline. As projectors are now being embedded in consumer cameras and smartphones, we expect to see more of this type of augmentation in the years to come.

3.
Input Technologies

In order to merge the digital and physical, position and orientation tracking of the physical components is required. Here, we will discuss two different types of input technologies: tracking and event sensing. Furthermore, we will briefly discuss other input modalities.

3.1 Position tracking

Welch and Foxlin (2002) presented a comprehensive overview of the tracking principles that are currently available. In the ideal case, the measurement should be as unobtrusive and invisible as possible while still offering accurate and rapid data. They concluded that there is currently no ideal solution (‘silver bullet’) for position tracking in general, but some respectable alternatives are available. Table 2 summarises the most important characteristics of these tracking methods for Augmented Reality purposes. The data have been gathered from commercially available equipment (the Ascension Flock of Birds, ARToolkit, Optotrak, Logitech 3D Tracker, Microscribe and Minolta VI-900). All these should be considered for object tracking in Augmented prototyping scenarios. There are significant differences in the tracker/marker size, action radius and accuracy. As the physical model might consist of a number of parts or a global shape and some additional components (e.g., buttons), the number of items to be tracked is also of importance. For simple tracking scenarios, either magnetic or passive optical technologies are often used.

| Tracking type | Size of tracker (mm) | Typical number of trackers | Action radius (accuracy) | DOF | Issues |
|---|---|---|---|---|---|
| Magnetic | 16 × 16 × 16 | 2 | 1.5 m (1 mm) | 6 | Ferro-magnetic interference |
| Optical passive | 80 × 80 × 0.01 | >10 | 3 m (1 mm) | 6 | Line of sight |
| Optical active | 10 × 10 × 5 | >10 | 3 m (0.5 mm) | 3 | Line of sight, wired connections |
| Ultrasound | 20 × 20 × 10 | 1 | 1 m (3 mm) | 6 | Line of sight |
| Mechanical linkage | Defined by working envelope | 1 | 0.7 m (0.1 mm) | 5 | Limited degrees of freedom, inertia |
| Laser scanning | None | Infinite | 2 m (0.2 mm) | 6 | Line of sight, frequency, object recognition |

Table 2. Summary of tracking technologies.

In some experiments we found that a projector could not be equipped with a standard Flock of Birds 3D magnetic tracker due to interference. Other tracking techniques should be used for this paradigm. For example, the ARToolkit employs complex patterns and a regular web camera to determine the position, orientation and identification of the marker. This is done by measuring the size, 2D position and perspective distortion of a known rectangular marker, cf. Figure 7 (Kato and Billinghurst, 1999).

Figure 7. Workflow of the ARToolkit optical tracking algorithm, http://www.hitl.washington.edu/artoolkit/documentation/userarwork.htm

Passive markers enable a relatively untethered system, as no wiring is necessary. The optical markers are obtrusive when they are visible to the user while handling the object. Although computationally intensive, marker-less optical tracking has been proposed (Prince et al., 2002).

The employment of laser-based tracking systems is demonstrated by the Illuminating Clay system by Piper et al. (2002): a slab of Plasticine acts as an interactive surface. The user influences a 3D simulation by sculpting the clay, while the simulation results are projected on the surface. A laser-based Minolta Vivid 3D scanner is employed to continuously scan the clay surface. In the article, this principle was applied to geodesic analysis, yet it can be adapted to design applications, e.g., the sculpting of car bodies. This method has a number of challenges when used as a real-time tracking means, including the recognition of objects and their posture. However, with the emergence of depth cameras for gaming such as the Kinect (Microsoft), similar systems are now being devised with a very small technological threshold.

Figure 8. Illuminating Clay system with a projector/laser scanner (Piper et al., 2002).

In particular cases, a global measuring system is combined with a different local tracking principle to increase the level of detail, for example, to track the position and arrangement of buttons on the object’s surface. Such local positioning systems might have less advanced technical requirements; for example, the sampling frequency can be decreased to only once a minute. One local tracking system is based on magnetic resonance, as used in digital drawing tablets. The Sensetable demonstrates this by equipping an altered commercial digital drawing tablet with custom-made wireless interaction devices (Patten et al., 2001). The Senseboard (Jacob et al., 2002) has similar functions and uses an intricate grid of RFID receivers to determine the (2D) location of an RFID tag on a board. In practice, these systems rely on a rigid tracking table, but it is possible to extend this to a flexible sensing grid. A different technology was
A different technology was proposed by Hudson (2004): using LED pixels as both light emitters and sensors. By operating one pixel as a sensor whilst its neighbours are illuminated, it is possible to detect light reflected from a fingertip close to the surface. This principle could be applied to embedded displays, as mentioned in Section 2.3.

3.2 Event sensing

Apart from location and orientation tracking, Augmented prototyping applications require interaction with parts of the physical object, for example, to mimic the interaction with the artefact. This interaction differs per AR scenario, so a variety of events should be sensed to cater to these applications.

Physical sensors

The employment of traditional sensors labelled ‘physical widgets’ (phidgets) has been studied extensively in the Computer-Human Interface (CHI) community. Greenberg and Fitchett (2001) introduced a simple electronics hardware and software library to interface PCs with sensors (and actuators) that can be used to discern user interaction. The sensors include switches, sliders, rotation knobs and sensors to measure force, touch and light. More elaborate components like a mini joystick, an infrared (IR) motion sensor, and air pressure and temperature sensors are commercially available. A similar initiative is iStuff (Ballagas et al., 2003), which also hosts a number of wireless connections to sensors. Some systems embed switches with short-range wireless connections, for example, the Switcheroo and Calder systems (Avrahami and Hudson, 2002; Lee et al., 2004) (cf. Figure 9). This allows a greater freedom in modifying the location of the interactive components while prototyping. The Switcheroo system uses custom-made RFID tags. A receiver antenna has to be located nearby (within a 10-cm distance), so the movement envelope is rather small, while the physical model is wired to a workstation.
The Calder toolkit (Lee et al., 2004) uses a capacitive coupling technique that has a smaller range (6 cm with small antennae), but is able to receive and transmit for long periods on a small 12 mm coin cell. Other active wireless technologies would draw more power, leading to a system that would only last a few hours. Although the costs for this system have not been specified, only standard electronics components are required to build such a receiver.

Figure 9. Mockup equipped with wireless switches that can be relocated to explore usability (Lee et al., 2004).

Hand tracking

Instead of attaching sensors to the physical environment, fingertip and hand tracking technologies can also be used to generate user events. Embedded skins represent a type of interactive surface technology that allows the accurate measurement of touch on the object's surface (Paradiso et al., 2000). For example, the Smartskin by Rekimoto (2002) consists of a flexible grid of antennae. The proximity or touch of human fingers changes the capacitance locally in the grid and establishes a multi-finger tracking cloth, which can be wrapped around an object. Such a solution could be combined with embedded displays, as discussed in Section 2.3.

Direct electric contact can also be used to track user interaction; the Paper Buttons concept (Pedersen et al., 2000) embeds electronics on the objects and equips the finger with a two-wire plug that supplies power and allows bidirectional communication with the embedded components when they are touched. Magic Touch (Pedersen, 2001) uses a similar wireless system; the user wears an RFID reader on his or her finger and can interact by touching the components, which have hidden RFID tags. This method has been adapted to Augmented Reality for design by Kanai et al. (2007).

Optical tracking can be used for fingertip and hand tracking as well. A simple example is the light widgets system (Fails and Olsen, 2002), which traces skin colour and determines finger/hand position by 2D blobs. The OpenNI library enables hand and body tracking with depth range cameras such as the Kinect (OpenNi.org). A more elaborate example is the virtual drawing tablet by Ukita and Kidode (2004); fingertips are recognised on a rectangular sheet by a head-mounted infrared camera. Traditional VR gloves can also be used for this type of tracking (Schäfer et al., 1997).

3.3 Other input modalities

Speech and gesture recognition require consideration in AR as well. In particular, pen-based interaction would be a natural extension to the expressiveness of today's designer skills. Oviatt et al. (2000) offer a comprehensive overview of the so-called Recognition-Based User Interfaces (RUIs), including the issues and Human Factors aspects of these modalities. Furthermore, speech-based interaction can also be useful to activate operations while the hands are used for selection.

4. Conclusions and Further reading

This article introduces two important hardware systems for AR: displays and input technologies. To superimpose virtual images onto physical models, head-mounted displays (HMDs), see-through boards, projection-based techniques and embedded displays have been employed. An important observation is that HMDs, though best known by the public, have serious limitations and constraints in terms of the field of view and resolution and lend themselves to a kind of isolation. For all display technologies, the current challenges include an untethered interface, the enhancement of graphics capabilities, visual coverage of the display and improvement of resolution. LED-based laser projection and OLEDs are expected to play an important role in the next generation of IAP devices because this technology can be employed by see-through or projection-based displays.

To interactively merge the digital and physical parts of Augmented prototypes, position and orientation tracking of the physical components is needed, as well as additional user input means. For global position tracking, a variety of principles exist. Optical tracking and scanning suffer from issues concerning line of sight and occlusion. Magnetic, mechanical linkage and ultrasound-based position trackers are obtrusive and only a limited number of trackers can be used concurrently.

The resulting palette of solutions is summarized in Table 3 as a morphological chart. In devising a solution for your AR system, you can use this as a checklist or as inspiration for display and input choices.

Display
  Imaging principle:    Video mixing | Projector-based | See-through
  Display arrangement:  Head-attached | Handheld/wearable | Spatial
Input technologies
  Position tracking:    Magnetic | Optical (passive markers, active markers, 3D laser scanning) | Ultrasound | Mechanical
  Event sensing:        Physical sensors (wired connection, wireless) | Virtual (surface tracking, 3D tracking)

Table 3. Morphological chart of AR enabling technologies.
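One way to use the morphological chart of Table 3 as a checklist is to treat each row as a design dimension and enumerate the combinations. The sketch below is only an illustration: the flattening of the optical and event-sensing sub-options into single entries is one possible reading of the chart, and the `untethered` filter is a hypothetical requirement, not a recommendation from the article.

```python
from itertools import product

# Rows of the morphological chart; sub-options are flattened into single entries.
chart = {
    "imaging principle": ["video mixing", "projector-based", "see-through"],
    "display arrangement": ["head-attached", "handheld/wearable", "spatial"],
    "position tracking": [
        "magnetic",
        "optical: passive markers",
        "optical: active markers",
        "optical: 3D laser scanning",
        "ultrasound",
        "mechanical",
    ],
    "event sensing": [
        "physical sensors: wired",
        "physical sensors: wireless",
        "virtual: surface tracking",
        "virtual: 3D tracking",
    ],
}

def enumerate_configurations(chart):
    """Return every combination that picks one option per row of the chart."""
    keys = list(chart)
    return [dict(zip(keys, combo)) for combo in product(*(chart[k] for k in keys))]

def untethered(config):
    """Hypothetical requirement: no wired event sensors on the physical model."""
    return config["event sensing"] != "physical sensors: wired"

configurations = enumerate_configurations(chart)
candidates = [c for c in configurations if untethered(c)]
```

Each entry in `candidates` is one complete design option (display principle, arrangement, tracking and event sensing) that can then be judged against the issues summarised in the conclusions.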

Further reading

For those interested in research in this area, the following publication means offer a range of detailed solutions:

■ International Symposium on Mixed and Augmented Reality (ISMAR): ACM-sponsored annual convention on AR, covering both specific applications and emerging technologies. Accessible through http://dl.acm.org
■ Augmented Reality Times: a daily update on demos and trends in commercial and academic AR systems: http://artimes.rouli.net
■ Procams workshop: annual workshop on projector-camera systems, coinciding with the IEEE conference on Image Recognition and Robot Vision. The resulting proceedings are freely accessible at http://www.procams.org
■ Raskar, R. and Bimber, O. (2004) Spatial Augmented Reality, A.K. Peters, ISBN: 1568812302. A personal copy can be downloaded for free at http://140.78.90.140/medien/ar/SpatialAR/download.php
■ BuildAR: a simple webcam-based application that uses markers, available for download at http://www.buildar.co.nz/buildar-free-version

Appendix: Linear Camera Calibration

This procedure has been published in (Raskar and Bimber, 2004) to some degree, but is slightly adapted to be more accessible for those with less knowledge of the field of image processing. C source code that implements this mathematical procedure can be found in appendix A1 of (Faugeras, 1993). It basically uses point correspondences between original x, y, z coordinates and their projected u, v counterparts to resolve internal and external camera parameters. In general cases, 6 point correspondences are sufficient (Faugeras 1993, Proposition 3.11).

Let I and E be the internal and external parameters of the projector, respectively. Then a point P in 3D-space is transformed to:

$$p = [I \cdot E] \cdot P \tag{1}$$

where p is a point in the projector's coordinate system.
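Before the derivation continues, the point-correspondence idea can be made concrete numerically. The following is a minimal sketch, not the code from (Faugeras, 1993) or (Raskar and Bimber, 2004): it assumes NumPy, estimates the combined 3×4 matrix of formula 1 (up to scale) from at least six correspondences by taking the right singular vector with the smallest singular value, and checks the result on synthetic data. The function names and all numeric values (focal length, pose, test points) are illustrative assumptions.

```python
import numpy as np

def estimate_projection(points_3d, points_2d):
    """Estimate the combined 3x4 matrix M (up to scale) from n >= 6 correspondences."""
    rows = []
    for (x, y, z), (u, v) in zip(points_3d, points_2d):
        P = [x, y, z, 1.0]                                  # homogeneous 3D point
        rows.append(P + [0.0] * 4 + [-u * c for c in P])    # linear equation for u
        rows.append([0.0] * 4 + P + [-v * c for c in P])    # linear equation for v
    A = np.asarray(rows, dtype=float)                       # shape (2n, 12)
    _, _, vt = np.linalg.svd(A)
    return vt[-1].reshape(3, 4)                             # least-squares null vector

def project(M, points_3d):
    """Apply M to 3D points and perform the perspective division."""
    homog = np.hstack([points_3d, np.ones((len(points_3d), 1))])
    p = homog @ M.T
    return p[:, :2] / p[:, 2:3]

def normalise(M):
    """Remove the arbitrary scale and sign so two estimates can be compared."""
    return M / np.linalg.norm(M) * np.sign(M[2, 3])

# Synthetic check with made-up internal (I) and external (E) parameters.
I_true = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
a = 0.2
R_true = np.array([[np.cos(a), 0.0, np.sin(a)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([[-0.1], [-0.1], [1.0]])
E_true = np.hstack([R_true, t_true])
M_true = I_true @ E_true

# Eight non-coplanar calibration points (six is the stated minimum).
points_3d = np.array([
    [0.0, 0.0, 0.0], [0.2, 0.0, 0.0], [0.0, 0.2, 0.0], [0.0, 0.0, 0.2],
    [0.2, 0.2, 0.0], [0.2, 0.0, 0.2], [0.0, 0.2, 0.2], [0.15, 0.1, 0.05],
])
points_2d = project(M_true, points_3d)
M_est = estimate_projection(points_3d, points_2d)
```

The estimate is only defined up to scale, which is why both matrices are normalised before they are compared. With noisy measurements, using more than six well-spread, non-coplanar points tends to make the estimate more stable.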
If we decompose the rotation and translation components in this matrix transformation, we obtain:

$$p = [R \;\; t] \cdot P \tag{2}$$

in which R is a 3×3 matrix corresponding to the rotational components of the transformation and t is the 3×1 translation vector. We then split the rotation matrix into its row vectors $R_1$, $R_2$ and $R_3$. Applying the perspective division results in the following two formulae:

$$u_i = \frac{R_1 \cdot P_i + t_x}{R_3 \cdot P_i + t_z} \tag{3}$$

$$v_i = \frac{R_2 \cdot P_i + t_y}{R_3 \cdot P_i + t_z} \tag{4}$$

in which the 2D point $p_i$ is split into $(u_i, v_i)$. Given n measured point-point correspondences $(p_i; P_i)$, $i = 1..n$, we obtain 2n equations:

$$R_1 \cdot P_i - u_i \cdot R_3 \cdot P_i + t_x - u_i \cdot t_z = 0 \tag{5}$$

$$R_2 \cdot P_i - v_i \cdot R_3 \cdot P_i + t_y - v_i \cdot t_z = 0 \tag{6}$$

We can rewrite these 2n equations as a matrix multiplication with a vector of 12 unknown variables, comprising the original transformation components R and t of formula 2. Due to measurement errors, this system usually has no exact non-trivial solution; we wish to estimate this transformation with a minimal estimation deviation. In the algorithm presented in (Raskar and Bimber, 2004), the minimax theorem is used to extract these based on determining the singular values. In a straightforward manner, internal and external transformations I and E of formula 1 can be extracted from the resulting transformation.

References

■ Avrahami, D. and Hudson, S.E. (2002) ‘Forming interactivity: a tool for rapid prototyping of physical interactive products’, Proceedings of DIS ’02, pp.141–146.
■ Azuma, R. (1997) ‘A survey of augmented reality’, Presence: Teleoperators and Virtual Environments, Vol. 6, No. 4, pp.355–385.
■ Azuma, R., Baillot, Y., Behringer, R., Feiner, S., Julier, S. and MacIntyre, B. (2001) ‘Recent advances in augmented reality’, IEEE Computer Graphics and Applications, Vol. 21, No. 6, pp.34–47.
■ Ballagas, R., Ringel, M., Stone, M. and Borchers, J. (2003) ‘iStuff: a physical user interface toolkit for ubiquitous computing environments’, Proceedings of CHI 2003, pp.537–544.
■ Bandyopadhyay, D., Raskar, R. and Fuchs, H. (2001) ‘Dynamic shader lamps: painting on movable objects’, International Symposium on Augmented Reality (ISMAR), pp.207–216.
■ Bimber, O. (2002) ‘Interactive rendering for projection-based augmented reality displays’, PhD dissertation, Darmstadt University of Technology.
■ Bimber, O., Stork, A. and Branco, P. (2001) ‘Projection-based augmented engineering’, Proceedings of International Conference on Human-Computer Interaction (HCI’2001), Vol. 1, pp.787–791.
■ Bochenek, G.M., Ragusa, J.M. and Malone, L.C. (2001) ‘Integrating virtual 3-D display systems into product design reviews: some insights from empirical testing’, Int. J. Technology Management, Vol. 21, Nos. 3–4, pp.340–352.
■ Bordegoni, M. and Covarrubias, M. (2007) ‘Augmented visualization system for a haptic interface’, HCI International 2007 Poster.
■ Eisenberg, A. (2004) ‘For your viewing pleasure, a projector in your pocket’, New York Times, 4 November.
■ Faugeras, O. (1993) ‘Three-Dimensional Computer Vision: a Geometric Viewpoint’, MIT Press.
■ Fails, J.A. and Olsen, D.R. (2002) ‘LightWidgets: interacting in everyday spaces’, Proceedings of IUI ’02, pp.63–69.
■ Greenberg, S. and Fitchett, C. (2001) ‘Phidgets: easy development of physical interfaces through physical widgets’, Proceedings of UIST ’01, pp.209–218.
■ Hoeben, A. (2010) ‘Using a projected Trompe L’Oeil to highlight a church interior from the outside’, EVA 2010.
■ Hudson, S. (2004) ‘Using light emitting diode arrays as touch-sensitive input and output devices’, Proceedings of the ACM Symposium on User Interface Software and Technology, pp.287–290.
■ Jacob, R.J., Ishii, H., Pangaro, G. and Patten, J. (2002) ‘A tangible interface for organizing information using a grid’, Proceedings of CHI ’02, pp.339–346.