Tera-scale deep learning - GraphLab

Tera-scale deep learning - GraphLab

Tera-scale deep learning - GraphLab

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

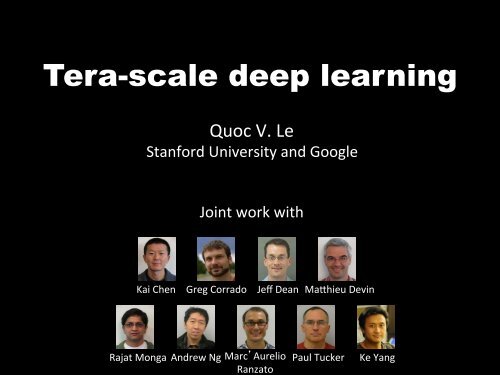

Tera-scale deep learning
Quoc V. Le, Stanford University and Google
Joint work with Kai Chen, Greg Corrado, Jeff Dean, Matthieu Devin, Rajat Monga, Andrew Ng, Marc'Aurelio Ranzato, Paul Tucker, Ke Yang

Machine Learning successes
• Face recognition
• OCR
• Autonomous car
• Email classification
• Recommendation systems
• Web page ranking
Quoc Le

The role of feature extraction in pattern recognition
• Pipeline: feature extraction (mostly hand-crafted features), followed by a classifier
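
The two-stage pipeline on this slide can be sketched in a few lines. Everything here is an illustrative assumption rather than anything from the talk: `hypothetical_features` stands in for a hand-designed feature extractor, and a nearest-centroid rule stands in for the classifier. What the sketch shows is that the classifier only ever sees the extracted features, so the choice of feature function largely determines what it can distinguish.

```python
from typing import Callable, Dict, List, Sequence

# A feature extractor maps a raw input (pixels, audio samples, ...) to a
# fixed-length feature vector. In the classical pipeline it is hand-designed.
FeatureFn = Callable[[Sequence[float]], List[float]]


def hypothetical_features(x: Sequence[float]) -> List[float]:
    """Hypothetical hand-crafted features: mean level and mean absolute step."""
    mean = sum(x) / len(x)
    roughness = sum(abs(b - a) for a, b in zip(x, x[1:])) / (len(x) - 1)
    return [mean, roughness]


class NearestCentroid:
    """Stand-in classifier: picks the class whose mean feature vector is closest."""

    def __init__(self, extract: FeatureFn) -> None:
        self.extract = extract
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, xs: Sequence[Sequence[float]], ys: Sequence[str]) -> "NearestCentroid":
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = {}
        for x, y in zip(xs, ys):
            f = self.extract(x)  # the classifier only sees features, never raw x
            acc = sums.setdefault(y, [0.0] * len(f))
            for i, v in enumerate(f):
                acc[i] += v
            counts[y] = counts.get(y, 0) + 1
        self.centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}
        return self

    def predict(self, x: Sequence[float]) -> str:
        f = self.extract(x)

        def dist2(c: List[float]) -> float:
            return sum((a - b) ** 2 for a, b in zip(f, c))

        return min(self.centroids, key=lambda y: dist2(self.centroids[y]))


smooth = [[0.0, 0.1, 0.2, 0.3], [1.0, 1.1, 1.2, 1.3]]
noisy = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]]
clf = NearestCentroid(hypothetical_features).fit(smooth + noisy, ["smooth"] * 2 + ["noisy"] * 2)
print(clf.predict([0.5, 0.6, 0.7, 0.8]))  # smooth
```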

Hand-crafted features
• Computer vision: SIFT/HOG, SURF, …
• Speech recognition: MFCC, Spectrogram, ZCR, …
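
Of the speech features listed, the zero-crossing rate (ZCR) is simple enough to write out in full. A minimal sketch, assuming the common definition (the fraction of adjacent sample pairs whose signs differ, with exact zeros never counted as a crossing) and a hypothetical frame length and hop for the per-frame version:

```python
from typing import List, Sequence


def zero_crossing_rate(samples: Sequence[float]) -> float:
    """Fraction of adjacent sample pairs whose signs differ.

    Convention assumed here: a pair counts only if the product of the two
    samples is negative, so a sample that is exactly zero never counts.
    """
    if len(samples) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a * b < 0)
    return crossings / (len(samples) - 1)


def framewise_zcr(samples: Sequence[float], frame_len: int, hop: int) -> List[float]:
    """ZCR of each analysis frame; frame_len and hop are illustrative parameters."""
    return [
        zero_crossing_rate(samples[start:start + frame_len])
        for start in range(0, len(samples) - frame_len + 1, hop)
    ]


print(zero_crossing_rate([1, -1, 1, -1]))  # 1.0
print(framewise_zcr([1, -1, 1, -1, 2, 3, 4, 5], frame_len=4, hop=4))  # [1.0, 0.0]
```

A high ZCR tends to indicate noisy or unvoiced sound and a low one voiced sound, which is why it was used as a hand-crafted speech feature.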

New feature-designing paradigm
• Unsupervised feature learning / deep learning
• Shows promise for small datasets
• Expensive, and typically applied to small problems

The trend of Big Data

Brain simulation
• Model: autoencoders stacked on top of the input image
• Data: 10 million YouTube video frames
• Training: 2000 machines (16000 cores) for 1 week
• 1.15 billion parameters
  – 100x larger than previously reported
  – Small compared to the visual cortex
Le, et al., Building high-level features using large-scale unsupervised learning. ICML 2012
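
The building block named on this slide is the autoencoder: encode the input into hidden features, decode it back, and train both halves to reduce reconstruction error. The sketch below is a deliberately tiny, pure-Python version with one hidden layer; the layer sizes, sigmoid encoder, linear decoder, squared-error cost and learning rate are all assumptions for illustration, and the network in the cited ICML 2012 paper is far larger and adds further structure.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyAutoencoder:
    """One hidden layer: h = sigmoid(W1 x + b1), x_hat = W2 h + b2 (illustrative)."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.gauss(0, 0.1) for _ in range(n_hidden)] for _ in range(n_in)]
        self.b2 = [0.0] * n_in

    def forward(self, x: Sequence[float]) -> Tuple[Vector, Vector]:
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        x_hat = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return h, x_hat

    def loss(self, x: Sequence[float]) -> float:
        """Reconstruction cost: half the squared error between x_hat and x."""
        _, x_hat = self.forward(x)
        return 0.5 * sum((a - b) ** 2 for a, b in zip(x_hat, x))

    def sgd_step(self, x: Sequence[float], lr: float) -> None:
        h, x_hat = self.forward(x)
        err = [a - b for a, b in zip(x_hat, x)]  # dL/dx_hat
        # Backpropagate to the hidden pre-activations before W2 is modified.
        dz = [
            sum(self.w2[i][j] * err[i] for i in range(len(err))) * h[j] * (1.0 - h[j])
            for j in range(len(h))
        ]
        for i, e in enumerate(err):
            for j, hj in enumerate(h):
                self.w2[i][j] -= lr * e * hj
            self.b2[i] -= lr * e
        for j, d in enumerate(dz):
            for k, xk in enumerate(x):
                self.w1[j][k] -= lr * d * xk
            self.b1[j] -= lr * d


data = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
ae = TinyAutoencoder(n_in=4, n_hidden=2, seed=0)
before = sum(ae.loss(x) for x in data)
for _ in range(500):
    for x in data:
        ae.sgd_step(x, lr=0.1)
after = sum(ae.loss(x) for x in data)
print(before, after)  # total reconstruction cost falls during training
```

Stacking, as on the slide, would typically mean training a further autoencoder on the hidden activations `h` produced by the one below it.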

Key results
• Face detector
• Human body detector
• Cat detector
• Totally unsupervised!
• ~85% correct in classifying face vs. no face
Le, et al., Building high-level features using large-scale unsupervised learning. ICML 2012
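
A single neuron acts as a "face detector" if thresholding its activation separates faces from non-faces. One simple way to score it, assumed here because the slide does not spell out the evaluation protocol, is the accuracy of the best threshold on a labeled face / no-face set:

```python
from typing import Sequence


def best_threshold_accuracy(activations: Sequence[float], is_face: Sequence[bool]) -> float:
    """Best accuracy of the rule 'predict face iff activation >= t' over all t."""
    n = len(activations)
    if n == 0:
        raise ValueError("need at least one labeled example")
    pairs = sorted(zip(activations, is_face))
    # Threshold below every activation: everything is predicted to be a face.
    correct = sum(is_face)
    best = correct
    i = 0
    while i < n:
        value = pairs[i][0]
        # Raise the threshold past every example with this activation value;
        # each such example is now predicted "no face".
        while i < n and pairs[i][0] == value:
            correct += -1 if pairs[i][1] else 1
            i += 1
        best = max(best, correct)
    return best / n


print(best_threshold_accuracy([0.1, 0.2, 0.8, 0.9], [False, False, True, True]))  # 1.0
```

Sorting makes the sweep over all candidate thresholds a single linear pass; tied activations are moved together because no threshold can separate them.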

ImageNet classification
• Random guess: 0.005%
• State of the art (Weston, Bengio '11): 9.5%
• Feature learning from raw pixels: 15.8%
ImageNet 2009 (10k categories): best published result 17% (Sanchez & Perronnin '11); our method 20%
Using only 1000 categories, our method achieves > 50%
Quoc Le
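
The random-guess row is chance accuracy, 1/K for uniform guessing among K categories. The slide does not give K for that row; the 0.005% figure corresponds to roughly 20,000 categories, which is an inference from the arithmetic rather than a number stated here. For comparison, the 10k-category ImageNet 2009 setting has a chance level of 0.01%:

```latex
\text{chance accuracy} = \frac{1}{K}, \qquad
\frac{1}{20{,}000} = 5 \times 10^{-5} = 0.005\%, \qquad
\frac{1}{10{,}000} = 10^{-4} = 0.01\%
```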

Scaling up deep learning (prior art vs. our work)
• # Examples: 100,000 vs. 10,000,000
• # Dimensions: 1,000 vs. 10,000
• # Parameters: 10,000,000 vs. 1,000,000,000
• Data set size: gigabytes vs. terabytes
• Learned features: edge filters from images vs. high-level features (face, cat detectors)
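
The two columns can be compared directly. A small sketch computing the scale-up factors from the slide's figures and the raw storage for each parameter count, assuming 4 bytes (32-bit floats) per parameter, which the slide does not state; the storage figure covers the weights only and ignores activations, gradients and any optimizer state:

```python
from typing import Dict

# Figures copied from the slide.
PRIOR_ART: Dict[str, int] = {"examples": 100_000, "dimensions": 1_000, "parameters": 10_000_000}
OUR_WORK: Dict[str, int] = {"examples": 10_000_000, "dimensions": 10_000, "parameters": 1_000_000_000}


def scale_factors(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, float]:
    """How many times larger each quantity is in `after` than in `before`."""
    return {key: after[key] / before[key] for key in before}


def parameter_bytes(num_params: int, bytes_per_param: int = 4) -> int:
    """Raw storage for the weights alone; 4 bytes per parameter assumes 32-bit floats."""
    return num_params * bytes_per_param


print(scale_factors(PRIOR_ART, OUR_WORK))
# {'examples': 100.0, 'dimensions': 10.0, 'parameters': 100.0}
print(parameter_bytes(OUR_WORK["parameters"]))  # 4000000000, i.e. 4 GB
```

Both the example count and the parameter count grow 100x, in line with the "100x larger" figure quoted elsewhere in the talk.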

Summary of scaling up
• Local connectivity (model parallelism)
• Asynchronous SGDs (clever optimization / data parallelism)
• RPCs
• Prefetching
• Single
• Removing slow machines
• Lots of optimization
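
Prefetching, one of the engineering items listed, overlaps data loading with computation. A minimal single-machine sketch using Python's standard `threading` and `queue` modules; the `load` callback and the buffer `depth` are hypothetical parameters, and the talk does not describe its own prefetching at this level of detail:

```python
import queue
import threading
from typing import Callable, Iterator, TypeVar

T = TypeVar("T")

_END = object()  # sentinel: the producer has finished


class _Failure:
    """Wraps an exception raised by the loader so the consumer can re-raise it."""

    def __init__(self, exc: BaseException) -> None:
        self.exc = exc


def prefetch(load: Callable[[int], T], num_batches: int, depth: int = 2) -> Iterator[T]:
    """Yield load(0), ..., load(num_batches - 1) in order, loading ahead in a thread.

    At most `depth` loaded batches wait in the buffer, so memory stays bounded
    while the consumer's work (e.g. an SGD step) overlaps with loading.
    """
    buf: queue.Queue = queue.Queue(maxsize=depth)

    def producer() -> None:
        try:
            for i in range(num_batches):
                buf.put(load(i))  # blocks while the buffer is full
        except BaseException as exc:
            buf.put(_Failure(exc))
            return
        buf.put(_END)

    # Daemon thread: if the consumer stops early, a producer blocked on put()
    # will not keep the interpreter alive.
    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _END:
            return
        if isinstance(item, _Failure):
            raise item.exc
        yield item


for batch in prefetch(lambda i: [i] * 3, num_batches=4):
    print(batch)  # [0, 0, 0], then [1, 1, 1], [2, 2, 2], [3, 3, 3]
```

The bounded queue is the key design choice: it lets loading run ahead of training without letting it run arbitrarily far ahead.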

Locally connected networks
[Diagram: an image feeding a layer of features, with the network split across Machine #1, Machine #2, Machine #3 and Machine #4]
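
Why a locally connected layer splits well across machines can be seen in one dimension. In the sketch below each output unit reads only a small window of the input with its own untied weights, so assigning contiguous blocks of units to machines means each machine needs only its own weights and a slice of the input. The 1-D setting, window size, stride and block partitioning are assumptions for illustration; the network in the talk is 2-D and vastly larger.

```python
from typing import List, Sequence


def locally_connected_1d(x: Sequence[float], weights: Sequence[Sequence[float]],
                         biases: Sequence[float], field: int, stride: int,
                         units: Sequence[int]) -> List[float]:
    """Outputs of the requested units of a 1-D locally connected layer.

    Unit j reads only x[j*stride : j*stride + field] and has its own weight
    vector weights[j] (no weight sharing, unlike a convolution).
    """
    out = []
    for j in units:
        start = j * stride
        patch = x[start:start + field]
        out.append(sum(w * v for w, v in zip(weights[j], patch)) + biases[j])
    return out


def partition_units(num_units: int, num_machines: int) -> List[range]:
    """Split unit indices into contiguous, nearly equal blocks, one per machine."""
    base, extra = divmod(num_units, num_machines)
    blocks, start = [], 0
    for m in range(num_machines):
        size = base + (1 if m < extra else 0)
        blocks.append(range(start, start + size))
        start += size
    return blocks


def input_span(block: range, field: int, stride: int) -> range:
    """Input indices a machine needs in order to compute its block of units."""
    return range(block[0] * stride, block[-1] * stride + field)


# Toy sizes (assumptions): 8 output units, windows of 4 inputs, stride 2.
n_units, field, stride = 8, 4, 2
n_in = (n_units - 1) * stride + field  # 18 inputs
x = [float(i % 5) for i in range(n_in)]
weights = [[0.1 * (j + k) for k in range(field)] for j in range(n_units)]
biases = [0.0] * n_units

full = locally_connected_1d(x, weights, biases, field, stride, range(n_units))
# Four "machines", as in the diagram, each computing only its own block of units.
blocks = partition_units(n_units, 4)
distributed = [y for b in blocks
               for y in locally_connected_1d(x, weights, biases, field, stride, b)]
print(distributed == full)  # True
```

With these sizes each unit has 4 weights rather than 18, each machine needs only 6 of the 18 inputs (for example, `input_span(blocks[1], field, stride)` is `range(4, 10)`), and neighbouring machines share just 2 inputs at the block boundary.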

Asynchronous parallel SGDs (Alex Smola's talk)
[Diagram: parameter server]
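
The parameter-server pattern can be sketched with threads inside one process. This is a toy under stated assumptions: a two-parameter linear model, noise-free synthetic data, and a lock-protected in-memory `ParameterServer` class (a hypothetical name) in place of the sharded, networked server of the real system. Each worker repeatedly fetches the current parameters, computes a gradient on a minibatch from its own data shard, and pushes it back without waiting for the other workers, so some gradients are computed from slightly stale parameters.

```python
import random
import threading
from typing import List, Sequence, Tuple

Example = Tuple[float, float]  # (x, y)


class ParameterServer:
    """Holds the shared parameters; workers fetch copies and push gradients."""

    def __init__(self, dim: int, lr: float) -> None:
        self._w = [0.0] * dim
        self._lr = lr
        self._lock = threading.Lock()

    def fetch(self) -> List[float]:
        with self._lock:
            return list(self._w)

    def push(self, grad: Sequence[float]) -> None:
        # Apply each gradient as soon as it arrives, whichever parameter
        # version the worker used to compute it (asynchronous update).
        with self._lock:
            for i, g in enumerate(grad):
                self._w[i] -= self._lr * g


def gradient(w: Sequence[float], batch: Sequence[Example]) -> List[float]:
    """Gradient of half the mean squared error for the model y ~ w[0] * x + w[1]."""
    g0 = g1 = 0.0
    for x, y in batch:
        err = w[0] * x + w[1] - y
        g0 += err * x
        g1 += err
    n = len(batch)
    return [g0 / n, g1 / n]


def worker(server: ParameterServer, shard: Sequence[Example], steps: int,
           batch_size: int, seed: int) -> None:
    rng = random.Random(seed)
    for _ in range(steps):
        w = server.fetch()  # may already be stale when the gradient is pushed
        batch = rng.sample(shard, batch_size)
        server.push(gradient(w, batch))


def train_async(data: Sequence[Example], num_workers: int = 4, steps: int = 500,
                batch_size: int = 8, lr: float = 0.1) -> List[float]:
    server = ParameterServer(dim=2, lr=lr)
    shards = [data[i::num_workers] for i in range(num_workers)]  # data parallelism
    threads = [threading.Thread(target=worker, args=(server, s, steps, batch_size, i))
               for i, s in enumerate(shards)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return server.fetch()


rng = random.Random(42)
data = [(x, 3.0 * x + 1.0) for x in (rng.uniform(-1, 1) for _ in range(400))]
w = train_async(data)
print(w)  # close to [3.0, 1.0]
```

Because of CPython's global interpreter lock this gives no real speedup; it only illustrates the update pattern, in which workers never wait for each other and the server applies whatever gradients arrive.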

Conclusions
• Scaled deep learning 100x larger using distributed training on 1000 machines
• Brain simulation -> cat neuron
• State-of-the-art performance on
  – Object recognition (ImageNet)
  – Action recognition
  – Cancer image classification
• Other applications
  – Speech recognition
  – Machine translation
[Summary figures: model parallelism; data parallelism with a parameter server; ImageNet accuracy; cat neuron; face neuron]

References
• Q.V. Le, M.A. Ranzato, R. Monga, M. Devin, G. Corrado, K. Chen, J. Dean, A.Y. Ng. Building high-level features using large-scale unsupervised learning. ICML, 2012.
• Q.V. Le, J. Ngiam, Z. Chen, D. Chia, P. Koh, A.Y. Ng. Tiled Convolutional Neural Networks. NIPS, 2010.
• Q.V. Le, W.Y. Zou, S.Y. Yeung, A.Y. Ng. Learning hierarchical spatio-temporal features for action recognition with independent subspace analysis. CVPR, 2011.
• Q.V. Le, J. Ngiam, A. Coates, A. Lahiri, B. Prochnow, A.Y. Ng. On optimization methods for deep learning. ICML, 2011.
• Q.V. Le, A. Karpenko, J. Ngiam, A.Y. Ng. ICA with Reconstruction Cost for Efficient Overcomplete Feature Learning. NIPS, 2011.
• Q.V. Le, J. Han, J. Gray, P. Spellman, A. Borowsky, B. Parvin. Learning Invariant Features for Tumor Signatures. ISBI, 2012.
• I.J. Goodfellow, Q.V. Le, A.M. Saxe, H. Lee, A.Y. Ng. Measuring invariances in deep networks. NIPS, 2009.
http://ai.stanford.edu/~quocle