When Feedback Doubles the Prelog in AWGN Networks - Sites ...


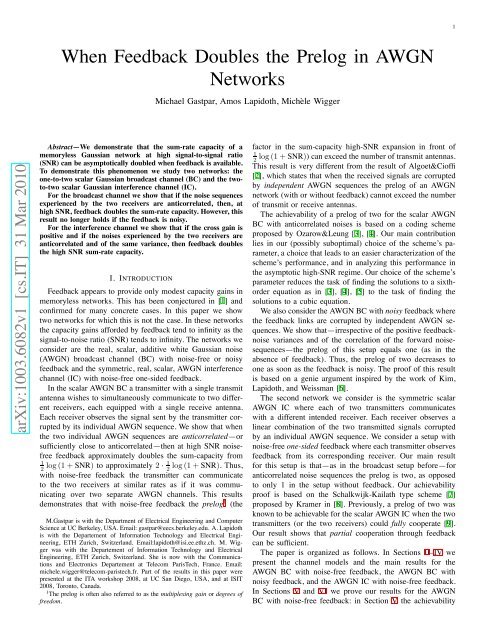

Fig. 1. The two-user AWGN BC with noise-free feedback.

of prelog 2 for anticorrelated noises and a more general achievability result for approximately anticorrelated noises; and in Section VI the rest of our results. In Section VII we prove our results for the AWGN BC with noisy feedback. In Sections VIII and IX we finally prove our results for the AWGN IC with noise-free one-sided feedback: in Section VIII the achievability of prelog 2 for anticorrelated noises; and in Section IX the rest of our results.

II. BROADCAST CHANNEL WITH NOISE-FREE FEEDBACK

A. The Model

The real, scalar, AWGN BC with noise-free feedback is depicted in Figure 1. Denoting the time-$t$ transmitted symbol by $x_t \in \mathbb{R}$, the symbol $Y_{1,t}$ that Receiver 1 receives at time $t$ is

$$Y_{1,t} = x_t + Z_{1,t}, \tag{1}$$

and the symbol $Y_{2,t}$ that Receiver 2 receives at time $t$ is

$$Y_{2,t} = x_t + Z_{2,t}, \tag{2}$$

where the sequence of pairs $\{(Z_{1,t}, Z_{2,t})\}$ is drawn independently and identically distributed (IID) according to a bivariate zero-mean Gaussian distribution of covariance matrix

$$\mathsf{K} = \begin{pmatrix} \sigma_1^2 & \rho_z \sigma_1 \sigma_2 \\ \rho_z \sigma_1 \sigma_2 & \sigma_2^2 \end{pmatrix}. \tag{3}$$

We assume that $\sigma_1^2$ and $\sigma_2^2$ are positive and refer to them as the noise variances and to $\rho_z \in [-1, 1]$ as the noise correlation coefficient.

The transmitter wishes to send Message $M_1$ to Receiver 1 and an independent message $M_2$ to Receiver 2. The messages $M_1$ and $M_2$ are assumed to be uniformly distributed over the sets $\mathcal{M}_1 \triangleq \{1, \ldots, \lfloor 2^{nR_1} \rfloor\}$ and $\mathcal{M}_2 \triangleq \{1, \ldots, \lfloor 2^{nR_2} \rfloor\}$, where $n$ denotes the blocklength and $R_1$ and $R_2$ the respective rates of transmission.

It is assumed that the transmitter has access to noise-free feedback from both receivers, i.e., that after sending $x_{t-1}$ it learns both outputs $Y_{1,t-1}$ and $Y_{2,t-1}$. The transmitter can thus compute its time-$t$ channel input as a function of both messages and all previous channel outputs:

$$X_t = f^{(n)}_{\mathrm{BC},t}\big(M_1, M_2, Y_1^{t-1}, Y_2^{t-1}\big), \quad t \in \{1, \ldots, n\}, \tag{4}$$

where the encoding function $f^{(n)}_{\mathrm{BC},t}$ is of the form

$$f^{(n)}_{\mathrm{BC},t} \colon \mathcal{M}_1 \times \mathcal{M}_2 \times \mathbb{R}^{t-1} \times \mathbb{R}^{t-1} \to \mathbb{R} \tag{5}$$

and where $Y_1^{t-1} \triangleq (Y_{1,1}, \ldots, Y_{1,t-1})$ and $Y_2^{t-1} \triangleq (Y_{2,1}, \ldots, Y_{2,t-1})$.

The channel inputs are subject to an expected average block-power constraint $P > 0$. Thus, in (4) we only allow for encoding functions $\{f^{(n)}_{\mathrm{BC},t}\}_{t=1}^{n}$ that satisfy

$$\frac{1}{n}\, \mathsf{E}\!\left[ \sum_{t=1}^{n} \Big( f^{(n)}_{\mathrm{BC},t}\big(M_1, M_2, Y_1^{t-1}, Y_2^{t-1}\big) \Big)^2 \right] \le P. \tag{6}$$

After the $n$-th channel use each receiver decodes its intended message based on its observed channel output sequence. Receiver 1 produces the guess

$$\hat{M}_1 = \phi_1^{(n)}(Y_1^n), \tag{7}$$

and Receiver 2 the guess

$$\hat{M}_2 = \phi_2^{(n)}(Y_2^n), \tag{8}$$

where the decoding functions $\phi_1^{(n)}$ and $\phi_2^{(n)}$ are of the form

$$\phi_1^{(n)} \colon \mathbb{R}^n \to \{1, \ldots, \lfloor 2^{nR_1} \rfloor\}, \tag{9}$$

$$\phi_2^{(n)} \colon \mathbb{R}^n \to \{1, \ldots, \lfloor 2^{nR_2} \rfloor\}. \tag{10}$$

A rate pair $(R_1, R_2)$ is said to be achievable if for every blocklength $n$ there exists a set of $n$ encoding functions $\{f^{(n)}_{\mathrm{BC},t}\}_{t=1}^{n}$ as in (5) satisfying the power constraint (6) and two decoding functions $\phi_1^{(n)}$ and $\phi_2^{(n)}$ as in (9) and (10) such that the probability of decoding error $\Pr\big[(M_1, M_2) \ne (\hat{M}_1, \hat{M}_2)\big]$ tends to 0 as the blocklength $n$ tends to infinity, i.e.,

$$\lim_{n \to \infty} \Pr\big[(M_1, M_2) \ne (\hat{M}_1, \hat{M}_2)\big] = 0.$$

The closure of the set of all achievable rate pairs $(R_1, R_2)$ is called the capacity region of this setup. The supremum of the sum $R_1 + R_2$ over all achievable rate pairs $(R_1, R_2)$ is called its sum-capacity, and we denote it by $C_{\mathrm{BC},\Sigma}(P, \sigma_1^2, \sigma_2^2, \rho_z)$. In this paper we are particularly interested in the prelog, which characterizes the logarithmic growth of the sum-capacity at high powers, and is defined as:

$$\lim_{P \to \infty} \frac{C_{\mathrm{BC},\Sigma}(P, \sigma_1^2, \sigma_2^2, \rho_z)}{\frac{1}{2} \log(1 + P)}. \tag{11}$$

B. Results

It is well known [10] that for the AWGN BC without feedback the prelog equals 1, irrespective of the noise correlation $\rho_z$. The following Theorem 1 shows that with feedback the prelog can be 2.

Theorem 1:

• If $\rho_z = -1$, then

$$\lim_{P \to \infty} \frac{C_{\mathrm{BC},\Sigma}(P, \sigma_1^2, \sigma_2^2, \rho_z)}{\frac{1}{2} \log(1 + P)} = 2. \tag{12}$$

• If $\rho_z \in (-1, 1)$, then

$$\lim_{P \to \infty} \frac{C_{\mathrm{BC},\Sigma}(P, \sigma_1^2, \sigma_2^2, \rho_z)}{\frac{1}{2} \log(1 + P)} = 1. \tag{13}$$
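The channel model (1)–(3) is easy to simulate, which helps to see why the anticorrelated case $\rho_z = -1$ is special. The following Python sketch is illustrative only and not part of the paper: the function names `sample_noise_pair` and `bc_outputs` are hypothetical, and the arguments `sigma1` and `sigma2` are assumed to be the noise standard deviations $\sigma_1, \sigma_2$ (so the variances are their squares). It draws one noise pair with covariance matrix $\mathsf{K}$ and, for $\rho_z = -1$, checks that the second output is a deterministic function of the first output and the channel input.

```python
import math
import random


def sample_noise_pair(sigma1, sigma2, rho_z, rng):
    """Draw (Z1, Z2): zero-mean jointly Gaussian with variances sigma1**2 and
    sigma2**2 and correlation coefficient rho_z, i.e. covariance matrix K in (3)."""
    g1 = rng.gauss(0.0, 1.0)
    g2 = rng.gauss(0.0, 1.0)
    z1 = sigma1 * g1
    # Mix g1 and an independent g2 so that Cov(Z1, Z2) = rho_z * sigma1 * sigma2.
    z2 = sigma2 * (rho_z * g1 + math.sqrt(max(0.0, 1.0 - rho_z ** 2)) * g2)
    return z1, z2


def bc_outputs(x, sigma1, sigma2, rho_z, rng):
    """One use of the broadcast channel: Y1 = x + Z1 and Y2 = x + Z2."""
    z1, z2 = sample_noise_pair(sigma1, sigma2, rho_z, rng)
    return x + z1, x + z2


if __name__ == "__main__":
    rng = random.Random(0)
    sigma1, sigma2, x = 1.0, 2.0, 0.7
    y1, y2 = bc_outputs(x, sigma1, sigma2, -1.0, rng)
    # For rho_z = -1 we have Z2 = -(sigma2 / sigma1) * Z1, so Y2 follows from Y1 and x.
    y2_from_y1 = -(sigma2 / sigma1) * (y1 - x) + x
    print(abs(y2 - y2_from_y1) < 1e-9)
```

For $\rho_z \in (-1, 1)$ the second Gaussian component `g2` carries positive weight, so no such deterministic relation between the two outputs exists.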

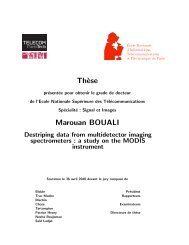

Fig. 4. The two-user AWGN IC with one-sided noise-free feedback.

diagonal covariance matrix $\begin{pmatrix} \sigma_{W1}^2 & 0 \\ 0 & \sigma_{W2}^2 \end{pmatrix}$.² We shall assume throughout that the feedback noise variances are positive:

$$\sigma_{W1}^2, \sigma_{W2}^2 > 0. \tag{22}$$

² For simplicity, we do not treat setups with correlated feedback noises or setups with feedback noises that are correlated with the forward noises.

In this setup the transmitter computes its channel inputs as

$$X_t = f^{(n)}_{\mathrm{BCNoisy},t}\big(M_1, M_2, V_1^{t-1}, V_2^{t-1}\big), \quad t \in \{1, \ldots, n\}, \tag{23}$$

where the encoding function $f^{(n)}_{\mathrm{BCNoisy},t}$ is of the form

$$f^{(n)}_{\mathrm{BCNoisy},t} \colon \mathcal{M}_1 \times \mathcal{M}_2 \times \mathbb{R}^{t-1} \times \mathbb{R}^{t-1} \to \mathbb{R}, \tag{24}$$

and where $V_1^{t-1} \triangleq (V_{1,1}, \ldots, V_{1,t-1})$ and $V_2^{t-1} \triangleq (V_{2,1}, \ldots, V_{2,t-1})$.

The channel input sequence is again subject to an expected average block-power constraint $P > 0$ as in (6).

The decoding rules, achievable rates, capacity region, sum-capacity and prelog are defined as in the previous section. The sum-capacity for this setup with noisy feedback is denoted by $C_{\mathrm{BCNoisy},\Sigma}(P, \sigma_1^2, \sigma_2^2, \rho_z, \sigma_{W1}^2, \sigma_{W2}^2)$.

B. Result

Noisy feedback does not increase the prelog.

Theorem 4: Irrespective of the correlation $\rho_z \in [-1, 1]$, the prelog is one:

$$\lim_{P \to \infty} \frac{C_{\mathrm{BCNoisy},\Sigma}(P, \sigma_1^2, \sigma_2^2, \rho_z, \sigma_{W1}^2, \sigma_{W2}^2)}{\frac{1}{2} \log(1 + P)} = 1.$$

Proof: See Section VII.

IV. INTERFERENCE CHANNEL WITH NOISE-FREE FEEDBACK

The real scalar AWGN IC with noise-free feedback is depicted in Figure 4. Unlike the broadcast channel, this channel has two transmitters, each of which wishes to send a message to its corresponding receiver.

A. The Model

Transmitter 1 wishes to send Message $M_1$ to Receiver 1, and Transmitter 2 wishes to send Message $M_2$ to Receiver 2. The messages $M_1$ and $M_2$ are as defined previously. We assume a symmetric channel: if at time $t$ Transmitter 1 sends the real symbol $x_{1,t}$ and Transmitter 2 sends the real symbol $x_{2,t}$, then Receiver 1 observes

$$Y_{1,t} = x_{1,t} + a\, x_{2,t} + Z_{1,t}, \tag{25}$$

and Receiver 2 observes

$$Y_{2,t} = a\, x_{1,t} + x_{2,t} + Z_{2,t}. \tag{26}$$

Here the "cross gain" $a$,

$$a > 0, \tag{27}$$

is a positive, real constant and the noise sequences $\{(Z_{1,t}, Z_{2,t})\}$ are IID according to a zero-mean bivariate Gaussian distribution of symmetric covariance matrix $\mathsf{K} = \sigma^2 \begin{pmatrix} 1 & \rho_z \\ \rho_z & 1 \end{pmatrix}$.

Each transmitter has access to noise-free feedback from its corresponding receiver. Thus, each of the two transmitters computes its time-$t$ channel input as

$$X_{\nu,t} = f^{(n)}_{\mathrm{IC},\nu,t}\big(M_\nu, Y_\nu^{t-1}\big), \quad \nu \in \{1, 2\},$$

for some encoding functions $f^{(n)}_{\mathrm{IC},\nu,t}$ of the form

$$f^{(n)}_{\mathrm{IC},\nu,t} \colon \mathcal{M}_\nu \times \mathbb{R}^{t-1} \to \mathbb{R}, \quad \nu \in \{1, 2\}.$$

The two channel input sequences are subject to the same average block-power constraint $P > 0$:

$$\frac{1}{n}\, \mathsf{E}\!\left[ \sum_{t=1}^{n} \Big( f^{(n)}_{\mathrm{IC},\nu,t}\big(M_\nu, Y_\nu^{t-1}\big) \Big)^2 \right] \le P, \quad \nu \in \{1, 2\}. \tag{28}$$

Decoding rules, achievable rate pairs, capacity region, sum-capacity, and prelog are defined as for the AWGN BC. We denote the sum-capacity of the symmetric AWGN IC by $C_{\mathrm{IC},\Sigma}(P, \sigma^2, \rho_z, a)$.

In this paper, we do not consider negative cross gains, because the (feedback) capacity region of the symmetric AWGN IC with cross gain $a$, power constraints $P$, noise variance $\sigma^2$, and noise correlation $\rho_z$ coincides with the (feedback) capacity region of the symmetric AWGN IC with cross gain $(-a)$, power constraints $P$, noise variance $\sigma^2$, and noise correlation $(-\rho_z)$. Indeed, in a symmetric AWGN IC with parameters $a, P, \sigma^2, \rho_z$, Transmitter 2 and Receiver 2 (or Transmitter 1 and Receiver 1) can simulate an AWGN IC with parameters $(-a), P, \sigma^2, (-\rho_z)$. This is accomplished if Transmitter 2 multiplies its inputs $\{X_{2,t}\}$ by $-1$ before feeding them to the channel (which obviously does not affect the transmit power) and if Transmitter 2 and Receiver 2 multiply the outputs $\{Y_{2,t}\}$ by $-1$ before processing them.

B. Result

Without feedback, the prelog of the AWGN IC equals 1; with noise-free feedback it can be 2, depending on the noise correlation $\rho_z \in [-1, 1]$.

Theorem 5: The prelog of the AWGN IC with noise-free feedback satisfies the following three statements.

• If $\rho_z = -1$, then the prelog is given by

$$\lim_{P \to \infty} \frac{C_{\mathrm{IC},\Sigma}(P, \sigma^2, \rho_z, a)}{\frac{1}{2} \log(1 + P)} = 2. \tag{29}$$

• If $\rho_z \in (-1, 1)$, then the prelog is given by

$$\lim_{P \to \infty} \frac{C_{\mathrm{IC},\Sigma}(P, \sigma^2, \rho_z, a)}{\frac{1}{2} \log(1 + P)} = 1. \tag{30}$$

• If $\rho_z = 1$, then the prelog satisfies

$$1 \le \lim_{P \to \infty} \frac{C_{\mathrm{IC},\Sigma}(P, \sigma^2, \rho_z, a)}{\frac{1}{2} \log(1 + P)} \le 2. \tag{31}$$

Moreover, when the cross gain $a$ is equal to 1, the prelog is more precisely given by

$$\lim_{P \to \infty} \frac{C_{\mathrm{IC},\Sigma}(P, \sigma^2, \rho_z, a)}{\frac{1}{2} \log(1 + P)} = 1. \tag{32}$$

Proof: See Section IX.

V. ACHIEVABILITY OF PRELOG LARGER THAN 1 FOR THE AWGN BC WITH NOISE-FREE FEEDBACK

In this section we consider the AWGN BC with noise-free feedback, and prove the achievability of prelog 2 when $\rho_z = -1$ and the more general achievability result (19). These asymptotic results are achieved by the Ozarow-Leung scheme [3], [4] when the scheme's parameter is chosen appropriately. For completeness, we briefly describe the Ozarow-Leung scheme with such an appropriate choice of parameter (Section V-A) and analyze its performance (Section V-B). We finally analyze the high-SNR asymptotics of the sum-rates achieved by this scheme (Sections V-C and V-D).

A. Scheme

The scheme by Ozarow and Leung [3], [4] (see also [5] and [8]) is based on the iterative strategy proposed by Schalkwijk and Kailath [7].

Prior to transmission, the transmitter maps the Message $M_\nu$, for $\nu \in \{1, 2\}$, into a message point $\Theta_\nu$:

$$\Theta_\nu \triangleq \frac{1}{2} - \frac{M_\nu - 1}{\lfloor 2^{nR_\nu} \rfloor}. \tag{33}$$

It then describes message point $\Theta_\nu$ in $n$ channel uses to Receiver $\nu$.

The transmission starts with an initialization phase consisting of the first two channel uses. Channel use $\nu \in \{1, 2\}$ is dedicated to Receiver $\nu$ only, and the transmitter sends

$$X_\nu = \sqrt{\frac{P}{P + \sigma_N^2}} \left( \sqrt{\frac{P}{\mathrm{Var}(\Theta_\nu)}}\, \Theta_\nu + N \right),$$

where $\mathrm{Var}(\Theta_\nu)$ denotes the variance of the random variable $\Theta_\nu$ and where $N$ is a zero-mean Gaussian random variable of variance $\sigma_N^2$ independent of the messages $M_1$ and $M_2$ and of the noise sequence $\{(Z_{1,t}, Z_{2,t})\}$.³ At the end of this initialization phase, each receiver estimates its desired message point. Specifically, Receiver $\nu$, for $\nu \in \{1, 2\}$, produces the estimate $\hat{\Theta}_{\nu,2}$ based on channel output $Y_{\nu,\nu}$:

$$\hat{\Theta}_{\nu,2} \triangleq \sqrt{\frac{\mathrm{Var}(\Theta_\nu)}{P}} \sqrt{\frac{P + \sigma_N^2}{P}}\, Y_{\nu,\nu} = \Theta_\nu + \sqrt{\frac{\mathrm{Var}(\Theta_\nu)}{P}} \sqrt{\frac{P + \sigma_N^2}{P}}\, (N + Z_{\nu,\nu}),$$

and thus has an estimation error:

$$\epsilon_{\nu,2} \triangleq \hat{\Theta}_{\nu,2} - \Theta_\nu = \sqrt{\frac{\mathrm{Var}(\Theta_\nu)}{P}} \sqrt{\frac{P + \sigma_N^2}{P}}\, (N + Z_{\nu,\nu}).$$

³ The described scheme can easily be turned into a deterministic scheme of the same asymptotic probability of error using a trick introduced in [11] and applied in the scheme in Section VIII-A.

The subsequent $n - 2$ channel uses are used to refine the receivers' estimates. To this end, the transmitter sends in each subsequent channel use $t \in \{3, \ldots, n\}$ a linear combination of Receiver 1's estimation error $\epsilon_{1,t-1}$ about message point $\Theta_1$ and of Receiver 2's estimation error $\epsilon_{2,t-1}$ about message point $\Theta_2$. Notice that the transmitter knows these estimation errors because it is cognizant of both message points $\Theta_1$ and $\Theta_2$, and through the feedback, also of the receivers' estimates. Specifically, at time $t \in \{3, \ldots, n\}$ the transmitter sends

$$X_t = \sqrt{\frac{P}{1 + \gamma^2 + 2\gamma |\rho_{t-1}|}} \cdot \left( \frac{\epsilon_{1,t-1}}{\sqrt{\alpha_{1,t-1}}} + \gamma\, \mathrm{sign}(\rho_{t-1})\, \frac{\epsilon_{2,t-1}}{\sqrt{\alpha_{2,t-1}}} \right),$$

where

$$\alpha_{\nu,t-1} \triangleq \mathrm{Var}(\epsilon_{\nu,t-1}),$$

and

$$\rho_{t-1} \triangleq \frac{\mathrm{Cov}[\epsilon_{1,t-1}, \epsilon_{2,t-1}]}{\sqrt{\mathrm{Var}(\epsilon_{1,t-1})}\, \sqrt{\mathrm{Var}(\epsilon_{2,t-1})}},$$

and where $\mathrm{sign}(\cdot)$ denotes the signum function, i.e., $\mathrm{sign}(x) = 1$ if $x \ge 0$ and $\mathrm{sign}(x) = -1$ otherwise. The parameter $\gamma$ is (possibly suboptimally) chosen as

$$\gamma \triangleq \frac{\sigma_1}{\sigma_2}. \tag{34}$$

After each channel use $t \in \{3, \ldots, n\}$ the two receivers update their message-point estimates according to the rule:

$$\hat{\Theta}_{\nu,t} = \hat{\Theta}_{\nu,t-1} - \frac{\mathrm{Cov}[\epsilon_{\nu,t-1}, Y_{\nu,t}]}{\mathrm{Var}(Y_{\nu,t})}\, Y_{\nu,t}, \quad \nu \in \{1, 2\}. \tag{35}$$

Each receiver decodes its intended message as follows. After the reception of the $n$-th channel output, each Receiver $\nu$ produces as its guess the message $\hat{M}_\nu$ whose message point is closest to its last estimate $\hat{\Theta}_{\nu,n}$, i.e.,

$$\hat{M}_\nu = \operatorname*{argmin}_{m \in \{1, \ldots, \lfloor 2^{nR_\nu} \rfloor\}} \big| \Theta_\nu(m) - \hat{\Theta}_{\nu,n} \big|,$$

where ties can be resolved arbitrarily.
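The first and last steps of the scheme, the message-point mapping (33) and the nearest-point decoding rule, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names `num_messages`, `message_point`, and `decode` are hypothetical, and the iterative refinement (35) between the two steps is deliberately left out, so `theta_hat` simply stands in for the final estimate $\hat{\Theta}_{\nu,n}$.

```python
import math


def num_messages(n, rate):
    """Size of the message set, floor(2^(n * rate))."""
    return math.floor(2 ** (n * rate))


def message_point(m, size):
    """Map message m in {1, ..., size} to its message point as in (33)."""
    return 0.5 - (m - 1) / size


def decode(theta_hat, size):
    """Return the message whose message point is closest to theta_hat.
    Ties are resolved in favour of the smaller index (any rule is allowed)."""
    return min(range(1, size + 1),
               key=lambda m: abs(message_point(m, size) - theta_hat))


if __name__ == "__main__":
    size = num_messages(n=10, rate=0.5)
    theta = message_point(5, size)
    # A final estimation error below half the spacing 1/size is decoded correctly.
    print(size, theta, decode(theta + 0.3 / size, size))  # prints: 32 0.375 5
```

Neighbouring message points are spaced $1/\lfloor 2^{nR_\nu} \rfloor$ apart, so decoding succeeds whenever the final estimation error is smaller than half that spacing; this is why the rate at which the error variances $\alpha_{\nu,t}$ decay determines the achievable rates.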

B. Analysis of Performance

We only present a rough analysis of the scheme. For details see [3], [4].

The probability of error of the described scheme tends to 0 as the blocklength $n$ tends to infinity, if:

$$R_\nu < \lim_{n \to \infty} \frac{1}{n} \sum_{t=2}^{n} \frac{1}{2} \log\!\left( \frac{\alpha_{\nu,t-1}}{\alpha_{\nu,t}} \right), \quad \nu \in \{1, 2\}; \tag{36}$$

here

$$\alpha_{\nu,1} \triangleq \mathrm{Var}(\Theta_\nu), \qquad \alpha_{\nu,2} \triangleq \frac{\mathrm{Var}(\Theta_\nu)}{P} \cdot \frac{P + \sigma_N^2}{P}\, (\sigma_N^2 + \sigma_\nu^2), \quad \nu \in \{1, 2\},$$

and the variances $\{\alpha_{1,t}\}_{t=3}^{n}$ and $\{\alpha_{2,t}\}_{t=3}^{n}$ are defined through the recursions:

$$\alpha_{1,t} = \alpha_{1,t-1}\, \frac{\frac{\sigma_1}{\sigma_2} P (1 - \rho_{t-1}^2) + \sigma_1^2 \left( \frac{\sigma_1}{\sigma_2} + \frac{\sigma_2}{\sigma_1} + 2|\rho_{t-1}| \right)}{\left( \frac{\sigma_1}{\sigma_2} + \frac{\sigma_2}{\sigma_1} + 2|\rho_{t-1}| \right) (P + \sigma_1^2)}, \tag{37}$$

and

$$\alpha_{2,t} = \alpha_{2,t-1}\, \frac{\frac{\sigma_2}{\sigma_1} P (1 - \rho_{t-1}^2) + \sigma_2^2 \left( \frac{\sigma_1}{\sigma_2} + \frac{\sigma_2}{\sigma_1} + 2|\rho_{t-1}| \right)}{\left( \frac{\sigma_1}{\sigma_2} + \frac{\sigma_2}{\sigma_1} + 2|\rho_{t-1}| \right) (P + \sigma_2^2)}. \tag{38}$$

The sequence of correlation coefficients $\{\rho_t\}_{t=2}^{n}$ is defined as

$$\rho_2 \triangleq \frac{\mathrm{Cov}[\epsilon_{1,2}, \epsilon_{2,2}]}{\sqrt{\mathrm{Var}(\epsilon_{1,2})\, \mathrm{Var}(\epsilon_{2,2})}} = \frac{\sigma_N^2}{\sqrt{\sigma_N^2 + \sigma_1^2}\, \sqrt{\sigma_N^2 + \sigma_2^2}} \tag{39}$$

and for $t \in \{3, \ldots, n\}$ through Recursion (40):

$$\rho_t = \mathrm{sign}(\rho_{t-1}) \cdot \frac{\sqrt{P + \sigma_1^2}\, \sqrt{P + \sigma_2^2}}{P (1 - |\rho_{t-1}|^2) + (\sigma_1^2 + \sigma_2^2 + 2\sigma_1\sigma_2 |\rho_{t-1}|)} \cdot \left( |\rho_{t-1}| \left( \frac{\sigma_1}{\sigma_2} + \frac{\sigma_2}{\sigma_1} + 2|\rho_{t-1}| \right) - \left( \frac{\sigma_1}{\sigma_2} + |\rho_{t-1}| \right) \left( \frac{\sigma_2}{\sigma_1} + |\rho_{t-1}| \right) \frac{P (P + \sigma_1^2 + \sigma_2^2 - \rho_z \sigma_1 \sigma_2)}{(P + \sigma_1^2)(P + \sigma_2^2)} \right). \tag{40}$$

We shall choose the variance $\sigma_N^2$ such that the sequence $\{\rho_t\}_{t=2}^{n}$ is constant in magnitude but alternates in sign, i.e., such that for some $\rho^* \in (0, 1)$:

$$\rho_t = (-1)^t \rho^*, \quad t \in \{2, \ldots, n\}. \tag{41}$$

This way, the ratios $\alpha_{1,t-1}/\alpha_{1,t}$ and $\alpha_{2,t-1}/\alpha_{2,t}$ are constant for $t \in \{3, \ldots, n\}$, and trivially the limit on the right-hand side of (36) equals

$$\lim_{n \to \infty} \frac{1}{n} \sum_{t=2}^{n} \frac{1}{2} \log\!\left( \frac{\alpha_{\nu,t-1}}{\alpha_{\nu,t}} \right) = \frac{1}{2} \log\!\left( \frac{P + \sigma_\nu^2}{\frac{\sigma_\nu^2}{\sigma_1^2 + \sigma_2^2 + 2\sigma_1\sigma_2\rho^*}\, P (1 - (\rho^*)^2) + \sigma_\nu^2} \right). \tag{42}$$

By Equation (39), we can set the correlation coefficient $\rho_2$ to every desired value in $[0, 1)$ if we choose the variance $\sigma_N^2$ appropriately. Therefore, Condition (41) can be satisfied for all $\rho^* \in [0, 1)$ with the following "fixed point" property: substituting $\rho^*$ for $\rho_{t-1}$ in Recursion (40) yields $\rho_t = -\rho^*$, and similarly, substituting $-\rho^*$ for $\rho_{t-1}$ in Recursion (40) yields $\rho_t = \rho^*$. In [3], [4] it was shown that Recursion (40) has at least one such "fixed point". Also, using simple algebraic manipulations, the "fixed points" $\rho^*$ of Recursion (40) are found to be the solutions in $(0, 1)$ to the following cubic equation in $\rho$:

$$\rho^3 + c_2 \rho^2 + c_1 \rho + c_0 = 0, \tag{43}$$

where

$$c_2 \triangleq -\frac{2\sigma_1\sigma_2}{P} - \frac{P + \sigma_1^2 + \sigma_2^2 + \rho_z \sigma_1 \sigma_2}{\sqrt{P + \sigma_1^2}\, \sqrt{P + \sigma_2^2}} - \frac{2\sigma_1^2 \sigma_2^2}{P \sqrt{P + \sigma_1^2}\, \sqrt{P + \sigma_2^2}}, \tag{44}$$

$$c_1 \triangleq -1 - \frac{\sigma_1^2 + \sigma_2^2}{P} - \frac{\sigma_1 \sigma_2 (\sigma_1^2 + \sigma_2^2)}{P \sqrt{P + \sigma_1^2}\, \sqrt{P + \sigma_2^2}} - \rho_z\, \frac{\sigma_1^2 + \sigma_2^2}{\sqrt{P + \sigma_1^2}\, \sqrt{P + \sigma_2^2}}, \tag{45}$$

$$c_0 \triangleq \frac{P + \sigma_1^2 + \sigma_2^2 - \rho_z \sigma_1 \sigma_2}{\sqrt{P + \sigma_1^2}\, \sqrt{P + \sigma_2^2}}. \tag{46}$$

Combining these observations with (36) and (42), we obtain the following lemma.

Lemma 1: Choosing the parameter $\gamma$ as in (34), the Ozarow-Leung scheme achieves all nonnegative rate pairs $(R_1, R_2)$ satisfying

$$R_1 < \frac{1}{2} \log\!\left( \frac{P + \sigma_1^2}{\frac{\sigma_1^2}{\sigma_1^2 + \sigma_2^2 + 2\sigma_1\sigma_2\rho^*}\, P (1 - (\rho^*)^2) + \sigma_1^2} \right), \tag{47}$$

$$R_2 < \frac{1}{2} \log\!\left( \frac{P + \sigma_2^2}{\frac{\sigma_2^2}{\sigma_1^2 + \sigma_2^2 + 2\sigma_1\sigma_2\rho^*}\, P (1 - (\rho^*)^2) + \sigma_2^2} \right), \tag{48}$$

where $\rho^*$ is a⁴ solution in the open interval $(0, 1)$ to the cubic equation (43).

⁴ If there is more than one solution in $[0, 1]$ to (43) we can freely choose one. Numerical results suggest that there is only one solution.

Remark 1: To characterize the scheme's performance for a general parameter $\gamma$ requires finding the solutions to a sixth-order equation [3], [4]. Our (possibly suboptimal) choice of $\gamma$ in (34) makes it possible to find the scheme's performance while solving only a cubic equation; see (43).

C. High-SNR asymptotics for constant $\rho_z = -1$

In this section we prove that the above described scheme achieves prelog 2 when $\rho_z = -1$.

Proposition 6: When $\rho_z = -1$ the Ozarow-Leung scheme with parameter $\gamma = \frac{\sigma_1}{\sigma_2}$ achieves prelog 2.

Proof: Let $\rho_z$ be $-1$. By Lemma 1, for every power $P$ and every $\epsilon > 0$ the Ozarow-Leung scheme with parameter $\gamma = \frac{\sigma_1}{\sigma_2}$ achieves a sum-rate

$$R_\Sigma(P) = \frac{1}{2} \log\!\left( \frac{P + \sigma_1^2}{\frac{\sigma_1^2 (1 + \rho^*(P))}{\sigma_1^2 + \sigma_2^2 + 2\sigma_1\sigma_2\rho^*(P)}\, P (1 - \rho^*(P)) + \sigma_1^2} \right) + \frac{1}{2} \log\!\left( \frac{P + \sigma_2^2}{\frac{\sigma_2^2 (1 + \rho^*(P))}{\sigma_1^2 + \sigma_2^2 + 2\sigma_1\sigma_2\rho^*(P)}\, P (1 - \rho^*(P)) + \sigma_2^2} \right) - \epsilon,$$

where the parameter $\rho^*(P)$ is defined as a solution in $(0, 1)$ to the cubic equation (43).

Since for each power $P > 0$ the parameter $\rho^*(P)$ lies in the open interval $(0, 1)$, we have

$$\frac{\sigma_1^2 (1 + \rho^*(P))}{\sigma_1^2 + \sigma_2^2 + 2\sigma_1\sigma_2\rho^*(P)} \le \frac{2\sigma_1^2}{\sigma_1^2 + \sigma_2^2}, \tag{49}$$

$$\frac{\sigma_2^2 (1 + \rho^*(P))}{\sigma_1^2 + \sigma_2^2 + 2\sigma_1\sigma_2\rho^*(P)} \le \frac{2\sigma_2^2}{\sigma_1^2 + \sigma_2^2}, \tag{50}$$

and we can thus lower bound the achievable sum-rate $R_\Sigma(P)$ by

$$R_\Sigma(P) \ge \frac{1}{2} \log\!\left( \frac{P + \sigma_1^2}{\frac{2\sigma_1^2}{\sigma_1^2 + \sigma_2^2}\, P (1 - \rho^*(P)) + \sigma_1^2} \right) + \frac{1}{2} \log\!\left( \frac{P + \sigma_2^2}{\frac{2\sigma_2^2}{\sigma_1^2 + \sigma_2^2}\, P (1 - \rho^*(P)) + \sigma_2^2} \right) - \epsilon. \tag{51}$$

The desired lower bound on the prelog,

$$\lim_{P \to \infty} \frac{R_\Sigma(P)}{\frac{1}{2} \log(1 + P)} \ge 2,$$

is then obtained from (51) by showing that as $P \to \infty$ the sequence of solutions $\{\rho^*(P)\}_{P > 0}$ tends to 1 approximately as $1 - P^{-1}$. This follows from Lemma 2 that we state next.

Lemma 2: Let $\rho_z$ be $-1$. For each power $P > 0$ let $\rho^*(P)$ be the solution in the interval $(0, 1)$ to (43). Then, for every $\epsilon > 0$

$$\lim_{P \to \infty} P^{1 - \epsilon} (1 - \rho^*(P)) = 0. \tag{52}$$

Proof: The result could be proved by first computing for each $P > 0$ the solution $\rho^*(P)$ to the cubic equation (43) (e.g., using Cardano's formula), and then analyzing the asymptotics of the solutions as $P \to \infty$. However, this line of attack is rather cumbersome. Instead, we show that if a sequence $\{\rho^*(P)\}_{P > 0}$ in $(0, 1)$ violates (52) for some $\epsilon > 0$, then there exists some power $P > 0$ such that $\rho^*(P)$ cannot be a solution to (43). For details, see Appendix A.

D. High-SNR asymptotics for $\rho_z(P)$ varying with $P$

We prove the more general asymptotic high-SNR achievability result in (19).

Proposition 7: Fix a sequence $\{\rho_z(P)\}_{P > 0}$ and define the limit $\beta$ as in (17):

$$\beta = \lim_{P \to \infty} \frac{-\log(1 + \rho_z(P))}{\log(P)}.$$

The Ozarow-Leung scheme with parameter $\gamma = \frac{\sigma_1}{\sigma_2}$ achieves sum-rates $\{R_\Sigma(P)\}_{P > 0}$ satisfying

$$\lim_{P \to \infty} \frac{R_\Sigma(P)}{\frac{1}{2} \log(1 + P)} \ge \min\{1 + \beta, 2\}. \tag{53}$$

Proof: By Lemma 1 and Inequalities (49) and (50) in the previous subsection, for each power $P > 0$ and $\epsilon > 0$, the Ozarow-Leung scheme with parameter $\gamma = \frac{\sigma_1}{\sigma_2}$ achieves a sum-rate $R_\Sigma(P)$ that is lower bounded by

$$R_\Sigma(P) \ge \frac{1}{2} \log\!\left( \frac{P + \sigma_1^2}{\frac{2\sigma_1^2}{\sigma_1^2 + \sigma_2^2}\, P (1 - \rho^*(P)) + \sigma_1^2} \right) + \frac{1}{2} \log\!\left( \frac{P + \sigma_2^2}{\frac{2\sigma_2^2}{\sigma_1^2 + \sigma_2^2}\, P (1 - \rho^*(P)) + \sigma_2^2} \right) - \epsilon, \tag{54}$$

where $\rho^*(P)$ is defined as a solution in $(0, 1)$ to the cubic equation (43). The desired asymptotic lower bound (53) then follows from (54) and the following Lemma 3.

Lemma 3: For each power $P > 0$, let a noise correlation $\rho_z(P) \in [-1, 1]$ be given, and let $\rho^*(P)$ be a solution in $(0, 1)$ to the cubic equation in (43). Then,

$$\lim_{P \to \infty} P^{1 - \epsilon} (1 - \rho^*(P)) = 0, \quad \forall \epsilon > \max\left\{ \frac{1 - \beta}{2},\, 0 \right\}.$$

Proof: See Appendix B.

VI. PROOFS OF THEOREMS 1 AND 2 AND NOTE 1 FOR THE AWGN BC WITH NOISE-FREE FEEDBACK

As mentioned in Note 2, Relations (12)–(14) in Theorem 1 can be directly obtained from Theorem 2. Nevertheless, for the reader's convenience we provide a separate proof for each of the relations in Theorem 1.

A. Proof of Theorem 1

Equation (14) follows from the following more general relation: irrespective of the noise correlation $\rho_z \in [-1, 1]$ and the noise variances $\sigma_1^2, \sigma_2^2 > 0$ the prelog satisfies

$$1 \le \lim_{P \to \infty} \frac{C_{\mathrm{BC},\Sigma}(P, \sigma_1^2, \sigma_2^2, \rho_z)}{\frac{1}{2} \log(1 + P)} \le 2. \tag{55}$$

The lower bound in (55) holds because without feedback the prelog of the AWGN BC equals 1 irrespective of $\rho_z \in [-1, 1]$ (see the capacity result of the AWGN BC without feedback in [10]). The upper bound in (55) holds because whenever a rate pair $(R_1, R_2)$ is achievable over the AWGN BC with noise-free feedback, the rates $R_1$ and $R_2$ cannot exceed the single-user capacities (with feedback) of the AWGN channels from the transmitter to the corresponding receiver, i.e.,

$$R_\nu \le \frac{1}{2} \log\!\left( 1 + \frac{P}{\sigma_\nu^2} \right), \quad \nu \in \{1, 2\}. \tag{56}$$

Equation (12) follows from Relation (55) and Proposition 6.

We next establish Equation (15). Notice that when $\rho_z = 1$ and $\sigma_1^2 = \sigma_2^2$, the two noise sequences $\{Z_{1,t}\}$ and $\{Z_{2,t}\}$ coincide, and thus the two receivers observe exactly the same output sequences, i.e., $Y_{1,t} = Y_{2,t}$ at all time instances $t \in \{1, \ldots, n\}$. Consequently, if Receiver 2 can decode $M_2$, then so can Receiver 1 and hence $(R_1, R_2)$ can be achievable only if $R_1 + R_2$ does not exceed the capacity of the channel to Receiver 1. This implies that the sum-capacity of the AWGN BC with noise-free feedback coincides with the single-user

8feedback capacity of <strong>the</strong> <strong>AWGN</strong> channel from <strong>the</strong> transmitterto one of <strong>the</strong> two receivers:C BC,Σ (P, σ 2 ,σ 2 ,ρ z =1)= 1 (2 log 1+ P )σ 2 . (57)This establishes <strong>the</strong> desired result <strong>in</strong> (15).We f<strong>in</strong>ally prove Equation (13). By Relation (55) it sufficesto show that when ρ z lies <strong>in</strong> <strong>the</strong> open <strong>in</strong>terval (−1, 1), <strong>the</strong>n<strong>the</strong> prelog is upper bounded by 1. Invok<strong>in</strong>g <strong>the</strong> cutset bound[12, Theorem 15.10.1] with a cut between <strong>the</strong> transmitter andboth receivers we can upper bound <strong>the</strong> sum-capacity asC BC,Σ (P, σ 2 1,σ 2 2,ρ z ) ≤ max I(X; Y 1 Y 2 ), (58)where <strong>the</strong> maximization is over all <strong>in</strong>put distributions on Xsatisfy<strong>in</strong>g E [ X 2] ≤ P .By<strong>the</strong>entropymaximiz<strong>in</strong>gpropertyof <strong>the</strong> Gaussian distribution under a covariance matrix constra<strong>in</strong>t[12], <strong>the</strong> upper bound <strong>in</strong> (58) is equivalent to⎛⎞PC BC,Σ (P, σ1 2 ,σ2 2 ,ρ z) ≤ 1 ⎝1+2σ 2 1 σ2 2σ 2 1 +σ2 2⎠ , (59)(1 − ρ 2 z)which establishes that for every ρ z ∈ (−1, 1) <strong>the</strong> prelog isupper bounded by 1.B. Proof of Theorem 2The asymptotic upper bound (18) follows aga<strong>in</strong> from <strong>the</strong>cutset bound and <strong>the</strong> entropy maximiz<strong>in</strong>g property of Gaussiandistributions under a covariance constra<strong>in</strong>t. 
In fact, apply<strong>in</strong>g<strong>the</strong> cutset bound with a cut between <strong>the</strong> transmitter and bothreceivers (<strong>in</strong> analogy to (59)) for given power P > 0, noisevariances σ 2 1 ,σ2 2 > 0, andnoisecorrelationρ z(P ) ∈ (−1, 1)we can upper bound <strong>the</strong> sum-capacity asC BC,Σ (P, σ1 2 ,σ2 2 ,ρ z(P ))⎛≤ 1 2 log ⎝1+≤ 1 2 log ⎛⎝1+σ 2 1 σ2 2σ 2 1 +σ2 22 σ2 1 σ2 2σ 2 1 +σ2 2⎞P⎠(1 −|ρ z (P )| 2 )⎞P⎠ , (60)(1 −|ρ z (P )|)where <strong>in</strong> <strong>the</strong> second <strong>in</strong>equality we used that ρ z (P ) ∈ (−1, 1).Moreover—as argued <strong>in</strong> (56)—<strong>the</strong> sum-capacity cannot exceed<strong>the</strong> sum of <strong>the</strong> two s<strong>in</strong>gle-user capacities of <strong>the</strong> channelsfrom <strong>the</strong> transmitter to <strong>the</strong> two receivers, i.e.,C BC,Σ (P, σ1,σ 2 2,ρ 2 z )≤ 1 (2 log 1+ P )σ12 + 1 (2 log 1+ P )σ22 . (61)The asymptotic upper bound (18) follows <strong>the</strong>n by comb<strong>in</strong><strong>in</strong>g(60) and (61) with <strong>the</strong> def<strong>in</strong>ition of α <strong>in</strong> (16).The asymptotic lower bound (19) follows from Proposition7 <strong>in</strong> Section V-D.C. Proof of Note 1We have to show that <strong>the</strong> achievability results <strong>in</strong> Theorem 1rema<strong>in</strong> valid also <strong>in</strong> <strong>the</strong> weaker setup with only one-sidedfeedback. The achievability results <strong>in</strong> (13) and (14) rema<strong>in</strong>valid because a prelog of 1 is trivially achievable for all valuesof ρ z ∈ [−1, 1] even without feedback [10]. The achievabilityresult <strong>in</strong> (12) rema<strong>in</strong>s valid because for ρ z = −1 <strong>the</strong> capacityregions of <strong>the</strong> setups with one-sided and two-sided feedbackco<strong>in</strong>cide. 
In fact, after each channel use t <strong>the</strong> transmitter canlocally compute <strong>the</strong> miss<strong>in</strong>g output Y 2,t (or Y 1,t )from<strong>the</strong>observed feedback output Y 1,t (or Y 2,t )and<strong>the</strong><strong>in</strong>putX t :Y 2,t = − σ 2σ 1(Y 1,t − X t )+X t ,t ∈{1,...,n}.VII. PROOF OF THEOREM 4 FOR THE <strong>AWGN</strong> BC WITHNOISY FEEDBACKS<strong>in</strong>ce for all correlation coefficients ρ z ∈ [−1, 1] prelog 1is achievable even without feedback [10], <strong>the</strong> <strong>in</strong>terest<strong>in</strong>g partof Theorem 4 is <strong>the</strong> converse result. We prove this converseresult us<strong>in</strong>g a genie-argument similar to [6].Our proof is based on <strong>the</strong> follow<strong>in</strong>g three steps. In <strong>the</strong>first step we <strong>in</strong>troduce a genie-aided <strong>AWGN</strong> BC withoutfeedback and show that its sum-capacity upper bounds <strong>the</strong>sum-capacity of <strong>the</strong> orig<strong>in</strong>al <strong>AWGN</strong> BC with noisy feedback.In <strong>the</strong> second step we <strong>in</strong>troduce a less noisy <strong>AWGN</strong> BC withnei<strong>the</strong>r genie-<strong>in</strong>formation nor feedback and show that its sumcapacityco<strong>in</strong>cides with <strong>the</strong> sum-capacity of <strong>the</strong> genie-aided<strong>AWGN</strong> BC. In <strong>the</strong> third step, we f<strong>in</strong>ally show that <strong>the</strong> prelogof <strong>the</strong> less noisy <strong>AWGN</strong> BC equals 1, irrespective of <strong>the</strong> noisevariances σ1,σ 2 2,σ 2 W 2 1 ,σ2 W 2 > 0 and <strong>the</strong> correlation coefficientρ z ∈ [−1, 1]. Thesethreestepsestablish<strong>the</strong>desiredconverseresult.We elaborate on <strong>the</strong>se three steps start<strong>in</strong>g with <strong>the</strong> firstone. 
The genie-aided AWGN BC is defined as the original AWGN BC without feedback, but with a genie that prior to transmission reveals the sequences $\{(Z_{1,t} + W_{1,t})\}_{t=1}^{n}$ and $\{(Z_{2,t} + W_{2,t})\}_{t=1}^{n}$ to the transmitter and both receivers. Notice that with this genie information, after each channel use $t$, the transmitter can locally compute the missing feedback outputs $V_{1,t}$ and $V_{2,t}$:
\[
V_{1,t} = X_t + (Z_{1,t} + W_{1,t}), \tag{62}
\]
\[
V_{2,t} = X_t + (Z_{2,t} + W_{2,t}). \tag{63}
\]
We can thus conclude that the sum-capacity of the genie-aided AWGN BC is at least as large as the sum-capacity of the original AWGN BC with feedback.

We next elaborate on the second step. The less noisy AWGN BC is described by the channel law:
\[
\tilde{Y}_{1,t} = x_t + \tilde{Z}_{1,t}, \tag{64}
\]
\[
\tilde{Y}_{2,t} = x_t + \tilde{Z}_{2,t}, \tag{65}
\]
where the noise sequences are defined as
\[
\tilde{Z}_{1,t} \triangleq Z_{1,t} - \mathsf{E}\big[Z_{1,t} \,\big|\, (Z_{1,t} + W_{1,t}), (Z_{2,t} + W_{2,t})\big], \tag{66}
\]
\[
\tilde{Z}_{2,t} \triangleq Z_{2,t} - \mathsf{E}\big[Z_{2,t} \,\big|\, (Z_{1,t} + W_{1,t}), (Z_{2,t} + W_{2,t})\big], \tag{67}
\]

and are of variances
\[
\mathrm{Var}\big(\tilde{Z}_{1,t}\big) = \sigma_1^2\, \frac{\sigma_{W_1}^2 \sigma_2^2 (1 - \rho_z^2) + \sigma_{W_1}^2 \sigma_{W_2}^2}{(\sigma_1^2 + \sigma_{W_1}^2)(\sigma_2^2 + \sigma_{W_2}^2) - \sigma_1^2 \sigma_2^2 \rho_z^2}, \tag{68}
\]
\[
\mathrm{Var}\big(\tilde{Z}_{2,t}\big) = \sigma_2^2\, \frac{\sigma_{W_2}^2 \sigma_1^2 (1 - \rho_z^2) + \sigma_{W_2}^2 \sigma_{W_1}^2}{(\sigma_1^2 + \sigma_{W_1}^2)(\sigma_2^2 + \sigma_{W_2}^2) - \sigma_1^2 \sigma_2^2 \rho_z^2}. \tag{69}
\]
By the following two observations, the sum-capacity of this less noisy AWGN BC coincides with the sum-capacity of the genie-aided AWGN BC. Firstly, the sum-capacity of the less noisy AWGN BC remains unchanged if prior to transmission a genie reveals the sequences $\{Z_{1,t} + W_{1,t}\}$ and $\{Z_{2,t} + W_{2,t}\}$ to the transmitter and both receivers. This follows because by Definitions (66) and (67) the genie-information $\{Z_{1,t} + W_{1,t}\}$ and $\{Z_{2,t} + W_{2,t}\}$ is independent of the reduced noise sequences $\{\tilde{Z}_{1,t}, \tilde{Z}_{2,t}\}$, and thus plays only the role of common randomness, which does not increase capacity. Secondly, the sum-capacity of the genie-aided AWGN BC coincides with the sum-capacity of the less noisy AWGN BC, when in this latter case the transmitter and both receivers additionally know the genie-information $\{(Z_{1,t} + W_{1,t})\}$ and $\{(Z_{2,t} + W_{2,t})\}$. This holds because, knowing the genie-information $\{(Z_{1,t} + W_{1,t})\}_{t=1}^{n}$ and $\{(Z_{2,t} + W_{2,t})\}_{t=1}^{n}$, the outputs $\tilde{Y}_{1,t}$ and $\tilde{Y}_{2,t}$ can be transformed into the outputs $Y_{1,t}$ and $Y_{2,t}$, and vice versa.

We finally elaborate on the third step.
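As a numerical preview of this third step, the following sketch evaluates the closed-form variances (68) and (69), cross-checks (68) against a direct LMMSE computation, and compares the single-user rate $\frac{1}{2}\log(1 + P/\min\{\cdot\})$ built from these variances (the expression that appears in (70)) with $\frac{1}{2}\log(1+P)$. It assumes that the feedback noises $W_{1,t}$ and $W_{2,t}$ are independent of each other and of $(Z_{1,t}, Z_{2,t})$; the function names and parameter values are illustrative and not taken from the paper.

```python
import math

def reduced_noise_variances(s1, s2, w1, w2, rho):
    """Closed forms (68)-(69). Arguments are the variances
    sigma_1^2, sigma_2^2, sigma_W1^2, sigma_W2^2 and the correlation rho_z."""
    det = (s1 + w1) * (s2 + w2) - s1 * s2 * rho ** 2
    v1 = s1 * (w1 * s2 * (1 - rho ** 2) + w1 * w2) / det
    v2 = s2 * (w2 * s1 * (1 - rho ** 2) + w1 * w2) / det
    return v1, v2

def lmmse_error_z1(s1, s2, w1, w2, rho):
    """Direct LMMSE error of Z1 given (Z1 + W1, Z2 + W2).
    Assumption: W1, W2 are independent of each other and of (Z1, Z2)."""
    c = rho * math.sqrt(s1 * s2)           # Cov(Z1, Z2)
    a11, a12, a22 = s1 + w1, c, s2 + w2    # covariance of the two observations
    det = a11 * a22 - a12 ** 2
    b1, b2 = s1, c                         # Cov(Z1, observations)
    explained = (b1 * b1 * a22 - 2 * b1 * b2 * a12 + b2 * b2 * a11) / det
    return s1 - explained

def prelog_ratio(P, s1, s2, w1, w2, rho):
    """Ratio of (1/2)log(1 + P/min variance) to (1/2)log(1 + P)."""
    v1, v2 = reduced_noise_variances(s1, s2, w1, w2, rho)
    return math.log(1 + P / min(v1, v2)) / math.log(1 + P)

# Illustrative values; rho = -1 is the case where noise-free feedback would help.
params = dict(s1=1.0, s2=2.0, w1=0.1, w2=0.3, rho=-1.0)
v1, _ = reduced_noise_variances(**params)
gap = abs(v1 - lmmse_error_z1(**params))
ratios = [prelog_ratio(10.0 ** k, **params) for k in (2, 4, 8, 16)]
print(gap, ratios)
```

For these values the ratio decreases towards 1 as $P$ grows, even though the noises are perfectly anticorrelated; the convergence is only logarithmic in $P$.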
The less noisy AWGN BC is a classical AWGN BC with neither feedback nor genie-information, and its sum-capacity is given by [10]:
\[
C_{\mathrm{BCLessNoisy},\Sigma}\big(P, \sigma_1^2, \sigma_2^2, \rho_z, \sigma_{W_1}^2, \sigma_{W_2}^2\big) = \frac{1}{2} \log\left(1 + \frac{P}{\min\big\{\mathrm{Var}(\tilde{Z}_{1,t}), \mathrm{Var}(\tilde{Z}_{2,t})\big\}}\right), \tag{70}
\]
where the variances $\mathrm{Var}(\tilde{Z}_{1,t})$ and $\mathrm{Var}(\tilde{Z}_{2,t})$ are defined in (68) and (69). By (68)–(70) the prelog of the less noisy AWGN BC equals 1, irrespective of the noise variances $\sigma_1^2, \sigma_2^2, \sigma_{W_1}^2, \sigma_{W_2}^2 > 0$ and the noise correlation $\rho_z \in [-1,1]$. This concludes the third step, and thus our proof.

VIII. ACHIEVABILITY OF PRELOG 2 FOR THE AWGN IC WITH NOISE-FREE FEEDBACK

We prove that the prelog of the AWGN IC with one-sided noise-free feedback equals 2 when $\rho_z = -1$. As in the previous section, this prelog is achieved with a Schalkwijk-Kailath-type scheme. More specifically, it is achieved by a straightforward extension of Kramer's memoryless-LMMSE scheme for interference channels [8, Section VI-B] to channels with correlated noises.

A. Scheme for $\rho_z = -1$

Prior to transmission, each transmitter maps its message into a message point as in (33). The transmitters then describe these message points to their intended receivers using $n$ channel uses.

The first three channel uses are part of an initialization procedure. In the first channel use both transmitters remain silent.
Via the feedback both transmitters then observe the Gaussian random variable $N \triangleq Z_{1,1} = -Z_{2,1}$, which will serve them as common randomness in future transmissions. In the second channel use Transmitter 2 again remains silent and Transmitter 1 sends:
\[
X_{1,2} = \sqrt{\frac{P}{P + \sigma_N^2}} \left( \sqrt{\frac{P}{\mathrm{Var}(\Theta_1)}}\, \Theta_1 + \frac{\sigma_N}{\sigma}\, N \right),
\]
where $\sigma_N$ will be defined later on. In the third channel use Transmitter 1 remains silent and Transmitter 2 sends:
\[
X_{2,3} = \sqrt{\frac{P}{P + \sigma_N^2}} \left( \sqrt{\frac{P}{\mathrm{Var}(\Theta_2)}}\, \Theta_2 + \frac{\sigma_N}{\sigma}\, N \right).
\]
At the end of this initialization phase Receiver 1 produces the estimate $\hat{\Theta}_{1,3} \triangleq \sqrt{\frac{P + \sigma_N^2}{P}} \sqrt{\frac{\mathrm{Var}(\Theta_1)}{P}}\, Y_{1,2}$ and Receiver 2 the estimate $\hat{\Theta}_{2,3} \triangleq \sqrt{\frac{P + \sigma_N^2}{P}} \sqrt{\frac{\mathrm{Var}(\Theta_2)}{P}}\, Y_{2,3}$. Their estimation errors are thus given by:
\[
\epsilon_{1,3} \triangleq \hat{\Theta}_{1,3} - \Theta_1 = \sqrt{\frac{\mathrm{Var}(\Theta_1)}{P}} \sqrt{\frac{P + \sigma_N^2}{P}}\, (N + Z_{1,2}),
\]
and
\[
\epsilon_{2,3} \triangleq \hat{\Theta}_{2,3} - \Theta_2 = \sqrt{\frac{\mathrm{Var}(\Theta_2)}{P}} \sqrt{\frac{P + \sigma_N^2}{P}}\, (N + Z_{2,2}).
\]

In the subsequent $(n-3)$ channel uses, the two transmitters transmit symbols in order to help the receivers refine these estimates. In channel use $t \in \{4,\ldots,n\}$, Transmitter 1 sends a scaled version of Receiver 1's estimation error $\epsilon_{1,t-1}$ about message point $\Theta_1$, and Transmitter 2 sends a scaled version of Receiver 2's estimation error $\epsilon_{2,t-1}$ about message point $\Theta_2$. Notice that each transmitter knows the estimation error of its corresponding receiver because it is cognizant of its own message point and, through the noise-free one-sided feedback, also of the estimate at its corresponding receiver.
Specifically, at time $t \in \{4,\ldots,n\}$ Transmitter 1 sends
\[
X_{1,t} = \sqrt{\frac{P}{\alpha_{1,t-1}}}\; \epsilon_{1,t-1},
\]
and Transmitter 2 sends
\[
X_{2,t} = \operatorname{sign}(\rho_{t-1}) \sqrt{\frac{P}{\alpha_{2,t-1}}}\; \epsilon_{2,t-1}.
\]
After each channel use $t \in \{4,\ldots,n\}$ the two receivers update their message-point estimates according to the updating rule (35) in Section V-A.

After the reception of the $n$-th channel output, Receiver $\nu$ produces as its guess the message $\hat{M}_\nu$ whose message point is closest to its estimate $\hat{\Theta}_{\nu,n}$, i.e.,
\[
\hat{M}_\nu = \operatorname*{argmin}_{m \in \{1,\ldots,\lfloor e^{nR_\nu} \rfloor\}} \big| \Theta_\nu(m) - \hat{\Theta}_{\nu,n} \big|,
\]
where ties can be resolved arbitrarily.
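The final decoding rule is a nearest-neighbour search over the message points. A minimal sketch follows, assuming purely for illustration that the message points are equally spaced on $[-1/2, 1/2]$; the actual mapping is the one in (33), which is not reproduced here, and the function names are hypothetical.

```python
import math

def message_points(n, rate):
    """Hypothetical equally spaced message points on [-1/2, 1/2].
    The number of messages is floor(e^{n R}), as in the decoding rule."""
    num = max(1, math.floor(math.exp(n * rate)))
    if num == 1:
        return [0.0]
    return [-0.5 + m / (num - 1) for m in range(num)]

def decode(theta_hat, points):
    """Return the 1-based index of the message point closest to theta_hat.
    min() keeps the first minimiser, one arbitrary way to resolve ties."""
    best = min(range(len(points)), key=lambda m: abs(points[m] - theta_hat))
    return best + 1

points = message_points(n=10, rate=0.3)    # floor(e^3) = 20 message points
guess = decode(points[6] + 0.01, points)   # estimate off by less than half the spacing
print(len(points), guess)
```

An estimate that lies within half the spacing of the transmitted message point is decoded correctly, which is why the analysis tracks the variance of the estimation error.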

B. Analysis

We present only a rough analysis of the scheme. For more details, see [8].

The probability of error of the described scheme tends to 0 as the blocklength $n$ tends to infinity, if for $\nu \in \{1,2\}$:
\[
R_\nu \le \lim_{n \to \infty} \frac{1}{n} \sum_{t=3}^{n} \frac{1}{2} \log\left( \frac{\alpha_{\nu,t-1}}{\alpha_{\nu,t}} \right), \tag{71}
\]
where $\alpha_{\nu,2} = \mathrm{Var}(\Theta_\nu)$;
\[
\alpha_{\nu,3} = \frac{\mathrm{Var}(\Theta_\nu)}{P} \cdot \frac{P + \sigma_N^2}{P}\, (\sigma_N^2 + \sigma^2), \qquad \nu \in \{1,2\}; \tag{72}
\]
and for $t \in \{4,\ldots,n\}$:
\[
\alpha_{\nu,t} = \alpha_{\nu,t-1}\, \frac{P a^2 (1 - |\rho_{t-1}|^2) + \sigma^2}{P (1 + a^2 + 2a|\rho_{t-1}|) + \sigma^2}, \qquad \nu \in \{1,2\}. \tag{73}
\]
The correlation coefficients $\{\rho_t\}_{t=3}^{n}$ are defined as
\[
\rho_3 = \frac{\sigma_N^2}{\sigma_N^2 + \sigma^2}, \tag{74}
\]
and for $t \in \{4,\ldots,n\}$ through Recursion (75). We shall choose the variance $\sigma_N^2$ such that the sequence $\{\rho_t\}_{t=3}^{n}$ is constant in magnitude but alternates in sign, i.e., such that for some $\rho^*_{\mathrm{IC}} \in (0,1)$:
\[
\rho_t = (-1)^t \rho^*_{\mathrm{IC}}, \qquad t \in \{3,\ldots,n\}. \tag{76}
\]
This way, the ratios $\frac{\alpha_{1,t-1}}{\alpha_{1,t}}$ and $\frac{\alpha_{2,t-1}}{\alpha_{2,t}}$ are constant for $t \in \{4,\ldots,n\}$, and trivially the limit on the right-hand side of (71) equals
\[
\lim_{n \to \infty} \frac{1}{n} \sum_{t=3}^{n} \frac{1}{2} \log\left( \frac{\alpha_{\nu,t-1}}{\alpha_{\nu,t}} \right) = \frac{1}{2} \log\left( \frac{P (1 + a^2 + 2a\rho^*_{\mathrm{IC}}) + \sigma^2}{P a^2 (1 - (\rho^*_{\mathrm{IC}})^2) + \sigma^2} \right). \tag{77}
\]

By Equation (74), we can set $\rho_3$ to every value in $[0,1)$ if we appropriately choose the variance $\sigma_N^2$. Therefore, Condition (76) can be satisfied for all $\rho^* \in [0,1)$ that correspond to "fixed points" of Recursion (75), i.e., for all $\rho^*$ such that substituting $\rho_{t-1} = \rho^*$ into Recursion (75) yields $\rho_t = -\rho^*$, and substituting $\rho_{t-1} = -\rho^*$ into Recursion (75) yields $\rho_t = \rho^*$.
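The "fixed-point" condition can be explored numerically. The sketch below implements one step of Recursion (75), searches by bisection for a $\rho^* \in (0,1)$ that the recursion maps to $-\rho^*$, and evaluates the right-hand side of (77) at that point. It is only an illustration under stated assumptions: $\rho_z = -1$, $a > 0$, natural logarithms, and arbitrarily chosen values of $P$, $a$ and $\sigma^2$; the function names are hypothetical.

```python
import math

def next_rho(rho, P, a, sigma2, rho_z):
    """One step of Recursion (75) for the correlation of the estimation errors."""
    r = abs(rho)
    d = P * (1 + a * a + 2 * a * r) + sigma2
    n = P * a * a * (1 - r * r) + sigma2
    bracket = (r
               - 2 * P * (a + r) * (1 + a * r) / d
               + P * (1 + a * r) ** 2
               * (P * (2 * a + r * (1 + a * a)) + sigma2 * rho_z) / d ** 2)
    return math.copysign(1.0, rho) * (d / n) * bracket

def alternating_fixed_point(P, a, sigma2, rho_z=-1.0, iters=200):
    """Bisection on h(rho) = next_rho(rho) + rho over (0, 1).
    A zero of h is a point that the recursion maps to its negative."""
    def h(rho):
        return next_rho(rho, P, a, sigma2, rho_z) + rho
    lo, hi = 1e-9, 1.0 - 1e-12
    if not (h(lo) < 0.0 < h(hi)):
        raise ValueError("no sign change on (0, 1) for these parameters")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if h(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def per_user_rate(rho_star, P, a, sigma2):
    """Right-hand side of (77), in nats per channel use."""
    num = P * (1 + a * a + 2 * a * rho_star) + sigma2
    den = P * a * a * (1 - rho_star ** 2) + sigma2
    return 0.5 * math.log(num / den)

P, a, sigma2 = 100.0, 1.0, 1.0             # illustrative values only
rho_star = alternating_fixed_point(P, a, sigma2)
print(rho_star,
      next_rho(rho_star, P, a, sigma2, -1.0),
      per_user_rate(rho_star, P, a, sigma2))
```

The bisection relies on a sign change of $\rho \mapsto \rho_t(\rho) + \rho$ on $(0,1)$, which holds for the values above but is checked at run time rather than assumed for arbitrary parameters. Because (75) depends on $\rho_{t-1}$ only through its magnitude and sign, a point mapped to $-\rho^*$ from $\rho^*$ is automatically mapped back to $\rho^*$ from $-\rho^*$.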
Using simple algebraic manipulations it can be seen that the set of such "fixed points" is given by the set of solutions in $[0,1)$ to the quartic equation
\[
\rho^4 + d_3 \rho^3 + d_2 \rho^2 + d_1 \rho + d_0 = 0, \tag{78}
\]
where
\[
d_3 = \frac{\sigma^2}{2aP}, \tag{79}
\]
\[
d_2 = -2 - \frac{\sigma^2 (4 + a\rho_z)}{2a^2 P}, \tag{80}
\]
\[
d_1 = -\frac{\sigma^2 (1 + 2a^2 + 2a\rho_z)}{2a^3 P} - \frac{\sigma^4}{a^3 P^2}, \tag{81}
\]
\[
d_0 = 1 + \frac{\sigma^2 (2a - \rho_z)}{2a^3 P}. \tag{82}
\]
Notice that Equation (78) has at least one solution in $[0,1)$, and thus Recursion (75) has at least one "fixed point" $\rho^*_{\mathrm{IC}}$ in $[0,1)$. This follows by the Intermediate-Value Theorem and because for $\rho = 0$ and $\rho_z = -1$ the left-hand side of (78) evaluates to $1 + \frac{\sigma^2 (2a+1)}{2a^3 P} > 0$, and for $\rho = 1$ and $\rho_z = -1$ it evaluates to $-\frac{\sigma^4}{a^3 P^2} < 0$. Combining these observations with (71) and (77) leads to the following lemma.

Lemma 4: Let power $P > 0$, noise variance $\sigma^2 > 0$, noise correlation $\rho_z \in [-1,1]$, and cross gain $a \neq 0$ be given. The scheme described in Section VIII-A achieves all rate pairs $(R_1, R_2)$ satisfying
\[
R_\nu < \frac{1}{2} \log\left( \frac{P (1 + a^2 + 2a\rho^*_{\mathrm{IC}}) + \sigma^2}{P a^2 (1 - (\rho^*_{\mathrm{IC}})^2) + \sigma^2} \right), \qquad \nu \in \{1,2\}, \tag{83}
\]
where $\rho^*_{\mathrm{IC}}$ is defined as a solution$^{5}$ in $(0,1)$ to the quartic equation (78).

C. High-SNR Asymptotics

Proposition 8: When $\rho_z = -1$ and $a > 0$ the above described scheme achieves prelog 2.

Proof: By Lemma 4, for each power $P > 0$ and $\epsilon > 0$ the scheme in Section VIII-A achieves a sum-rate
\[
R_\Sigma(P) = \log\left( \frac{P (1 + a^2 + 2a\rho^*_{\mathrm{IC}}) + \sigma^2}{P a^2 (1 - (\rho^*_{\mathrm{IC}})^2) + \sigma^2} \right) - \epsilon \;\ge\; \log\left( \frac{P (1 + a^2) + \sigma^2}{2 P a^2 (1 - \rho^*_{\mathrm{IC}}) + \sigma^2} \right) - \epsilon, \tag{84}
\]
where $\rho^*_{\mathrm{IC}}$ is defined as a solution in $(0,1)$ to the quartic equation (78), and where the inequality follows because $\rho^*_{\mathrm{IC}}$ lies in the interval $(0,1)$.

The desired asymptotic lower bound (29) follows from (84) and from the following Lemma 5.

Lemma 5: Let the noise variance $\sigma^2 > 0$, noise correlation $\rho_z = -1$, and cross gain $a > 0$ be given. For each power $P > 0$ let $\rho^*_{\mathrm{IC}}(P)$ be a solution in the interval $(0,1)$ to the quartic equation (78) in $\rho$. Then,
\[
\lim_{P \to \infty} P^{1-\epsilon} \big(1 - \rho^*_{\mathrm{IC}}(P)\big) = 0, \qquad \forall \epsilon > 0.
\]
Proof: See Appendix C.

IX. PROOF OF THEOREM 5 FOR THE AWGN IC WITH NOISE-FREE FEEDBACK

We first prove Relation (31), which follows from the following more general relation. Irrespective of the symmetric noise-variance $\sigma^2 > 0$, the noise correlation $\rho_z \in [-1,1]$, and the cross gain $a > 0$, the prelog satisfies
\[
1 \le \lim_{P \to \infty} \frac{C_{\mathrm{IC},\Sigma}(P, \sigma^2, \rho_z, a)}{\frac{1}{2} \log(1 + P)} \le 2. \tag{85}
\]
The lower bound in (85) can be achieved, e.g., by silencing Transmitter 1 and letting Transmitter 2 communicate its Message $M_2$ to Receiver 2 over the resulting interference-free AWGN channel $Y_{2,t} = X_{2,t} + Z_{2,t}$ at a rate $R_2 = \frac{1}{2} \log\left(1 + \frac{P}{\sigma^2}\right)$. The upper bound can be derived using the cutset bound and the entropy maximizing property of the Gaussian distribution under a covariance matrix constraint. In fact, applying the cutset bound with a cut between both transmitters and Receiver 2 on one side and Receiver 1 on the other side yields
\[
R_1 \le \max I(X_1 X_2; Y_1) = \frac{1}{2} \log\left( 1 + \frac{(1 + a)^2 P}{\sigma^2} \right),
\]
where the maximization is over all joint laws on the pair $(X_1, X_2)$ satisfying the power constraints $\mathsf{E}[X_1^2] \le P$ and $\mathsf{E}[X_2^2] \le P$. Similarly, applying the cutset bound with a cut between both transmitters and Receiver 1 on one side and Receiver 2 on the other side yields
\[
R_2 \le \max I(X_1 X_2; Y_2) = \frac{1}{2} \log\left( 1 + \frac{(1 + a)^2 P}{\sigma^2} \right).
\]
These two upper bounds establish the converse result in (31).

$^{5}$ If there is more than one such solution, then one can freely choose one of them.

\[
\rho_t = \operatorname{sign}(\rho_{t-1})\, \frac{P (1 + a^2 + 2a|\rho_{t-1}|) + \sigma^2}{P a^2 (1 - |\rho_{t-1}|^2) + \sigma^2} \cdot \Bigg( |\rho_{t-1}| - \frac{2 P (a + |\rho_{t-1}|)(1 + a|\rho_{t-1}|)}{P (1 + a^2 + 2a|\rho_{t-1}|) + \sigma^2} + \frac{P (1 + a|\rho_{t-1}|)^2}{\big(P (1 + a^2 + 2a|\rho_{t-1}|) + \sigma^2\big)^2} \Big( P \big(2a + |\rho_{t-1}|(1 + a^2)\big) + \sigma^2 \rho_z \Big) \Bigg). \tag{75}
\]

Equation (29) is established by the general Relation (85) and Proposition 8 in Section VIII-C.

We next establish Equation (32). Towards this end, we notice that for $a = 1$ and $\rho_z = 1$ the two output sequences $\{Y_{1,t}\}$ and $\{Y_{2,t}\}$ coincide. Therefore, whenever Receiver 1 can decode its message $M_1$ so can Receiver 2, and similarly whenever Receiver 2 can decode $M_2$ so can Receiver 1.
Thus, for $a = 1$ and $\rho_z = 1$ the feedback capacity of our AWGN IC coincides with the feedback capacity of the AWGN MACs from both transmitters to one of the two receivers. Since these AWGN MACs have prelog 1 (with or without feedback) [13], [14], [15], Relation (32) follows.

We finally show that irrespective of the cross gain $a > 0$, for $\rho_z \in (-1,1)$ the prelog is upper bounded by 1, i.e.,
\[
\lim_{P \to \infty} \frac{C_{\mathrm{IC},\Sigma}(P, \sigma^2, \rho_z, a)}{\frac{1}{2} \log(1 + P)} \le 1, \qquad \rho_z \in (-1,1). \tag{86}
\]
Combined with (85) this establishes (30).

We prove (86) based on a genie-argument and a generalized Sato-MAC bound [16], similar to the upper bounds in [17], [18]. Our proof consists of the following three steps. In the first step, we consider a scenario where prior to transmission a genie reveals the symbols $U^n = Z_1^n - \frac{1}{a} Z_2^n$ to Receiver 1. This obviously can only increase the sum-capacity of our channel. In the second step, we apply Sato's MAC-bound argument [16] to the genie-aided AWGN IC obtained in the first step:$^{6}$ we show that the capacity of this IC is included in the capacity of the MAC that results from the IC when Receiver 1 is required to decode both messages and Receiver 2 no message at all.

$^{6}$ Unlike in Sato's setup, here both transmitters have feedback. As we shall see, Sato's MAC-bound argument applies unchanged also to feedback-setups, as the argument affects only the receivers.
The inclusion can be proved based on the following observation. Whenever Receiver 1 has successfully decoded Message $M_1$, it can reconstruct the inputs $X_1^n$ and also the outputs observed at Receiver 2:
\[
Y_2^n = \frac{1}{a} (Y_1^n - X_1^n) + U^n. \tag{87}
\]
Consequently, under this assumption it can decode Message $M_2$ in the same way as Receiver 2. This implies that for fixed encoding strategies applied at the two transmitters, the minimum probability of error in the MAC setup cannot exceed the minimum probability of error in the IC setup, which proves the desired inclusion.

In the third step we show that the MAC to Receiver 1 has prelog no larger than 1. Combined with the previous two steps this yields the desired upper bound (86). Before elaborating on this third step, we recall some properties of the considered MAC: its channel law is described by
\[
Y_{1,t} = X_{1,t} + a X_{2,t} + Z_{1,t}, \qquad t \in \{1,\ldots,n\};
\]
its two transmitters observe the generalized feedback signals $\{Y_{1,t}\}$ and $\{Y_{2,t}\}$; and before the transmission starts its receiver learns the genie-information $U^n$.

We now prove that the prelog of this MAC is upper bounded by 1. To this end we fix an arbitrary sequence of blocklength-$n$, rates-$(R_1, R_2)$ coding schemes for the considered MAC such that the probability of error $\epsilon(n)$ tends to zero as $n$ tends to infinity.
Then, for every blocklength $n$ we have:
\begin{align*}
R_1 + R_2 &\le \frac{1}{n} I(M_1, M_2; Y_1^n, U^n) + \frac{\epsilon(n)}{n} \\
&= \frac{1}{n} I(M_1, M_2; Y_1^n \mid U^n) + \frac{\epsilon(n)}{n} \\
&= \frac{1}{n} \sum_{t=1}^{n} \Big( h(Y_{1,t} \mid Y_1^{t-1}, U^n) - h(Y_{1,t} \mid Y_1^{t-1}, M_1, M_2, U^n) \Big) + \frac{\epsilon(n)}{n} \\
&\le \frac{1}{n} \sum_{t=1}^{n} \Big( h(Y_{1,t} \mid U_t) - h(Y_{1,t} \mid Y_1^{t-1}, M_1, M_2, Y_2^{t-1}, U^n) \Big) + \frac{\epsilon(n)}{n} \\
&= \frac{1}{n} \sum_{t=1}^{n} \Big( h(Y_{1,t} \mid U_t) - h(Y_{1,t} \mid X_{1,t}, X_{2,t}, U_t) \Big) + \frac{\epsilon(n)}{n} \\
&= \frac{1}{n} \sum_{t=1}^{n} I(Y_{1,t}; X_{1,t}, X_{2,t} \mid U_t) + \frac{\epsilon(n)}{n} \\
&\le \frac{1}{2} \log\left( 1 + \frac{(1 + a)^2 P}{\sigma^2\, \frac{1 - \rho_z^2}{1 + a^2 - 2a\rho_z}} \right) + \frac{\epsilon(n)}{n},
\end{align*}
where the first inequality follows by Fano's inequality; the first equality follows by the independence of the genie-information $U^n$ and the messages $M_1$ and $M_2$; the second inequality follows because conditioning cannot increase differential entropy and because the vector $Y_2^{t-1}$ can be computed as a function of $M_1$, $Y_1^{t-1}$, and $U^{t-1}$, see (87); the third equality follows because the input $X_{1,t}$ is a function of the Message $M_1$ and the feedback outputs $Y_1^{t-1}$, and similarly $X_{2,t}$ is a function of $M_2$ and $Y_2^{t-1}$, and because of the Markov relation
\[
(M_1, M_2, Y_1^{t-1}, Y_2^{t-1}, U^{t-1}, U_{t+1}^{n}) - (X_{1,t}, X_{2,t}, U_t) - Y_{1,t};
\]
and the last inequality follows because the Gaussian distribution maximizes differential entropy under a covariance constraint. Since by assumption the probabilities of error $\epsilon(n)$ tend to 0 as $n \to \infty$, the sum-rate of the considered scheme must satisfy
\[
R_1 + R_2 \le \frac{1}{2} \log\left( 1 + \frac{(1 + a)^2 P}{\frac{\sigma^2 (1 - \rho_z^2)}{1 + a^2 - 2a\rho_z}} \right),
\]
and thus the sum-capacity of the MAC to Receiver 1 satisfies
\[
C_{\mathrm{MAC},\Sigma}(P, \sigma^2, \rho_z, a) \le \frac{1}{2} \log\left( 1 + \frac{(1 + a)^2 P}{\frac{\sigma^2 (1 - \rho_z^2)}{1 + a^2 - 2a\rho_z}} \right).
\]
It immediately follows that for $a > 0$ and $\rho_z \in (-1,1)$ the prelog is upper bounded by 1, which concludes the third step and the proof of (86).

ACKNOWLEDGMENT

The authors thank Prof. Frans M. J.
Willems, TU E<strong>in</strong>dhoven,for po<strong>in</strong>t<strong>in</strong>g <strong>the</strong>m to [5], which <strong>in</strong>spired <strong>the</strong> <strong>in</strong>vestigationlead<strong>in</strong>g to this work. This work was supported <strong>in</strong> part byNSF grants CNS-0627024 and CCF-0830428.A. Proof of Lemma 2APPENDIXS<strong>in</strong>ce for every P > 0 <strong>the</strong> parameter ρ ∗ (P ) lies <strong>in</strong> <strong>the</strong><strong>in</strong>terval (0, 1):lim P 1−ɛ (1 − ρ ∗ (P )) ≥ 0, ∀ɛ >0.P →∞Thus, we have to prove thatlim P 1−ɛ (1 − ρ ∗ (P )) ≤ 0, ∀ɛ >0. (88)P →∞To this end, we <strong>in</strong>troduce for every P>0 <strong>the</strong> parameterwhich by (43) and (44)–(46) satisfiesg ∗ (P ) 1 − ρ ∗ (P ), (89)0=− (g ∗ (P )) 3 + γ 2 (P )(g ∗ (P )) 2 + γ 1 (P )g ∗ (P )+γ 0 (P ),(90)whereγ 2 (P )=3− 2σ 1σ 2P− P + σ2 1 + σ2 2 + ρ z σ 1 σ√ √ 2P + σ21 P + σ222σ 2 1 σ2 2−P √ √ , (91)P + σ12 P + σ2(2)Pγ 1 (P )=−2 1 − √ √P + σ21 P + σ22+ (2 + ρ z)σ1 2 +(2+ρ z )σ2 2 +2ρ z σ 1 σ√ √ 2P + σ21 P + σ22+ σ2 1 + σ2 2 +4σ 1σ 2P+ σ 1σ 2 (σ1 2 +4σ 1 σ 2 + σ2)2P √ P + σ12 (γ 0 (P )=− σ2 1 +2σ 1 σ 2 + σ 2 2P√P + σ22, (92))P1+ρ z √ √P + σ21 P + σ22− σ 1σ 2 (σ1 2 +2σ 1σ 2 + σ2 2)P √ √ . (93)P + σ12 P + σ22Prov<strong>in</strong>g (88) is <strong>the</strong>n equivalent to prov<strong>in</strong>glim P 1−ɛ g ∗ (P ) ≤ 0, ∀ɛ >0. (94)P →∞We shall prove (94) by contradiction, and thus assume that<strong>the</strong>re exists an ɛ>0 such thatlim P 1−ɛ g ∗ (P ) > 0. (95)P →∞By our assumption, <strong>the</strong> parameter{}ɛ ∗ sup ɛ : lim P 1−ɛ g ∗ (P ) > 0 , (96)P →∞is strictly positive, and we can choose an ɛ 1 <strong>in</strong> <strong>the</strong> open <strong>in</strong>terval(0,ɛ ∗ ).In <strong>the</strong> rest of this appendix we show that under ourassumptionlim ∆ BC(P ) > 0, (97)P →∞where for each P>0 we def<strong>in</strong>e(∆ BC (P ) P 2−ɛ∗ −ɛ 1− (g ∗ (P )) 3 + γ 2 (P )(g ∗ (P )) 2)+γ 1 (P )g ∗ (P )+γ 0 (P ) . 
(98)

Inequality (97) implies that there exist (finite) values $P > 0$ for which $\Delta_{\mathrm{BC}}(P) > 0$ and thus the cubic equation (90) is violated. This leads to the desired contradiction.

In the following we establish Inequality (97) by proving the following three limits:
\[
\lim_{P \to \infty} P^{2 - \epsilon^* - \epsilon_1} \Big( -(g^*(P))^3 + \gamma_2(P) (g^*(P))^2 \Big) > 0, \tag{99}
\]
\[
\lim_{P \to \infty} P^{2 - \epsilon^* - \epsilon_1}\, \gamma_1(P)\, g^*(P) = 0, \tag{100}
\]
\[
\lim_{P \to \infty} P^{2 - \epsilon^* - \epsilon_1}\, \gamma_0(P) = 0. \tag{101}
\]
We first notice that
\[
\lim_{P \to \infty} \gamma_2(P) = 2, \tag{102}
\]
\[
\lim_{P \to \infty} \gamma_1(P) = 0, \tag{103}
\]

13lim γ 0(P )=0, (104)P →∞and thus by cont<strong>in</strong>uity <strong>the</strong> solutions to <strong>the</strong> cubic equation (90)tend to <strong>the</strong> solutions to <strong>the</strong> cubic equation −g 3 +2g 2 =0.S<strong>in</strong>ce for this latter equation g =0is <strong>the</strong> only solution <strong>in</strong> <strong>the</strong><strong>in</strong>terval [0, 1], itmustholdthatlimP →∞ g∗ (P )=0. (105)( )Inequality (99) is <strong>the</strong>n proved as follows. S<strong>in</strong>ce ɛ ∗ +ɛ 12 0, (106)limP →∞which comb<strong>in</strong>ed with limits (102) and (105) yields <strong>the</strong> desired<strong>in</strong>equality (99):lim P 2−ɛ∗ −ɛ 1(−(g ∗ (P )) 3 + γ 2 (P )(g ∗ (P )) 2)P →∞(= lim P 1− ɛ∗ +ɛ 1 22 g ∗ (P ))(−g ∗ (P )+γ 2 (P ))P →∞> 0.To prove equations (100) and (101) we employ <strong>the</strong> follow<strong>in</strong>glimit()lim P P1 − √ √ = σ2 1 + σ2 2, (107)P →∞ P + σ21 P + σ222which can be proved with Bernoulli-de l’Hôpital’s rule. S<strong>in</strong>ce(ɛ ∗ + ɛ 1 ) > 0, Limit(107)comb<strong>in</strong>edwith<strong>the</strong>def<strong>in</strong>itionofγ 0 (P ) <strong>in</strong> (93) establishes Equation (101). Similarly, s<strong>in</strong>ce ɛ 1 >0, Limit(107)comb<strong>in</strong>edwith<strong>the</strong>def<strong>in</strong>itionofγ 1 (P ) <strong>in</strong> (92)yieldslim P 1− ɛ 12 γ1 (P )=0. (108)P →∞Moreover, s<strong>in</strong>ce (ɛ ∗ + ɛ1 2 ) >ɛ∗ by <strong>the</strong> def<strong>in</strong>ition of ɛ ∗ :lim P 1−ɛ∗ −ɛ 1/2 g ∗ (P )=0, (109)P →∞which comb<strong>in</strong>ed with (108) establishes <strong>the</strong> desired equality(100).B. Proof of Lemma 3The first part of <strong>the</strong> proof is analogous to <strong>the</strong> proof <strong>in</strong>Appendix A. 
Again, since for each $P > 0$ the parameter $\rho^*(P)$ lies in the interval $(0,1)$, the lower bound
\[
\lim_{P \to \infty} P^{1-\epsilon} \big(1 - \rho^*(P)\big) \ge 0, \qquad \forall \epsilon > \max\left\{ \frac{1 - \beta}{2}, 0 \right\}
\]
trivially holds, and the interesting part is to prove
\[
\lim_{P \to \infty} P^{1-\epsilon} \big(1 - \rho^*(P)\big) \le 0, \qquad \forall \epsilon > \max\left\{ \frac{1 - \beta}{2}, 0 \right\}. \tag{110}
\]
Define for every $P > 0$
\[
g^*(P) \triangleq 1 - \rho^*(P),
\]
which lies in $(0,1)$ and by (43) and (44)–(46) satisfies
\[
-(g^*(P))^3 + \gamma_2(P) (g^*(P))^2 + \gamma_1(P)\, g^*(P) + \gamma_0(P) = 0, \tag{111}
\]
where in this appendix, in the definitions of $\gamma_2(P)$, $\gamma_1(P)$, and $\gamma_0(P)$ (Definitions (91)–(93)), the correlation coefficient $\rho_z$ should be replaced by $\rho_z(P)$. Instead of proving (110), we shall prove the equivalent limit
\[
\lim_{P \to \infty} P^{1-\epsilon} g^*(P) \le 0, \qquad \forall \epsilon > \max\left\{ \frac{1 - \beta}{2}, 0 \right\}. \tag{112}
\]
The proof is by contradiction. Thus, assume that there exists an $\epsilon > \max\left\{ \frac{1 - \beta}{2}, 0 \right\}$ satisfying
\[
\lim_{P \to \infty} P^{1-\epsilon} g^*(P) > 0.
\]
(113)P →∞Under this assumption, <strong>the</strong> parameter{}ɛ ∗ sup ɛ : lim P 1−ɛ g ∗ (P ) > 0 , (114)P →∞{is larger than max 1−β2},andwecanchooseɛ , 0 1 <strong>in</strong> <strong>the</strong>( { }open <strong>in</strong>terval max 1−β2 , 0 ,ɛ).Toestablish<strong>the</strong>desired∗contradiction, we prove thatlim ∆ BC,2(P ) > 0, (115)P →∞where we def<strong>in</strong>e(∆ BC,2 (P ) P 2−ɛ∗ −ɛ 1− (g ∗ (P )) 3 + γ 2 (P )(g ∗ (P )) 2)+γ 1 (P )g ∗ (P )+γ 0 (P ) .This implies that for some values P>0 <strong>the</strong> term ∆ BC,2 (P ) >0 and thus Equation (111) is violated, which leads to <strong>the</strong>desired contradiction.Us<strong>in</strong>g similar steps as for <strong>the</strong> proof of Equations (99) and(100) <strong>in</strong> Appendix A, it can be shown that(lim P 2−ɛ∗ −ɛ 1− (g ∗ (P )) 3 + γ 2 (P )(g ∗ (P )) 2P →∞)+γ 1 (P )g ∗ (P ) > 0.It <strong>the</strong>refore suffices to prove(116)lim P 2−ɛ∗ −ɛ 1γ 0 (P )=0, (117)P →∞<strong>in</strong> order to establish (115). Towards prov<strong>in</strong>g (117), we firstrewrite γ 0 (P ) asγ 0 (P )=− σ2 1 +2σ 1σ 2 + σ22(( P)P1 − √ √P + σ21 P + σ22+(1+ρ z (P ))and notice that <strong>the</strong> follow<strong>in</strong>g limit((lim P (1−ɛ∗ −ɛ 1)P →∞1 −P√P + σ21√P + σ22σ 1 σ 2−P √ √),P + σ12 P + σ22)P√ √P + σ21 P + σ22

14P+(1+ρ z (P )) √ √ +δ 2 (P )(gIC ∗ (P P + σ21 P + σ2 ))2 + δ 1 (P )g ∗ (P )+δ 0 (P )=0, (126)2) whereσ 1 σ 2−P √ √ =0,P + σ12 P + σ22δ 3 (P ) −4 − σ22aP , (127)(118)δ 2 (P ) 4 − σ2 (4 + aρ z − 3a)directly leads to <strong>the</strong> desired result (117). We now prove2a 2 PLimit (118). Obviously, s<strong>in</strong>ce (ɛ 1 + ɛ ∗ ) > 0:=4− 2σ2 (1 − a)lim P 1−ɛ∗ −ɛ 1σ 1 σ 2P →∞ P √ a 2 , (128)P√ =0, (119)P + σ12 P + σ22 δ 1 (P ) σ2 (−a 2 +2a 2 ρ z +8a +2aρ z +1)2a 3 + σ4Pa 3 P 2and by (107) also) =lim P 1−ɛ∗ −ɛ 1P(1 σ2 (−3a 2 +6a +1)2a 3 + σ4P a 3 P 2 , (129)− √ √ =0. (120)P →∞P + σ21 P + σ22 δ 0 (P ) − σ2 (1 + ρ z )(1 + 2a + a 2 )2a 3 −σ4Pa 3 P 2Moreover, as we shall shortly see, by <strong>the</strong> def<strong>in</strong>ition of <strong>the</strong>parameter β, Equation(17),forallβ ′ 0 such thatComb<strong>in</strong>ed with (119) and (120) this limit establishes (118)and thus (117), and concludes <strong>the</strong> proof.lim P 1−ɛ gIC(P ∗ ) > 0. (132)P →∞We are left with prov<strong>in</strong>g Limit (121). By Equation (17), i.e.,By this assumption, <strong>the</strong> parameter− log(1 + ρ z (P )){}β = limP →∞ log(P )ɛ ∗ sup ɛ : lim P 1−ɛ gIC ∗ (P ) > 0 , (133)P →∞<strong>the</strong>re exists for every ɛ ′ > 0 an unbounded <strong>in</strong>creas<strong>in</strong>g sequence is strictly positive, and we can choose an ɛ 1 <strong>in</strong> <strong>the</strong> open <strong>in</strong>terval{P k } k∈N such that(0,ɛ ∗ ).(β − ɛ ′ ) log(P k ) ≤−log(1 + ρ z (P k )), k ∈ N, In <strong>the</strong> rest of this appendix we prove thati.e., such thatlim P 2−ɛ∗ −ɛ 1∆ IC (P ) > 0, (134)P →∞P −ɛ′k≥ P β−2ɛ′ (1 + ρ z (P k )), k ∈ N. 
(123) where we def<strong>in</strong>e for each P>0:S<strong>in</strong>ce <strong>the</strong> sequence P −ɛ′ktends to 0 as k →∞and s<strong>in</strong>ce <strong>the</strong> ∆ IC (P ) (gIC(P ∗ )) 4 + δ 3 (P )(gIC(P ∗ )) 3right-hand side of (123) is nonnegative for k ∈ N, wecan+δ 2 (P )(gIC ∗conclude that(P ))2 + δ 1 (P )gIC ∗ (P )+δ 0(P ).lim P Inequality (134) implies that <strong>the</strong>re exist (f<strong>in</strong>ite) values P >β−2ɛ′ (1 + ρ z (P k )) = 0.k→∞ 0 such that ∆ IC > 0 and thus <strong>the</strong> quartic equation (126) isF<strong>in</strong>ally, s<strong>in</strong>ce this argument holds for any ɛ ′ violated. This leads to <strong>the</strong> desired contradiction.> 0, Limit(121)We prove (134) by establish<strong>in</strong>g <strong>the</strong> follow<strong>in</strong>g three <strong>in</strong>equalities:is established.(C. Proof of Lemma 5lim P 2−ɛ∗ −ɛ 1((g∗IC ) 4 + δ 3 (P )(gIC(P ∗ )) 3)The proof of Lemma 5 is similar to <strong>the</strong> proof of Lemma 2P →∞)<strong>in</strong> Appendix A.Aga<strong>in</strong>, s<strong>in</strong>ce for every P>0 <strong>the</strong> parameter ρ ∗ IC (P ) lies <strong>in</strong>+δ 2 (P )(gIC(P ∗ )) 2 > 0, (135)<strong>the</strong> <strong>in</strong>terval (0, 1) we have to prove thatlim P 1−ɛ (1 − ρ ∗ limIC(P )) ≤ 0, ∀ɛ >0. (124)P 2−ɛ∗ −ɛ 1δ 1 (P )gIC ∗ (P )=0, (136)P →∞P →∞limTo this end, we <strong>in</strong>troduce for every P>0 <strong>the</strong> parameterP 2−ɛ∗ −ɛ 1δ 0 (P )=0. (137)P →∞gIC ∗ (P ) 1 − ρ∗ IC (P ), (125) To this end, we first notice thatwhich by (78) and (79)—(82) satisfieslim δ 3(P ) = −4, (138)P →∞(gIC ∗ (P ))4 + δ 3 (P )(gIC ∗ (P ))3 lim δ 2(P ) = 4, (139)P →∞