Data Mining Rule-based Classifiers Classification Rules “if…then ...


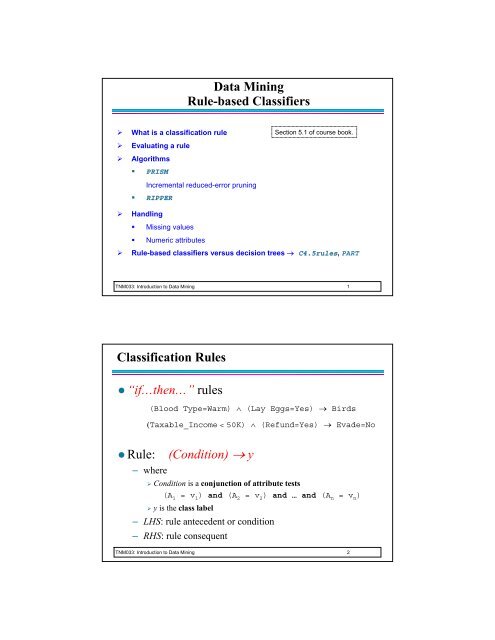

Data Mining
Rule-based Classifiers

‣ What is a classification rule
‣ Evaluating a rule
‣ Algorithms
  • PRISM
  • Incremental reduced-error pruning
  • RIPPER
‣ Handling
  • Missing values
  • Numeric attributes
‣ Rule-based classifiers versus decision trees → C4.5rules, PART

Section 5.1 of course book.

TNM033: Introduction to Data Mining

Classification Rules

• “if…then…” rules
  (Blood Type=Warm) ∧ (Lay Eggs=Yes) → Birds
  (Taxable_Income < 50K) ∧ (Refund=Yes) → Evade=No
• Rule: (Condition) → y
  – where
    ‣ Condition is a conjunction of attribute tests
      (A₁ = v₁) and (A₂ = v₂) and … and (Aₙ = vₙ)
    ‣ y is the class label
  – LHS: rule antecedent or condition
  – RHS: rule consequent

Rule-based Classifier (Example)

| Name | Blood Type | Give Birth | Can Fly | Live in Water | Class |
|---|---|---|---|---|---|
| human | warm | yes | no | no | mammals |
| python | cold | no | no | no | reptiles |
| salmon | cold | no | no | yes | fishes |
| whale | warm | yes | no | yes | mammals |
| frog | cold | no | no | sometimes | amphibians |
| komodo | cold | no | no | no | reptiles |
| bat | warm | yes | yes | no | mammals |
| pigeon | warm | no | yes | no | birds |
| cat | warm | yes | no | no | mammals |
| leopard shark | cold | yes | no | yes | fishes |
| turtle | cold | no | no | sometimes | reptiles |
| penguin | warm | no | no | sometimes | birds |
| porcupine | warm | yes | no | no | mammals |
| eel | cold | no | no | yes | fishes |
| salamander | cold | no | no | sometimes | amphibians |
| gila monster | cold | no | no | no | reptiles |
| platypus | warm | no | no | no | mammals |
| owl | warm | no | yes | no | birds |
| dolphin | warm | yes | no | yes | mammals |
| eagle | warm | no | yes | no | birds |

R1: (Give Birth = no) ∧ (Can Fly = yes) → Birds
R2: (Give Birth = no) ∧ (Live in Water = yes) → Fishes
R3: (Give Birth = yes) ∧ (Blood Type = warm) → Mammals
R4: (Give Birth = no) ∧ (Can Fly = no) → Reptiles
R5: (Live in Water = sometimes) → Amphibians

Motivation

• Consider the rule set
  – Attributes A, B, C, and D can have values 1, 2, and 3
    A = 1 ∧ B = 1 → Class = Y
    C = 1 ∧ D = 1 → Class = Y
    Otherwise, Class = N
• How to represent it as a decision tree?
  – The rules need a common attribute
    A = 1 ∧ B = 1 → Class = Y
    A = 1 ∧ B = 2 ∧ C = 1 ∧ D = 1 → Class = Y
    A = 1 ∧ B = 3 ∧ C = 1 ∧ D = 1 → Class = Y
    A = 2 ∧ C = 1 ∧ D = 1 → Class = Y
    A = 3 ∧ C = 1 ∧ D = 1 → Class = Y
    Otherwise, Class = N
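The claim that the expanded rule set (with A as the common attribute) is equivalent to the compact one can be checked by brute force. The following is a minimal sketch; the function names `compact_rules` and `expanded_rules` are illustrative and not part of the slides.

```python
from itertools import product

def compact_rules(a, b, c, d):
    """Original rule set: two rules plus the default class N."""
    return "Y" if (a == 1 and b == 1) or (c == 1 and d == 1) else "N"

def expanded_rules(a, b, c, d):
    """Rewritten rule set in which every rule tests attribute A first."""
    rules = [
        a == 1 and b == 1,
        a == 1 and b == 2 and c == 1 and d == 1,
        a == 1 and b == 3 and c == 1 and d == 1,
        a == 2 and c == 1 and d == 1,
        a == 3 and c == 1 and d == 1,
    ]
    return "Y" if any(rules) else "N"

# Each of A, B, C, D takes a value in {1, 2, 3}: 81 combinations in total.
combos = list(product((1, 2, 3), repeat=4))
mismatches = [x for x in combos if compact_rules(*x) != expanded_rules(*x)]
print("combinations checked:", len(combos))
print("mismatches:", len(mismatches))
```

The two rule sets agree on every combination, but the tree-friendly form needs five rules where the original needs two, which is the motivation for learning rules directly.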

Motivation

[Figure: decision tree over attributes A, B, C, and D with leaves Y and N, representing the rule set]

Application of Rule-Based Classifier

• A rule r covers an instance x if the attributes of the instance satisfy the condition (LHS) of the rule

R1: (Give Birth = no) ∧ (Can Fly = yes) → Birds
R2: (Give Birth = no) ∧ (Live in Water = yes) → Fishes
R3: (Give Birth = yes) ∧ (Blood Type = warm) → Mammals
R4: (Give Birth = no) ∧ (Can Fly = no) → Reptiles
R5: (Live in Water = sometimes) → Amphibians

| Name | Blood Type | Give Birth | Can Fly | Live in Water | Class |
|---|---|---|---|---|---|
| hawk | warm | no | yes | no | ? |
| grizzly bear | warm | yes | no | no | ? |

The rule R1 covers a hawk ⇒ Class = Bird
The rule R3 covers the grizzly bear ⇒ Class = Mammal
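The notion of a rule covering an instance can be sketched in a few lines of Python. The encoding below (a rule as a name, a dictionary of attribute tests, and a class label) and the helper names `covers` and `covering_rules` are assumptions made for illustration.

```python
# Rules R1-R5 from the slide: (name, condition as attribute -> value, class label).
RULES = [
    ("R1", {"Give Birth": "no", "Can Fly": "yes"}, "Birds"),
    ("R2", {"Give Birth": "no", "Live in Water": "yes"}, "Fishes"),
    ("R3", {"Give Birth": "yes", "Blood Type": "warm"}, "Mammals"),
    ("R4", {"Give Birth": "no", "Can Fly": "no"}, "Reptiles"),
    ("R5", {"Live in Water": "sometimes"}, "Amphibians"),
]

def covers(condition, instance):
    """A rule covers an instance if every attribute test in its LHS holds."""
    return all(instance.get(attr) == value for attr, value in condition.items())

def covering_rules(instance):
    """Return (rule name, predicted class) for every rule that covers the instance."""
    return [(name, label) for name, cond, label in RULES if covers(cond, instance)]

hawk = {"Blood Type": "warm", "Give Birth": "no",
        "Can Fly": "yes", "Live in Water": "no"}
grizzly_bear = {"Blood Type": "warm", "Give Birth": "yes",
                "Can Fly": "no", "Live in Water": "no"}

print(covering_rules(hawk))          # [('R1', 'Birds')]
print(covering_rules(grizzly_bear))  # [('R3', 'Mammals')]
```

Returning a list rather than a single label makes it visible when an instance is covered by no rule or by several rules, cases a complete classifier has to resolve.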

Building Classification Rules

• Direct Method
  ‣ Extract rules directly from data
  ‣ e.g.: RIPPER, Holte’s 1R (OneR)
• Indirect Method
  ‣ Extract rules from other classification models (e.g. decision trees)
  ‣ e.g.: C4.5rules

A Direct Method: Sequential Covering

• Let E be the training set
  – Extract rules one class at a time

For each class C
  1. Initialize set S with E
  2. While S contains instances in class C
     3. Learn one rule R for class C
     4. Remove from S the training records covered by the rule R

Goal: to create rules that cover many examples of a class C and none (or very few) of other classes

A Direct Method: Sequential Covering

• How to learn a rule for a class C?
  1. Start from an empty rule {} → class = C
  2. Grow a rule by adding a test to LHS (a = v)
  3. Repeat Step (2) until stopping criterion is met

Two issues:
  – How to choose the best test? Which attribute to choose?
  – When to stop building a rule?

Example: Generating a Rule

[Figure: three scatter plots of class “a” and class “b” instances in the x-y plane, one per step of growing the rule, with split lines at x = 1.2 and y = 2.6]

If {} then class = a
If (x > 1.2) then class = a
If (x > 1.2) and (y > 2.6) then class = a

• Possible rule set for class “b”:
  If (x ≤ 1.2) then class = b
  If (x > 1.2) and (y ≤ 2.6) then class = b
• Could add more rules, get “perfect” rule set

Simple Covering Algorithm

• Goal: Choose a test that improves a quality measure for the rules.
  – E.g. maximize rule’s accuracy
• Similar to situation in decision trees: problem of selecting an attribute to split on
  – Decision tree algorithms maximize overall purity
• Each new test reduces rule’s coverage:
  • t: total number of instances covered by rule
  • p: positive examples of the class predicted by rule
  • t – p: number of errors made by rule
  • Rule’s accuracy = p/t

[Figure: space of examples, showing the region covered by the rule so far and the smaller region covered by the rule after adding a new term]

When to Stop Building a Rule

• When the rule is perfect, i.e. accuracy = 1
• When the increase in accuracy gets below a given threshold
• When the training set cannot be split any further
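The quantities p and t, and the way each added test shrinks coverage, can be illustrated on a few rows. This is a minimal sketch: the eight rows are taken from the vertebrate example (only two attributes are kept), and the helper name `evaluate` is hypothetical.

```python
# Eight rows from the vertebrate example, reduced to two attributes.
ROWS = [
    {"Give Birth": "yes", "Can Fly": "no",  "Class": "mammals"},   # human
    {"Give Birth": "no",  "Can Fly": "no",  "Class": "reptiles"},  # python
    {"Give Birth": "no",  "Can Fly": "no",  "Class": "fishes"},    # salmon
    {"Give Birth": "yes", "Can Fly": "no",  "Class": "mammals"},   # whale
    {"Give Birth": "yes", "Can Fly": "yes", "Class": "mammals"},   # bat
    {"Give Birth": "no",  "Can Fly": "yes", "Class": "birds"},     # pigeon
    {"Give Birth": "no",  "Can Fly": "yes", "Class": "birds"},     # owl
    {"Give Birth": "no",  "Can Fly": "yes", "Class": "birds"},     # eagle
]

def evaluate(condition, rows, target):
    """Return (p, t): positives covered and total instances covered by the rule."""
    covered = [r for r in rows if all(r[a] == v for a, v in condition.items())]
    p = sum(r["Class"] == target for r in covered)
    return p, len(covered)

for condition in ({},
                  {"Can Fly": "yes"},
                  {"Give Birth": "no"},
                  {"Can Fly": "yes", "Give Birth": "no"}):
    p, t = evaluate(condition, ROWS, "birds")
    accuracy = p / t if t else 0.0
    print(condition, "p =", p, "t =", t, "accuracy =", round(accuracy, 2))
```

On these rows the empty rule covers all 8 instances with accuracy 3/8, the test Can Fly = yes gives 3/4, and adding Give Birth = no gives 3/3: coverage falls as accuracy rises.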

PRISM Algorithm

For each class C
  Initialize E to the training set
  While E contains instances in class C
    Create a rule R with an empty left-hand side that predicts class C
    Until R is perfect (or there are no more attributes to use) do
      For each attribute A not mentioned in R, and each value v,
        Consider adding the condition A = v to the left-hand side of R
      Select A and v to maximize the accuracy p/t
        (break ties by choosing the condition with the largest p)
      Add A = v to R
    Remove the instances covered by R from E

The steps from “Create a rule R” to “Add A = v to R” learn one rule.

Available in WEKA

Rule Evaluation in PRISM

t: Number of instances covered by rule
p: Number of instances covered by rule that belong to the positive class

• Produce rules that don’t cover negative instances, as quickly as possible
• Disadvantage: may produce rules with very small coverage
  – Special cases or noise? (overfitting)
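The PRISM pseudocode can be sketched in Python as follows. This is an illustrative sketch, not WEKA's implementation: the function names `learn_one_rule` and `prism` are assumptions, classes and attribute values are visited in sorted order for reproducibility, and the training data is the vertebrate table from the rule-based classifier example (names omitted).

```python
ATTRIBUTES = ["Blood Type", "Give Birth", "Can Fly", "Live in Water"]
TABLE = """\
warm yes no no mammals
cold no no no reptiles
cold no no yes fishes
warm yes no yes mammals
cold no no sometimes amphibians
cold no no no reptiles
warm yes yes no mammals
warm no yes no birds
warm yes no no mammals
cold yes no yes fishes
cold no no sometimes reptiles
warm no no sometimes birds
warm yes no no mammals
cold no no yes fishes
cold no no sometimes amphibians
cold no no no reptiles
warm no no no mammals
warm no yes no birds
warm yes no yes mammals
warm no yes no birds"""
DATA = [dict(zip(ATTRIBUTES + ["Class"], line.split())) for line in TABLE.splitlines()]

def covers(rule, row):
    return all(row[a] == v for a, v in rule.items())

def learn_one_rule(instances, target):
    """Grow one rule greedily, maximizing accuracy p/t (ties: largest p)."""
    rule, covered = {}, list(instances)
    # Stop when the rule is perfect or no unused attribute is left.
    while any(r["Class"] != target for r in covered) and len(rule) < len(ATTRIBUTES):
        best = None
        for attr in (a for a in ATTRIBUTES if a not in rule):
            for value in sorted({r[attr] for r in covered}):
                subset = [r for r in covered if r[attr] == value]
                p = sum(r["Class"] == target for r in subset)
                score = (p / len(subset), p)
                if best is None or score > best[0]:
                    best = (score, attr, value)
        _, attr, value = best
        rule[attr] = value
        covered = [r for r in covered if r[attr] == value]
    return rule

def prism(data):
    rules = []
    for target in sorted({r["Class"] for r in data}):
        remaining = list(data)  # E is reset to the full training set per class
        while any(r["Class"] == target for r in remaining):
            rule = learn_one_rule(remaining, target)
            rules.append((rule, target))
            remaining = [r for r in remaining if not covers(rule, r)]
    return rules

rules = prism(DATA)
for rule, label in rules:
    print(rule, "->", label)
```

Note that this table contains instances with identical attribute values but different classes (frog and turtle, for example), so some learned rules use every attribute and are still not perfect; that is the "no more attributes to use" exit in the pseudocode.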

Rule Pruning

• The PRISM algorithm tries to get perfect rules, i.e. rules with accuracy = 1 on the training set.
  – These rules can be overspecialized → overfitting
  – Solution: prune the rules
• Two main strategies:
  – Incremental pruning: simplify each rule as soon as it is built
  – Global pruning: build full rule set and then prune it

Incremental Pruning: Reduced Error Pruning

• The data set is split into a training set and a prune set
• Reduced Error Pruning
  1. Remove one of the conjuncts in the rule
  2. Compare error rate on the prune set before and after pruning
  3. If error improves, prune the conjunct
• Sampling with stratification is advantageous
  – Training set (to learn rules)
  – Validation set (to prune rules)
  – Test set (to determine model accuracy)
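The three reduced error pruning steps can be sketched as a greedy loop. Several choices here are assumptions, not taken from the slides: the error rate treats the rule as a binary classifier (covered means "predict the target class"), the best single removal is tried at each step, and the six prune-set rows are hypothetical.

```python
def covers(rule, row):
    """rule is a list of (attribute, value) conjuncts."""
    return all(row[attr] == value for attr, value in rule)

def error_rate(rule, target, prune_set):
    """Fraction of prune-set rows where 'rule covers row' disagrees with the true class."""
    errors = sum(covers(rule, row) != (row["Class"] == target) for row in prune_set)
    return errors / len(prune_set)

def reduced_error_prune(rule, target, prune_set):
    rule = list(rule)
    while len(rule) > 1:
        current = error_rate(rule, target, prune_set)
        # Step 1: candidate rules, each with one conjunct removed.
        candidates = [rule[:i] + rule[i + 1:] for i in range(len(rule))]
        best = min(candidates, key=lambda c: error_rate(c, target, prune_set))
        # Steps 2-3: keep the removal only if the error on the prune set improves.
        if error_rate(best, target, prune_set) >= current:
            break
        rule = best
    return rule

# Hypothetical prune set (not from the slides).
PRUNE_SET = [
    {"Give Birth": "no",  "Can Fly": "yes", "Live in Water": "no",        "Class": "birds"},
    {"Give Birth": "no",  "Can Fly": "yes", "Live in Water": "sometimes", "Class": "birds"},
    {"Give Birth": "yes", "Can Fly": "yes", "Live in Water": "no",        "Class": "mammals"},
    {"Give Birth": "no",  "Can Fly": "no",  "Live in Water": "no",        "Class": "reptiles"},
    {"Give Birth": "no",  "Can Fly": "no",  "Live in Water": "yes",       "Class": "fishes"},
    {"Give Birth": "no",  "Can Fly": "yes", "Live in Water": "yes",       "Class": "birds"},
]

overspecialized = [("Give Birth", "no"), ("Can Fly", "yes"), ("Live in Water", "no")]
pruned = reduced_error_prune(overspecialized, "birds", PRUNE_SET)
print(pruned)  # [('Give Birth', 'no'), ('Can Fly', 'yes')]
```

Dropping Live in Water = no removes both false negatives on the prune set; dropping either remaining conjunct would introduce false positives, so pruning stops there.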

Direct Method: RIPPER

• For 2-class problem, choose one of the classes as positive class, and the other as negative class
  – Learn rules for positive class
  – Negative class will be default class
• For multi-class problem
  – Order the classes according to increasing class prevalence (fraction of instances that belong to a particular class)
  – Learn the rule set for smallest class first, treat the rest as negative class
  – Repeat with next smallest class as positive class
• Available in Weka

Direct Method: RIPPER

• Learn one rule:
  – Start from empty rule
  – Add conjuncts as long as they improve FOIL’s information gain
  – Stop when rule no longer covers negative examples
    ‣ Build rules with accuracy = 1 (if possible)
  – Prune the rule immediately using reduced error pruning
  – Measure for pruning: W(R) = (p-n)/(p+n)
    ‣ p: number of positive examples covered by the rule in the validation set
    ‣ n: number of negative examples covered by the rule in the validation set
  – Pruning starts from the last test added to the rule
    ‣ May create rules that cover some negative examples (accuracy < 1)
• A global optimization (pruning) strategy is also applied
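The two measures RIPPER uses can be written down directly. The slides name FOIL's information gain without giving its formula; the sketch below uses the standard textbook definition, gain = p1 · (log2(p1/(p1+n1)) − log2(p0/(p0+n0))), where p0 and n0 are the positives and negatives covered before adding a conjunct and p1 and n1 after. The function names, and returning 0 when the extended rule covers no positives, are illustrative choices.

```python
import math

def foil_gain(p0, n0, p1, n1):
    """FOIL's information gain for extending a rule with one conjunct.

    p0, n0: positives/negatives covered before the extension.
    p1, n1: positives/negatives covered after the extension.
    """
    if p1 == 0:
        return 0.0  # assumption: an extension covering no positives gains nothing
    before = math.log2(p0 / (p0 + n0))
    after = math.log2(p1 / (p1 + n1))
    return p1 * (after - before)

def pruning_value(p, n):
    """W(R) = (p - n) / (p + n), measured on the validation set."""
    return (p - n) / (p + n)

# A rule covering 8 positives and 8 negatives is extended so that it
# covers 4 positives and no negatives.
print(foil_gain(8, 8, 4, 0))   # 4.0
# A rule covering 6 positives and 2 negatives on the validation set.
print(pruning_value(6, 2))     # 0.5
```

The factor p1 rewards extensions that keep many positives covered, which is why FOIL's gain tends to prefer rules with larger coverage than pure accuracy p/t does.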

Indirect Method: C4.5rules

• Extract rules from an unpruned decision tree
• For each rule, r: LHS → c, consider pruning the rule
• Use class ordering
  – Each subset is a collection of rules with the same rule consequent (class)
  – Classes described by simpler sets of rules tend to appear first

Example

| Name | Give Birth | Lay Eggs | Can Fly | Live in Water | Have Legs | Class |
|---|---|---|---|---|---|---|
| human | yes | no | no | no | yes | mammals |
| python | no | yes | no | no | no | reptiles |
| salmon | no | yes | no | yes | no | fishes |
| whale | yes | no | no | yes | no | mammals |
| frog | no | yes | no | sometimes | yes | amphibians |
| komodo | no | yes | no | no | yes | reptiles |
| bat | yes | no | yes | no | yes | mammals |
| pigeon | no | yes | yes | no | yes | birds |
| cat | yes | no | no | no | yes | mammals |
| leopard shark | yes | no | no | yes | no | fishes |
| turtle | no | yes | no | sometimes | yes | reptiles |
| penguin | no | yes | no | sometimes | yes | birds |
| porcupine | yes | no | no | no | yes | mammals |
| eel | no | yes | no | yes | no | fishes |
| salamander | no | yes | no | sometimes | yes | amphibians |
| gila monster | no | yes | no | no | yes | reptiles |
| platypus | no | yes | no | no | yes | mammals |
| owl | no | yes | yes | no | yes | birds |
| dolphin | yes | no | no | yes | no | mammals |
| eagle | no | yes | yes | no | yes | birds |

C4.5 versus C4.5rules versus RIPPER

C4.5 decision tree:

Give Birth?
  Yes → Mammals
  No → Live In Water?
    Yes → Fishes
    Sometimes → Amphibians
    No → Can Fly?
      Yes → Birds
      No → Reptiles

C4.5rules:
(Give Birth=No, Can Fly=Yes) → Birds
(Give Birth=No, Live in Water=Yes) → Fishes
(Give Birth=Yes) → Mammals
(Give Birth=No, Can Fly=No, Live in Water=No) → Reptiles
( ) → Amphibians

RIPPER:
(Live in Water=Yes) → Fishes
(Have Legs=No) → Reptiles
(Give Birth=No, Can Fly=No, Live In Water=No) → Reptiles
(Can Fly=Yes, Give Birth=No) → Birds
() → Mammals

Indirect Method: PART

• Combines the divide-and-conquer strategy with the separate-and-conquer strategy of rule learning
  1. Build a partial decision tree on the current set of instances
  2. Create a rule from the decision tree
     – The leaf with the largest coverage is made into a rule
  3. Discard the decision tree
  4. Remove the instances covered by the rule
  5. Go to step one
  – Available in WEKA
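Indirect methods such as C4.5rules start from a decision tree, where each root-to-leaf path can be read as one rule. The sketch below shows only that first step, on the tree from the C4.5 comparison; the nested-tuple encoding and the name `extract_rules` are illustrative, and the pruning and class ordering that C4.5rules applies afterwards are not shown.

```python
# A node is either a class label (leaf) or (attribute, {value: child node}).
TREE = ("Give Birth", {
    "yes": "Mammals",
    "no": ("Live in Water", {
        "yes": "Fishes",
        "sometimes": "Amphibians",
        "no": ("Can Fly", {
            "yes": "Birds",
            "no": "Reptiles",
        }),
    }),
})

def extract_rules(node, path=()):
    """Turn every root-to-leaf path into a (conjuncts, class label) rule."""
    if isinstance(node, str):            # leaf: the path so far is the rule's LHS
        return [(list(path), node)]
    attribute, branches = node
    rules = []
    for value, child in branches.items():
        rules.extend(extract_rules(child, path + ((attribute, value),)))
    return rules

rules = extract_rules(TREE)
for conjuncts, label in rules:
    lhs = ", ".join(f"{a}={v}" for a, v in conjuncts)
    print(f"({lhs}) -> {label}")
```

The raw path for Birds is (Give Birth=no, Live in Water=no, Can Fly=yes), whereas the C4.5rules output listed on the slide is the shorter (Give Birth=No, Can Fly=Yes) → Birds: the difference is the rule pruning step.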

Advantages of Rule-Based Classifiers

• As highly expressive as decision trees
• Easy to interpret
• Easy to generate
• Can classify new instances rapidly
• Performance comparable to decision trees
• Can easily handle missing values and numeric attributes
• Available in WEKA: Prism, Ripper, PART, OneR