Abbas Y. Al-Bayati - Basim A. Hassan - University of Mosul


Raf. J. of Comp. & Math's., Vol. 8, No. 1, 2011

The Extended CG Method for Non-Quadratic Models

Abbas Y. Al-Bayati, Basim A. Hassan
College of Computer Sciences and Mathematics, University of Mosul

Sawsan S. Ismael
College of Education, University of Mosul

Received on: 25 / 3 / 2007    Accepted on: 4 / 11 / 2007

ABSTRACT
This paper investigates an interleaved algorithm that combines the extended conjugate gradient method with the hybrid conjugate gradient method of Touati-Storey. The combined algorithm uses exact line searches and is tested on a number of non-linear test functions of different dimensions. Experimental results indicate that the modified algorithm is more efficient than the original Sloboda algorithm.

1. Introduction
The problem to be solved by the Sloboda algorithm is to calculate the least value of a general differentiable function of several variables. Let n be the number of variables, x the vector of variables, q(x) the objective function and g(x) the gradient of q(x), i.e.

$$g(x) = \nabla q(x) \qquad (1)$$

The conjugate gradient algorithm is an iterative method that does not require any explicit second derivatives. The sequence of points $x_1, x_2, x_3, \dots$ is calculated by successive iterations and should converge to the point in the space of the variables at which q(x) is least.

The conjugate gradient algorithm was first applied to the general unconstrained minimization problem by Fletcher & Reeves [7]. There are now several versions of the algorithm for this calculation. Let $x_1$ be a given starting point in the space of the variables and let i denote the number of the current iteration, starting with i = 1. The iteration requires the gradient $g_i = g(x_i)$. For i = 1, the search direction is the steepest descent direction,

$$d_1 = -g_1 \qquad (2)$$

and every later direction is obtained from the formula

$$d_{i+1} = -g_{i+1} + \beta_i d_i \qquad (3)$$

where $\beta_i$ and $y_i$ have the values

$$\beta_i = \frac{y_i^T g_{i+1}}{y_i^T d_i} \qquad (4)$$

$$y_i = g_{i+1} - g_i .$$

The vector norms are Euclidean. By searching for the least value of f(x) from $x_i$ along the direction $d_i$ we obtain the vector $x_{i+1}$:

$$x_{i+1} = x_i + \lambda_i d_i \qquad (5)$$

where $\lambda_i$ is the value of $\lambda$ that minimizes the function of one variable

$$\varphi_i(\lambda) = f(x_i + \lambda d_i) \qquad (6)$$

This completes the iteration, and another one is begun if $f(x_{i+1})$ or $g_{i+1}$ is not sufficiently small [10].

Let A denote a symmetric and positive definite n×n matrix. For $x \in R^n$, we define:

$$q(x) = \tfrac{1}{2} x^T A x + b^T x + c \qquad (7)$$

Let $F : R \to R$ denote a strictly monotonic increasing function and define:

$$f(x) = F(q(x)) \qquad (8)$$

Such a function is called an extended quadratic function, Spedicato [12].

When a minimization algorithm is applied to f, the i-th iterate is denoted by $x_i$, the corresponding function value by $f_i$ and its gradient by $G_i$. The function value and gradient of q are denoted by $q_i$ and $g_i$, respectively, and the derivative of F at $q_i$ is denoted by $F'_i$. We note that $G_i = F'_i g_i$ and define $\rho_i = c_i / c_{i+1}$ for each i, where $c_i = F'_i$, Al-Assady and Al-Bayati [4].

2. Non-Quadratic Sloboda Method
Sloboda [11] proposed a generalized conjugate gradient algorithm for minimizing a strictly convex function of the general form:

$$f(x) = F(q(x)), \qquad \frac{dF}{dq} > 0 \qquad (9)$$

This algorithm is as follows:

• Algorithm 1:
Step 1: Set i = 1; $g_1^* = G_1$; $d_1 = -G_1$.
Step 2: Compute $\lambda_i$ by exact line search (ELS) and set $x_{i+1} = x_i + \lambda_i d_i$.
Step 3: Compute $g_{i+1/2} = g(x_{i+1} - \tfrac{1}{2}\lambda_i d_i)$.
Step 4: Test for convergence; if achieved, stop. If not, continue.
Step 5: If $i \equiv 0 \pmod{n}$, go to Step 1; else continue.
Step 6: Compute $g_{i+1}^* = w\, g_{i+1/2} - g_i^*$, where $w = \dfrac{d_i^T g_i^*}{d_i^T g_{i+1/2}}$.
Step 7: Compute the new search direction $d_{i+1} = -g_{i+1}^* + \beta_i d_i$, where $\beta_i = \dfrac{y_i^T g_{i+1}^*}{d_i^T y_i}$ and $y_i = g_{i+1}^* - g_i^*$.
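The steps of Algorithm 1 can be sketched in Python as follows. This is only an illustrative sketch, not the authors' Fortran code: the names `sloboda_cg`, `grad_f` and `exact_step` are hypothetical, the convergence test on the gradient norm at $x_{i+1}$ is an assumption (the text does not say which quantity is tested), and the test problem $f(x) = \exp(q(x))$ with $q(x) = \tfrac{1}{2}x^T A x - b^T x$ is chosen here only because its exact line search step has a closed form.

```python
import numpy as np

def sloboda_cg(grad, exact_step, x0, tol=1e-8, max_iter=200):
    """Sketch of Algorithm 1. `grad(x)` returns the gradient of f and
    `exact_step(x, d)` returns the exact line-search step along d."""
    x = np.asarray(x0, dtype=float)
    n = x.size
    g_star = grad(x)                  # Step 1: g*_1 = G_1
    d = -g_star                       #         d_1 = -G_1
    for i in range(1, max_iter + 1):
        lam = exact_step(x, d)        # Step 2: exact line search
        x_new = x + lam * d
        g_half = grad(x_new - 0.5 * lam * d)   # Step 3: gradient at the midpoint
        # Step 4 (assumption): test the true gradient norm at the new point
        if np.linalg.norm(grad(x_new)) < tol:
            return x_new, i
        if i % n == 0:                # Step 5: restart every n iterations
            x, g_star = x_new, grad(x_new)
            d = -g_star
            continue
        w = (d @ g_star) / (d @ g_half)        # Step 6: rescaled gradient g*_{i+1}
        g_star_new = w * g_half - g_star
        y = g_star_new - g_star                # Step 7: new search direction
        beta = (y @ g_star_new) / (d @ y)
        d = -g_star_new + beta * d
        x, g_star = x_new, g_star_new
    return x, max_iter

# Hypothetical extended quadratic: f(x) = exp(q(x)), q(x) = 0.5 x^T A x - b^T x
A = np.array([[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]])
b = np.array([1.0, 2.0, 3.0])

def grad_f(x):
    q = 0.5 * x @ A @ x - b @ x
    return np.exp(q) * (A @ x - b)    # G = F'(q) * g

def exact_step(x, d):
    # exp is strictly increasing, so f and q share the same minimizer along d
    return -(d @ (A @ x - b)) / (d @ A @ d)

x_min, iters = sloboda_cg(grad_f, exact_step, np.zeros(3))
```

On an extended quadratic the rescaled gradients $g_i^*$ stay proportional to the gradients of q, so the iterates coincide with those of conjugate gradients applied to q itself, and the minimizer of this three-variable example should be reached within n = 3 iterations.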

<strong>Abbas</strong> Y. <strong>Al</strong>-<strong>Bayati</strong> - <strong>Basim</strong> A. <strong>Hassan</strong> and Sawsan S. Ismaelour implementation differs in that we use the scaled quadratic model instead <strong>of</strong> thequadratic itself.CG-algorithm 1. and the generalized VM-algorithm 2. The objective here is toshow that, using <strong>Al</strong>-<strong>Bayati</strong>’s self-scaling VM-update (12), the sequence <strong>of</strong> thegenerating points is the same in the generalized CG-algorithm 1.Before making a few more observations we shall outline briefly the proposedstrategy for the interleaved generalized CG-VM method <strong>Al</strong>-<strong>Bayati</strong> [2].• <strong>Al</strong>gorithm 3:Let f be a non linear scaling <strong>of</strong> the quadratic function f; given x 1 and a matrixH 1 = I ; set G = ; i = 1 and t = 1 initially, k is the iteration index.*1g 1*Step 1: Set dt = - H igt(13)Step 2: For k = t , t+1 , t+2 , … iterate withx = x + λ dk+ 1 k k k,Hy*Tβk 1 i kk= (g +T ,dkykd*k+ 1= - Higk+1+ βkdk,)T *ykgk+1βk= ,Td ykkw =TdigTd gi*ii+12ggwg- g*= *k + 1 k k+ 1 2 k1g(x λ d )k+= 1 2k−k k*y = gk+1- gk2Here i is the index <strong>of</strong> the matrix updated only at restart steps and k is the index<strong>of</strong> iteration and the algorithm is not converged, until a restart is indicated.Step 3: If a restart is indicated, namely that the Powell [9] restarting criterion issatisfied, i.e.*T *Tk+ 1 k k+ 1 k+1|g g | ‡ 0.2 |g g | , (14)Then reset t to the current k , update H i by:T(H y v ) 2 a H yH Hi t tv [(tT) v - (i ti + 1=i−+2) ]bit.bbtb = vTwhere at= ytHiytand t t tStep 4: Replace i by i + 1 and repeat from (13).3. Hybrid Conjugate Gradient MethodTytDespite the numerical superiority <strong>of</strong> PR-method over FR-method the later hasbetter theoretical properties than the formal see <strong>Al</strong>-Baali [3]. 
Under certain conditionsFR-method can be shown to have global convergence with exact line search Powell [10]t16

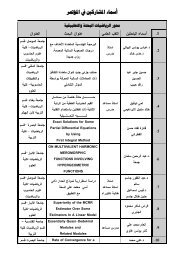

The Extended CG Method for Non-Quadratic Modelsand also with inexact line search satisfying the strong Wolf-Powell condition. Thisanomaly leads to speculation on the best way to choose β i .Touati – Ahmed and Storey in (12) proposed the following hybrid method:2 i-1Step 1: if l ||g i+1|| £ (2m) , with 1 m2 > >h and λ > 0 go to Step 2. Otherwise, Setβ i = 0 .PRPRStep 2: If β < 0 , Set β = , otherwise go to Step 3.Step 3: IfiPRβiPRi = β i⎛(1/2m)|| g⎜⎝iβ i2≤i-12|| gi||||⎞⎟ , with m =h, set β = . Otherwise Set⎠PRiβ iβ .Here m, h and λ user supplied parameters. This hybrid was shown to beglobally convergence under both exact and inexact line searches and to be quitecompetitive with PR- and FR-methods.4. New hybrid algorithm (<strong>Al</strong>gorithm 4):*Step1 : Set dt = - H igt, i = 1, t = 1, k is the index <strong>of</strong> iterations.Step 2: For k = t , t+1 , … iterate withxk+ 1= xk+ λkdk,andH*Tβk 1 i kk= (g +T ,dkyky)Where i is the index <strong>of</strong> the matrix updated only at restart steps.2 k 1Step 3: If l ||g i-1|| £ (2m) + with 1 m2 > >h go to Step 1. Otherwise set β i = 0.PRPRStep 4: If βi< 0 set β i = β i, otherwise go to Step 5.Step 5:IfPR 12 2PRFRBi£ ( m) ||gi-1|| /||gi||with m > η , Set B i= B i, otherwise set B i= B i.2***Step 6: Compute dk+ 1= - Higk+1+ βkdk, where g = k+ 1wkgk+ - g 1 2 kStep 7: If a restart is indicated, namely that the Powell, restarting criterion is satisfied,*T *Ti.e.: |g g | ‡ 0.2 |g g | , then reset t to the current k, update H i by:k-1 kk(H y v )H +k2 aTi t tti t Ti + 1= Hi−vt[( 2 ) vt- ( )]btbbttStep 8: Replace i by i+1 and repeat from (13).5. Conclusions and Numerical results:Several standard test functions were minimized (2 < n < 400) to compare theproposed algorithm with standard Sloboda algorithm which are coded in doubleprecision Fortran 90. 
The proposed hybrid algorithm needs matrix calculation for400×400, this is the approximately the latest range for this computation <strong>of</strong> the matrix.The numerical results are obtained on personal Pentium IV Computer. The compete set<strong>of</strong> results are given in tables 5.1 and 5.2.Hy17
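The restart step shared by Algorithms 3 and 4 can be illustrated with a short Python sketch. It assumes the update has the form $H_{i+1} = H_i - (H_i y v^T + v y^T H_i)/b + (2a/b^2)\, v v^T$ with $a = y^T H_i y$ and $b = v^T y$, and that v is the step vector between two iterates, which this part of the text does not define. The function names `powell_restart` and `self_scaling_update` are hypothetical.

```python
import numpy as np

def powell_restart(g_new, g_old, threshold=0.2):
    """Powell restart test: |g_new^T g_old| >= 0.2 |g_new^T g_new|."""
    return abs(g_new @ g_old) >= threshold * abs(g_new @ g_new)

def self_scaling_update(H, y, v):
    """Assumed form of the self-scaling update applied at restart steps."""
    a = y @ H @ y            # a = y^T H y
    b = v @ y                # b = v^T y
    Hy = H @ y
    # H - (H y v^T)/b + v [ (2a/b^2) v^T - (H y)^T / b ]
    return H - np.outer(Hy, v) / b + np.outer(v, (2.0 * a / b**2) * v - Hy / b)

# Small illustration with arbitrary (hypothetical) vectors
H = np.eye(3)
y = np.array([1.0, 2.0, 3.0])
v = np.array([0.5, 1.0, 2.0])
H_new = self_scaling_update(H, y, v)
scale = (y @ H @ y) / (v @ y)
```

Under these assumptions the updated matrix stays symmetric and satisfies the scaled quasi-Newton condition $H_{i+1} y = (a/b)\, v$, which can be checked numerically with `np.allclose(H_new @ y, scale * v)`.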

Appendix
These test functions are from the general literature [8].

1. Generalized Powell Function
$$F(x) = \sum_{i=1}^{n/4} \left[ (x_{4i-3} - 10x_{4i-2})^2 + 5(x_{4i-1} - x_{4i})^2 + (x_{4i-2} - 2x_{4i-1})^4 + 10(x_{4i-3} - x_{4i})^4 \right]$$
$$x_0 = (3, -1, 0, 1, \dots)^T$$

2. Generalized Wood Function
$$F(x) = \sum_{i=1}^{n/4} \left[ 100(x_{4i-2} - x_{4i-3}^2)^2 + (1 - x_{4i-3})^2 + 90(x_{4i} - x_{4i-1}^2)^2 + (1 - x_{4i-1})^2 + 1.0 \right]$$
$$x_0 = (-3, -1, -3, -1, \dots)^T$$

3. Non-diagonal Function
$$F(x) = \sum_{i=2}^{n} \left[ 100(x_1 - x_i^2)^2 + (1 - x_i)^2 \right]$$
$$x_0 = (-1, \dots)^T$$

4. Generalized Dixon Function
$$F(x) = (1 - x_1)^2 + (1 - x_{10})^2 + \sum_{i=2}^{9} (x_i^2 - x_{i+1})^2$$
$$x_0 = (-1, \dots)^T$$

5. Wolfe Function
$$F(x) = \left(-x_1(3 - x_1/2) + 2x_2 - 1\right)^2 + \sum_{i=2}^{n-1} \left(x_{i-1} - x_i(3 - x_i/2) + 2x_{i+1} - 1\right)^2 + \left(x_{n-1} - x_n(3 - x_n/2) - 1\right)^2$$
$$x_0 = (-1, \dots)^T$$

6. Shallow Function
$$F(x) = \sum_{i=1}^{n/2} \left[ (x_{2i-1}^2 - x_{2i})^2 + (1 - x_{2i-1})^2 \right]$$
$$x_0 = (-2, -2, \dots)^T$$

7. Generalized Rosenbrock Function
$$F(x) = \sum_{i=1}^{n/2} \left[ 100(x_{2i} - x_{2i-1}^2)^2 + (1 - x_{2i-1})^2 \right]$$
$$x_0 = (-1.2, 1, \dots)^T$$

8. Beale Function
$$F(x) = \left(1.5 - x_1(1 - x_2)\right)^2 + \left(2.25 - x_1(1 - x_2^2)\right)^2 + \left(2.625 - x_1(1 - x_2^3)\right)^2$$
$$x_0 = (0, 0)^T$$
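Two of the test functions above, the generalized Rosenbrock function and the Beale function, can be written in a few lines of Python. This is a minimal sketch using the standard definitions of these two functions; the function names are hypothetical and the code is not the authors' Fortran implementation.

```python
import numpy as np

def generalized_rosenbrock(x):
    """Sum over pairs (x_{2i-1}, x_{2i}) of 100(x_{2i} - x_{2i-1}^2)^2 + (1 - x_{2i-1})^2."""
    x = np.asarray(x, dtype=float)
    odd, even = x[0::2], x[1::2]      # x_{2i-1} and x_{2i} in 1-based indexing
    return float(np.sum(100.0 * (even - odd**2) ** 2 + (1.0 - odd) ** 2))

def beale(x):
    """Two-variable Beale function."""
    x1, x2 = x
    return float(
        (1.5 - x1 * (1 - x2)) ** 2
        + (2.25 - x1 * (1 - x2**2)) ** 2
        + (2.625 - x1 * (1 - x2**3)) ** 2
    )

# Starting points as listed in the appendix (first pair for Rosenbrock)
rosenbrock_start = generalized_rosenbrock([-1.2, 1.0])
beale_start = beale([0.0, 0.0])
```

Both functions have minimum value zero, attained at $x = (1, \dots, 1)$ for the generalized Rosenbrock function and at $x = (3, 0.5)$ for the Beale function, which gives a quick sanity check for any implementation.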

REFERENCES
[1] Al-Bayati, A.Y. (1991). “A new family of self-scaling variable metric algorithms for unconstrained optimization”, Journal of Education & Science, Mosul University, Iraq, 12, pp. 25-54.
[2] Al-Bayati, A.Y. (2001). “New generalized conjugate gradient methods for the non-quadratic model in unconstrained optimization”, Journal of Al-Yarmouk, Jordan, 10, pp. 9-25.
[3] Al-Baali, M. (1985). “Descent property and global convergence of the Fletcher-Reeves method with inexact line search”, IMA Journal of Numerical Analysis, 5, pp. 121-124.
[4] Al-Assady, N.H. and Al-Bayati, A.Y. (1994). “Minimization of extended quadratic function with inexact line searches”, JOTA, 82, pp. 139-147.
[5] Bunday, B.D. (1984). “Basic optimization method”, Edward Arnold, Bedford Square, London.
[6] Buckley, A.G. (1978). “A combined conjugate gradient quasi Newton minimization algorithm”, Mathematical Programming, 15, pp. 200-210.
[7] Fletcher, R. and Reeves, C.M. (1964). “Function minimization by conjugate gradients”, Computer Journal, 7, pp. 149-154.
[8] Nocedal, J. (2005). “Unconstrained Optimization Test Function Research Institute for Informatics”, Center for Advanced Modeling and Optimization, Bucharest 1, Romania.
[9] Powell, M.J.D. (1977). “Restart procedure for the conjugate gradient method”, Mathematical Programming, 12, pp. 241-254.
[10] Powell, M.J.D. (2000). “UOBYQA unconstrained optimization by quadratic approximation”, DAMTP 2000, NA14, pp. 2-32.
[11] Sloboda, F. (1980). “A generalized conjugate gradient algorithm”, Numerische Mathematik, 35, pp. 223-230.
[12] Spedicato, E. (1978). “A note on the determination of the scaling parameters in a class of quasi-Newton methods for function minimization”, JOTA, 20, pp. 1-20.