What Is Optimization Toolbox?


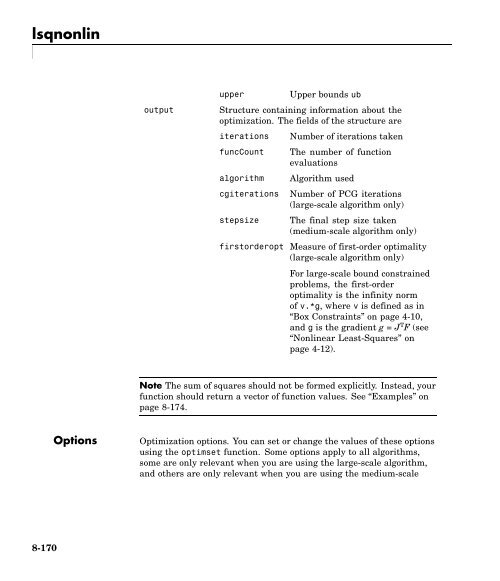

lsqnonlin

- upper: Upper bounds ub
- output: Structure containing information about the optimization. The fields of the structure are:
  - iterations: Number of iterations taken
  - funcCount: Number of function evaluations
  - algorithm: Algorithm used
  - cgiterations: Number of PCG iterations (large-scale algorithm only)
  - stepsize: Final step size taken (medium-scale algorithm only)
  - firstorderopt: Measure of first-order optimality (large-scale algorithm only). For large-scale bound constrained problems, the first-order optimality is the infinity norm of v.*g, where v is defined as in “Box Constraints” on page 4-10, and g is the gradient $g = J^T F$ (see “Nonlinear Least-Squares” on page 4-12).

Note: The sum of squares should not be formed explicitly. Instead, your function should return a vector of function values. See “Examples” on page 8-174.

Options

Optimization options. You can set or change the values of these options using the optimset function. Some options apply to all algorithms, some are relevant only when you are using the large-scale algorithm, and others are relevant only when you are using the medium-scale algorithm. See “Optimization Options” on page 6-8 for detailed information.

The LargeScale option specifies a preference for which algorithm to use. It is only a preference because certain conditions must be met to use the large-scale or medium-scale algorithm. For the large-scale algorithm, the nonlinear system of equations cannot be underdetermined; that is, the number of equations (the number of elements of F returned by fun) must be at least as many as the length of x. Furthermore, only the large-scale algorithm handles bound constraints:

- LargeScale: Use large-scale algorithm if possible when set to 'on'. Use medium-scale algorithm when set to 'off'.

Medium-Scale and Large-Scale Algorithms

These options are used by both the medium-scale and large-scale algorithms:

- DerivativeCheck: Compare user-supplied derivatives (Jacobian) to finite-differencing derivatives.
- Diagnostics: Display diagnostic information about the function to be minimized.
- DiffMaxChange: Maximum change in variables for finite differencing.
- DiffMinChange: Minimum change in variables for finite differencing.
- Display: Level of display. 'off' displays no output; 'iter' displays output at each iteration; 'final' (default) displays just the final output.
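Two points from the lsqnonlin description may be easier to follow with a small numeric sketch: the objective function returns a vector of function values F rather than their sum of squares, and firstorderopt is the infinity norm of v.*g with g = J^T F. The Python below is only an illustration of that arithmetic, not the toolbox's implementation. The exponential model, the function names, and the choice to pass v in as an argument are all assumptions made for the example; the actual definition of v is given in the manual's “Box Constraints” section, which is not reproduced here.

```python
import math


def residuals(x, t, y):
    """Return the vector F(x) of residuals, NOT their sum of squares.

    Hypothetical model for illustration: y ~ a * exp(b * t), with x = [a, b].
    """
    a, b = x
    return [a * math.exp(b * ti) - yi for ti, yi in zip(t, y)]


def jacobian(x, t):
    """Analytic Jacobian J[i][j] = dF_i/dx_j for the hypothetical model."""
    a, b = x
    return [[math.exp(b * ti), a * ti * math.exp(b * ti)] for ti in t]


def gradient(J, F):
    """g = J^T F, which is the gradient of 0.5 * sum(F_i^2)."""
    n_vars = len(J[0])
    return [sum(J[i][j] * F[i] for i in range(len(F))) for j in range(n_vars)]


def first_order_optimality(v, g):
    """Infinity norm of the elementwise product v.*g.

    v is supplied by the caller; its definition is an assumption left to
    the manual's "Box Constraints" section.
    """
    return max(abs(vi * gi) for vi, gi in zip(v, g))


if __name__ == "__main__":
    t = [0.0, 1.0, 2.0]
    y = [2.0 * math.exp(0.5 * ti) for ti in t]  # synthetic, noise-free data
    x = [1.0, 0.0]                              # a deliberately poor guess
    F = residuals(x, t, y)
    g = gradient(jacobian(x, t), F)
    # Assumption: with no bounds, every |v_i| is taken as 1 for this sketch.
    print(first_order_optimality([1.0, 1.0], g))
```

Returning the residual vector instead of a scalar matters because the gradient g = J^T F is built from the individual residuals and their Jacobian; a function that returned only the sum of squares would hide that structure from the solver.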

