Packet Classification on Multiple Fields - High Speed Network Lab ...

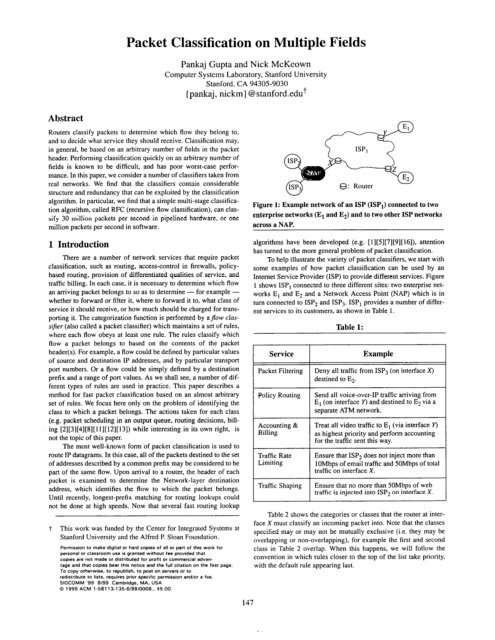

Table 2: (table body not recoverable; surviving cell text: "From ISP3 and going to ...", "All other packets", "Source Link-layer Address", "Destination Network-layer Address")

2 The Problem of Packet Classification

Packet classification is performed using a packet classifier, also called a policy database, flow classifier, or simply a classifier. A classifier is a collection of rules or policies. Each rule specifies a class† that a packet may belong to based on some criterion on F fields of the packet header, and associates with each class an identifier, classID. This identifier uniquely specifies the action associated with the rule. Each rule has F components. The i-th component of rule R, referred to as R[i], is a regular expression on the i-th field of the packet header (in practice, the regular expression is limited by syntax to a simple address/mask or operator/number(s) specification). A packet P is said to match a particular rule R if, ∀i, the i-th field of the header of P satisfies the regular expression R[i]. It is convenient to think of a rule R as the set of all packet headers which could match R. When viewed in this way, two distinct rules are said to be either partially overlapping or non-overlapping, or one is said to be a subset of the other, with corresponding set-related definitions.

† For example, each rule in a flow classifier is a flow specification, where each flow is in a separate class.

We will assume throughout this paper that when two rules are not mutually exclusive, the order in which they appear in the classifier will determine their relative priority. For example, in a packet classifier that performs longest-prefix address lookups, each destination prefix is a rule, the corresponding next hop is its action, the pointer to the next hop is the associated classID, and the classifier is the whole forwarding table. If we assume that the forwarding table has longer prefixes appearing before shorter ones, the lookup is an example of the packet classification problem.

2.1 Example of a Classifier

All examples used in this paper are classifiers from real ISP and enterprise networks. For privacy reasons, we have sanitized the IP addresses and other sensitive information so that the relative structure in the classifiers is still preserved.‡ First, we'll take a look at the data and its characteristics. Then we'll use the data to evaluate the packet classification algorithm described later.

An example of some rules from a classifier is shown in Table 3. We collected 793 packet classifiers from 101 different ISP and enterprise networks and a total of 41,505 rules. Each network provided up to ten separate classifiers for different services.††

Table 3:

Network-layer Destination (addr/mask)   Network-layer Source (addr/mask)   Transport-layer Destination   Transport-layer Protocol
152.163.190.69/0.0.0.0                  152.163.80.11/0.0.0.0              *                             *
152.168.3.0/0.0.0.255
152.168.3.0/0.0.0.255
152.168.3.0/0.0.0.255
152.163.198.4/0.0.0.0
152.163.198.4/0.0.0.0                   152.163.36.0/0.0.0.255             gt 1023                       tcp

We found the classifiers to have the following characteristics:

1) The classifiers do not contain a large number of rules.
Only 0.7% of the classifiers contain more than 1000 rules, and the mean is 50 rules per classifier. The distribution of the number of rules in a classifier is shown in Figure 2. The relatively small number of rules per classifier should not come as a surprise: in most networks today, the rules are configured manually by network operators, and it is a non-trivial task to ensure correct behavior.

2) The syntax allows a maximum of 8 fields to be specified: source/destination Network-layer address (32 bits), source/destination Transport-layer port numbers (16 bits for TCP and UDP), Type-of-service (TOS) field (8 bits), Protocol field (8 bits), and Transport-layer protocol flags (8 bits), with a total of 120 bits. 17% of all rules had 1 field specified, 23% had 3 fields specified and 60% had 4 fields specified.†

‡ We wanted to preserve the properties of set relationship, e.g. inclusion, among the rules, or their fields. The way a 32-bit IP address p0.p1.p2.p3 has been sanitized is as follows: (a) a random 32-bit number c0.c1.c2.c3 is first chosen, (b) a random permutation of the 256 numbers 0...255 is then generated to get perm[0..255], (c) another random number s between 0 and 255 is generated; these randomly generated numbers are common for all the rules in the classifier, (d) the IP address with bytes perm[(p0 ^ c0 + 0 * s) % 256], perm[(p1 ^ c1 + 1 * s) % 256], perm[(p2 ^ c2 + 2 * s) % 256] and perm[(p3 ^ c3 + 3 * s) % 256] is then returned as the sanitized transformation of the original IP address, where ^ denotes the exclusive-or operation.

†† In the collected data, the classifiers for different services are made up of one or more ACLs (access control lists). An ACL rule has only two types of actions, "deny" or "permit". In this discussion, we will assume that each ACL is a separate classifier, a common case in practice.

3) The Transport-layer protocol field is restricted to a small set of values: in all the packet classifiers we examined, it contained only TCP, UDP, ICMP, IGMP, (E)IGRP, GRE and IPINIP or the wildcard '*', i.e. the set of all transport protocols.

4) The Transport-layer fields have a wide variety of specifications. Many (10.2%) of them are range specifications (e.g. of the type gt 1023, i.e. greater than 1023, or range 20-24). In particular, the specification 'gt 1023' occurs in about 9% of the rules. This has an interesting consequence. It has been suggested in the literature (for example in [17]) that to ease implementation, ranges could be represented by a series of prefixes. In that case, this common range would require six separate prefixes (1024-2047, 2048-4095, 4096-8191, 8192-16383, 16384-32767, 32768-65535), resulting in a large increase in the size of the classifier.

5) About 14% of all the classifiers had a rule with a non-contiguous mask (10.2% of all rules had non-contiguous masks). For example, a rule which has a Network-layer address/mask specification of 137.98.217.0/8.22.160.80 has a non-contiguous mask (i.e. not a simple prefix) in its specification. This observation came as a surprise. One suggested reason is that some network operators choose a specific numbering/addressing scheme for their routers.
It tells us that a packet classification algorithm cannot always rely on Network-layer addresses being prefixes.

6) It is common for many different rules in the same classifier to share a number of field specifications (compare, for example, the entries in Table 3). These arise because a network operator frequently wants to specify the same policy for a pair of communicating groups of hosts or subnetworks (e.g. deny every host in group1 access to any host in group2). Hence, given a simple address/mask syntax specification, a separate rule is commonly written for each pair in the two (or more) groups. We will see later how we make use of this observation in our algorithm.

7) We found that 8% of the rules in the classifiers were redundant. We say that a rule R is redundant if one of the following conditions holds:

(a) There exists a rule T appearing earlier than R in the classifier such that R is a subset of T. Thus, no packet will ever match R‡ and R is redundant. We call this backward redundancy; 4.4% of the rules were backward redundant.

(b) There exists a rule T appearing after R in the classifier such that (i) R is a subset of T, (ii) R and T have the same actions and (iii) for each rule V appearing in between R and T in the classifier, either V is disjoint from R, or V has the same action as R. We call this forward redundancy; 3.6% of the rules were forward redundant. In this case, R can be eliminated to obtain a new smaller classifier.
A packet matching R in the original classifier will match T in the new classifier, but with the same action.

† If a field is not specified, the wildcard specification is assumed. Note that this is affected by the syntax of the rule specification language.

‡ Recall that rules are prioritized in order of their appearance in the classifier.

Figure 2: The distribution of the total number of rules per classifier. Note the logarithmic scale on both axes.

3 Goals

In this section, we highlight our objectives when designing a packet-classification scheme:

1) The algorithm should be fast enough to operate at OC48c line rates (2.5 Gb/s) and preferably at OC192c line rates (10 Gb/s). For applications requiring a deterministic classification time, we need to classify 7.8 million packets/s and 31.2 million packets/s respectively (assuming a minimum length IP datagram of 40 bytes). In some applications, an algorithm that performs well in the average case may be acceptable using a queue prior to the classification engine.
For these applications, we need to classify 0.88 million packets/s and 3.53 million packets/s respectively (assuming an average Internet packet size of 354 bytes [18]).

2) The algorithm should ideally allow matching on arbitrary fields, including Link-layer, Network-layer, Transport-layer and, in some exceptional cases, the Application-layer headers.†† It makes sense for the algorithm to optimize for the commonly used header fields, but it should not preclude the use of other header fields.

3) The algorithm should support general classification rules, including prefixes, operators (like range, less than, greater than, equal to, etc.) and wildcards. Non-contiguous masks may be required.

4) The algorithm should be suitable for implementation in both hardware and software. Thus it needs to be fairly simple to allow high speed implementations. For the highest speeds (e.g. for OC192c at this time), we expect that hardware implementation will be necessary and therefore the scheme should be amenable to pipelined implementation.

5) Even though memory prices have continued to fall, the memory requirements of the algorithm should not be prohibitively expensive. Furthermore, when implemented in software, a memory-efficient algorithm can make use of caches. When implemented in hardware, the algorithm can benefit from faster on-chip memory.

6) The algorithm should scale in terms of both memory and speed with the size of the classifier. An example from the destination routing lookup problem is the popular multiway trie [16] which can, in the worst case, require enormous amounts of memory, but performs very well and with much smaller storage on real-life routing tables. We believe it to be important to evaluate algorithms with realistic data sets.

7) In this paper, we will assume that classifiers change infrequently (e.g. when a new classifier is manually added or at router boot time). When this assumption holds, an algorithm could employ reasonably static data structures. Thus, a preprocessing time of several seconds may be acceptable to calculate the data structures. Note that this assumption may not hold in some applications, such as when routing tables are changing frequently, or when fine-granularity flows are dynamically or automatically allocated.

†† That is why packet-classifying routers have been called "layerless switches".

Later, we describe a simple heuristic algorithm called RFC† (Recursive Flow Classification) that seems to work well with a selection of classifiers in use today. It appears practical to use the classification scheme for OC192c rates in hardware and up to OC48c rates in software. However, it runs into problems with space and preprocessing time for big classifiers (more than 6000 rules with 4 fields). We describe an optimization which decreases the storage requirements of the basic RFC scheme and enables it to handle a classifier with 15,000 rules with 4 fields in less than 4MB.

4 Previous Work

We start with the simplest classification algorithm: for each arriving packet, evaluate each rule sequentially until a rule is found that matches all the headers of the packet. While simple and efficient in its use of memory,
this classifier clearly has poor scaling properties; the time to perform a classification grows linearly with the number of rules.

A hardware-only algorithm could employ a ternary CAM (content-addressable memory). Ternary CAMs store words with three-valued digits: '0', '1' or 'X' (wildcard). The rules are stored in the CAM array in the order of decreasing priority. Given a packet header to classify, the CAM performs a comparison against all of its entries in parallel, and a priority encoder selects the first matching rule. While simple and flexible, CAMs are currently suitable only for small tables; they are too expensive, too small and consume too much power for large classifiers. Furthermore, some operators are not directly supported, and so the memory array may be used very inefficiently. For example, the rule 'gt 1023' requires six array entries to be used. But with continued improvements in semiconductor technology, large ternary CAMs may become viable in the future.

A solution called 'Grid of Tries' was proposed in [17]. In this scheme, the trie data structure is extended to two fields. This is a good solution if the filters are restricted to only two fields, but is not easily extendible to more fields. In the same paper, a general solution for multiple fields called 'Crossproducting' is described. It takes about 1.5MB for 50 rules and, for bigger classifiers, the authors propose a caching technique (on-demand crossproducting) with a non-deterministic classification time.

† Not to be confused with "Request For Comments".

Another recent proposal [15] describes a scheme optimized for implementation in hardware.
Employing bit-level parallelism to match multiple fields concurrently, the scheme is reported to support up to 512 rules, classifying one million packets per second with an FPGA device and five 1M-bit SRAMs. As described, the scheme examines five header fields in parallel and uses bit-level parallelism to complete the operation. In the basic scheme, the memory storage is found to scale quadratically and the memory bandwidth linearly with the size of the classifier. A variation is described that decreases the space requirement at the expense of higher execution time. In the same paper, the authors describe an algorithm for the special case of two fields with one field including only intervals created by prefixes. This takes $O(\text{number-of-prefix-lengths} + \log n)$ time and $O(n)$ space for a classifier with n rules.

There are several standard problems in the field of computational geometry that resemble packet classification. One example is the point location problem in multidimensional space, i.e. the problem of finding the enclosing region of a point, given a set of regions. However, the regions are assumed to be non-overlapping (as opposed to our case). Even for non-overlapping regions, the best bounds for n rules and F fields, for F > 3, are $O(\log n)$ in time with $O(n^F)$ space; or $O(\log^{F-1} n)$ time and $O(n)$ space [6].
Clearly this is impractical: with just 100 rules and 4 fields, $n^F$ space is about 100MB; and $\log^{F-1} n$ time is about 350 memory accesses.

5 Proposed Algorithm RFC (Recursive Flow Classification)

5.1 Structure of the Classifiers

As the last example above illustrates, the task of classification is extremely complex in the worst case. However, we can expect there to be structure in the classifiers which, if exploited, can simplify the task of the classification algorithm.

To illustrate the structure we found in our dataset, let's start with an example in just two dimensions, as shown in Figure 3. We can represent a classifier with two fields (e.g. source and destination prefixes) in 2-dimensional space, where each rule is represented by a rectangle. Figure 3a shows three such rectangles, where each rectangle represents a rule with a range of values in each dimension. The classifier contains three explicitly defined rules, and the default (background) rule. Figure 3b shows how three rules can overlap to create five regions (each region is shaded differently), and Figure 3c shows three rules creating seven regions. A classification algorithm must keep a record of each region and be able to determine the region to which each newly arriving packet belongs. Intuitively, the more regions the classifier contains, the more storage is required, and the longer it takes to classify a packet.

Even though the number of rules is the same in each figure, the task of the classification algorithm becomes progressively harder as it needs to distinguish more regions.
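The effect of overlap on the number of regions can be checked with a short sketch. It is not part of the paper: the function name `count_regions`, the rectangle encoding and the three example arrangements are assumptions made for illustration. A region is taken here to mean the set of points covered by exactly the same subset of rules, which may differ from the shaded areas of Figure 3 when such a set is not connected.

```python
from itertools import product


def count_regions(rects):
    """Count regions formed by axis-aligned rectangles plus the background.

    rects: list of (x_lo, x_hi, y_lo, y_hi) with inclusive integer bounds.
    Two points are in the same region if the same subset of rectangles
    covers them (an assumption of this sketch).
    """
    # Coverage can only change at a lower bound or just past an upper bound,
    # so one sample point per pair of cut points is enough.
    xs = sorted({v for x_lo, x_hi, _, _ in rects for v in (x_lo, x_hi + 1)})
    ys = sorted({v for _, _, y_lo, y_hi in rects for v in (y_lo, y_hi + 1)})
    regions = {frozenset()}  # the default (background) rule
    for x, y in product(xs, ys):
        covering = frozenset(
            i for i, (x_lo, x_hi, y_lo, y_hi) in enumerate(rects)
            if x_lo <= x <= x_hi and y_lo <= y <= y_hi
        )
        regions.add(covering)
    return len(regions)


# Hypothetical arrangements of three rules (not the ones drawn in Figure 3).
disjoint = [(0, 1, 0, 1), (3, 4, 0, 1), (6, 7, 0, 1)]
one_overlap = [(0, 3, 0, 3), (2, 5, 2, 5), (8, 9, 8, 9)]
nested_overlap = [(0, 5, 0, 5), (4, 9, 0, 5), (2, 7, 4, 5)]

for name, rects in [("disjoint", disjoint),
                    ("one_overlap", one_overlap),
                    ("nested_overlap", nested_overlap)]:
    print(name, count_regions(rects))
```

With the same three rules in each case, these arrangements yield 4, 5 and 7 regions respectively, so the amount of state a classifier must distinguish depends on how the rules overlap and not only on how many there are.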
In general, it can be shown that the number of regions created by n rules in F dimensions can be as much as $O(n^F)$.

We analyzed the structure in our dataset to determine the number of overlapping regions, and we found it to be considerably smaller than the worst case. Specifically, for the biggest classifier with 1734 rules, we found the number of distinct overlapping regions in four dimensions to be 4316, compared to a worst possible case of approximately $10^{13}$. Similarly, we found the number of overlaps to be relatively small in each of the classifiers we looked at. As we will see, our algorithm will exploit this structure to simplify its task.

Figure 3: Some possible arrangements of three rectangles. (a) 4 regions (b) 5 regions (c) 7 regions

[Figure panel: Simple One-Step Classification]

5.2 Algorithm

The problem of packet classification can be viewed as one of mapping S bits in the packet header to T bits of classID, (where $T = \log N$, and T

24-bits used to express the Transport-layer Destination and Transport-layer Protocol (chunk #6 and #4 respectively) are reduced to just three bits by Phases 0 and 1 of the RFC algorithm. We start with chunk #6 that contains the 16-bit Transport-layer Destination. The corresponding column in Table 3 partitions the possible values into four sets: (a) {www=80} (b) {20,21} (c) {>1023} (d) {all remaining numbers in the range 0-65535}; which can be encoded using two bits, 00b through 11b. We call these two-bit values the "equivalence class IDs" (eqIDs). So, in Phase 0 of the RFC algorithm, the memory corresponding to chunk #6 is indexed using the $2^{16}$ different values of chunk #6. In each location, we place the eqID for this Transport-layer Destination. For example, the value in the memory corresponding to "chunk #6 = 20" is 00b, corresponding to the set {20,21}. In this way, a 16-bit to two-bit reduction is obtained for chunk #6 in Phase 0. Similarly, the eight-bit Transport-layer Protocol column in Table 3 consists of three sets: (a) {tcp} (b) {udp} (c) {all remaining numbers in the range 0-255}, which can be encoded using two-bit eqIDs. And so chunk #4 undergoes an eight-bit to two-bit reduction in Phase 0.

In the second phase, we consider the combination of the Transport-layer Destination and Protocol fields.
From Table 3 we can see that the five sets are: (a) {({80}, {udp})} (b) {({20-21}, {udp})} (c) {({80}, {tcp})} (d) {({gt 1023}, {tcp})} (e) {all remaining crossproducts}; which can be represented using three-bit eqIDs. The index into the memory in Phase 1 is constructed from the two two-bit eqIDs from Phase 0 (in this case, by concatenating them). Hence, in Phase 1 we have reduced the number of bits from four to three. If we now consider the combination of both Phase 0 and Phase 1, we find that 24 bits have been reduced to just three bits.

In what follows, we will use the term "Chunk Equivalence Set" (CES) to denote a set above, e.g. each of the three sets: (a) {tcp} (b) {udp} (c) {all remaining numbers in the range 0-255} is said to be a CES because if there are two packets with protocol values lying in the same set and with otherwise identical headers, the rules of the classifier do not distinguish between them.

Each CES can be constructed in the following manner:

First Phase (Phase 0): Consider a fixed chunk of size b bits, and those component(s) of the rules in the classifier corresponding to this chunk. Project the rules in the classifier on to the number line $[0, 2^b - 1]$. Each component projects to a set of (not necessarily contiguous) intervals on the number line. The end points of all the intervals projected by these components form a set of non-overlapping intervals. Two points in the same interval always belong to the same equivalence set. Also, two intervals are in the same equivalence set if exactly the same rules project onto them.
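The Phase 0 construction just described can be sketched in a few lines. This is an illustrative sketch, not the paper's pseudocode: the name `phase0_table`, the (lo, hi) range encoding and the six-entry destination-port column are assumptions, chosen so that the column reproduces the four port sets listed earlier. For simplicity each rule contributes one contiguous range, and eqIDs are numbered in order of first appearance along the number line, so the numbering is arbitrary.

```python
def phase0_table(specs, width_bits):
    """Build the Phase 0 lookup table for one chunk.

    specs: one inclusive (lo, hi) range per rule for this chunk; a wildcard
           is (0, 2**width_bits - 1). Non-contiguous components are not handled.
    Returns (table, classes): table[v] is the eqID of chunk value v and
    classes[e] is the tuple of rule indices projecting onto set e.
    """
    size = 1 << width_bits
    # Interval end points split the number line into elementary intervals.
    cuts = sorted({0, size} | {lo for lo, _ in specs} | {hi + 1 for _, hi in specs})
    table = [0] * size
    eqid_of = {}  # tuple of matching rule indices -> eqID
    for start, end in zip(cuts, cuts[1:]):
        matching = tuple(i for i, (lo, hi) in enumerate(specs) if lo <= start <= hi)
        # Intervals onto which exactly the same rules project share one set.
        eqid = eqid_of.setdefault(matching, len(eqid_of))
        table[start:end] = [eqid] * (end - start)
    classes = sorted(eqid_of, key=eqid_of.get)
    return table, classes


# Hypothetical destination-port column: wildcard, 80, 20-21, 80, gt 1023, gt 1023.
ports = [(0, 65535), (80, 80), (20, 21), (80, 80), (1024, 65535), (1024, 65535)]
table, classes = phase0_table(ports, 16)
print(len(classes))  # number of equivalence sets for this chunk
print(table[20], table[21], table[80], table[1023], table[1024])
```

Under these assumptions the 65,536 possible port values collapse to four equivalence sets, so each table entry needs only two bits.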
As an example, consider chunk #6 (Destination port) of the classifier in Table 3. The end-points of the intervals (I0..I4) and the constructed equivalence sets (E0..E3) are shown in Figure 7. The RFC table for this chunk is filled with the corresponding eqIDs. Thus, in this example, table(20) = 00b, table(23) = 11b etc. The pseudocode for computing the eqIDs in Phase 0 is shown in Figure 19 in the Appendix.

To facilitate the calculation of the eqIDs for subsequent RFC phases, we assign a class bitmap (CBM) for each CES indicating which rules in the classifier contain this CES for the corresponding chunk. This bitmap has one bit for each rule in the classifier. For example, E0 in Figure 7 will have the CBM 101000, indicating that the first and the third rules of the classifier in Table 3 contain E0 in chunk #6. Note that the class bitmap is not physically stored in the lookup table: it is just used to facilitate the calculation of the stored eqIDs by the preprocessing algorithm.

Figure 7: An example of computing the four equivalence classes E0...E3 for chunk #6 (corresponding to the 16-bit Transport-layer destination port number) in the classifier of Table 3. E0 = {20,21}, E1 = {80}, E2 = {1024-65535}, E3 = {0-19, 22-79, 81-1023}.

Subsequent Phases: A chunk in a subsequent phase is formed by a combination of two (or more) chunks obtained from memory lookups in previous phases, with a corresponding CES. If, for example, the resulting chunk is of width b bits, we again create equivalence sets such that two b-bit numbers that are not distinguished by the rules of the classifier belong to the same CES. Thus, (20,udp) and (21,udp) will be in the same CES in the classifier of Table 3 in Phase 1.
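A minimal sketch of how a subsequent-phase table might be derived from class bitmaps, assuming a hypothetical four-rule classifier that matches the five crossproduct sets named earlier: (80, udp), (20-21, udp), (80, tcp), (gt 1023, tcp). The function name `combine_chunks`, the use of Python sets in place of bitmaps, and the arithmetic index `i * len(classes_b) + j` (standing in for bit concatenation of the two eqIDs) are all assumptions of this sketch, not the paper's pseudocode.

```python
def combine_chunks(classes_a, classes_b):
    """Combine two chunks from an earlier phase into one later-phase table.

    classes_a[i] and classes_b[j] are the rule sets (class bitmaps, written
    as Python sets) of eqID i of chunk A and eqID j of chunk B.
    Returns (table, classes), with table indexed by i * len(classes_b) + j.
    """
    table = []
    eqid_of = {}  # rule set -> new eqID
    for rules_a in classes_a:
        for rules_b in classes_b:
            # A rule matches the pair only if it matches both components.
            both = frozenset(rules_a) & frozenset(rules_b)
            table.append(eqid_of.setdefault(both, len(eqid_of)))
    classes = sorted(eqid_of, key=eqid_of.get)
    return table, classes


# Rule indices: 0 = (80, udp), 1 = (20-21, udp), 2 = (80, tcp), 3 = (gt 1023, tcp).
port_classes = [{1}, {0, 2}, {3}, set()]   # {20,21}, {80}, {>1023}, all other ports
proto_classes = [{2, 3}, {0, 1}, set()]    # tcp, udp, all other protocols
table, classes = combine_chunks(port_classes, proto_classes)
print(table)         # [0, 1, 0, 2, 3, 0, 4, 0, 0, 0, 0, 0]
print(len(classes))  # 5
```

Ports 20 and 21 share one Phase 0 eqID, so (20,udp) and (21,udp) index the same entry of the combined table. The twelve entries hold only five distinct eqIDs, which fit in three bits.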
To determine the new equivalence sets for this phase, we compute all possible intersections of the equivalence sets from the previous phases being combined. Each distinct intersection is an equivalence set for the newly created chunk. The pseudocode for this preprocessing is shown in Figure 20 of the Appendix.

5.3 A simple complete example of RFC

Realizing that the preprocessing steps are involved, we present a complete example of a classifier, showing how the RFC preprocessing is performed to determine the contents of the memories, and how a packet can be looked up as part of the RFC operation. The example is shown in Figure 22 in the Appendix. It is based on a 4-field classifier of Table 6, also in the Appendix.

6 Implementation Results

In this section, we consider how the RFC algorithm can be implemented and how it performs. First, we consider the complexity of preprocessing and the resulting storage requirements. Then we consider the lookup performance to determine the rate at which packets can be classified.

6.1 RFC Preprocessing

With our classifiers, we choose to split the 32-bit Network-layer source and destination address fields into two 16-bit chunks each. These are chunks #0,1 and #2,3 respectively. As we found a maximum of four fields in our classifiers, this means that Phase 0 of RFC has six chunks: chunk #4 corresponds to the protocol and protocol-flags field and chunk #5 corresponds to the Transport-layer Destination field.

Figure 8: Two example reduction trees for P=3 RFC phases.

Figure 9: Two example reduction trees for P=4 RFC phases.

The performance of RFC can be tuned with two parameters: (i) the number of phases, P, that we choose to use, and (ii) given a value of P, the reduction tree used. For instance, two of the several possible reduction trees for P=3 and P=4 are shown in Figure 8 and Figure 9 respectively. (For P=2, there is only one reduction tree possible.) When there is more than one reduction tree possible for a given value of P, we choose a tree based on two heuristics: (i) we combine those chunks together which have the most "correlation", e.g. we combine the two 16-bit chunks of Network-layer source address in the earliest phase possible, and (ii) we combine as many chunks as we can without causing unreasonable memory consumption. Following these heuristics, we find that the "best" reduction tree for P=3 is tree-B in Figure 8, and the "best" reduction tree for P=4 is tree-A in Figure 9.

Figure 10: The RFC storage requirements in Megabytes for two phases using the classifiers available to us. This special case of RFC is identical to the Crossproducting method of [17].

Figure 11: The RFC storage requirements in Kilobytes for three phases using the classifiers available to us. The reduction tree used is tree-B in Figure 8.

Now, let us look at the performance of RFC using our set of classifiers. Our first goal is to keep the total amount of memory reasonably small.
The memory requirements for each of our classifiers are plotted in Figure 10, Figure 11 and Figure 12 for P=2, 3 and 4 phases respectively. The graphs show how the memory usage increases with the number of rules in each classifier. For practical purposes, it is assumed that memory is only available in widths of 8, 12 or 16 bits. Hence, an eqID requiring 13 bits is assumed to occupy 16 bits in the RFC table.

As we might expect, the graphs show that as we increase the number of phases from three to four, we require a smaller total amount of memory. However, this comes at the expense of two additional memory accesses, illustrating the trade-off between memory consumption and lookup time in RFC. Our second goal is to keep the preprocessing time small. And so in Figure 13 we plot the preprocessing time required for both three and four phases of RFC.†

† The case P=2 is not plotted: it was found to take hours of preprocessing time because of the unwieldy size of the RFC tables.

Figure 12: The RFC storage requirements in Kilobytes for four phases using the classifiers available to us. The reduction tree used is tree-A in Figure 9.

Figure 13: The preprocessing times for three and four phases in seconds, using the set of classifiers available to us. This data is taken by running the RFC preprocessing code on a 333MHz Pentium-II PC running the Linux operating system.

The graphs indicate that, for these classifiers, RFC is suitable if (and only if) the rules change relatively slowly; for example, not more than once every few seconds. Thus, it may be suitable in environments where rules are changed infrequently, for example if they are added manually, or when a router reboots.

For applications where the tables change more frequently, it may be possible to make incremental changes to the tables. This is a subject requiring further investigation.

Finally, note that there are some similarities between the RFC algorithm and the bit-level parallel scheme in [15]; each distinct bitmap in [15] corresponds to a CES in the RFC algorithm. Also, note that when there are just two phases, RFC corresponds to the crossproducting method described in [17].

6.2 RFC Lookup Performance

The RFC lookup operation can be performed both in hardware and in software.† We will discuss the two cases separately, exploring the lookup performance in each case.

Figure 14: An example hardware design for RFC with three phases. The latches for holding data in the pipeline and the on-chip control logic are not shown. This design achieves OC192 rates in the worst case for 40-byte packets, assuming that the phases are pipelined with 4 clock cycles (at 125MHz clock rate) per pipeline stage.

Hardware

An example hardware implementation for the tree tree-B in Figure 8 (three phases) is illustrated in Figure 14 for four fields (six chunks in Phase 0). This design is suitable for all the classifiers in our dataset, and uses two 4Mbit SRAMs and two 4-bank 64Mbit SDRAMs [19] clocked at 125 MHz.‡ The design is pipelined such that a new lookup may begin every four clock cycles. The pipelined RFC lookup proceeds as follows:

1) Pipeline Stage 0: Phase 0 (Clock cycles 0-3): In the first three clock cycles, three accesses are made to the two SRAM devices in parallel to yield the six eqIDs of Phase 0. In the fourth clock cycle, the eqIDs from Phase 0 are combined to compute the two indices for the next phase.

2) Pipeline Stage 1: Phase 1 (Clock cycles 4-7): The SDRAM devices can be accessed every two clock cycles, but we assume that a given bank can be accessed again only after eight clock cycles. By keeping the two memories for Phase 1 in different banks of the SDRAM, we can perform the Phase 1 lookups in four clock cycles. The data is replicated in the other two banks (i.e. two banks of memory hold a fully redundant copy of the lookup tables for Phase 1). This allows Phase 1 lookups to be performed on the next packet as soon as the current packet has completed. In this way, any given bank is accessed once every eight clock cycles.

3) Pipeline Stage 2: Phase 2 (Clock cycles 8-11): Only one lookup is to be made.
The operati<strong>on</strong> is otherwise identical toPhase 1.Hence, we can see that approximately 30 milli<strong>on</strong> packets can betNote that the RFC preprocessing is always performed in software.$ These devices are in producti<strong>on</strong> in industry at the time of writingthis paper. In fact, even bigger and faster devices are availabletoday - see [19]1.54

classified per sec<strong>on</strong>d (to be exact, 3 1.25 milli<strong>on</strong> packets per sec<strong>on</strong>dwith a 125MHz clock) with a hardware cost of approximately $50.+This is fast enough to process minimum length packets at theOC 192~ rate.SoftwareFigure 21 (Appendix) provides pseudocode to perform RFC lookups.When written in ‘C’, approximately 30 lines of code arerequired to implement RFC. When compiled <strong>on</strong> a 333Mhz Pentium-IIPC running Windows NT we found that the worst case pathfor the code took(140cZks + 9. fm) for three phases, and(146&s + 11 . fm) for four phases, where t,,l is the memoryaccess timc.$ With tm = 60~1s , this corresp<strong>on</strong>ds to 0.98~~ and1.1~s for three and four phases respectively. This implies RFC canperform close to <strong>on</strong>e milli<strong>on</strong> packets per sec<strong>on</strong>d in the worst casefor our classifiers. The average lookup time was found to beapproximately 50% faster than the worst case: Table 4 shows theaverage time taken per lookup for 100,000 randomly generatedpackets for some classifiers.Number of Rules inClassifierTable 4:39 587113 582646 668827 6111112 7331734 621Average Time perlookup (ns)The pseudocode in Figure 21 calculates the indices into each memoryusing multiplicati<strong>on</strong>/additi<strong>on</strong> operati<strong>on</strong>s <strong>on</strong> eqIDs from previousphases. Alternatively. the indices can be computed by simplec<strong>on</strong>catenati<strong>on</strong>. This has the effect of increasing the memory c<strong>on</strong>sumedbecause the tables are then not as tightly packed. Given thesimpler processing, we might expect the classificati<strong>on</strong> .fime todecrease at the expense of increased memory usage. Indeed thememory c<strong>on</strong>sumed grows approximately two-fold <strong>on</strong> the classifiers.Surprisingly, we saw no significant reducti<strong>on</strong> in classificati<strong>on</strong>times. 
We believe that this is because the processing time is dominated by memory access time as opposed to the CPU cycle time.

6.3 Larger Classifiers

As we have seen, RFC performs well on the real-life classifiers available to us. But how will RFC perform with larger classifiers that might appear in the future? Unfortunately, it is difficult to accurately predict the memory consumption of RFC as a function of the size of the classifier: the performance of RFC is determined by the structure present in the classifier. With pathological sets of rules, RFC could scale geometrically with the number of rules. Fortunately, such cases do not seem to appear in practice.

To estimate how RFC might perform with future, larger classifiers, we synthesized large artificial classifiers. We used two different ways to create large classifiers (given the importance of the structure, it did not seem meaningful to generate rules randomly):

1) A large classifier can be created by concatenating the classifiers belonging to the same network, and treating the result as a single classifier. Effectively, this means merging together the individual classifiers for different services. Such an implementation is actually desirable in scenarios where the designer may not want more than one set of RFC tables for the whole network. In such cases, the classID obtained would have to be combined with some other information (such as classifier ID) to obtain the correct intended action. By only concatenating classifiers from the same network, we were able to create classifiers up to 3,896 rules. For each classifier created, we performed RFC with both three and four phases. The results are shown in Figure 15.

2) To create even larger classifiers, we concatenated all the classifiers of a few (up to ten) different networks.
The performance of RFC with four phases is plotted as the 'Basic RFC' curve in Figure 18. We found that RFC frequently runs into storage problems for classifiers with more than 6000 rules. Employing more phases does not help as we must combine at least two chunks in every phase, and end up with one chunk in the final phase.†† An alternative way to process large classifiers would be to split them into two (or more) parts and construct separate RFC tables for each part. This would of course come at the expense of doubling the number of memory accesses.‡‡

† Under the assumption that SDRAMs are now available at $1.50 per megabyte, and SRAMs are $12 for a 4Mbyte device.

‡ The performance of the lookup code was analyzed using VTune [20], an Intel performance analyzer for processors of the Pentium family.

†† With six chunks in Phase 0, we could have increased the number of phases to a maximum of six. However we found no appreciable improvement by doing so.

‡‡ Actually, for Phase 0, we need not lookup memory twice for the same chunk if we use wide memories. This would help us access the contents of both the RFC tables in one memory access.

7 Variations

Several variations and improvements of RFC are possible. First, it should be easy to see how RFC can be extended to process a larger number of fields in each packet header.

Second, we can possibly speed up RFC by taking advantage of available fast lookup algorithms that find longest matching prefixes in one field. Note that in our examples, we use three memory accesses each for the source and destination Network-layer address lookups during the first two phases of RFC. This is necessary because of the considerable number of non-contiguous address/mask specifications. In the event that only prefixes are present in the specification, one can use a more sophisticated and faster technique for looking up in one dimension, e.g. one of the methods described in [1][5][7][9] or [16].

Third, we can employ a technique described below to reduce the memory requirements when processing large classifiers.

Figure 15: The memory consumed by RFC for three and four phases on classifiers created by merging all the classifiers of one network.

Figure 16: The memory consumed with three phases with the adjGrp optimization enabled on the large classifiers created by concatenating all the classifiers of one network.

7.1 Adjacency Groups

Since the size of the RFC tables depends on the number of chunk equivalence classes, we focus our efforts on trying to reduce this number. This we do by merging two or more rules of the original classifier as explained below. We find that each additional phase of RFC further increases the amount of compaction possible on the original classifier.

First we define some notation. We call two distinct rules R and S, with R appearing first in the classifier, adjacent in dimension 'i' if all of the following three conditions hold: (1) They have the same action, (2) All but the i-th field have the exact same specification in the two rules, and (3) All rules appearing in between R and S in the classifier have either the same action or are disjoint from R. Two rules are said to be simply adjacent if they are adjacent in some dimension. Thus the second and third rules in the classifier of Table 3 are adjacent† in the dimension corresponding to the Transport-layer Destination field. Similarly the fifth rule is adjacent to the sixth but not to the fourth. Once we have determined that two rules R and S are adjacent, we merge them to form a new rule T with the same action as R (or S). It has the same specifications as that of R (or S) for all the fields except that of the i-th, which is simply the logical-OR of the i-th field specifications of R and S. The third condition above ensures that the relative priority of the rules in between R and S will not be affected by this merging.

† Adjacency can also be looked at this way: treat each rule with F fields as a boolean expression of F (multi-valued) variables. Initially each rule is a conjunction, i.e. a logical-AND of these variables. Two rules are defined to be adjacent if they are adjacent vertices in the F-dimensional hypercube created by the symbolic representation of the F fields.

An adjacency group (adjGrp) is defined recursively as: (1) Every rule in the original classifier is an adjacency group, and (2) Every merged rule which is created by merging two or more adjacency groups is an adjacency group.

We compact the classifier as follows. Initially, every rule is in its own adjGrp. Next, we combine adjacent rules to create a new smaller classifier. One simple way of doing this is to iterate over all fields in turn, checking for adjacency in each dimension. After these iterations are completed, the resulting classifier will have no more adjacent rules. We do similar merging of adjacent rules after each RFC phase. As each RFC phase collapses some dimensions, groups which were not adjacent in earlier phases may become so in later stages. In this way, the number of adjGrps and hence the size of the classifier keeps on decreasing with every phase.
An example of this operation is shown in Figure 17.

Note that there is absolutely no change in the actual lookup operation: the equivalence IDs are now simply pointers to bitmaps which keep track of adjacency groups rather than the original rules. To demonstrate the benefits of this optimization for both three and four phases, we plot in Figure 16 the memory consumed with three phases on the 101 large classifiers created by concatenating all the classifiers belonging to one network; and in Figure 18, the memory consumed with four phases on the even larger classifiers created by concatenating all the classifiers of different networks together. The figures show that this optimization helps reduce storage requirements. With this optimization, RFC can now handle a 15,000 rule classifier with just 3.85MB. The reason for the reduction in storage is that several rules in the same classifier commonly share a number of specifications for many fields.

However, the space savings come at a cost. For although the classifier will correctly identify the action for each arriving packet, it cannot tell which rule in the original classifier it matched. Because the rules have been merged to form adjGrps, the distinction between each rule has been lost. This may be undesirable in applications that maintain matching statistics for each rule.
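The compaction procedure of Section 7.1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: each field specification is modelled as a set of symbolic values (so the logical-OR of two specifications is a set union), two rules count as disjoint when some field has no value in common, and a merged rule is kept at the position of the earlier rule. The names `rule`, `adjacent`, `merge_dimension` and `compact` are hypothetical, and the example data is the set of rules shown in Figure 17 with an assumed common action "permit".

```python
from typing import FrozenSet, List, Tuple

Fields = Tuple[FrozenSet[str], ...]
Rule = Tuple[Fields, str]          # (per-field value sets, action)


def rule(action: str, *values: str) -> Rule:
    """Build a rule whose every field is a single symbolic value."""
    return tuple(frozenset([v]) for v in values), action


def disjoint(r: Rule, s: Rule) -> bool:
    """Assumed test: rules are disjoint if some field shares no value."""
    return any(not (a & b) for a, b in zip(r[0], s[0]))


def adjacent(rules: List[Rule], i: int, j: int, dim: int) -> bool:
    """Conditions (1)-(3) for rules[i] (earlier) and rules[j] (later)."""
    (rf, ra), (sf, sa) = rules[i], rules[j]
    if ra != sa:                                   # (1) same action
        return False
    if any(rf[k] != sf[k] for k in range(len(rf)) if k != dim):
        return False                               # (2) all other fields equal
    return all(m[1] == ra or disjoint(rules[i], m)  # (3) rules in between
               for m in rules[i + 1:j])


def merge_dimension(rules: List[Rule], dim: int) -> List[Rule]:
    """Merge pairs adjacent in dimension `dim` until none remain."""
    rules = list(rules)
    merged = True
    while merged:
        merged = False
        for i in range(len(rules)):
            for j in range(i + 1, len(rules)):
                if adjacent(rules, i, j, dim):
                    fields = list(rules[i][0])
                    fields[dim] = fields[dim] | rules[j][0][dim]  # logical-OR
                    rules[i] = (tuple(fields), rules[i][1])
                    del rules[j]
                    merged = True
                    break
            if merged:
                break
    return rules


def compact(rules: List[Rule]) -> List[Rule]:
    """Iterate over all fields in turn until no adjacent rules are left."""
    if not rules:
        return []
    while True:
        before = len(rules)
        for dim in range(len(rules[0][0])):
            rules = merge_dimension(rules, dim)
        if len(rules) == before:
            return rules


# The eight rules of Figure 17 (the action "permit" is an assumption).
FIGURE_17 = [
    rule("permit", "a1", "b1", "c1", "d1"),  # R
    rule("permit", "a1", "b1", "c2", "d1"),  # S
    rule("permit", "a2", "b1", "c2", "d1"),  # T
    rule("permit", "a2", "b1", "c1", "d1"),  # U
    rule("permit", "a1", "b1", "c4", "d2"),  # V
    rule("permit", "a1", "b1", "c3", "d2"),  # W
    rule("permit", "a2", "b1", "c3", "d2"),  # X
    rule("permit", "a2", "b1", "c4", "d2"),  # Y
]
compacted = compact(FIGURE_17)
```

In this sketch the dimensions are visited in index order rather than in the order drawn in Figure 17, but the eight rules still collapse to the same two adjacency groups, RSTU and VWXY. The further merge into a single group in Figure 17 only becomes possible after an RFC phase has collapsed the last two fields into one.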

Figure 17: Example of Adjacency Groups. Some rules of a classifier are shown. Each rule is denoted symbolically by RuleName(FieldName1, FieldName2,...). The '+' denotes a logical OR. All rules shown are assumed to have the same action.

    Original rules:
        R(a1,b1,c1,d1)  S(a1,b1,c2,d1)  T(a2,b1,c2,d1)  U(a2,b1,c1,d1)
        V(a1,b1,c4,d2)  W(a1,b1,c3,d2)  X(a2,b1,c3,d2)  Y(a2,b1,c4,d2)
    Merge along Dimension 3:
        RS(a1,b1,c1+c2,d1)  TU(a2,b1,c1+c2,d1)
        VW(a1,b1,c3+c4,d2)  XY(a2,b1,c3+c4,d2)
    Merge along Dimension 1:
        RSTU(a1+a2,b1,c1+c2,d1)  VWXY(a1+a2,b1,c3+c4,d2)
    Carry out an RFC Phase. Assume: chunks 1 and 2 are combined and also chunks 3 and 4 are combined.
        (a1+a2,b1) reduces to m1
        (c1+c2,d1) reduces to n1
        (c3+c4,d2) reduces to n2
        RSTU(m1,n1)  VWXY(m1,n2)
    Merge:
        RSTUVWXY(m1,n1+n2)
    Continue with RFC...

8 Comparison with other packet classification schemes

Table 5 shows a qualitative comparison of some of the schemes for doing packet classification.

Table 5:

Scheme: Sequential Evaluation
    Pros: Good storage requirements. Works for arbitrary number of fields.
    Cons: Slow lookup rates.

Scheme: Grid of Tries [17]
    Pros: Good storage requirements and fast lookup rates for two fields. Suitable for big classifiers.
    Cons: Not easily extendible to more than two fields. Not suitable for non-contiguous masks.

Scheme: Crossproducting [17]
    Pros: Fast accesses. Suitable for multiple fields. Can be adapted to non-contiguous masks.
    Cons: Large memory requirements. Suitable without caching for small classifiers up to 50 rules.

Scheme: Bit-level Parallelism [15]
    Pros: Suitable for multiple fields. Can be adapted to non-contiguous masks.
    Cons: Large memory bandwidth required. Comparatively slow lookup rate. Hardware only.

Scheme: Recursive Flow Classification
    Pros: Suitable for multiple fields. Works for non-contiguous masks. Reasonable memory requirements for real-life classifiers. Fast lookup rate.
    Cons: Large preprocessing time and memory requirements for large classifiers (i.e. having more than 6000 rules without adjacency group optimization).

Figure 18: The memory consumed with four phases with the adjGrp optimization enabled on the large classifiers created by concatenating all the classifiers of a few different networks. Also shown is the memory consumed when the optimization is not enabled (i.e. the basic RFC scheme). Notice the absence of some points in the Basic RFC curve. For those classifiers, the basic RFC takes too much memory/preprocessing time.

9 Conclusions

It is relatively simple to perform packet classification at high speed using large amounts of storage; or at low speed with small amounts of storage. When matching multiple fields (dimensions) simultaneously, it is difficult to achieve both high classification rate and modest storage in the worst case. We have found that real classifiers (today) exhibit a considerable amount of structure and redundancy. This makes possible simple classification schemes that exploit the structure inherent in the classifier. We have presented one such algorithm, called RFC, which appears to perform well with the selection of real-life classifiers available to us.
For applications in which the tables do not change frequently (for example, not more than once every few seconds) a custom hardware implementation can achieve OC192c rates with a memory cost of less than $50, and a software implementation can achieve OC48c rates. RFC was found to consume too much storage for classifiers with four fields and more than 6,000 rules. But by further exploiting structure and redundancy in the classifiers, a modified version of RFC appears to be practical for up to 15,000 rules.

10 Acknowledgments

We would like to thank Darren Kerr at Cisco Systems for help in providing the classifiers used in this paper. We also wish to thank Andrew McRae at Cisco Systems for independently suggesting the use of bitmaps in storing the colliding rule set, and for useful feedback about the RFC algorithm.

11 References

[1] A. Brodnik, S. Carlsson, M. Degermark, S. Pink, "Small Forwarding Tables for Fast Routing Lookups," Proc. ACM SIGCOMM 1997, pp. 3-14, Cannes, France.
[2] Abhay K. Parekh and Robert G. Gallager, "A generalized processor sharing approach to flow control in integrated services networks: The single node case," IEEE/ACM Transactions on Networking, vol. 1, pp. 344-357, June 1993.
[3] Alan Demers, Srinivasan Keshav, and Scott Shenker, "Analysis and simulation of a fair queueing algorithm," Internetworking: Research and Experience, vol. 1, pp. 3-26, January 1990.
[4] Braden et al., "Resource Reservation Protocol (RSVP) - Version 1 Functional Specification," RFC 2205, September 1997.
[5] B. Lampson, V. Srinivasan, and G. Varghese, "IP lookups using multiway and multicolumn search," in Proceedings of the Conference on Computer Communications (IEEE INFOCOM), (San Francisco, California), vol. 3, pp. 1248-1256, March/April 1998.
[6] M.H. Overmars and A.F. van der Stappen, "Range searching and point location among fat objects," in Journal of Algorithms, 21(3), pp. 629-656, 1996.
[7] M. Waldvogel, G. Varghese, J. Turner, B. Plattner, "Scalable High-Speed IP Routing Lookups," Proc. ACM SIGCOMM 1997, pp. 25-36, Cannes, France.
[8] M. Shreedhar and G. Varghese, "Efficient Fair Queuing Using Deficit Round-Robin," IEEE/ACM Transactions on Networking, vol. 4, no. 3, pp. 375-385, 1996.
[9] P. Gupta, S. Lin, and N. McKeown, "Routing lookups in hardware at memory access speeds," in Proceedings of the Conference on Computer Communications (IEEE INFOCOM), (San Francisco, California), vol. 3, pp. 1241-1248, March/April 1998.
[10] P. Newman, T. Lyon and G. Minshall, "Flow labelled IP: a connectionless approach to ATM," Proceedings of the Conference on Computer Communications (IEEE INFOCOM), (San Francisco, California), vol. 3, pp. 1251-1260, April 1996.
[11] R. Guerin, D. Williams, T. Przygienda, S. Kamat, and A. Orda, "QoS routing mechanisms and OSPF extensions," Internet Draft, Internet Engineering Task Force, March 1998. Work in progress.
[12] Richard Edell, Nick McKeown, and Pravin Varaiya, "Billing Users and Pricing for TCP," IEEE JSAC Special Issue on Advances in the Fundamentals of Networking, September 1995.
[13] Sally Floyd and Van Jacobson, "Random early detection gateways for congestion avoidance," IEEE/ACM Transactions on Networking, vol. 1, pp. 397-413, August 1993.
[14] Steven McCanne and Van Jacobson, "A BSD Packet Filter: A New Architecture for User-level Packet Capture," in Proc. of Usenix Winter Conference, (San Diego, California), pp. 259-269, Usenix, January 1993.
[15] T.V. Lakshman and D. Stiliadis, "High-Speed Policy-based Packet Forwarding Using Efficient Multi-dimensional Range Matching," Proc. ACM SIGCOMM, pp. 191-202, September 1998.
[16] V. Srinivasan and G. Varghese, "Fast IP Lookups using Controlled Prefix Expansion," in Proc. ACM Sigmetrics, June 1998.
[17] V. Srinivasan, S. Suri, G. Varghese and M. Waldvogel, "Fast and Scalable Layer4 Switching," in Proc. ACM SIGCOMM, pp. 203-214, September 1998.
[18] http://www.nlanr.net/N/NA/Leam/plen.970625.hist.
[19] http://www.toshiba.com/taec/nonflash/components/memory.html.
[20] http://developer.intel.com/vtune/analyzer/index.htm.

12 Appendix

    /* Begin Pseudocode */
    /* Phase 0, Chunk j of width b bits */
    for each rule rl in the classifier
    begin
        project the component of rl for chunk j onto the number line (from 0 to 2^b-1),
        marking the start and end points of each of its constituent intervals.
    endfor
    /* Now scan through the number line looking for distinct equivalence classes */
    bmp := 0; /* all bits of bmp are initialised to '0' */
    for n in 0..2^b-1
    begin
        if (any rule starts or ends at n)
            update bmp;
        if (bmp not seen earlier)
        begin
            eq := new_Equivalence_Class();
            eq->cbm := bmp;
        endif
        else eq := the equivalence class whose cbm is bmp;
        table_0_j[n] = eq->ID; /* fill ID in the rfc table */
    endfor
    /* end of pseudocode */

Figure 19: Pseudocode for RFC preprocessing for chunk j of Phase 0
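The Phase 0 preprocessing of Figure 19 can be sketched in Python as follows. This is a minimal illustration rather than the authors' code: the function name `preprocess_phase0` and the input format (for each rule, a list of inclusive `(lo, hi)` intervals on the chunk's number line) are assumptions made for the example, and the intervals of any one rule are assumed not to overlap each other. The class bitmap (cbm) is held in a Python integer, with bit r set when rule r matches.

```python
def preprocess_phase0(rule_intervals, width_bits):
    """Build the Phase 0 table for one chunk.

    rule_intervals[r] is a list of inclusive (lo, hi) intervals covered by
    rule r on this chunk (assumed non-overlapping within a rule).
    Returns (table, cbms): table[n] is the eqID for chunk value n, and
    cbms[eqID] is the bitmap of rules matched by that equivalence class.
    """
    size = 1 << width_bits

    # Mark the start and end points of every interval: toggles[n] holds the
    # rule bits that flip when the scan reaches point n.
    toggles = {}
    for r, intervals in enumerate(rule_intervals):
        for lo, hi in intervals:
            toggles[lo] = toggles.get(lo, 0) ^ (1 << r)
            toggles[hi + 1] = toggles.get(hi + 1, 0) ^ (1 << r)

    # Scan the number line, assigning a new eqID to each bitmap not seen before.
    eq_id_of = {}          # bitmap -> eqID, numbered in order of first appearance
    table = [0] * size
    bmp = 0                # all bits start at 0
    for n in range(size):
        bmp ^= toggles.get(n, 0)
        if bmp not in eq_id_of:
            eq_id_of[bmp] = len(eq_id_of)
        table[n] = eq_id_of[bmp]

    cbms = sorted(eq_id_of, key=eq_id_of.get)
    return table, cbms


# Hypothetical 3-bit chunk with three rules:
#   rule 0 matches 2..3, rule 1 matches 3..5, rule 2 matches everything.
table, cbms = preprocess_phase0([[(2, 3)], [(3, 5)], [(0, 7)]], 3)
```

For this hypothetical chunk the scan finds four equivalence classes, so the eight table entries need only two bits each, whatever the number of rules.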

Table 6: [example classifier; contents not recoverable]

    /* Begin Pseudocode */
    /* Assume that the chunk #i is formed from combining m distinct chunks
       c1, c2, ..., cm of phases p1, p2, ..., pm where p1, p2, ..., pm < j */
    indx := 0; /* indx runs through all the entries of the RFC table, table_j_i */
    listEqs := nil;
    for each CES, c1eq, of chunk c1
    for each CES, c2eq, of chunk c2
    ...
    for each CES, cmeq, of chunk cm
    begin
        intersectedBmp := c1eq->cbm & c2eq->cbm & ... & cmeq->cbm; /* bitwise ANDing */
        neweq := searchList(listEqs, intersectedBmp);
        if (not found in listEqs)
        begin
            /* create a new equivalence class */
            neweq := new_Equivalence_Class();
            neweq->cbm := intersectedBmp;
            add neweq to listEqs;
        endif
        /* Fill up the relevant RFC table contents. */
        table_j_i[indx] := neweq->ID;
        indx++;
    endfor
    /* end of pseudocode */

Figure 20: Pseudocode for RFC preprocessing for chunk i of Phase j (j>0)

    /* Begin Pseudocode */
    for (each chunk, chkNum, of phase 0)
        eqNums[0][chkNum] = contents of appropriate rfctable at memory address pktFields[chkNum];
    for (phaseNum = 1..numPhases-1)
        for (each chunk, chkNum, in Phase phaseNum)
        begin
            /* chd stores the number and description about this chunk's parents chkParents[0..numChkParents-1] */
            chd = parent descriptor of (phaseNum, chkNum);
            indx = eqNums[phaseNum of chkParents[0]][chkNum of chkParents[0]];
            for (i=1..chd->numChkParents-1)
            begin
                indx = indx * (total #equivIDs of chd->chkParents[i]) +
                       eqNums[phaseNum of chd->chkParents[i]][chkNum of chd->chkParents[i]];
                /*** Alternatively: indx = (indx << (#bits of eqID of chd->chkParents[i])) ^
                     (eqNums[phaseNum of chkParents[i]][chkNum of chkParents[i]]) ***/
            endfor
            eqNums[phaseNum][chkNum] = contents of appropriate rfctable at address indx;
        endfor
    return eqNums[numPhases-1][0]; /* this contains the desired classID */
    /* end of pseudocode */

Figure 21: Pseudocode for the RFC Lookup operation with the fields of the packet in pktFields.
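The later-phase preprocessing of Figure 20 and the lookup of Figure 21 can be sketched together in Python. This is an illustrative sketch under stated assumptions, not the authors' code: the names `combine`, `index_of`, `best_rule` and `classify` are hypothetical, class bitmaps are Python integers with bit r set when rule r matches, rule 0 is taken to have the highest priority, and the two Phase 0 tables are hand-built for a made-up classifier with a 3-bit field and a 2-bit field (rule 0: first field 2..3 and second field 1; rule 1: first field 3..5 and second field 0..1; rule 2: matches everything).

```python
from functools import reduce
from itertools import product
from operator import and_


def combine(parent_cbms):
    """Figure 20 style: build one later-phase table from its parents' bitmaps.

    parent_cbms[k][eqID] is the class bitmap of that eqID in parent chunk k.
    Returns (table, cbms) for the new chunk.
    """
    eq_id_of = {}                            # bitmap -> new eqID
    table = []
    for combo in product(*parent_cbms):      # last parent varies fastest
        bmp = reduce(and_, combo)            # rules matched by every parent class
        if bmp not in eq_id_of:
            eq_id_of[bmp] = len(eq_id_of)
        table.append(eq_id_of[bmp])
    cbms = sorted(eq_id_of, key=eq_id_of.get)
    return table, cbms


def index_of(eq_ids, class_counts):
    """Multiply/add index of Figure 21; matches the order used in combine()."""
    indx = eq_ids[0]
    for eq, count in zip(eq_ids[1:], class_counts[1:]):
        indx = indx * count + eq
    return indx


def best_rule(bmp):
    """Lowest set bit = highest-priority matching rule; -1 if none matches."""
    return (bmp & -bmp).bit_length() - 1


# Hand-built Phase 0 tables for the made-up two-field classifier above.
TABLE0 = [0, 0, 1, 2, 3, 3, 0, 0]            # 3-bit field -> eqID
CBMS0 = [0b100, 0b101, 0b111, 0b110]
TABLE1 = [0, 1, 2, 2]                        # 2-bit field -> eqID
CBMS1 = [0b110, 0b111, 0b100]

# Phase 1 (the final phase here) combines both Phase 0 chunks.
FINAL_TABLE, FINAL_CBMS = combine([CBMS0, CBMS1])


def classify(f0, f1):
    """Two Phase 0 lookups, one index computation, one final-phase lookup."""
    eq_ids = [TABLE0[f0], TABLE1[f1]]
    indx = index_of(eq_ids, [len(CBMS0), len(CBMS1)])
    return best_rule(FINAL_CBMS[FINAL_TABLE[indx]])
```

The final table in this sketch has 4 x 3 = 12 entries, one per pair of parent eqIDs. Computing the index by concatenation instead would need a table of 4 x 4 = 16 entries, since the three classes of the second chunk take two bits, which illustrates why concatenation packs the tables less tightly.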

Figure 22: This figure shows the contents of RFC tables for the example classifier of Table 6. The sequence of accesses made by the example packet have also been shown using big gray arrows. The memory locations accessed in this sequence have been marked in bold.

Accesses made by the lookup of a packet with:
    Src Network-layer Address = 0.83.1.32
    Dst Network-layer Address = 0.0.4.6
    Transport-layer Protocol = 17 (udp)
    Dst Transport-layer port number = 22