Fast Simultaneous Alignment of Multiple Range Images Using Index ...



The algorithms described above align two range images at a time, so alignment errors accumulate as the number of range images increases. When the number of range images is very large, a method that aligns all range images simultaneously is therefore required. Neugebauer et al. proposed a simultaneous registration method that adopted projection search of correspondences and the point-plane error metric [9]. Benjemaa et al. extended the method proposed by Bergevin et al. [10] and implemented a simultaneous alignment method while accelerating the pair-wise alignment algorithm by using multi z-buffers [11].

Although various methods have been proposed, the problem common to all of them is the computation cost of the correspondence search. If each of the two range images is assumed to have N vertices, the complexity of the original ICP is $O(N^2)$, since correspondences are searched for among all vertices. In order to accelerate ICP, there are techniques [12, 13] that use Kd-trees and techniques that narrow the search range by using a data cache [14, 15, 16]. However, the complexity of Kd-tree search is $O(N \log N)$; that is, sufficient acceleration cannot be achieved by these algorithms. The computational complexity of the inverse calibration method proposed by Blais is $O(N)$ [6]. However, this method requires precise sensor parameters (intrinsic parameters of the CCD camera, parameters of the scanning mechanism) and pre-computed look-up tables.
In addition, the creation of the look-up tables is very time consuming because the Euclidean distances between each element of a table and every ray of sampled points have to be calculated.

Another problem in aligning a large number of range images is the computation cost of the matrix operations in which the rigid transformations of the range images are computed. Directly solving a non-linear least squares problem is very time consuming [13]. In this case, the linearized algorithm is effective in dealing with a large data set [7]. However, the computation time to solve the linear equations with conventional solvers (SVD, Cholesky decomposition, etc.) rapidly increases as the number of range images increases because the coefficient matrix becomes very large.

We propose a fast method to align a large number of range images simultaneously. Our method has three characteristics. 1) The process of searching corresponding points is accelerated by using index images, which are rapidly created without sensor parameters. 2) The method employs the point-plane error metric and linearized error evaluation. 3) An iterative solver (incomplete Cholesky conjugate gradient method) is applied in order to accelerate the computation of the rigid transformations. In Section 2, the details of our algorithm are described. Some experimental results that demonstrate the effectiveness of our method are shown in Section 3. Our conclusions are described in Section 4.

2. Alignment algorithm

In this section, the details of our alignment algorithm are explained. We assume that all range images have been converted to mesh models. The algorithm is applied in the following steps:

1. For all pairs of partial meshes,
(a) search all correspondences of vertices
(b) evaluate the error terms of all correspondence pairs
2. Compute the transformation matrices of all pairs so as to minimize all errors
3. Iterate steps 1 and 2 until the termination condition is satisfied

First, we explain the fast method to search corresponding points. Then, the details of error evaluation and the computation of rigid transformations are described.

2.1. Correspondence search

Our algorithm employs points and planes to evaluate relative distance, as in the Chen and Medioni method [5]. The corresponding pairs are searched along the line of sight (Fig. 1). Here, the line of sight is defined as the optical axis of a range sensor. Let us denote one mesh as the base mesh and its corresponding mesh as the target mesh. An extension of the line of sight from a vertex of the base mesh crosses a triangle patch of the target mesh and creates the intersecting point. In order to eliminate false correspondences, if the distance between the vertex and the corresponding point is larger than a certain threshold value, the correspondence is removed. This correspondence search is computed for all pairs of mesh models.

Though the threshold distance is given empirically as $l_{given}$, the threshold $l_{th}$ actually used is the smaller of $l_{given}$ and the average distance $\hat{r}$ of all corresponding points.

$$ l_{th} = \begin{cases} l_{given} & (\text{if } l_{given} < \hat{r}) \\ \hat{r} & (\text{otherwise}) \end{cases} \tag{2} $$

$$ \hat{r} = \frac{1}{N} \sum_{i}^{N} \lVert y_i - x_i \rVert \tag{3} $$

N is the number of vertices included in the base mesh.

Figure 1. Searching corresponding points (labels: range finder, ray direction of scene image, base image, target image, correspondence, x, n_x, y, n_y)
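The threshold rule of Eq. 2 and Eq. 3 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`mean_pair_distance`, `select_threshold`, `filter_correspondences`) are hypothetical, points are plain coordinate tuples, and the average is taken over the candidate pairs passed in, which is assumed here to stand for the N base-mesh vertices of Eq. 3.

```python
import math


def mean_pair_distance(base_points, target_points):
    """Average Euclidean distance r_hat between paired points (Eq. 3)."""
    dists = [math.dist(x, y) for x, y in zip(base_points, target_points)]
    return sum(dists) / len(dists)


def select_threshold(l_given, r_hat):
    """Eq. 2: use l_given if it is smaller than r_hat, otherwise r_hat."""
    return l_given if l_given < r_hat else r_hat


def filter_correspondences(base_points, target_points, l_given):
    """Drop pairs whose distance is larger than the selected threshold."""
    r_hat = mean_pair_distance(base_points, target_points)
    l_th = select_threshold(l_given, r_hat)
    kept = [
        (x, y)
        for x, y in zip(base_points, target_points)
        if math.dist(x, y) <= l_th
    ]
    return kept, l_th


if __name__ == "__main__":
    base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    target = [(0.0, 0.0, 1.0), (1.0, 0.0, 2.0), (2.0, 0.0, 9.0)]
    kept, l_th = filter_correspondences(base, target, l_given=5.0)
    print(l_th, len(kept))
```

In this toy input the pair distances are 1, 2, and 9, so the average is 4; with an empirical threshold of 5 the average is the smaller value and the outlying third pair is removed.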

To search correspondences quickly, our method uses index images. Though the complexity of this process is $O(N)$, the same as that of the inverse calibration method, sensor parameters are not required. Furthermore, the searching process can be accelerated by graphics hardware. The details of correspondence search using index images are described below.

2.1.1. Creation of index images

An index image works as a look-up table to retrieve the index of corresponding patches. The procedure for creating an index image is as follows:

1. A unique index number is assigned to each triangle patch of a target mesh.
2. Index numbers are converted to unique colors.
3. Triangle patches of the target mesh are rendered on an image plane with the index colors.

First, a unique integer value is assigned to each triangle patch. Since the assigned value can be any integer number, 0 to n-1 are assigned sequentially, where n is the number of triangle patches.

Next, the assigned index values are converted to unique colors. If the precision of index values is the same as that of rendering colors, the index values are converted directly. Assume that the precision of each color channel is expressed by q bits. Bits 0 to q-1 of an index value are assigned to Red, bits q to 2q-1 to Green, the next q bits to Blue, and the highest q bits to Alpha. If the precision is not the same, the indices have to be converted carefully.

All triangle patches are rendered onto an image plane using the index colors (Fig. 2). The pixels in which no triangle patch is rendered are filled with an exceptional color such as white. The target mesh is assumed to be described in its measured coordinate system.

Figure 2. Rendering of index image (labels: target range image with axes x, y, z; index image of width w and height h)

Projection method

Generally, perspective projection is used for rendering the index images. Perspective projection works well for range images that are measured by sensors that adopt a method like light sectioning. On the other hand, for range images taken by sensors with scanning mechanisms using mirrors, spherical projection is better because the angles between sampled points are equal (or nearly equal) to each other.

Figure 3. Example of index images (with zoom image)

View frustum

To obtain a sufficient number of corresponding points, the rendering area has to be determined properly. All vertices of a target mesh are projected onto the index image plane. Then, the rectangular area $(u_{min}, v_{min}, u_{max}, v_{max})$ that contains all projected vertices is obtained. The view frustum is computed so that all vertices are rendered in this area. Minimum and maximum depths are also acquired during the projection process.

Image resolution

The resolution of an index image $(L_u, L_v)$ has to be determined so that the following conditions are fulfilled.

$$ L_u \geq 2 \times w / \Delta w_{min} \tag{4} $$

$$ L_v \geq 2 \times h / \Delta h_{min} \tag{5} $$

$$ w = u_{max} - u_{min} \tag{6} $$

$$ h = v_{max} - v_{min} \tag{7} $$

Variables w and h represent the width and height of the rendering area respectively (Fig. 2). $\Delta w_{min}$ and $\Delta h_{min}$ represent the minimum width and minimum height of all triangles projected onto the index image plane.

The image resolution $(L_u, L_v)$ can be roughly determined so that the conditions described in inequality 4 and inequality 5 are satisfied. Although the parameters $(w, h, \Delta w_{min}, \Delta h_{min})$ are different in each partial mesh, a unique resolution that satisfies the conditions of all mesh models works well for all index images.

The rendering process is accelerated by using graphics hardware. The rendering time becomes small enough to ignore even if the images are rendered at each iterative step. A large memory space for storing look-up tables is not required. The memory space for only one index image can be shared by all mesh models. Figure 3 shows an example of index images.
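The conversion between index values and index colors described in Section 2.1.1 can be sketched as bit packing. The following Python is a minimal illustration, not the authors' code: the function names are hypothetical, q = 8 bits per channel is an assumed default, and reserving the all-ones RGBA value (opaque white) as the "no patch" color is one assumed way to realize the exceptional color mentioned in the text.

```python
def index_to_rgba(index, q=8):
    """Pack a patch index into (R, G, B, A), q bits per channel.

    Bits 0..q-1 go to Red, q..2q-1 to Green, the next q bits to Blue,
    and the highest q bits to Alpha, as described in the text.
    """
    background = (1 << (4 * q)) - 1  # assumed reserved value (opaque white)
    if not 0 <= index < background:
        raise ValueError("index does not fit in 4*q bits (or hits background)")
    mask = (1 << q) - 1
    r = index & mask
    g = (index >> q) & mask
    b = (index >> (2 * q)) & mask
    a = (index >> (3 * q)) & mask
    return r, g, b, a


def rgba_to_index(rgba, q=8):
    """Recover the patch index from a pixel, or None for the background."""
    r, g, b, a = rgba
    value = r | (g << q) | (b << (2 * q)) | (a << (3 * q))
    background = (1 << (4 * q)) - 1
    return None if value == background else value


if __name__ == "__main__":
    color = index_to_rgba(0x01020304)
    print(color, rgba_to_index(color))
```

Reading a pixel of the rendered index image and passing it to `rgba_to_index` then yields the triangle patch hit by that line of sight, or `None` where nothing was rendered. Rendering must avoid blending, anti-aliasing, and lighting, since any of them would alter the packed bits.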

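Inequalities 4 and 5, together with Eq. 6 and Eq. 7, can be evaluated directly from the projected triangles. The sketch below is an assumption-laden illustration rather than the paper's procedure: `required_resolution` and `shared_resolution` are hypothetical names, each triangle is given as three already projected (u, v) tuples, and the width and height of a triangle are taken as the extents of its bounding box.

```python
import math


def required_resolution(projected_triangles):
    """Smallest integer (L_u, L_v) satisfying inequalities 4 and 5.

    projected_triangles: list of triangles, each a sequence of three
    (u, v) tuples on the index image plane.
    """
    us = [u for tri in projected_triangles for u, _ in tri]
    vs = [v for tri in projected_triangles for _, v in tri]
    w = max(us) - min(us)  # Eq. 6
    h = max(vs) - min(vs)  # Eq. 7
    dw_min = min(
        max(u for u, _ in tri) - min(u for u, _ in tri)
        for tri in projected_triangles
    )
    dh_min = min(
        max(v for _, v in tri) - min(v for _, v in tri)
        for tri in projected_triangles
    )
    if dw_min <= 0 or dh_min <= 0:
        raise ValueError("degenerate projected triangle")
    return math.ceil(2 * w / dw_min), math.ceil(2 * h / dh_min)


def shared_resolution(meshes):
    """One resolution that satisfies the conditions of every mesh."""
    sizes = [required_resolution(tris) for tris in meshes]
    return max(lu for lu, _ in sizes), max(lv for _, lv in sizes)


if __name__ == "__main__":
    mesh = [((0, 0), (1, 0), (0, 1)), ((1, 0), (3, 0), (1, 4))]
    print(required_resolution(mesh))
```

Taking the component-wise maximum over all meshes, as `shared_resolution` does, mirrors the remark that a single resolution satisfying the conditions of all mesh models can be used for every index image.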
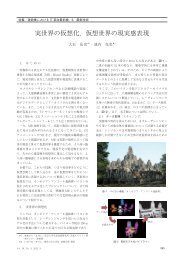

larger and sparser as the number of mesh models increases and has 6×6 non-zero patterns as shown in Fig. 5.

Figure 5. Characteristics of coefficient matrix (labels: n×6, (n-1)×6, 6×6 non-zero area $\sum_{i \neq j,k} A_{ijk}^{T} A_{ijk}$, positive definite symmetric matrix)

Since the computational complexity of direct solvers is too high, we applied an iterative solver to this problem. The computational complexity of Cholesky decomposition, which is the most popular direct solver for a symmetric positive definite matrix, is $O(n^3)$. We therefore employed the pre-conditioned conjugate gradient method (PCG). Though the complexity of PCG is $O(n^3)$, the same as Cholesky decomposition, the number of iterations can be drastically reduced by pre-conditioning. We employed incomplete Cholesky decomposition as the pre-conditioner. Since it is known that the coefficient matrix has 6×6 non-zero patterns, we implemented the incomplete Cholesky conjugate gradient method (ICCG) specialized for the matrix pattern.

Assume that the matrix $M$ is the ((n-1)×6) × ((n-1)×6) coefficient matrix shown in Fig. 5. $\vec{\beta}$ is the (n-1)×6 vector that is a part of the right side of Eq. 13. $\vec{\beta}$ does not include the transformations of the first mesh model. Equation 13 is re-written as follows.

$$ M \vec{\alpha} = \vec{\beta} \tag{14} $$

$$ \vec{\alpha} = (\vec{m}_1 \ldots \vec{m}_{n-1})^{T} \tag{15} $$

A matrix $C$ is assumed to be a regular (nonsingular) ((n-1)×6) × ((n-1)×6) matrix. Equation 14 is written as follows:

$$ C^{-1} M (C^{T})^{-1} C^{T} \vec{\alpha} = C^{-1} \vec{\beta} \tag{16} $$

To simplify the equation, we define the matrix $\tilde{M}$ and the vector $\vec{\beta}'$ as follows.

$$ \tilde{M} = C^{-1} M (C^{T})^{-1} \tag{17} $$

$$ \vec{\beta}' = C^{-1} \vec{\beta} \tag{18} $$

Equation 16 is redefined by using the variables above.

$$ \tilde{M} \vec{\alpha}' = \vec{\beta}' \tag{19} $$

$$ C^{T} \vec{\alpha} = \vec{\alpha}' \tag{20} $$

If the coefficient matrix $\tilde{M}$ is near the identity matrix, solving Eq. 19 is drastically accelerated by the conjugate gradient method. By Eq. 17, this means that $C C^{T}$ has to be nearly equal to the original coefficient matrix $M$.

$$ M \cong C C^{T} \tag{21} $$

But the computation cost to decompose the matrix completely is very high. The matrix $M$ is therefore incompletely decomposed by the Cholesky decomposition. In this process, only the non-zero areas are computed; every other element is filled with zero.

$$ M = U D U^{T} \tag{22} $$

Since the matrix $M$ is sparse, the decomposition process is performed very quickly. Then, matrix $C$ is given as follows:

$$ C = U D^{1/2} \tag{23} $$

Once matrix $C$ has been computed, rigid transformations are calculated from Eq. 19 and Eq. 20 by the conventional conjugate gradient method.

3. Experimental results

In this section, the effectiveness of our method is demonstrated by some experimental results. Two data sets are used for the experiments. The target objects are the face of Deva in Cambodia (Fig. 6(a)) and the Nara Great Buddha statue in Japan (Fig. 6(b)). The face of Deva was measured by VIVID900 [17]. The resolution of VIVID900 was fixed to 640×480, and the view angle depended on the mounted lens. We used a wide lens for scanning the face of Deva. The Great Buddha statue was measured by Cyrax2400 [18]. The resolution and view angle of the sensor were flexible: users could change them arbitrarily. In the scanning of the Nara Great Buddha, we adjusted the parameters according to the measurement environments. Generally, 800×800 was used as the measurement resolution. The details of these data sets are shown in Table 1 and Table 2 respectively.

Table 1. Data set 1: The face of Deva

| Item | Value |
| --- | --- |
| Sensor | VIVID900 |
| Images | 45 |
| Vertices | Max: 76612, Min: 38190, Ave: 67674 |
| Triangles | Max: 150786, Min: 71526, Ave: 130437 |

Table 2. Data set 2: The Nara Great Buddha

| Item | Value |
| --- | --- |
| Sensor | Cyrax2500 |
| Images | 114 |
| Vertices | Max: 155434, Min: 11231, Ave: 81107 |
| Triangles | Max: 300920, Min: 18927, Ave: 148281 |

Vertices that were measured outside the objects had been removed previously.
The obtained point clouds had been converted into triangle mesh models. Since the original data sets were too large to deal with using one PC, the sizes of the data were reduced to 1/4.

Our method is evaluated according to the following three criteria:

1. Number of corresponding points with respect to the resolution of index images
2. Computation time of matrix operations with respect to the number of mesh models
3. Computation time of alignment with respect to the number of vertices

The PC used for the experiments had an Athlon MP 2400+ processor, 2 GB of memory, and a GeForce4 Ti 4600 graphics card.

Figure 6. Target objects (a: the face of Deva, b: the Nara Great Buddha)

3.1. Number of corresponding points with the resolution of the index image

The number of corresponding points is evaluated with respect to the resolution of the index image. As described above, if enough resolution cannot be assigned to the index image, not all triangle patches are rendered, so not all of the corresponding points can be acquired. Here, the relation between the resolution of index images and the number of corresponding points is verified.

Corresponding points were searched for several pairs of mesh models by gradually changing the resolution of index images. From each data set, we selected the mesh models with the minimum and maximum numbers of triangle patches as target meshes. Base meshes were arbitrarily selected. Experimental results are shown in Fig. 7. The vertical axis represents the ratio of the number of corresponding points acquired by our method, $v'_c$, to the ground truth $v_c$. The resolutions of index images are represented on the horizontal axis as the square root of the total number of pixels.

Figure 7. Number of corresponding points with resolution of index images (vertical axis: number of correspondences $v'_c / v_c$; horizontal axis: resolution of index images in pixel$^{1/2}$; series: minimum and maximum of Model 1 and Model 2)

As shown in Fig. 7, when the resolution of the index image becomes larger than a certain size (800×800), almost all of the corresponding points are obtained. Furthermore, the resolution required for obtaining enough corresponding points becomes larger as the number of triangle patches of the target mesh increases. Strictly speaking, the required index image resolution is governed by the measurement resolution rather than by the number of triangle patches. In this case, however, the number of triangle patches behaves similarly to the measurement resolution because the sampled points are distributed densely and uniformly in both the vertical and horizontal directions.

It is not required to estimate the image resolution for each mesh model. Even if a large enough resolution cannot be assigned to the index image, for example because of limited graphics memory, a number of corresponding points are still acquired according to the image resolution.

Figure 8. Time to solve linear equations

3.2. Time to solve the linear equations

The computation time to solve the linear equations is evaluated. As described above, the computation time to solve the linear system is greatly influenced by the number of mesh models. Thus, the relation between the computation time and the number of mesh models is evaluated here. Data set 2 is used for this experiment.
The computation time of the matrix operations alone is measured while changing the number of mesh models.

The experimental results are shown in Fig. 8. The horizontal axis represents the number of mesh models, and the vertical axis represents the computation time. The results with the usual Cholesky decomposition are also shown in this figure for comparison with our method. The threshold value of the ICCG was set to $1.0 \times 10^{-6}$.

When the number of mesh models is lower than 60, Cholesky decomposition is faster than our method. On the other hand, the computation time of our method increases at a slow rate and becomes smaller than that of Cholesky decomposition when the number of mesh models is higher than 70. Moreover, the difference between Cholesky decomposition and our method becomes larger as the number of mesh models increases. That is, ICCG is effective for aligning a large number of mesh models simultaneously.

3.3. Computation time with number of vertices

The computation time is evaluated with respect to the number of vertices included in the mesh models. This experiment shows empirically that the computational complexity of aligning a pair of mesh models is $O(N)$, where N is the number of vertices. It can also be said that the complexity of searching corresponding points is $O(N)$, because the computation time of the other tasks can be assumed to be small enough to ignore.

Each mesh model was aligned to itself so that the numbers of vertices of the base model and the target model were equal to each other. Since the number of corresponding points also affects the computation time, none of the mesh models were moved. That is, the amount of movement is essentially zero, and the number of corresponding points is nearly equal to the number of vertices. Index images were rendered at each iterative step. The image resolution was fixed to 800×800. The computation time was evaluated according to the average time taken for 20 iterations.

Experimental results are shown in Fig. 9. The horizontal axis represents the number of vertices, and the vertical axis represents the computation time. It is clear that the computation time increases linearly with the number of vertices.
Moreover, there are no differences between two data sets though these data were taken by different sensors. That is, the efficiency of our method does not depend on the sensors used for measurements.

Figure 9. Computation time with number of vertices

3.4. Alignment results

The alignment results of these data sets are shown in Fig. 10 and Fig. 11. Previously, we had aligned all mesh models one by one. Then we applied the simultaneous alignment method to these models. Figure 10 shows the alignment results of data set 1. The total computation time was 1738 seconds after 20 iterations. Figure 11 shows the results of data set 2. The number of iterations was 20, the same as for data set 1. Total computation time was 7832 seconds. The figures show that all mesh models were correctly aligned.

Figure 10. Alignment results (the face of Deva)

Figure 11. Alignment results (Nara Great Buddha)
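For readers who want to experiment with the solver compared in Section 3.2, the preconditioned solve of Eq. 14 through Eq. 23 can be sketched in pure Python. This is a generic illustration under several assumptions, not the authors' 6×6-block-specialized ICCG: it uses dense lists of lists, the zero-fill incomplete Cholesky factor in the form $L L^{T} \approx M$ (so $L$ plays the role of $C = U D^{1/2}$), and a relative residual norm as the stopping test, which is only one possible reading of the threshold $1.0 \times 10^{-6}$. All function names are hypothetical.

```python
import math


def incomplete_cholesky(M):
    """Zero-fill factor: lower-triangular L with L L^T ~= M.

    Entries are computed only where M is non-zero; the rest stay zero.
    """
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            if M[i][j] == 0.0:
                continue  # keep the sparsity pattern of M
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("incomplete factorization broke down")
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return L


def apply_preconditioner(L, r):
    """Solve (L L^T) z = r by forward and backward substitution."""
    n = len(r)
    y = [0.0] * n
    for i in range(n):
        y[i] = (r[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    z = [0.0] * n
    for i in reversed(range(n)):
        z[i] = (y[i] - sum(L[k][i] * z[k] for k in range(i + 1, n))) / L[i][i]
    return z


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def iccg(M, b, tol=1e-6, max_iter=1000):
    """Preconditioned conjugate gradient for M x = b (M symmetric positive definite)."""
    L = incomplete_cholesky(M)
    x = [0.0] * len(b)
    r = list(b)  # residual for the initial guess x = 0
    z = apply_preconditioner(L, r)
    p = list(z)
    rz = dot(r, z)
    b_norm = math.sqrt(dot(b, b)) or 1.0
    for it in range(max_iter):
        if math.sqrt(dot(r, r)) <= tol * b_norm:
            return x, it
        Mp = [dot(row, p) for row in M]
        alpha = rz / dot(p, Mp)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * mi for ri, mi in zip(r, Mp)]
        z = apply_preconditioner(L, r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter


if __name__ == "__main__":
    M = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
    b = [6.0, 10.0, 8.0]  # equals M applied to (1, 2, 3)
    print(iccg(M, b))
```

For a tridiagonal matrix such as the one in the usage example, the zero-fill factorization coincides with the complete Cholesky factorization, so the preconditioned system is essentially the identity and the loop converges almost immediately. A production version would store only the non-zero blocks instead of dense rows.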

4. Conclusion

In this paper, we proposed a fast, simultaneous alignment method for a large number of range images. In order to accelerate the task of searching corresponding points, we utilized index images that are rapidly rendered using graphics hardware and are used as look-up tables. Instead of sensor parameters, only an approximate resolution of index images is required for this search. In order to accelerate the computation of rigid transformations, we employed a linearized error function. Since the computation time to solve the linear system becomes large as the number of range images increases, we applied the incomplete Cholesky conjugate gradient (ICCG) method. Experimental results showed the effectiveness of our method. Future work includes improving the accuracy of the alignment results.

Acknowledgment

This work is supported by the Leading Project (Digital archive of cultural properties) of the Ministry of Education, Culture, Sports, Science and Technology of the Japanese Government. The author would like to thank the staff of the Todaiji Temple in Nara, Japan. The Bayon temple in Cambodia was digitized with the cooperation of JSA (Japanese Government Team for Safeguarding Angkor).

References

[1] K. Ikeuchi and Y. Sato, Modeling from Reality, Kluwer Academic Press, 2001.
[2] K. Ikeuchi, A. Nakazawa, K. Hasegawa and T. Oishi, “The Great Buddha Project: Modeling Cultural Heritage for VR Systems through Observation,” IEEE ISMAR’03, Tokyo, Japan, Nov. 2003.
[3] M. Levoy et al., “The Digital Michelangelo Project,” Proc. SIGGRAPH 2000, pp. 131-144, 2000.
[4] P. J. Besl and N. D. McKay, “A method for registration of 3-D shapes,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 14(2):239-256, 1992.
[5] Y. Chen and G. Medioni, “Object modeling by registration of multiple range images,” Image and Vision Computing, 10(3):145-155, 1992.
[6] G. Blais and M. Levine, “Registering Multiview Range Data to Create 3D Computer Objects,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 17, No. 8, 1995.
[7] S. Rusinkiewicz, O. Hall-Holt and M. Levoy, “Real-Time 3D Model Acquisition,” ACM Transactions on Graphics, 21(3):438-446, July 2002.
[8] T. Masuda, K. Sakaue and N. Yokoya, “Registration and Integration of Multiple Range Images for 3-D Model Construction,” Proc. Computer Society Conference on Computer Vision and Pattern Recognition, 1996.
[9] P. J. Neugebauer, “Reconstruction of Real-World Objects via Simultaneous Registration and Robust Combination of Multiple Range Images,” International Journal of Shape Modeling, 3(1&2):71-90, 1997.
[10] R. Bergevin, M. Soucy, H. Gagnon, and D. Laurendeau, “Towards a general multi-view registration technique,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 18(5):540-547, May 1996.
[11] R. Benjemaa and F. Schmitt, “Fast global registration of 3d sampled surfaces using a multi-z-buffer technique,” Proc. Int. Conf. on Recent Advances in 3-D Digital Imaging and Modeling, pp. 113-120, May 1997.
[12] Z. Zhang, “Iterative point matching for registration of free-form curves and surfaces,” International Journal of Computer Vision, 13(2):119-152, 1994.
[13] K. Nishino and K. Ikeuchi, “Robust Simultaneous Registration of Multiple Range Images,” Proc. Fifth Asian Conference on Computer Vision, pp. 454-461, Jan. 2002.
[14] David A. Simon, Martial Hebert, and Takeo Kanade, “Realtime 3-D pose estimation using a high-speed range sensor,” Proc. IEEE Intl. Conf. Robotics and Automation, pp. 2235-2241, San Diego, California, May 8-13, 1994.
[15] M. Greenspan and G. Godin, “A Nearest Neighbor Method for Efficient ICP,” Proc. International Conference on 3D Digital Imaging and Modeling (3DIM), pp. 161-168, 2001.
[16] R. Sagawa, T. Masuda and K. Ikeuchi, “Effective Nearest Neighbor Search for Aligning and Merging Range Images,” Proc. the Fourth International Conference on 3-D Digital Imaging and Modeling (3DIM-03), pp. 79-86, 2003.
[17] http://www.minoltausa.com/vivid/
[18] http://www.cyra.com