Hybrid MPI and OpenMP programming tutorial - Prace Training Portal

Hybrid MPI and OpenMP programming tutorial - Prace Training Portal Hybrid MPI and OpenMP programming tutorial - Prace Training Portal

likwid-pin• Inspired and based on ptoverride (Michael Meier, RRZE) and taskset• Pins process and threads to specific cores without touching code• Directly supports pthreads, gcc OpenMP, Intel OpenMP• Allows user to specify skip mask (i.e., supports many different compiler/MPIcombinations)• Can also be used as replacement for taskset• Uses logical (contiguous) core numbering when running inside a restricted set ofcores• Supports logical core numbering inside node, socket, coreExample: STREAM benchmark on 12-core Intel Westmere:Anarchy vs. thread pinningno pinning• Usage examples:– env OMP_NUM_THREADS=6 likwid-pin -t intel -c 0,2,4-6 ./myApp parameters– env OMP_NUM_THREADS=6 likwid-pin –c S0:0-2@S1:0-2 ./myApp– env OMP_NUM_THREADS=2 mpirun –npernode 2 \likwid-pin -s 0x3 -c 0,1 ./myApp parametersPinning (physical cores first)Hybrid Parallel ProgrammingSlide77/154Rabenseifner, Hager, JostHybrid Parallel ProgrammingSlide78/154Rabenseifner, Hager, JostTopology (“mapping”) choices with MPI+OpenMP:More examples using Intel MPI+compiler & home-grown mpirunMPI/OpenMP hybrid “how-to”: Take-home messagesOne MPI process pernodeenv OMP_NUM_THREADS=8 mpirun -pernode \likwid-pin –t intel -c 0-7 ./a.outOne MPI process persocketOpenMP threadspinned “round robin”across cores innodeTwo MPI processesper socketHybrid Parallel ProgrammingSlide79/154env OMP_NUM_THREADS=4 mpirun -npernode 2 \-pin "0,1,2,3_4,5,6,7" ./a.outenv OMP_NUM_THREADS=4 mpirun -npernode 2 \-pin "0,1,4,5_2,3,6,7" \likwid-pin –t intel -c 0,2,1,3 ./a.outRabenseifner, env OMP_NUM_THREADS=2 Hager, Jostmpirun -npernode 4 \-pin "0,1_2,3_4,5_6,7" \likwid-pin –t intel -c 0,1 ./a.out• Do not use hybrid if the pure MPI code scales ok• Be aware of intranode MPI behavior• Always observe the topology dependence of– Intranode MPI– OpenMP overheads• Enforce proper thread/process to core binding, using appropriatetools (whatever you use, but use SOMETHING)• Multi-LD OpenMP processes on ccNUMA nodes require correctpage placement• Finally: Always compare the best pure MPI code with the bestOpenMP code!Hybrid Parallel ProgrammingSlide80/154Rabenseifner, Hager, Jost

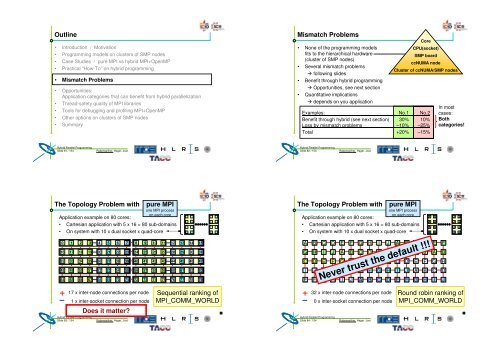

Outline• Introduction / Motivation• Programming models on clusters of SMP nodes• Case Studies / pure MPI vs hybrid MPI+OpenMP• Practical “How-To” on hybrid programming• Mismatch Problems• Opportunities:Application categories that can benefit from hybrid parallelization• Thread-safety quality of MPI libraries• Tools for debugging and profiling MPI+OpenMP• Other options on clusters of SMP nodes• SummaryMismatch Problems• None of the programming modelsfits to the hierarchical hardware(cluster of SMP nodes)• Several mismatch problems following slides• Benefit through hybrid programming Opportunities, see next section• Quantitative implications depends on you applicationCoreCPU(socket)SMP boardccNUMA nodeCluster of ccNUMA/SMP nodesExamples: No.1 No.2Benefit through hybrid (see next section) 30% 10%Loss by mismatch problems –10% –25%Total +20% –15%In mostcases:Bothcategories!Hybrid Parallel ProgrammingSlide81/154Rabenseifner, Hager, JostHybrid Parallel ProgrammingSlide82/154Rabenseifner, Hager, JostThe Topology Problem withpure MPIone MPI processon each coreApplication example on 80 cores:• Cartesian application with 5 x 16 = 80 sub-domains• On system with 10 x dual socket x quad-core0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 1516 17 18 19 20 21 22 23 24 25 26 27 28 29 30 3132 33 34 35 36 37 38 39 40 41 42 43 44 45 46 4748 49 50 51 52 53 54 55 56 57 58 59 60 61 62 6364 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79The Topology Problem withpure MPIone MPI processon each coreApplication example on 80 cores:• Cartesian application with 5 x 16 = 80 sub-domains• On system with 10 x dual socket x quad-coreA AA AA AA AA0 B1 C2 D3 E4 F5 G6 H7 8IJ9 10 A 11 B 12 C 13 D 14 E 15 F16 G 17 H 18 I 19 J 20 A 21 B 22 C 23 D 24 E 25 F 26 G 27 H 28 I 29 J 30 A 31 B32 C 33 D 34 E 35 F 36 G 37 H 38 I 39 J 40 A 41 B 42 C 43 D 44 E 45 F 46 G 47 H48 I 49 J 50 A 51 B 52 C 53 D 54 E 55 F 56 G 57 H 58 I 59 J 60 A 61 B 62 C 63 D64 E 65 F 66 G 67 H 68 I 69 J 70 A 71 B 72 C 73 D 74 E 75 F 76 G 77 H 78 I 79 JNever trust the default !!!JJJJJJJJ17 x inter-node connections per node1 x inter-socket connection per nodeDoes it matter?Hybrid Parallel ProgrammingSlide83/154Rabenseifner, Hager, JostSequential ranking ofMPI_COMM_WORLD32 x inter-node connections per node0 x inter-socket connection per nodeHybrid Parallel ProgrammingSlide84/154Rabenseifner, Hager, JostRound robin ranking ofMPI_COMM_WORLD

- Page 1 and 2: Hybrid MPI & OpenMPParallel Program

- Page 3: Pure MPIHybrid Masteronlypure MPIon

- Page 6: — skipped —SUN: Running hybrid

- Page 11 and 12: Outline• Introduction / Motivatio

- Page 13 and 14: — skipped —Running the codeExam

- Page 15 and 16: Avoiding locality problems• How c

- Page 17 and 18: CCCCCCOpenMP OverheadThread synchro

- Page 19: Likwid Tool Suite• Command line t

- Page 23 and 24: Numerical Optimization inside of an

- Page 25 and 26: Experiment: Matrix-vector-multiply

- Page 27 and 28: Comparison:MPI based parallelizatio

- Page 29 and 30: Memory consumptionCase study: MPI+O

- Page 31 and 32: MPI rules with OpenMP /Automatic SM

- Page 33 and 34: — skipped —Thread Correctness -

- Page 35 and 36: — skipped —Top-down - several l

- Page 37 and 38: — skipped —Remarks on MPI and P

- Page 39 and 40: Summary• This tutorial tried to-

- Page 41 and 42: References (with direct relation to

- Page 43 and 44: Further references• Matthias Hess

Outline• Introduction / Motivation• Programming models on clusters of SMP nodes• Case Studies / pure <strong>MPI</strong> vs hybrid <strong>MPI</strong>+<strong>OpenMP</strong>• Practical “How-To” on hybrid <strong>programming</strong>• Mismatch Problems• Opportunities:Application categories that can benefit from hybrid parallelization• Thread-safety quality of <strong>MPI</strong> libraries• Tools for debugging <strong>and</strong> profiling <strong>MPI</strong>+<strong>OpenMP</strong>• Other options on clusters of SMP nodes• SummaryMismatch Problems• None of the <strong>programming</strong> modelsfits to the hierarchical hardware(cluster of SMP nodes)• Several mismatch problems following slides• Benefit through hybrid <strong>programming</strong> Opportunities, see next section• Quantitative implications depends on you applicationCoreCPU(socket)SMP boardccNUMA nodeCluster of ccNUMA/SMP nodesExamples: No.1 No.2Benefit through hybrid (see next section) 30% 10%Loss by mismatch problems –10% –25%Total +20% –15%In mostcases:Bothcategories!<strong>Hybrid</strong> Parallel ProgrammingSlide81/154Rabenseifner, Hager, Jost<strong>Hybrid</strong> Parallel ProgrammingSlide82/154Rabenseifner, Hager, JostThe Topology Problem withpure <strong>MPI</strong>one <strong>MPI</strong> processon each coreApplication example on 80 cores:• Cartesian application with 5 x 16 = 80 sub-domains• On system with 10 x dual socket x quad-core0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 1516 17 18 19 20 21 22 23 24 25 26 27 28 29 30 3132 33 34 35 36 37 38 39 40 41 42 43 44 45 46 4748 49 50 51 52 53 54 55 56 57 58 59 60 61 62 6364 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79The Topology Problem withpure <strong>MPI</strong>one <strong>MPI</strong> processon each coreApplication example on 80 cores:• Cartesian application with 5 x 16 = 80 sub-domains• On system with 10 x dual socket x quad-coreA AA AA AA AA0 B1 C2 D3 E4 F5 G6 H7 8IJ9 10 A 11 B 12 C 13 D 14 E 15 F16 G 17 H 18 I 19 J 20 A 21 B 22 C 23 D 24 E 25 F 26 G 27 H 28 I 29 J 30 A 31 B32 C 33 D 34 E 35 F 36 G 37 H 38 I 39 J 40 A 41 B 42 C 43 D 44 E 45 F 46 G 47 H48 I 49 J 50 A 51 B 52 C 53 D 54 E 55 F 56 G 57 H 58 I 59 J 60 A 61 B 62 C 63 D64 E 65 F 66 G 67 H 68 I 69 J 70 A 71 B 72 C 73 D 74 E 75 F 76 G 77 H 78 I 79 JNever trust the default !!!JJJJJJJJ17 x inter-node connections per node1 x inter-socket connection per nodeDoes it matter?<strong>Hybrid</strong> Parallel ProgrammingSlide83/154Rabenseifner, Hager, JostSequential ranking of<strong>MPI</strong>_COMM_WORLD32 x inter-node connections per node0 x inter-socket connection per node<strong>Hybrid</strong> Parallel ProgrammingSlide84/154Rabenseifner, Hager, JostRound robin ranking of<strong>MPI</strong>_COMM_WORLD