2012 PROCEEDINGS - Public Relations Society of America


involves researchers reviewing the content of their respective measurement items and advancing an argument that they seem to identify what is claimed (Reinard, 2001). McDavid and Hawthorn (2006) offered that “this type of measurement validity is perhaps the most commonly applied one in program evaluations and performance measurement” (p. 139). Reviewers, usually the evaluator or other stakeholders, make judgments about the questions posed and the extent to which they were well written.

Reinard (2001) saw value in content validity because it involves more experts than face validity. Babbie (1995) stated that this validity uses experts and refers “to the degree to which a measure covers the range of meanings included within the concept” (p. 128). McDavid and Hawthorn (2006) offered that “the issue is how well a particular measure of a given construct matches the full range of content of the construct” (p. 140). There is no external criterion for the validity of subjective questions (Fowler, 2009), and usually one must ask experts in the respective area to review the work and serve as impartial judges of this subjective content (Stacks, 2011). Respondents must understand the question, they must know the answer to enable them to answer the question, they must be able to recall the answer, and they must desire to tell the truth (Fowler, 2009).

Construct validity is based on the “logical relationships among variables” (Babbie, 1995, p. 127). “Developing a measure often involves assessing a pool of items that are collectively intended to be a measure of a construct” (McDavid & Hawthorn, 2006, p. 140). Reviewing how well those respective subparts measured the construct is indeed construct validity (Stacks, 2011).

Criterion-related validity is the degree to which something is measured against some external criterion (Babbie, 1995). This, of course, is used in instances where research is attempting to make predictions about behavior or is trying to relate the research to other measures (Stacks, 2011).

External. External validity is about “generalizing the causal results of a program evaluation to other settings, other people, other program variations and other times” (McDavid & Hawthorn, 2006, p. 112). Some types of research aim to make inferences, calling for external validity.

Methodology<br />

Data Collection Procedures<br />

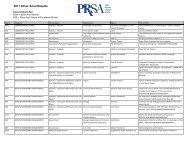

Table 1 illustrates the data collection procedures of this study. Ten focus groups and four pilot tests were conducted. In the initial stages of survey construction, three focus groups were conducted with public relations practitioners. These professionals gave input by answering questions about what should be measured to gauge client satisfaction and how those things should be asked. To ensure well-run focus groups, they were kept small, as Brown (2009) suggested. Because organization is imperative, predetermined questions were generated for these groups, and the professionals gave input about those questions. After three separate focus groups, a first draft of the survey questions was constructed based on common themes that surfaced during the conversations. These themes were found by reviewing the tapes of the focus groups and by reviewing memos and focus group documents.

General questions, in support of the themes found, were then constructed. These questions were then presented to five more focus groups. These groups aided in the evolution of the questions by critically reviewing them and giving feedback. Again, these groups were recorded. The researcher observed and interacted with participants in an effort to see how the questions were interpreted and whether they were perceived to measure worthwhile aspects of client
