Chi-Square Test for Goodness of Fit - Department of Physics


Scientists often use the chi-square (χ²) test to determine the "goodness of fit" between theoretical and experimental data. In this test, we compare observed values with theoretical or expected values. Observed values are those that the researcher obtains empirically through direct observation. The theoretical or expected values are developed on the basis of an established theory or a working hypothesis. For example, if we flip a coin 200 times, we might expect to tally 100 heads and 100 tails. In checking our hypothesis, we might find only 92 heads and 108 tails. Should we reject the hypothesis that this coin is fair? Or should we attribute the difference between expected and observed frequencies to random fluctuation?

Consider a second example: suppose that we have a six-sided die that we believe to be unbiased. We roll this die 300 times and tally the number of times each side appears:

| Face | Frequency |
|---|---|
| 1 | 42 |
| 2 | 55 |
| 3 | 38 |
| 4 | 57 |
| 5 | 64 |
| 6 | 44 |

Ideally, we might expect every side to appear 50 times. What should we conclude from these results? Is the die biased?

Null Hypothesis<br />

The use of the chi-squared distribution in hypothesis testing follows this process: (1) a null hypothesis H₀ is stated, (2) a test statistic is calculated, (3) the observed value of the test statistic is compared to a critical value, and (4) a decision is made whether or not to reject the null hypothesis. An attractive feature of the chi-squared goodness-of-fit test is that it can be applied to any univariate distribution for which you can calculate the cumulative distribution function. The null hypothesis is a statement that is assumed true. It is rejected only when the data indicate, with a degree of statistical confidence exceeding a predetermined level (usually 95%), that the null hypothesis is false. If experimental observations indicate that the null hypothesis should be rejected, it means either that the hypothesis is indeed false, or that the hypothesis is really true and the measured data gave an improbable result that wrongly suggests it is false. This possibility of a false rejection is an unfortunate property of statistics.

Calculating <strong>Chi</strong>-squared<br />

For the chi-square goodness-of-fit computation, the data are divided into k bins and the test statistic is defined as

$$\chi^2 = \sum_{i=1}^{k} \frac{(O_i - E_i)^2}{E_i} \qquad (7)$$

where $O_i$ is the observed frequency for bin i and $E_i$ is the expected frequency for bin i. Chi-squared is never negative and may range from 0 to ∞.

The chi-squared goodness-of-fit test is applied to binned data (i.e., data put into classes) and is sensitive to the choice of bins. Binning is not actually a restriction, since for non-binned data a histogram or frequency table can be made before carrying out the chi-square test. However, the value of the chi-squared test statistic depends on how the data are binned. There is no optimal choice for the bin width (since the optimal bin width depends on the distribution). Most reasonable choices should produce similar, but not identical, results. One method that may work is to choose bins that have a width of s/3, with the lower and upper bins at the sample mean ± 6s, where s is the sample standard deviation. Another disadvantage of the chi-square test is that it requires a sufficient sample size for the chi-square approximation to be valid: the expected frequency in each bin should be at least 5. The test is not valid for small samples, and if some of the expected counts are less than five, you may need to combine some bins in the tails.
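The rule about combining tail bins can be sketched in a few lines of Python. This is only an illustration: the function name `merge_tail_bins`, the threshold argument, and the counts are hypothetical, and the sketch only merges bins at the two ends, not sparse bins in the middle of the distribution.

```python
def merge_tail_bins(observed, expected, min_expected=5.0):
    """Combine bins at either tail until each tail bin's expected count is >= min_expected."""
    obs = list(observed)
    exp = list(expected)
    # Merge from the lower tail inward.
    while len(exp) > 1 and exp[0] < min_expected:
        exp[1] += exp[0]
        obs[1] += obs[0]
        del exp[0], obs[0]
    # Merge from the upper tail inward.
    while len(exp) > 1 and exp[-1] < min_expected:
        exp[-2] += exp[-1]
        obs[-2] += obs[-1]
        del exp[-1], obs[-1]
    return obs, exp


# Hypothetical counts, chosen only to illustrate the merging.
observed = [1, 3, 10, 20, 9, 4, 1]
expected = [2.0, 4.0, 9.0, 18.0, 9.0, 4.0, 2.0]
merged_obs, merged_exp = merge_tail_bins(observed, expected)
print(merged_obs)
print(merged_exp)
```

Merging changes the number of bins k, so the number of degrees of freedom used later must be based on the merged bins.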

Let’s apply this now to the above examples:<br />

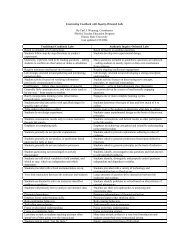

Table I: The Coin Toss Example

| Face | O | E | (O − E)² / E |
|---|---|---|---|
| Heads | 92 | 100 | 0.64 |
| Tails | 108 | 100 | 0.64 |
| Totals | 200 | 200 | χ² = 1.28 |

Table II: The 6-sided Die Example

| Face | O | E | (O − E)² / E |
|---|---|---|---|
| 1 | 42 | 50 | 1.28 |
| 2 | 55 | 50 | 0.50 |
| 3 | 38 | 50 | 2.88 |
| 4 | 57 | 50 | 0.98 |
| 5 | 64 | 50 | 3.92 |
| 6 | 44 | 50 | 0.72 |
| Totals | 300 | 300 | χ² = 10.28 |
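The two tables can be reproduced with a short Python sketch of equation 7. The function name `chi_square` is an illustrative choice, not something defined in these notes.

```python
def chi_square(observed, expected):
    """Sum of (O - E)^2 / E over all bins, as in equation 7."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


coin = chi_square([92, 108], [100, 100])
die = chi_square([42, 55, 38, 57, 64, 44], [50] * 6)
print(round(coin, 2), round(die, 2))
```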

Degrees of Freedom

We have seen how to calculate a value for chi-squared, but so far it doesn't have much meaning. The chi-square distribution is tabulated and available in most texts on statistics (and reprinted here in Table III). To use the table, one must know how many degrees of freedom df are associated with the number of categories in the sample data. This is because there is a family of chi-square distributions, each a function of the number of degrees of freedom.

The number of degrees of freedom is typically equal to k − 1. For example, in the coin example, the expected frequencies for the two categories (heads, tails) are not independent. To obtain the expected frequency of tails (100), we need only subtract the expected frequency of heads (100) from the total frequency (200). Only one of the two frequencies is free to vary, giving one degree of freedom. Similarly, for the die example, there are six possible categories of outcomes: the occurrence of each of the faces. Under the assumption that the die is fair, we expect a frequency of 50 for each of the faces, but these again are not independent. Once the frequency count is known for five of the bins, the frequency of the sixth bin is determined, since the total count is 300. Thus, only the frequencies in five of the six bins are free to vary, leading to five degrees of freedom for this example.

Levels of Confidence

A chi-square table, like Table III, lists critical values of the chi-squared distribution in terms of df and the significance level α; the corresponding level of confidence is 1 − α. The chi-squared goodness-of-fit method is not without risk: the data may lead to the rejection of the null hypothesis when in fact it is true. This is why we speak of confidence. In the coin flip example, the null hypothesis is that the frequency of "heads" is equal to the frequency of "tails." In the more general case, we do not require equal probability for each of the categories. There are many cases where one category is expected to contain the majority of the tally marks (one such example would be a survey enquiring about the public's choice in an upcoming presidential election that includes all candidates on the ballot).

In Table III, the critical values of χ² are given for up to 20 degrees of freedom. Three different percentile points in each distribution are given, for α = 0.10, 0.05, and 0.025. The standard practice in the world of statistics is to use a 95% level of confidence in hypothesis decision making. Thus, if the calculated value of chi-squared corresponds to a value of p that is less than or equal to 0.05, then the null hypothesis should be rejected. For example, you can toss a coin and get 14 heads out of 20 flips and find p = 0.0577 (the exact probability of 14 or more heads with a fair coin). This would indicate that such an observation can plausibly happen by chance, so the hypothesis that the coin is fair is not rejected. Such a finding would be described by statisticians as "not statistically significant at the 5% level." If one found 15 heads out of 20 tosses, then p would be less than 0.05 (about 0.02) and the coin would be considered biased. This would be described as "statistically significant at the 5% level." The significance level of the test is not determined by the p value. It is predetermined by the experimenter. You can choose a 90% level, a 95% level, a 99% level, etc.
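The p values quoted for 14 and 15 heads out of 20 tosses can be checked with an exact one-sided binomial sum. The sketch below uses only the Python standard library; the function name `upper_tail` is illustrative.

```python
from math import comb


def upper_tail(n, k, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p): the chance of k or more heads in n tosses."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


p14 = upper_tail(20, 14)  # 14 or more heads in 20 tosses of a fair coin
p15 = upper_tail(20, 15)  # 15 or more heads
print(round(p14, 4), round(p15, 4))
```

Note that this is a one-tailed probability computed directly from the binomial distribution, not from the chi-square approximation.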

For the coin flip example, there is one degree of freedom. The χ² for the experiment given in Table I is only 1.28. This corresponds to p = 0.26, which is considerably greater than 0.05. Therefore, the null hypothesis that the coin is fair cannot be rejected. The smaller the p-value, the stronger the evidence that the null hypothesis should be rejected.

In the case of the data in Table II for the die, there are five degrees of freedom and the chi-square value is 10.28, which corresponds to a 93% confidence level (p ≈ 0.07). Since this falls short of the 95% level, the null hypothesis that the die is fair cannot be rejected.
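For readers without a table at hand, the p values for the coin and die examples can be estimated with the following sketch. It assumes integer degrees of freedom and uses the closed-form upper-tail probabilities for df = 1 and df = 2, stepping up two degrees of freedom at a time; the function name `chi2_sf` is an illustrative choice.

```python
from math import erfc, exp, gamma, sqrt


def chi2_sf(x, df):
    """Upper-tail probability P(chi^2 >= x) for a positive integer df."""
    half = x / 2.0
    if df % 2 == 0:
        q, k = exp(-half), 2          # exact result for df = 2
    else:
        q, k = erfc(sqrt(half)), 1    # exact result for df = 1
    while k < df:
        # Step from k to k + 2 degrees of freedom.
        q += half ** (k / 2.0) * exp(-half) / gamma(k / 2.0 + 1.0)
        k += 2
    return q


p_coin = chi2_sf(1.28, 1)   # coin example, one degree of freedom
p_die = chi2_sf(10.28, 5)   # die example, five degrees of freedom
print(round(p_coin, 2), round(p_die, 2))
```

A statistics library would normally be used for this; the hand-written version is shown only to keep the example self-contained.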

Why Is p = 0.05 or a 95% Level of Confidence Used?

Long ago, before the widespread availability of computers, calculating p values was somewhat difficult, so the values were tabulated and people interpolated between the tabulated entries. The tables that were most commonly used were published by Ronald A. Fisher beginning in the 1930s. These tables were subsequently reproduced in statistics books everywhere. In his books, Fisher argued for the level p = 0.05 as the measure of whether something significant is going on, stating,

The value for p = 0.05 or 1 in 20 is 1.96 or nearly 2; it is convenient to take this point as a limit in judging whether a deviation ought to be considered significant or not. Deviations exceeding twice the standard deviation are thus formally regarded as significant. Using this criterion, we should be led to follow up a false indication only once in 22 trials, even if the statistics were the only guide available.

Fisher continued his discussion in another part of his book,

If one in twenty does not seem high enough odds, we may, if we prefer it, draw the line at one in fifty (the 2 % point), or one in a hundred (the 1 % point). Personally, the writer prefers to set a low standard of significance at the 5 percent point, and ignore entirely all results which fail to reach this level. A scientific fact should be regarded as experimentally established only if a properly designed experiment rarely fails to give this level of significance.

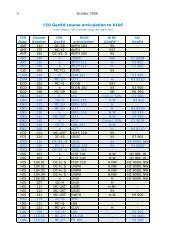

Table III. The Chi-Square Distribution

| df | α = 0.10 | α = 0.05 | α = 0.025 | df | α = 0.10 | α = 0.05 | α = 0.025 |
|---|---|---|---|---|---|---|---|
| 1 | 2.706 | 3.841 | 5.024 | 11 | 17.275 | 19.675 | 21.920 |
| 2 | 4.605 | 5.991 | 7.378 | 12 | 18.549 | 21.026 | 23.337 |
| 3 | 6.251 | 7.815 | 9.348 | 13 | 19.812 | 22.362 | 24.736 |
| 4 | 7.779 | 9.488 | 11.143 | 14 | 21.064 | 23.685 | 26.119 |
| 5 | 9.236 | 11.071 | 12.833 | 15 | 22.307 | 24.996 | 27.488 |
| 6 | 10.645 | 12.592 | 14.449 | 16 | 23.542 | 26.296 | 28.845 |
| 7 | 12.017 | 14.067 | 16.013 | 17 | 24.769 | 27.587 | 30.191 |
| 8 | 13.362 | 15.507 | 17.535 | 18 | 25.989 | 28.869 | 31.526 |
| 9 | 14.684 | 16.919 | 19.023 | 19 | 27.204 | 30.144 | 32.852 |
| 10 | 15.987 | 18.307 | 20.483 | 20 | 28.412 | 31.410 | 34.170 |
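Using the table amounts to a lookup followed by a comparison. A minimal sketch, assuming the 5% significance level and copying only the α = 0.05 column for df = 1 to 10 from Table III (the names `CRITICAL_05` and `reject_null` are illustrative):

```python
# Critical values for alpha = 0.05, copied from Table III (df = 1..10).
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.071,
               6: 12.592, 7: 14.067, 8: 15.507, 9: 16.919, 10: 18.307}


def reject_null(chi2, n_bins):
    """Return True if chi2 reaches the 5% critical value for df = n_bins - 1."""
    df = n_bins - 1
    return chi2 >= CRITICAL_05[df]


print(reject_null(1.28, 2))    # coin example, df = 1
print(reject_null(10.28, 6))   # die example, df = 5
```

The degrees of freedom are taken as k − 1 here, which is the typical case described in the text.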

Fractional Uncertainty Revisited<br />

When a reported value is determined by taking the average of a set of independent readings, the fractional uncertainty is given by the ratio of the uncertainty divided by the average value. For this example,

$$\text{fractional uncertainty} = \frac{\text{uncertainty}}{\text{average}} = \frac{0.05\ \text{cm}}{31.19\ \text{cm}} = 0.0016 \approx 0.002$$

Note that the fractional uncertainty is dimensionless (the uncertainty in cm was divided by the average in cm). An experimental physicist might make the statement that this measurement "is good to about 1 part in 500" or "precise to about 0.2%."

The fractional uncertainty is also important because it is used in propagating uncertainty in calculations using the result of a measurement, as discussed in the next section.

Propagation of Uncertainty

Let's say we are given a functional relationship between several measured variables (x, y, z),

$$Q = f(x, y, z)$$

What is the uncertainty in Q if the uncertainties in x, y, and z are known?

To calculate the variance in Q = f(x, y) as a function of the variances in x and y, we use the following:

$$\sigma_Q^2 = \sigma_x^2\left(\frac{\partial Q}{\partial x}\right)^2 + \sigma_y^2\left(\frac{\partial Q}{\partial y}\right)^2 + 2\sigma_{xy}\left(\frac{\partial Q}{\partial x}\right)\left(\frac{\partial Q}{\partial y}\right) \qquad (8)$$

Here $\sigma_{xy}$ is the covariance of x and y. If the variables x and y are uncorrelated ($\sigma_{xy} = 0$), the last term in the above equation is zero.

We can derive equation 8 as follows:

Assume we have several measurements of the quantities x (e.g. $x_1, x_2, \ldots, x_N$) and y (e.g. $y_1, y_2, \ldots, y_N$). Then the averages of x and y are

$$\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i \quad \text{and} \quad \bar{y} = \frac{1}{N}\sum_{i=1}^{N} y_i$$

Assume that the measured values are close to the average values, and let $\bar{Q} = f(\bar{x}, \bar{y})$ be Q evaluated at the average values. Let $Q_i = f(x_i, y_i)$. Now expand $Q_i$ about the average values:

$$Q_i = f(\bar{x}, \bar{y}) + (x_i - \bar{x})\left.\frac{\partial Q}{\partial x}\right|_{\bar{x},\bar{y}} + (y_i - \bar{y})\left.\frac{\partial Q}{\partial y}\right|_{\bar{x},\bar{y}} + \text{higher order terms}$$

Now take the difference and neglect the higher order terms:

$$Q_i - \bar{Q} = (x_i - \bar{x})\left.\frac{\partial Q}{\partial x}\right|_{\bar{x},\bar{y}} + (y_i - \bar{y})\left.\frac{\partial Q}{\partial y}\right|_{\bar{x},\bar{y}}$$

The variance is

$$\begin{aligned}
\sigma_Q^2 &= \frac{1}{N}\sum_{i=1}^{N}(Q_i - \bar{Q})^2 \\
&= \frac{1}{N}\sum_{i=1}^{N}(x_i - \bar{x})^2\left(\left.\frac{\partial Q}{\partial x}\right|_{\bar{x},\bar{y}}\right)^2 + \frac{1}{N}\sum_{i=1}^{N}(y_i - \bar{y})^2\left(\left.\frac{\partial Q}{\partial y}\right|_{\bar{x},\bar{y}}\right)^2 + \frac{2}{N}\sum_{i=1}^{N}(x_i - \bar{x})(y_i - \bar{y})\left.\frac{\partial Q}{\partial x}\right|_{\bar{x},\bar{y}}\left.\frac{\partial Q}{\partial y}\right|_{\bar{x},\bar{y}} \\
&= \sigma_x^2\left(\left.\frac{\partial Q}{\partial x}\right|_{\bar{x},\bar{y}}\right)^2 + \sigma_y^2\left(\left.\frac{\partial Q}{\partial y}\right|_{\bar{x},\bar{y}}\right)^2 + 2\left.\frac{\partial Q}{\partial x}\right|_{\bar{x},\bar{y}}\left.\frac{\partial Q}{\partial y}\right|_{\bar{x},\bar{y}}\,\frac{1}{N}\sum_{i=1}^{N}(x_i - \bar{x})(y_i - \bar{y})
\end{aligned}$$

Since the derivatives are evaluated at the average values $(\bar{x}, \bar{y})$, we can pull them out of the summation. The remaining sum in the last term, $\frac{1}{N}\sum_{i=1}^{N}(x_i - \bar{x})(y_i - \bar{y})$, is the covariance $\sigma_{xy}$, which gives equation 8.
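The result of the derivation can be checked numerically. For a linear function the neglected higher order terms vanish, so the variance formula should hold exactly. The sketch below assumes the 1/N normalisation used above; the data values and the coefficients a and b are made up for the check.

```python
def mean(values):
    return sum(values) / len(values)


def covariance(xs, ys):
    """Population covariance with the 1/N normalisation used in the derivation."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


# Made-up, correlated measurements.
xs = [1.0, 2.0, 4.0, 7.0]
ys = [3.0, 1.0, 5.0, 2.0]
a, b = 2.0, 3.0  # Q = a*x + b*y, so dQ/dx = a and dQ/dy = b

qs = [a * x + b * y for x, y in zip(xs, ys)]
direct = covariance(qs, qs)  # variance of Q computed from the Q_i themselves
formula = (a**2 * covariance(xs, xs) + b**2 * covariance(ys, ys)
           + 2 * a * b * covariance(xs, ys))
print(direct, formula)
```

For a nonlinear function the two numbers would agree only approximately, to the extent that the measured values stay close to their averages.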

Example: Power in an electric circuit is P = I²R.

Let I = 1.0 ± 0.1 A and R = 10.0 ± 1.0 Ω. Determine the power and its uncertainty using propagation of errors, assuming I and R are uncorrelated.

$$\begin{aligned}
\sigma_P^2 &= \sigma_I^2\left(\frac{\partial P}{\partial I}\right)^2_{I=1} + \sigma_R^2\left(\frac{\partial P}{\partial R}\right)^2_{R=10} \\
&= \sigma_I^2\,(2IR)^2 + \sigma_R^2\,(I^2)^2 \\
&= (0.1)^2\,(2 \times 1.0 \times 10.0)^2 + (1.0)^2\,(1.0^2)^2 \\
&= 5\ \text{watts}^2
\end{aligned}$$

The uncertainty in the power is the square root of the variance.

P = I²R = 10 ± 2 W

If the true value of the power was 10.0 W, and we measured it many times with an uncertainty σ = ± 2 W and Gaussian statistics apply, then 68% of the measurements would lie in the range of 8 to 12 watts.
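The same calculation can be automated for uncorrelated inputs by estimating the partial derivatives numerically. This is a sketch under stated assumptions: the helper `propagate`, its `rel_step` parameter, and the use of central finite differences are illustrative choices rather than part of the method described above.

```python
from math import sqrt


def propagate(f, values, sigmas, rel_step=1e-6):
    """Standard uncertainty of f(*values) for uncorrelated inputs (equation 8 without the covariance term)."""
    variance = 0.0
    for i, (v, s) in enumerate(zip(values, sigmas)):
        h = rel_step * (abs(v) if v != 0 else 1.0)
        up = list(values)
        up[i] = v + h
        down = list(values)
        down[i] = v - h
        dfdx = (f(*up) - f(*down)) / (2 * h)  # central-difference partial derivative
        variance += (s * dfdx) ** 2
    return sqrt(variance)


def power(current, resistance):
    return current**2 * resistance


sigma_p = propagate(power, [1.0, 10.0], [0.1, 1.0])
print(power(1.0, 10.0), round(sigma_p, 2))
```

The unrounded uncertainty is the square root of 5, about 2.2 W, which is reported as 2 W.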

More Examples:<br />

In each of the following examples, the uncertainty and the fractional uncertainty are given.

(a) f = x + y

$$\frac{\partial f}{\partial x} = 1 \quad \text{and} \quad \frac{\partial f}{\partial y} = 1$$

$$\sigma_f = \sqrt{\sigma_x^2 + \sigma_y^2} \quad \text{and} \quad \frac{\sigma_f}{f} = \frac{\sqrt{\sigma_x^2 + \sigma_y^2}}{x + y}$$

(b) f = xy

$$\frac{\partial f}{\partial x} = y \quad \text{and} \quad \frac{\partial f}{\partial y} = x$$

$$\sigma_f = \sqrt{y^2\sigma_x^2 + x^2\sigma_y^2} \quad \text{and} \quad \frac{\sigma_f}{f} = \sqrt{\frac{\sigma_x^2}{x^2} + \frac{\sigma_y^2}{y^2}}$$

(c) f = x/y

$$\frac{\partial f}{\partial x} = \frac{1}{y} \quad \text{and} \quad \frac{\partial f}{\partial y} = -\frac{x}{y^2}$$

$$\sigma_f = \sqrt{\frac{\sigma_x^2}{y^2} + \frac{x^2\sigma_y^2}{y^4}} \quad \text{and} \quad \frac{\sigma_f}{f} = \sqrt{\frac{\sigma_x^2}{x^2} + \frac{\sigma_y^2}{y^2}}$$

Note: the fractional uncertainty in f, as shown in (b) and (c) above, has the same form for multiplication and division. The fractional uncertainty in a product or quotient is the square root of the sum of the squares of the fractional uncertainties of the individual terms, as long as the terms are not correlated.

Example: Find the fractional uncertainty in v, where v = at, with a = 9.8 ± 0.1 m/s² and t = 3.4 ± 0.1 s.

$$\frac{\sigma_v}{v} = \sqrt{\frac{\sigma_a^2}{a^2} + \frac{\sigma_t^2}{t^2}} = \sqrt{\left(\frac{0.1}{9.8}\right)^2 + \left(\frac{0.1}{3.4}\right)^2} = 0.031 \ \text{or} \ 3.1\%$$

Notice that since the relative uncertainty in t (2.9%) is significantly greater than the relative uncertainty for a (1.0%), the relative uncertainty in v is essentially the same as for t (about 3%).

Time-saving approximation: "A chain is only as strong as its weakest link."

If one of the uncertainty terms is more than 3 times greater than the other terms, the root-sum-of-squares formula can be skipped, and the combined uncertainty is simply the largest uncertainty. This shortcut can save a lot of time with very little loss of accuracy in the estimate of the overall uncertainty.
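The size of the error made by the shortcut can be seen by computing both estimates for the v = at example. The sketch uses the numbers that appear in the worked calculation (0.1/9.8 and 0.1/3.4); the function name `fractional_uncertainty` is illustrative.

```python
from math import sqrt


def fractional_uncertainty(*terms):
    """Root-sum-of-squares of fractional uncertainties given as (sigma, value) pairs."""
    return sqrt(sum((s / v) ** 2 for s, v in terms))


full = fractional_uncertainty((0.1, 9.8), (0.1, 3.4))  # full quadrature sum
largest = max(0.1 / 9.8, 0.1 / 3.4)                    # "weakest link" shortcut
print(round(full, 3), round(largest, 3))
```

Both estimates round to about 3%, which is all the precision an uncertainty estimate usually justifies.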

The Upper-Lower Bound Method of Uncertainty Propagation

An alternative, and sometimes simpler, procedure to the tedious propagation of uncertainty law is the upper-lower bound method of uncertainty propagation. This alternative method does not yield a standard uncertainty estimate (with a 68% confidence interval), but it does give a reasonable estimate of the uncertainty for practically any situation. The basic idea of this method is to use the uncertainty ranges of each variable to calculate the maximum and minimum values of the function. You can also think of this procedure as examining the best and worst case scenarios. For example, if you took an angle measurement θ = 25° ± 1° and you needed to find f = cos θ, then, since the cosine decreases as the angle increases in this range,

f max = cos(24°) = 0.9135 and f min = cos(26°) = 0.8988

f ≈ 0.906 ± 0.007

Note that even though θ was only measured to 2 significant figures, f is known to 3 figures.
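The upper-lower bound procedure is easy to automate. The sketch below evaluates the function at every combination of lower and upper input values and reports the midpoint and half-range. It assumes the function is monotonic in each variable over the uncertainty range, so that the extremes occur at the corners; the helper name `upper_lower_bound` is hypothetical.

```python
from itertools import product
from math import cos, radians


def upper_lower_bound(f, values, uncertainties):
    """Evaluate f at every combination of (value - u, value + u); return midpoint and half-range."""
    corners = product(*[(v - u, v + u) for v, u in zip(values, uncertainties)])
    results = [f(*c) for c in corners]
    f_min, f_max = min(results), max(results)
    return (f_max + f_min) / 2, (f_max - f_min) / 2


# theta = 25 degrees +/- 1 degree, f = cos(theta)
best, half_range = upper_lower_bound(lambda deg: cos(radians(deg)), [25.0], [1.0])
print(round(best, 3), round(half_range, 3))
```

Taking `min` and `max` of the results avoids having to decide in advance which input bound produces the larger function value.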

As shown in this example, the uncertainty estimate from the upper-lower bound method is generally larger than the standard uncertainty estimate found from the propagation of uncertainty law.

The upper-lower bound method is especially useful when the functional relationship is not clear or is incomplete. One practical application is forecasting the expected range in an expense budget. In this case, some expenses may be fixed, while others may be uncertain, and the range of these uncertain terms could be used to predict the upper and lower bounds on the total expense.

Use of Significant Figures for Simple Propagation of Uncertainty

By following a few simple rules, significant figures can be used to find the appropriate precision for a calculated result for the four most basic math functions, all without the use of complicated formulas for propagating uncertainties.

For multiplication and division, the number of significant figures that are reliably known in a product or quotient is the same as the smallest number of significant figures in any of the original numbers.

Example:

6.6 (2 significant figures)

× 7328.7 (5 significant figures)

48369.42 = 4.8 × 10⁴ (2 significant figures)

For addition and subtraction, the result should be rounded off to the last decimal place reported for the least precise number.

Examples:

223.64 + 54 = 278

5560.5 + 0.008 = 5560.5

If a calculated number is to be used in further calculations, it is good practice to keep at least one extra digit to reduce rounding errors that may accumulate. Then the final answer should be rounded according to the above guidelines.
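Both rounding rules can be expressed in a few lines of Python. The helper `round_sig` is an illustrative name; it rounds to a given number of significant figures by way of scientific-notation formatting, while the built-in `round` handles rounding to a decimal place.

```python
def round_sig(x, n):
    """Round x to n significant figures."""
    return float(f"{x:.{n - 1}e}")


# Multiplication: keep the smaller number of significant figures (2).
product_value = round_sig(6.6 * 7328.7, 2)

# Addition: round to the last decimal place of the least precise term.
sum_a = round(223.64 + 54, 0)      # 54 is known only to the ones place
sum_b = round(5560.5 + 0.008, 1)   # 5560.5 is known only to the tenths place
print(product_value, sum_a, sum_b)
```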

Uncertainty and Significant Figures

For the same reason that it is dishonest to report a result with more significant figures than are reliably known, the uncertainty value should also not be reported with excessive precision.

For example, if we measure the density of copper, it would be unreasonable to report a result like:

measured density = 8.93 ± 0.4753 g/cm³ WRONG!

The uncertainty in the measurement cannot be known to that precision. In most experimental work, the confidence in the uncertainty estimate is not much better than about ± 50% because of all the various sources of error, none of which can be known exactly. Therefore, to be consistent with this large uncertainty in the uncertainty (!), the uncertainty value should be stated to only one significant figure (or perhaps 2 sig. figs. if the first digit is a 1). Experimental uncertainties should be rounded to one (or at most two) significant figures. So the uncertainty in the above result should be stated as ± 0.5 g/cm³.

To help give a sense of the amount of confidence that can be placed in the standard deviation, Table IV indicates the relative uncertainty associated with the standard deviation for various sample sizes. Note that in order for an uncertainty value to be reported to 3 significant figures, more than 10 000 readings would be required to justify this degree of precision!

When an explicit uncertainty estimate is made, the uncertainty term indicates how many significant figures should be reported in the measured value (not the other way around!). For example, the uncertainty in the density measurement above is about 0.5 g/cm³, so this tells us that the digit in the tenths place is uncertain, and should be the last one reported. The other digits in the hundredths place and beyond are insignificant, and should not be reported:

measured density = 8.9 ± 0.5 g/cm³ RIGHT!

An experimental value should be rounded to an appropriate number of significant figures consistent with its uncertainty. This generally means that the last significant figure in any reported measurement should be in the same decimal place as the uncertainty.
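This reporting rule can be sketched as a small formatting helper. The sketch always rounds the uncertainty to one significant figure (it does not implement the optional second figure when the leading digit is 1), and the function name `format_measurement` is hypothetical.

```python
from math import floor, log10


def format_measurement(value, uncertainty):
    """Round the uncertainty to one significant figure and the value to the same decimal place."""
    decimals = -floor(log10(abs(uncertainty)))
    u = round(uncertainty, decimals)
    # Rounding can carry into the next decade (e.g. 0.96 -> 1.0); recompute if so.
    decimals = -floor(log10(abs(u)))
    u = round(u, decimals)
    v = round(value, decimals)
    places = max(decimals, 0)
    return f"{v:.{places}f} ± {u:.{places}f}"


print(format_measurement(8.93, 0.4753))  # the copper density example
```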

In most instances, this practice of rounding an experimental result to be consistent with the uncertainty estimate gives the same number of significant figures as the rules discussed earlier for simple propagation of uncertainties for adding, subtracting, multiplying, and dividing.

Caution: When conducting an experiment, it is important to keep in mind that precision is expensive (both in terms of time and material resources). Do not waste your time trying to obtain a precise result when only a rough estimate is required. The cost increases exponentially with the amount of precision required, so the potential benefit of this precision must be weighed against the extra cost.

Table IV. Relative Uncertainty Associated with the Standard Deviation for Various Sample Sizes

| N | Relative Uncert.* | Sig. Figs. Valid | Implied Uncertainty |
|---|---|---|---|
| 2 | 71% | 1 | ± 10% to 100% |
| 3 | 50% | 1 | ± 10% to 100% |
| 4 | 41% | 1 | ± 10% to 100% |
| 5 | 35% | 1 | ± 10% to 100% |
| 10 | 24% | 1 | ± 10% to 100% |
| 20 | 16% | 1 | ± 10% to 100% |
| 30 | 13% | 1 | ± 10% to 100% |
| 50 | 10% | 2 | ± 1% to 10% |
| 100 | 7% | 2 | ± 1% to 10% |
| 10000 | 0.7% | 3 | ± 0.1% to 1% |

*The relative uncertainty is given by the approximate formula:

$$\frac{\sigma_\sigma}{\sigma} = \frac{1}{\sqrt{2(N-1)}}$$
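The second column of Table IV follows directly from the approximate formula, as this short sketch shows. The function name `relative_uncertainty_of_sigma` is illustrative.

```python
from math import sqrt


def relative_uncertainty_of_sigma(n):
    """Approximate relative uncertainty of a standard deviation estimated from n readings."""
    return 1.0 / sqrt(2 * (n - 1))


for n in (2, 3, 4, 5, 10, 20, 30, 50, 100, 10000):
    print(n, f"{100 * relative_uncertainty_of_sigma(n):.1f}%")
```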

Combining and Reporting Uncertainties<br />

In 1993, the International Organization for Standardization (ISO) published the first official worldwide Guide to the Expression of Uncertainty in Measurement. Before this time, uncertainty estimates were evaluated and reported according to different conventions depending on the context of the measurement or the scientific discipline. Here are a few key points from this 100-page guide, which can be found in modified form on the NIST website (see References).

When reporting a measurement, the measured value should be reported along with an estimate of the total combined standard uncertainty of the value. The total uncertainty is found by combining the uncertainty components based on the two types of uncertainty analysis:

Type A evaluation of standard uncertainty – method of evaluation of uncertainty by the statistical analysis of a series of observations. This method primarily includes random errors.

Type B evaluation of standard uncertainty – method of evaluation of uncertainty by means other than the statistical analysis of series of observations. This method includes systematic errors and any other uncertainty factors that the experimenter believes are important.

The individual uncertainty components should be combined using the law of propagation of uncertainties, commonly called the "root-sum-of-squares" or "RSS" method. When this is done, the combined standard uncertainty should be equivalent to the standard deviation of the result, making this uncertainty value correspond with a 68% confidence interval. If a wider confidence interval is desired, the uncertainty can be multiplied by a coverage factor (usually k = 2 or 3) to provide an uncertainty range that is believed to include the true value with a confidence of 95% or 99.7% respectively. If a coverage factor is used, there should be a clear explanation of its meaning so there is no confusion for readers interpreting the significance of the uncertainty value.
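As a rough illustration of the RSS combination and the coverage factor, consider the sketch below. The component values are hypothetical, both are assumed to be standard uncertainties in the same units, and the function names are illustrative.

```python
from math import sqrt


def combined_standard_uncertainty(components):
    """Root-sum-of-squares of uncorrelated standard uncertainty components."""
    return sqrt(sum(u**2 for u in components))


def expanded_uncertainty(u_c, k=2):
    """Multiply the combined standard uncertainty by a coverage factor k."""
    return k * u_c


# Hypothetical components: one Type A (statistical) and one Type B (e.g. instrument).
u_c = combined_standard_uncertainty([0.03, 0.04])
U = expanded_uncertainty(u_c, k=2)
print(round(u_c, 3), round(U, 3))
```

When an expanded uncertainty is reported, the value of k should be stated alongside it, as the text recommends.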

You should be aware that the ± uncertainty notation may be used to indicate different confidence intervals, depending on the scientific discipline or context. For example, a public opinion poll may report that the results have a margin of error of ± 3%, which means that readers can be 95% confident (not 68% confident) that the reported results are accurate within 3 percentage points. In physics, the same average result would be reported with an uncertainty of ± 1.5% to indicate the 68% confidence interval.

Conclusion: "When do measurements agree with each other?"<br />

We now have the resources to answer the fundamental scientific question that was asked at the beginning of this error analysis discussion: "Does my result agree with a theoretical prediction or results from other experiments?"

Generally speaking, a measured result agrees with a theoretical prediction if the prediction lies within the range of experimental uncertainty. Similarly, if two measured values have standard uncertainty ranges that overlap, then the measurements are said to be consistent (they agree). If the uncertainty ranges do not overlap, then the measurements are said to be discrepant (they do not agree). However, you should recognize that this overlap criterion can give two opposite answers depending on the evaluation and confidence level of the uncertainty. It would be unethical to arbitrarily inflate the uncertainty range just to make the measurement agree with an expected value. A better procedure would be to discuss the size of the difference between the measured and expected values within the context of the uncertainty, and try to discover the source of the discrepancy if the difference is truly significant.

References<br />

Taylor, John. An Introduction to Error Analysis, 2nd ed. University Science Books: Sausalito, 1997.

Baird, D.C. Experimentation: An Introduction to Measurement Theory and Experiment Design, 3rd ed. Prentice Hall: Englewood Cliffs, 1995.

Bevington, Philip and Robinson, D. Data Reduction and Error Analysis for the Physical Sciences, 2nd ed. McGraw-Hill: New York, 1991.

Fisher, R.A. Statistical Methods for Research Workers. Oliver & Boyd Publishers, 1958.

ISO. Guide to the Expression of Uncertainty in Measurement. International Organization for Standardization (ISO) and the International Committee on Weights and Measures (CIPM): Switzerland, 1993.

NIST. Guidelines for Evaluating and Expressing the Uncertainty of NIST Measurement Results, 1994. Available online: http://physics.nist.gov/Pubs/guidelines/contents.html

Portions of this document on measurements and error were modified from a document originally prepared by The University of North Carolina at Chapel Hill, Department of Physics and Astronomy.