Parallel programming with OpenMP - LinkSCEEM

Parallel programming with OpenMP - LinkSCEEM

Parallel programming with OpenMP - LinkSCEEM

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

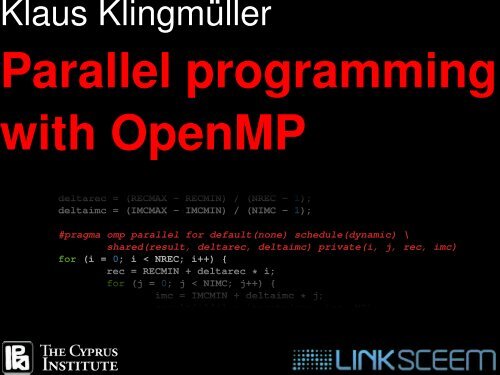

Klaus Klingmüller<br />

<strong>Parallel</strong> <strong>programming</strong><br />

<strong>with</strong> <strong>OpenMP</strong><br />

deltarec = (RECMAX - RECMIN) / (NREC - 1);<br />

deltaimc = (IMCMAX - IMCMIN) / (NIMC - 1);<br />

#pragma omp parallel for default(none) schedule(dynamic) \<br />

shared(result, deltarec, deltaimc) private(i, j, rec, imc)<br />

for (i = 0; i < NREC; i++) {<br />

rec = RECMIN + deltarec * i;<br />

for (j = 0; j < NIMC; j++) {<br />

imc = IMCMIN + deltaimc * j;<br />

result[i][j] = iterate(rec, imc, NI);

Klaus Klingmüller<br />

The Cyprus Institute<br />

Acknowledgement<br />

Alexander Schnurpfeil

Distributed memory: MPI<br />

CPU + RAM | CPU + RAM | CPU + RAM | CPU + RAM<br />

Shared memory: <strong>OpenMP</strong><br />

CPU CPU CPU CPU<br />

RAM

Shared memory nodes<br />


MPI + <strong>OpenMP</strong><br />

CPU CPU CPU CPU<br />

RAM<br />

CPU CPU CPU CPU<br />

RAM<br />

CPU CPU CPU CPU<br />

RAM


Why not simply one MPI task per CPU?<br />

Sometimes this actually is the best solution<br />

But not if<br />

• memory consumption is relevant<br />

• communication overhead is a problem<br />

• the nature of the problem limits the number<br />

of MPI tasks<br />

Often only little extra work required for<br />

MPI/<strong>OpenMP</strong> hybrid-parallelisation


Targets<br />

• Multiprocessor systems<br />

• Multi-core CPUs<br />

• Simultaneous multithreading CPUs<br />

Programming languages<br />

C, C++, Fortran


Highlights<br />

• Easy to use<br />

- compiler works out the details<br />

- incremental parallelisation<br />

- single source code for sequential<br />

and parallel version<br />

• De facto standard<br />

- widely adopted<br />

- strong vendor support

History<br />

• Proprietary designs by some vendors (SGI, CRAY,<br />

ORACLE, IBM, ...) at the end of the 1980s<br />

• Several unsuccessful attempts to standardize the API<br />

• PCF<br />

• ANSI X3H5<br />

• <strong>OpenMP</strong>-Forum founded to define portable API<br />

• 1997 first API for Fortran (V1.0)<br />

• 1998 first API for C/C++ (V1.0)<br />

• 2000 Fortran V2.0<br />

• 2002 C/C++ V2.0<br />

• 2005 combined C/C++/Fortran specification V2.5<br />

• 2008 combined C/C++/Fortran specification V3.0<br />

• 2011 combined C/C++/Fortran specification V3.1<br />

16. August 2011 Slide 12


Why start using <strong>OpenMP</strong> now?<br />

• Multi-core CPUs everywhere<br />

• More and more cores per CPU<br />

Cy-Tera: 12 cores per node<br />

24 simultaneous threads<br />

• OpenACC extends <strong>OpenMP</strong>'s concept to<br />

GPU <strong>programming</strong><br />

tomorrow's lecture<br />

• Many integrated cores (MIC):<br />

1 TFLOPS on one PCIe card


Literature/Links<br />

• Chapman, Jost, van der Pas, Using <strong>OpenMP</strong><br />

• www.openmp.org<br />

Specifications, summary cards<br />

• www.compunity.org<br />

• www.google.com#q=openmp<br />

• linksceem.eu/ATutor/go.php/7/index.php


euclid.cyi.ac.cy<br />

tar -xf /opt/examples/pragma-training/openmp_tutorial.tar.gz


Demo: Mandelbrot set<br />

Complex numbers $c$ for which the sequence $z_0, z_1, z_2, \ldots$, where<br />

$z_0 = 0$, $z_{i+1} = z_i^2 + c$,<br />

is bounded

mandelbrot/cmandelbrot-v1.c<br />


int main()<br />

{<br />

int i, j, result[NREC][NIMC];<br />

double deltarec, deltaimc, rec, imc;<br />

deltarec = (RECMAX - RECMIN) / (NREC - 1);<br />

deltaimc = (IMCMAX - IMCMIN) / (NIMC - 1);<br />

for (i = 0; i < NREC; i++) {<br />

rec = RECMIN + deltarec * i;<br />

for (j = 0; j < NIMC; j++) {<br />

imc = IMCMIN + deltaimc * j;<br />

result[i][j] = iterate(rec, imc, NI);<br />

}<br />

}<br />

printpgm(NREC, NIMC, NI, result[0]);<br />

return 0;<br />

}

mandelbrot/fmandelbrot-v1.f90<br />


PROGRAM mandelbrot<br />

IMPLICIT NONE<br />

INTEGER, PARAMETER :: ni = 30000, nrec = 800, nimc = 600<br />

REAL, PARAMETER :: recmin = -2., recmax = .666, imcmin = -1., imcmax =1.<br />

INTEGER :: n, i, j, result(nrec, nimc)<br />

REAL :: rec, imc, deltarec, deltaimc<br />

COMPLEX :: c<br />

deltarec = (recmax - recmin) / (nrec - 1)<br />

deltaimc = (imcmax - imcmin) / (nimc - 1)<br />

DO j = 1, nimc<br />

imc = imcmin + deltaimc * (j - 1)<br />

DO i = 1, nrec<br />

rec = recmin + deltarec * (i - 1)<br />

c = CMPLX(rec, imc)<br />

CALL iterate(c, ni, n)<br />

result(i, j) = n<br />

END DO<br />

END DO<br />

CALL printpgm(nrec, nimc, ni, result)<br />

END PROGRAM mandelbrot

C<br />

deltarec = (RECMAX - RECMIN) / (NREC - 1);<br />

deltaimc = (IMCMAX - IMCMIN) / (NIMC - 1);<br />

#pragma omp parallel for default(none) \<br />

shared(result, deltarec, deltaimc) private(i, j, rec, imc)<br />

for (i = 0; i < NREC; i++) {<br />

rec = RECMIN + deltarec * i;<br />

for (j = 0; j < NIMC; j++) {<br />

imc = IMCMIN + deltaimc * j;<br />

result[i][j] = iterate(rec, imc, NI);<br />

}<br />

}<br />

Fortran<br />

deltarec = (recmax - recmin) / (nrec - 1)<br />

deltaimc = (imcmax - imcmin) / (nimc - 1)<br />

!$OMP PARALLEL DO DEFAULT(NONE) &<br />

!$OMP SHARED(result, deltarec, deltaimc) PRIVATE(n, i, j, rec, imc, c)<br />

DO j = 1, nimc<br />

imc = imcmin + deltaimc * (j - 1)<br />

DO i = 1, nrec<br />

rec = recmin + deltarec * (i - 1)<br />

c = CMPLX(rec, imc)<br />

CALL iterate(c, ni, n)<br />

result(i, j) = n<br />

END DO<br />

END DO<br />

!$OMP END PARALLEL DO<br />

CALL printpgm(nrec, nimc, ni, result)

Compile & run (C)<br />

make<br />

qsub cmandelbrot-v1.pbs<br />

...<br />

cat out.txt<br />

Compile & run (Fortran)<br />

make<br />

qsub fmandelbrot-v1.pbs<br />

...<br />

cat out.txt

C<br />

deltarec = (RECMAX - RECMIN) / (NREC - 1);<br />

deltaimc = (IMCMAX - IMCMIN) / (NIMC - 1);<br />

#pragma omp parallel for default(none) schedule(dynamic) \<br />

shared(result, deltarec, deltaimc) private(i, j, rec, imc)<br />

for (i = 0; i < NREC; i++) {<br />

rec = RECMIN + deltarec * i;<br />

for (j = 0; j < NIMC; j++) {<br />

imc = IMCMIN + deltaimc * j;<br />

result[i][j] = iterate(rec, imc, NI);<br />

}<br />

}<br />

Fortran<br />

deltarec = (recmax - recmin) / (nrec - 1)<br />

deltaimc = (imcmax - imcmin) / (nimc - 1)<br />

!$OMP PARALLEL DO DEFAULT(NONE) SCHEDULE(DYNAMIC) &<br />

!$OMP SHARED(result, deltarec, deltaimc) PRIVATE(n, i, j, rec, imc, c)<br />

DO j = 1, nimc<br />

imc = imcmin + deltaimc * (j - 1)<br />

DO i = 1, nrec<br />

rec = recmin + deltarec * (i - 1)<br />

c = CMPLX(rec, imc)<br />

CALL iterate(c, ni, n)<br />

result(i, j) = n<br />

END DO<br />

END DO<br />

!$OMP END PARALLEL DO<br />

CALL printpgm(nrec, nimc, ni, result)


Basics

Basic <strong>Parallel</strong> Programming Paradigm: SPMD<br />

• SPMD: Single Program Multiple Data<br />

• Programmer writes one program, which is executed on all<br />

processors<br />

• Basic paradigm for implementing parallel programs<br />

• Special cases (e.g., different control flow) handled inside<br />

the program<br />


Processes and Threads<br />

Process<br />

• active instance of program code<br />

• unique process id (pid)<br />

• stack for storing local data<br />

• heap for storing dynamic data<br />

• space for storing global data<br />

Threads<br />

• one or more threads belong to a process<br />

• thread id (tid)<br />

• each thread has its own stack

Threads<br />

• Elements of a process, e.g. program code, data area, heap<br />

etc. are shared among the existing threads<br />

• A single program <strong>with</strong> several threads is able to handle<br />

several tasks concurrently<br />

• Shared access to common resources:<br />

• Less information has to be stored<br />

• It is more efficient to switch between threads instead of<br />

processes<br />

<strong>OpenMP</strong> is based on this thread concept<br />


Single-threaded vs. Multi-threaded<br />


Initial thread<br />

Fork<br />

Team of threads<br />

Join<br />

Initial thread

<strong>Parallel</strong> construct<br />


C, C++<br />

#pragma omp parallel [clause[[,] clause]. . . ]<br />

structured block<br />

Fortran<br />

!$omp parallel [clause[[,] clause]. . . ]<br />

structured block<br />

!$omp end parallel

parallel_construct/parallel_construct.c<br />

#include <stdio.h><br />

#include <omp.h><br />

int main()<br />

{<br />

printf("This is thread %d. ", omp_get_thread_num());<br />

printf("Number of threads: %d.\n", omp_get_num_threads());<br />

printf("+++\n");<br />

#pragma omp parallel<br />

{<br />

printf("This is thread %d. ", omp_get_thread_num());<br />

printf("Number of threads: %d.\n", omp_get_num_threads());<br />

}<br />

printf("+++\n");<br />

printf("This is thread %d. ", omp_get_thread_num());<br />

printf("Number of threads: %d.\n", omp_get_num_threads());<br />

return 0;<br />

}

Fortran equivalent of the omp.h include:<br />

use omp_lib

Syntax of Directives (C/C++)<br />

• Directives are special compiler pragmas<br />

• Directive and API function names are case-sensitive and<br />

are written lowercase<br />

• There are no END directives as in Fortran, but rather the<br />

directives apply to the following structured block (statement<br />

<strong>with</strong> one entry and one exit)<br />

#pragma omp DirectiveName [ParameterList]<br />

structured-block<br />

• Directive continuation lines:<br />

#pragma omp DirectiveName here-is-something \<br />

and-here-is-some-more<br />

Syntax of Directives (Fortran)<br />

• Directives are special-formatted comments<br />

• Directives ignored by non-<strong>OpenMP</strong> compiler<br />

• Case-insensitive<br />

!$OMP DirectiveName [ParameterList]<br />

block<br />

!$OMP END DirectiveName<br />

• Directive continuation lines:<br />

!$OMP DirectiveName here-is-something &<br />

!$OMP and-here-is-some-more<br />


Directives<br />

• In general, all directives have the same structure in C/C++<br />

and Fortran<br />

#pragma omp DirectiveName [ParameterList]<br />

!$OMP DirectiveName [ParameterList]<br />

• There are directives for<br />

• <strong>Parallel</strong>ism<br />

• Work<br />

• Synchronization<br />

• Many parameters apply to directives equally (e.g.,<br />

PRIVATE(var-list))<br />

Environment Variables<br />

• OMP_NUM_THREADS: set default number of threads for parallel regions<br />

• OMP_SCHEDULE: set scheduling type for parallel loops <strong>with</strong> scheduling<br />

strategy RUNTIME<br />

Run-time Library Functions<br />

#include <omp.h> // header<br />

omp_set_num_threads(nthreads)<br />

• Set number of threads to use for subsequent parallel<br />

regions (overrides value of OMP_NUM_THREADS)<br />

nthreads = omp_get_num_threads()<br />

• Returns the number of threads in current team<br />

iam = omp_get_thread_num()<br />

• Returns thread number ( iam = 0 .. nthreads-1)<br />

t = omp_get_wtime()<br />

• Returns elapsed wall clock time in seconds<br />


Data Environment I<br />

• Data objects (variables) can be shared or private<br />

• By default almost all variables are shared<br />

• Accessible to all threads<br />

• Single instance in shared memory<br />

• Caveat: a pointer itself is shared, but not its contents<br />

• Variables can be declared private<br />

• Then each thread allocates its own private copy of the<br />

data<br />

• Only exists during the execution of a parallel region!<br />

• Value undefined upon entry of parallel region<br />


Data Environment II<br />

• Exceptions to default shared:<br />

• Loop index variable in parallel loop<br />

• Local variables of subroutines called inside parallel<br />

regions<br />

Running <strong>OpenMP</strong> I<br />

Directive Parameters for Data Environment I<br />

• Variables (varList) are declared to be private<br />

PRIVATE(varList)<br />

• Variables (varList) are declared to be shared<br />

SHARED(varList)<br />

• Set the default data mode (private only allowed in Fortran)<br />

DEFAULT(shared | private | none)<br />

• Variables (varList) are declared to be private but also get<br />

initialized <strong>with</strong> the value the original shared variable had<br />

just before entering the parallel region<br />

FIRSTPRIVATE(varList)<br />


Directive Parameters for Data Environment II<br />

• Variables (varList) are declared private and the value from the<br />

sequentially last loop iteration (or the lexically last section) is<br />

written to the corresponding shared variable afterwards<br />

LASTPRIVATE(varList)<br />

• Variables can appear in both FIRSTPRIVATE and<br />

LASTPRIVATE<br />

• (varList): comma-separated list of variables<br />

Running <strong>OpenMP</strong> II<br />

<strong>Parallel</strong> Region II<br />

• Nesting of parallel regions is allowed, but not all compilers<br />

implement this feature yet<br />

• The master thread is always thread 0; it also executes the sequential code of the<br />

program<br />

• Typically, further <strong>OpenMP</strong> constructs are used inside parallel regions<br />

to coordinate the work on the different threads:<br />

work-sharing constructs such as a parallel loop<br />

Exercises: Introduction I<br />

• Go to directory /ex_introduction<br />

a) Write a program where the master thread forks a parallel<br />

region. Each thread should print its thread number.<br />

Furthermore, only the master thread should print the used<br />

number of threads.<br />

b) Let the program run <strong>with</strong> two and four threads respectively.<br />

Set the number of threads by using<br />

1) The corresponding environment variable<br />

2) The corresponding <strong>OpenMP</strong> library function<br />

For Fortran programmers: include "use omp_lib" in your<br />

code when you use the Intel compiler<br />

Loops

Loop construct<br />


C, C++<br />

#pragma omp for [clause[[,] clause]. . . ]<br />

for-loop<br />

Fortran<br />

!$omp do [clause[[,] clause]. . . ]<br />

do-loop<br />

!$omp end do [nowait]

loop_construct/loop_construct-v1.c<br />

#include <stdio.h><br />

#include <omp.h><br />

#define N 10<br />

int main()<br />

{<br />

int i;<br />

#pragma omp parallel<br />

{<br />

#pragma omp for<br />

for (i = 0; i < N; i++) {<br />

printf("i = %d (thread %d)\n", i, omp_get_thread_num());<br />

}<br />

}<br />

return 0;<br />

}

Do-loop Execution I<br />

Program<br />

a = 1<br />

!$OMP PARALLEL<br />

a = 2<br />

!$OMP DO<br />

do i=1,9<br />

call work(i)<br />

enddo<br />

!$OMP END PARALLEL<br />

a = 3<br />

(<strong>Parallel</strong>) Execution<br />

Initial thread: a = 1<br />

Thread 0: a = 2; do i=1,3: call work(i)<br />

Thread 1: a = 2; do i=4,6: call work(i)<br />

Thread 2: a = 2; do i=7,9: call work(i)<br />

Initial thread: a = 3

<strong>Parallel</strong> Loop<br />

• Iterations of a parallel loop are executed in parallel by all<br />

threads of the current team<br />

• The calculations inside an iteration must not depend on<br />

other iterations (responsibility of the programmer)<br />

• A schedule determines how iterations are divided among<br />

the threads<br />

• Specified by SCHEDULE parameter<br />

• Default: implementation dependent!<br />

• The form of the loop has to allow computing the number of<br />

iterations prior to entry into the loop (e.g., no WHILE<br />

loops)<br />

• Implicit barrier synchronization at the end of the loop unless<br />

NOWAIT parameter is specified<br />

<strong>Parallel</strong> Loop for C/C++<br />

• Same restrictions as <strong>with</strong> Fortran DO-loop<br />

• No END pragma: the NOWAIT parameter is specified at entry<br />

of the loop<br />

• for loop can only have a very restricted form<br />

#pragma omp for<br />

for ( var = first ; var cmp-op end ; incr-expr)<br />

loop-body<br />

• Comparison operators (cmp-op)<br />

<, <=, >, >=<br />

• Increment expression (incr-expr)<br />

++var, var++, --var, var--,<br />

var += incr, var -= incr,<br />

var = var + incr, var = incr + var<br />

• first, end, incr: loop-invariant expressions<br />

Canonical Loop Structure<br />

Restrictions on loops to simplify compiler-based parallelization<br />

• Allows number of iterations to be computed at loop entry<br />

• Program must complete all iterations of the loop<br />

• no break or goto leaving the loop (C/C++)<br />

• no exit or goto leaving the loop (Fortran)<br />

• Exiting current iteration and starting next one possible<br />

• continue allowed (C/C++)<br />

• cycle allowed (Fortran)<br />

• Termination of the entire program inside the loop<br />

possible<br />

• exit allowed (C/C++)<br />

• stop allowed (Fortran)<br />

Combined Constructs (Shortcut Version)<br />

• If a parallel region only contains a parallel loop or parallel<br />

sections a shortcut notation can be used:<br />


loop_construct/loop_construct-v2.c<br />

#include <stdio.h><br />

#include <omp.h><br />

#define N 10<br />

int main()<br />

{<br />

int i;<br />

#pragma omp parallel for<br />

for (i = 0; i < N; i++) {<br />

printf("i = %d (thread %d)\n", i, omp_get_thread_num());<br />

}<br />

return 0;<br />

}

Example: Work Sharing <strong>with</strong> <strong>Parallel</strong> Loop I<br />


Example: Work Sharing <strong>with</strong> <strong>Parallel</strong> Loop II<br />

<strong>Parallel</strong> region and parallel for-loop<br />

#pragma omp parallel<br />

{<br />

#pragma omp for schedule(static)<br />

for (i=0 ; i<100 ; i++)<br />

a[i] = b[i] + c[i];<br />

}

Example: Work Sharing <strong>with</strong> <strong>Parallel</strong> Loop III<br />

<strong>Parallel</strong> region and parallel do-loop<br />

!$OMP PARALLEL<br />

!$OMP DO SCHEDULE(STATIC)<br />

do i = 1, 100<br />

a(i) = b(i) + c(i)<br />

enddo<br />

!$OMP END DO<br />

!$OMP END PARALLEL<br />

!$OMP PARALLEL DO SCHEDULE(STATIC)<br />

do i = 1, 100<br />

a(i) = b(i) + c(i)<br />

enddo<br />

!$OMP END PARALLEL DO<br />

Static and Dynamic Scheduling<br />

• Static scheduling<br />

• Distribution is done at loop-entry time based on<br />

• Number of threads<br />

• Total number of iterations<br />

• Less flexible<br />

• Almost no scheduling overhead<br />

• Dynamic scheduling<br />

• Distribution is done during execution of the loop<br />

• Each thread is assigned a subset of the iterations at loop<br />

entry<br />

• After completion each thread asks for more iterations<br />

• More flexible<br />

• Can easily adjust to load imbalances<br />

• More scheduling overhead (synchronization)<br />


Scheduling Strategies I<br />

• Distribution of iterations occurs in chunks<br />

• Chunks may have different sizes<br />

• Chunks are assigned either statically or dynamically<br />

• There are different assignment algorithms (types)<br />

• SCHEDULE parameter<br />

schedule (type [, chunk])<br />

• Schedule types<br />

• static<br />

• dynamic<br />

• guided<br />

• runtime<br />


Scheduling Strategies II<br />

• Schedule type STATIC <strong>with</strong>out chunk size<br />

• One chunk of iterations per thread, all chunks (nearly) equal size<br />

• Schedule type STATIC <strong>with</strong> chunk size<br />

• Chunks <strong>with</strong> specified size are assigned in round-robin fashion<br />

• Schedule type DYNAMIC<br />

• Threads request new chunks dynamically<br />

• Default chunk size is 1<br />

• Schedule type GUIDED<br />

• First chunk has implementation-dependent size<br />

• Size of each successive chunk decreases exponentially<br />

• Chunks are assigned dynamically<br />

• Chunk size specifies minimum size, default is 1<br />


Scheduling Strategies III<br />

• Schedule type RUNTIME<br />

• Scheduling strategy is determined by environment variable<br />

• If variable is not set, scheduling implementation dependent<br />

export OMP_SCHEDULE="type [, chunk]"<br />

• No SCHEDULE parameter<br />

• Scheduling implementation dependent<br />

• Correctness of program must not depend on scheduling<br />

strategy<br />

• Setting via Runtime Libraries Routines possible (<strong>OpenMP</strong><br />

3.0)<br />

• omp_set_schedule / omp_get_schedule<br />


Scheduling - Summary<br />

| Type | Chunk | Chunk size (iterations) | Number of chunks |
|---|---|---|---|
| static | no | n/p | p |
| static | yes | c | n/c (overlapping) |
| dynamic | optional | c | n/c |
| guided | optional | first n/p, then decreasing | less than n/c |
| runtime | no | depends | depends |

n: number of iterations, p: number of threads, c: chunk size<br />

Reason for Different Work Schedules<br />

Work load should be balanced, i.e. each thread needs the same<br />

period of time to handle its task<br />

• Load might randomly differ from iteration to iteration<br />

‣ dynamic scheduling<br />

• Execution speed might increase/decrease <strong>with</strong> increasing iteration<br />

index<br />

‣ guided scheduling: chunk size is proportional to the number of<br />

iterations left divided by the number of threads in the team<br />

Exercise: <strong>OpenMP</strong> Work Distribution<br />

do i = 1,10<br />

call work(i)<br />

end do<br />

Which schedule clause results in which work distribution?<br />

a)<br />

b)<br />

c)<br />

d)<br />

1 2 3 4 5 6 7 8 9 10<br />

1 2 3 4 5 6 7 8 9 10<br />

1 2 3 4 5 6 7 8 9 10<br />

1 2 3 4 5 6 7 8 9 10<br />

= thread 0<br />

= thread 1<br />

= thread 2<br />

= thread 3<br />


<strong>OpenMP</strong> Work Distribution<br />

• Sequential:<br />

do i = 1,10<br />

call work(i)<br />

end do<br />

• Cyclic:<br />

!$omp do schedule(static,1) ...<br />

• Block:<br />

!$omp do schedule(static) ...<br />

• <strong>OpenMP</strong> also provides:<br />

schedule(dynamic [, chunk]), schedule(guided [, chunk])<br />

[Figure, two panels: executing thread plotted against Re c]<br />

Exercise<br />

Test different scheduling options in the<br />

Mandelbrot example and compare the<br />

performance

Synchronization: NOWAIT<br />

• Use NOWAIT parameter for parallel loops which do not<br />

need to be synchronized upon exit of the loop<br />

• keeps synchronization overhead low<br />

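#pragma omp parallel<br />

{<br />

#pragma omp for nowait<br />

for (i=0 ; i<100 ; i++)<br />

a[i] = b[i] + c[i];<br />

#pragma omp for nowait<br />

for (i=0 ; i<100 ; i++)<br />

z[i] = sqrt(b[i]);<br />

}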

Synchronization: NOWAIT<br />

• Use NOWAIT parameter for parallel loops which do not<br />

need to be synchronized upon exit of the loop<br />

• keeps synchronization overhead low<br />

!$OMP PARALLEL<br />

!$OMP DO<br />

do i = 1, 100<br />

a(i) = b(i) + c(i)<br />

enddo<br />

!$OMP END DO NOWAIT<br />

!$OMP DO<br />

do i = 1, 100<br />

z(i) = sqrt(b(i))<br />

enddo<br />

!$OMP END DO NOWAIT<br />

!$OMP END PARALLEL<br />

Loop Nests<br />

• <strong>Parallel</strong> loop construct only applies to loop directly following<br />

it<br />

• Nesting of work-sharing constructs is illegal in <strong>OpenMP</strong>!<br />

• <strong>Parallel</strong>ization of nested loops<br />

• Normally try to parallelize the outer loop: less overhead<br />

• Sometimes necessary to parallelize inner loops (e.g., small n): rearrange<br />

loops?<br />

• Manually re-write into one loop<br />

• Nested parallel regions not yet supported by all compilers<br />


If Clause: Conditional <strong>Parallel</strong>ization I<br />

• Avoiding parallel overhead because of low number of loop<br />

iterations<br />

Explicit versioning:<br />

if (size > 10000) {<br />

#pragma omp parallel for<br />

for (i=0 ; i

If Clause: Conditional <strong>Parallel</strong>ization II<br />

Using the "if clause":<br />

#pragma omp parallel for if (size > 10000)<br />

for (i=0 ; i

Exercises: Introduction II<br />

a) Write a program that implements DAXPY (Double<br />

precision real Alpha X Plus Y):<br />

$\vec{y} = \alpha \vec{x} + \vec{y}$<br />

(vector sizes and values can be chosen as you like)<br />

‣ Measure the time it takes as a function of the number<br />

of threads<br />

Exercise<br />

Matrix times vector<br />

$\sum_j a_{ij} x_j$<br />

Solution in using_openmp_examples/


Reduction

Reduction Parameter<br />

• For solving cases like this, <strong>OpenMP</strong> provides the<br />

REDUCTION parameter<br />


Reductions<br />

• Reductions often occur <strong>with</strong>in parallel regions or loops<br />

• Reduction variables have to be shared in enclosing parallel<br />

context<br />

• Thread-local results get combined <strong>with</strong> outside variables<br />

using reduction operation<br />

• Note: the order of operations is unspecified, so the result can differ<br />

from that of the sequential version<br />

• Applications: Compute sum of array or find the largest<br />

element<br />

Reductions, Example<br />

Reduction Operators (C/C++)<br />

The table shows:<br />

• The operators allowed for reductions<br />

• The initial values used to initialize the private copies<br />

Operator Data Types Initial Value<br />

+ floating point, integer 0<br />

* floating point, integer 1<br />

- floating point, integer 0<br />

& integer all bits on<br />

| integer 0<br />

^ integer 0<br />

&& integer 1<br />

|| integer 0<br />

Reduction Operators I (Fortran)<br />

The table shows:<br />

• The operators and intrinsics allowed for reductions<br />

• The initial values used to initialize<br />

Operator Data Types Initial Value<br />

+ floating point, integer (complex or real) 0<br />

* floating point, integer (complex or real) 1<br />

- floating point, integer (complex or real) 0<br />

.AND. logical .TRUE.<br />

.OR. logical .FALSE.<br />

.EQV. logical .TRUE.<br />

.NEQV. logical .FALSE.<br />

Reduction Operators II (Fortran)<br />

Operator Data Types Initial Value<br />

MAX floating point, integer (real only) smallest possible value<br />

MIN floating point, integer (real only) largest possible value<br />

IAND integer all bits on<br />

IOR integer 0<br />

IEOR integer 0<br />

Exercises<br />

• $x \cdot y$<br />

• $n!$<br />

reduction_clause/reduction_clause.c<br />

#include <stdio.h><br />

int main()<br />

{<br />

int i;<br />

double a[3], b[3], dotp;<br />

a[0] = .2;<br />

a[1] = 2.2;<br />

a[2] = -1.;<br />

b[0] = 1.;<br />

b[1] = -.5;<br />

b[2] = 1.5;<br />

dotp = 0;<br />

#pragma omp parallel for reduction(+:dotp) \<br />

default(none) shared(a, b) private(i)<br />

for (i = 0; i < 3; i++) {<br />

dotp += a[i] * b[i];<br />

}<br />

printf("%g\n", dotp);<br />

return 0;<br />

}

Exercises: Advanced Topics I<br />

• Go to directory /ex_advanced_topics<br />

a) π can be calculated in the following way:<br />

$$\pi = \int_0^1 \frac{4.0}{1.0 + x^2}\,dx \approx \Delta x \sum_{i=1}^{n} \frac{4.0}{1.0 + x_i^2}, \qquad \Delta x = \frac{1}{n}, \quad x_i = (i - 0.5)\,\Delta x$$<br />

Write a program that does the calculation and parallelize it<br />

using <strong>OpenMP</strong> (10^8 iterations). How much time does it<br />

take?<br />

Play around with the "schedule" clause. Which setting<br />

gives the best performance?<br />

16. August 2011 Slide 96

Exercise<br />

Monte Carlo computation of<br />

π<br />

Random number generators in random/ (don't use them<br />

for science), solution in pi/

pi/cpi.c<br />

#include <stdio.h><br />

#ifdef _OPENMP<br />

#include <omp.h><br />

#else<br />

#define omp_get_thread_num() 0<br />

#endif<br />

#define N 1000000000<br />

#include "../random/rand_r.c"<br />

int main()<br />

{<br />

long i, counter;<br />

unsigned seed;<br />

double x, y, pi;<br />

counter = 0;<br />

#pragma omp parallel default(none) private(i, x, y, seed) \<br />

reduction(+: counter)<br />

{<br />

seed = omp_get_thread_num();<br />

#pragma omp for<br />

for (i = 0; i < N; i++) {<br />

x = (double) rand_r(&seed) / RAND_MAX;<br />

y = (double) rand_r(&seed) / RAND_MAX;<br />

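/* the slide is cut off after "y * y"; the rest is a reconstruction */<br />

if (x * x + y * y < 1) counter++;<br />

}<br />

}<br />

pi = 4. * counter / N;<br />

printf("%g\n", pi);<br />

return 0;<br />

}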


Conditional Compilation<br />

• Preprocessor macro _OPENMP for C/C++ and Fortran:<br />

#ifdef _OPENMP<br />

iam = omp_get_thread_num();<br />

#endif<br />

• Special comment for Fortran preprocessor<br />

!$ iam = OMP_GET_THREAD_NUM()<br />

• Helpful for checking the serial and parallel versions of the code<br />

16. August 2011 Slide 30


Synchronisation

What now?<br />

16. August 2011 Slide 61

Critical Region<br />

16. August 2011 Slide 62

Critical Region II<br />

• A critical region restricts execution of the associated block<br />

of statements to a single thread at a time<br />

• An optional name may be used to identify the critical region<br />

• A thread waits at the beginning of a critical region until no<br />

other thread is executing a critical region (anywhere in the<br />

program) <strong>with</strong> the same name<br />

16. August 2011 Slide 63

Example: Critical Region<br />

• Note: the loop body only consists of a critical region<br />

• The program gets extremely slow → no speedup<br />

16. August 2011 Slide 64


Critical Region III<br />

16. August 2011 Slide 66

Example: Critical Region II<br />

16. August 2011 Slide 67


Atomic Statements<br />

• The ATOMIC directive ensures that a specific memory<br />

location is updated atomically.<br />

• An OpenMP implementation may replace it with a CRITICAL<br />

construct, but the ATOMIC construct permits better optimization<br />

(based on hardware instructions).<br />

• The statement following the ATOMIC directive must have<br />

one of the following forms (C/C++): x binop= expr, x++, ++x, x--, --x<br />

16. August 2011 Slide 69


Example: Atomic Statement<br />

s = 0<br />

#pragma omp parallel private(i, s_local)<br />

{<br />

s_local = 0.0;<br />

#pragma omp for<br />

for (i=0 ; i

Example: Atomic Statement<br />

16. August 2011 Slide 72

Locks<br />

• More flexible way of implementing “critical regions”<br />

• Lock variable type: omp_lock_t, passed by address<br />

• Initialize lock<br />

omp_init_lock(&lockvar)<br />

• Remove (deallocate) lock<br />

omp_destroy_lock(&lockvar)<br />

• Blocks calling thread until lock is available<br />

omp_set_lock(&lockvar)<br />

• Release lock<br />

omp_unset_lock(&lockvar)<br />

• Test and try to set lock (returns 1 if success else 0)<br />

intvar = omp_test_lock(&lockvar)<br />

16. August 2011 Slide 83

Locks<br />

• More flexible way of implementing “critical regions”<br />

• Lock variables must be of type INTEGER (kind OMP_LOCK_KIND)<br />

• Initialize lock<br />

CALL OMP_INIT_LOCK(lockvar)<br />

• Remove (deallocate) lock<br />

CALL OMP_DESTROY_LOCK(lockvar)<br />

• Blocks calling thread until lock is available<br />

CALL OMP_SET_LOCK(lockvar)<br />

• Release lock<br />

CALL OMP_UNSET_LOCK(lockvar)<br />

• Test and try to set lock (returns TRUE if success)<br />

logicalvar = OMP_TEST_LOCK(lockvar)<br />

16. August 2011 Slide 84

Example: Locks<br />

16. August 2011 Slide 85


Master / Single I<br />

• Sometimes it is useful that <strong>with</strong>in a parallel region just one<br />

thread is executing code, e.g., to read/write data. <strong>OpenMP</strong><br />

provides two ways to accomplish this:<br />

• MASTER construct: Only the master thread (thread 0)<br />

executes the enclosed code; all other threads skip<br />

this block of statements. There is no implicit<br />

barrier<br />

#pragma omp master<br />

{ statementBlock }<br />

!$OMP MASTER<br />

statementBlock<br />

!$OMP END MASTER<br />

16. August 2011 Slide 80

Master / Single II<br />

• Single construct: The first thread reaching the<br />

directive executes the code. All threads execute an<br />

implicit barrier synchronization unless NOWAIT is<br />

specified<br />

#pragma omp single [nowait, private(list),...]<br />

{ statementBlock }<br />

!$OMP SINGLE [PRIVATE(list), FIRSTPRIVATE(list)]<br />

statementBlock<br />

!$OMP END SINGLE [NOWAIT]<br />

16. August 2011 Slide 81

single_construct/single_construct.c<br />

#include <stdio.h><br />

#include <omp.h><br />

#define N 4<br />

int main()<br />

{<br />

int i, j;<br />

j = 1;<br />

#pragma omp parallel default(none) shared(j) private(i)<br />

{<br />

#pragma omp for<br />

for (i = 0; i < N; i++) {<br />

printf("i = %d, j = %d (thread %d)\n", i, j,<br />

omp_get_thread_num());<br />

}<br />

#pragma omp single<br />

{<br />

j = 2;<br />

printf("Thread %d changes the shared variable j.\n",<br />

omp_get_thread_num());<br />

}<br />

#pragma omp for<br />

for (i = 0; i < N; i++) {<br />

printf("i = %d, j = %d (thread %d)\n", i, j,<br />

omp_get_thread_num());<br />

}<br />

}<br />

return 0;<br />

}

Barrier<br />

• The barrier directive explicitly synchronizes all the threads<br />

in a team.<br />

• When encountered, each thread in the team waits until all<br />

the others have reached this point<br />

#pragma omp barrier<br />

!$OMP BARRIER<br />

• There are also implicit barriers at the end<br />

• of parallel regions<br />

• of work-sharing constructs (DO/for, SECTIONS, SINGLE);<br />

the latter can be disabled by specifying NOWAIT<br />

16. August 2011 Slide 82


Misc

Section construct<br />


C, C++<br />

#pragma omp sections [clause[[,] clause]. . . ]<br />

{<br />

#pragma omp section<br />

structured block<br />

#pragma omp section<br />

structured block<br />

...<br />

}<br />

Fortran<br />

!$omp sections [clause[[,] clause]. . . ]<br />

!$omp section<br />

structured block<br />

!$omp section<br />

structured block<br />

...<br />

!$omp end sections [nowait]

section_construct/section_construct.c<br />

#include <stdio.h><br />

#include <omp.h><br />

int main()<br />

{<br />

#pragma omp parallel sections<br />

{<br />

#pragma omp section<br />

printf("Section 1: thread %d\n", omp_get_thread_num());<br />

#pragma omp section<br />

printf("Section 2: thread %d\n", omp_get_thread_num());<br />

#pragma omp section<br />

printf("Section 3: thread %d\n", omp_get_thread_num());<br />

#pragma omp section<br />

printf("Section 4: thread %d\n", omp_get_thread_num());<br />

}<br />

return 0;<br />

}

Workshare construct<br />


Fortran<br />

!$omp workshare<br />

structured block<br />

!$omp end workshare [nowait]

workshare_construct/workshare_construct.f90<br />

PROGRAM workshare_construct<br />

IMPLICIT NONE<br />

INTEGER, PARAMETER :: n = 5<br />

REAL :: a(n), b(n), c(n)<br />

INTEGER :: i<br />

a = (/ (.1 * i, i = 1, n) /)<br />

b = (/ (.5 * i, i = 1, n) /)<br />

!$OMP PARALLEL WORKSHARE<br />

c = a + b<br />

!$OMP END PARALLEL WORKSHARE<br />

WRITE(*, *) a<br />

WRITE(*, *) b<br />

WRITE(*, *) c<br />

END PROGRAM workshare_construct

Orphaned Work-sharing Construct<br />

• A work-sharing construct can occur anywhere outside the<br />

lexical extent of a parallel region → orphaned construct<br />

• Case 1: called in a parallel context → works as expected<br />

• Case 2: called in a sequential context → directive is “ignored”<br />

• Global variables are shared<br />

16. August 2011 Slide 87


<strong>OpenMP</strong> 3.x

Loop <strong>Parallel</strong>ism I (<strong>OpenMP</strong> 3.0)<br />

• OpenMP 3.0 guarantee for consecutive loops with STATIC schedule and the<br />

same number of iterations:<br />

• the second loop is divided into the same chunks as the first<br />

• this is not guaranteed in OpenMP 2.5<br />

16. August 2011 Slide 89

Loop <strong>Parallel</strong>ism II (<strong>OpenMP</strong> 3.0)<br />

• Loop collapsing<br />

!$OMP PARALLEL DO COLLAPSE(2)<br />

do i = 1, n<br />

do j = 1, m<br />

do k = 1, l<br />

foo(i, j, k)<br />

enddo<br />

enddo<br />

enddo<br />

!$OMP END PARALLEL DO<br />

• Rectangular iteration space from the outer two loops<br />

• The outer two loops are collapsed into one larger loop with more<br />

iterations<br />

16. August 2011 Slide 90

Task <strong>Parallel</strong>ism (<strong>OpenMP</strong> 3.0)<br />

Tasks:<br />

• Unit of independent work (block of code, like sections)<br />

• Direct or deferred execution of work by one thread of<br />

team<br />

• Tasks are composed of<br />

• code to execute, and<br />

• the data environment (constructed at creation time)<br />

• internal control variables (ICV)<br />

• can be tied to a thread: only this thread can execute the<br />

task<br />

• can be used for unbounded loops, recursive algorithms,<br />

working on linked lists (pointer), consumer/producer<br />

16. August 2011 Slide 91

Task directive<br />

• ParameterList:<br />

• data scope: default, private, firstprivate, shared<br />

• conditional: if (expr)<br />

‣ create task if expr is true, otherwise direct execution<br />

• scheduling: untied<br />

• Each encountering thread creates a new task<br />

• Tasks can be nested, into another task or a worksharing<br />

construct<br />

16. August 2011 Slide 92

Task synchronization (<strong>OpenMP</strong> 3.0)<br />

• A task is executed by a thread of the current team at some point<br />

• All tasks created by the threads of the current team are complete<br />

at synchronization points:<br />

• Barriers<br />

• implicit: e.g. end of parallel region<br />

• explicit: e.g. OMP BARRIER<br />

• Task Barrier:<br />

#pragma omp taskwait<br />

!$OMP TASKWAIT<br />

encountering task suspends until all its child tasks<br />

(generated before taskwait) are completed<br />

16. August 2011 Slide 93

Task vs Sections<br />

Task<br />

• <strong>OpenMP</strong> task can be<br />

defined by any thread of<br />

team<br />

• a thread of the team<br />

executes the <strong>OpenMP</strong> task<br />

• definition of <strong>OpenMP</strong> tasks<br />

at any location<br />

• dynamic setup of <strong>OpenMP</strong><br />

tasks<br />

Sections<br />

• independent tasks can be<br />

performed in parallel<br />

• static blocks of code<br />

• Nature of task and number<br />

of tasks must be known at<br />

compile time<br />

16. August 2011 Slide 94

Exercises: Advanced Topics II<br />

a) <strong>Parallel</strong>ize fibonacci.c using <strong>OpenMP</strong> tasks (Fibonacci<br />

sequence: 0, 1, 1, 2, 3, 5, 8, 13, …)<br />

16. August 2011 Slide 97


Troubleshooting

Race Conditions and Data Dependencies I<br />

Most important rule: „<strong>Parallel</strong>ization of code must not affect the<br />

correctness of a program!“<br />

• In loops: the results of each single iteration must not<br />

depend on each other<br />

• Race conditions must be avoided<br />

• Result must not depend on the order of threads<br />

• Correctness of the program must not depend on number<br />

of threads<br />

• Correctness must not depend on the work schedule<br />

16. August 2011 Slide 99

Race Conditions and Data Dependencies II<br />

• Threads read and write to the same object at the same time<br />

‣ Unpredictable results (sometimes work, sometimes not)<br />

‣ Wrong answers <strong>with</strong>out a warning signal!<br />

• Correctness depends on order of read/write accesses<br />

• Hard to debug because the debugger often runs the<br />

program in a serialized, deterministic ordering.<br />

• To ensure that readers do not get ahead of writers, thread<br />

synchronization is needed.<br />

• Distributed memory systems: messages are often used<br />

to synchronize, <strong>with</strong> readers blocking until the message<br />

arrives.<br />

• Shared memory systems: need barriers, critical regions,<br />

locks, ...<br />

16. August 2011 Slide 100

Race Conditions and Data Dependencies II<br />

• Note: be careful <strong>with</strong> synchronization<br />

• Degrades performance<br />

• Deadlocks: threads lock up waiting for a locked resource<br />

that will never become available<br />

16. August 2011 Slide 101

Exercises: Data Dependencies I<br />

a)<br />

b)<br />

c)<br />

d)<br />

for (int i=1 ; i

Exercises: Data Dependencies II<br />

e)<br />

f)<br />

for (int i=1 ; i

Intel Inspector XE (Formerly Intel Thread Checker)<br />

• Memory error and thread checker tool<br />

• Supported languages on Linux systems:<br />

• C/C++, Fortran<br />

• Maps errors to the source code line and call stack<br />

• Detects problems that are not recognized by the compiler<br />

(e.g. race conditions, data dependencies)<br />

16. August 2011 Slide 104

Basics<br />

Shared memory<br />

Fork-Join<br />

SPMD<br />

Threads<br />

Compiler directives<br />

<strong>Parallel</strong> construct<br />

Reduction<br />

Private vs. shared<br />

Critical region<br />

Loops<br />

Scheduling<br />

Atomic statements<br />

<strong>OpenMP</strong> 3.x<br />

Tasks<br />

Race conditions<br />

Data dependencies<br />

Synchronisation<br />

Single<br />

Barrier<br />

Locks<br />

Master<br />

Troubleshooting<br />

Thread checker<br />
