Linear Algebra Exercises-n-Answers.pdf

Linear Algebra Exercises-n-Answers.pdf

Linear Algebra Exercises-n-Answers.pdf

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

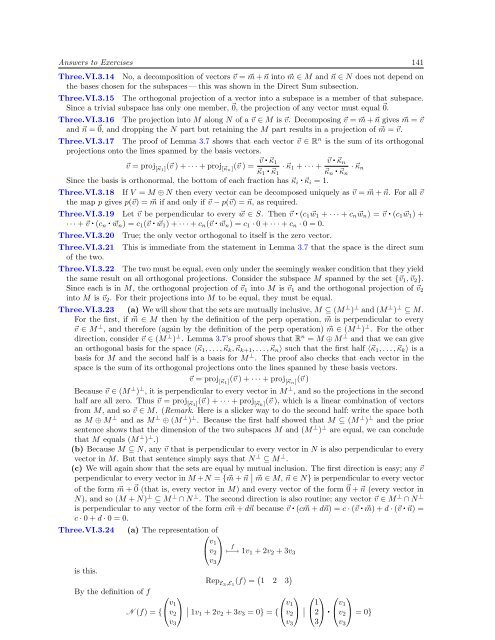

Answers to Exercises

Three.VI.3.14 No. A decomposition of vectors $\vec v = \vec m + \vec n$ into $\vec m \in M$ and $\vec n \in N$ does not depend on the bases chosen for the subspaces; this was shown in the Direct Sum subsection.

Three.VI.3.15 The orthogonal projection of a vector into a subspace is a member of that subspace. Since a trivial subspace has only one member, $\vec 0$, the projection of any vector must equal $\vec 0$.

Three.VI.3.16 The projection into $M$ along $N$ of a $\vec v \in M$ is $\vec v$. Decomposing $\vec v = \vec m + \vec n$ gives $\vec m = \vec v$ and $\vec n = \vec 0$. Dropping the $N$ part and keeping the $M$ part gives the projection $\vec m = \vec v$.
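A small numerical illustration of projection along a subspace may help. The Python sketch below is restricted to $\mathbb{R}^2$, where $M$ and $N$ are lines. The function name `project_along` and the example lines $M = [\{(1,0)\}]$ and $N = [\{(1,1)\}]$ are assumptions made for illustration. The function solves $\vec v = a\vec m + b\vec n$ by Cramer's rule and keeps only the $M$ part.

```python
from fractions import Fraction


def project_along(v, m, n):
    """Project v in R^2 into M = span{m} along N = span{n}.

    Hypothetical helper for illustration; assumes m and n are linearly
    independent, so R^2 is the direct sum of the two lines. Solves
    v = a*m + b*n for a by Cramer's rule and returns the M part a*m,
    dropping the N part b*n.
    """
    det = m[0] * n[1] - m[1] * n[0]
    if det == 0:
        raise ValueError("m and n must be linearly independent")
    a = Fraction(v[0] * n[1] - v[1] * n[0], det)
    return (a * m[0], a * m[1])


m, n = (1, 0), (1, 1)
# A vector already in M is left unchanged.
print(project_along((3, 0), m, n))
# (3, 2) = 1*(1, 0) + 2*(1, 1), so its M part is (1, 0).
print(project_along((3, 2), m, n))
```

The second call also illustrates the next exercise. The leftover $\vec v - p(\vec v) = (2, 2)$ lies in $N$.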

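Three.VI.3.17 The proof of Lemma 3.7 shows that each vector $\vec v \in \mathbb{R}^n$ is the sum of its orthogonal projections onto the lines spanned by the basis vectors.

$$\vec v = \operatorname{proj}_{[\vec\kappa_1]}(\vec v) + \cdots + \operatorname{proj}_{[\vec\kappa_n]}(\vec v) = \frac{\vec v \cdot \vec\kappa_1}{\vec\kappa_1 \cdot \vec\kappa_1} \cdot \vec\kappa_1 + \cdots + \frac{\vec v \cdot \vec\kappa_n}{\vec\kappa_n \cdot \vec\kappa_n} \cdot \vec\kappa_n$$

Since the basis is orthonormal, the denominator of each fraction is $\vec\kappa_i \cdot \vec\kappa_i = 1$. So the coefficient of each $\vec\kappa_i$ is simply $\vec v \cdot \vec\kappa_i$.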
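For a concrete check of this expansion, the Python sketch below uses exact fractions. The orthonormal basis of $\mathbb{R}^2$ is $\vec\kappa_1 = (3/5, 4/5)$, $\vec\kappa_2 = (-4/5, 3/5)$, and the vector is $\vec v = (2, 1)$; both are examples chosen here, not taken from the exercise. Each coefficient is just a dot product, and the weighted sum should reproduce $\vec v$ exactly.

```python
from fractions import Fraction


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def expand_in_orthonormal_basis(v, basis):
    """Return (coefficients, reconstruction) of v, with coefficient i equal to v . kappa_i.

    Assumes `basis` is orthonormal, so each denominator kappa_i . kappa_i is 1
    and can be omitted from the projection formula.
    """
    coeffs = [dot(v, k) for k in basis]
    recon = [sum(c * k[j] for c, k in zip(coeffs, basis)) for j in range(len(v))]
    return coeffs, recon


kappa1 = (Fraction(3, 5), Fraction(4, 5))
kappa2 = (Fraction(-4, 5), Fraction(3, 5))
# Confirm the example basis really is orthonormal.
assert dot(kappa1, kappa1) == 1 and dot(kappa2, kappa2) == 1 and dot(kappa1, kappa2) == 0

coeffs, recon = expand_in_orthonormal_basis((2, 1), [kappa1, kappa2])
print(coeffs)  # coefficients 2 and -1
print(recon)   # reproduces (2, 1)
```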

Three.VI.3.18 If $V = M \oplus N$ then every vector can be decomposed uniquely as $\vec v = \vec m + \vec n$. For all $\vec v$, the map $p$ gives $p(\vec v) = \vec m$ if and only if $\vec v - p(\vec v) = \vec n$, as required.

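Three.VI.3.19 Let $\vec v$ be perpendicular to every $\vec w \in S$. Then for any $\vec w_1, \ldots, \vec w_n \in S$ and any scalars $c_1, \ldots, c_n$,

$$\vec v \cdot (c_1\vec w_1 + \cdots + c_n\vec w_n) = \vec v \cdot (c_1\vec w_1) + \cdots + \vec v \cdot (c_n\vec w_n) = c_1(\vec v \cdot \vec w_1) + \cdots + c_n(\vec v \cdot \vec w_n) = c_1 \cdot 0 + \cdots + c_n \cdot 0 = 0.$$

So $\vec v$ is perpendicular to every linear combination of members of $S$, that is, to every vector in the span of $S$.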
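Here is a quick numerical sanity check of this computation in Python. The set $S = \{(1,0,1), (0,1,1)\}$ and the vector $\vec v = (1,1,-1)$ are illustrative choices, not from the exercise. Since $\vec v$ is perpendicular to both members of $S$, it should be perpendicular to every linear combination of them.

```python
import itertools


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def combination(coeffs, vectors):
    """Return the linear combination c_1 w_1 + ... + c_n w_n as a tuple."""
    return tuple(
        sum(c * w[j] for c, w in zip(coeffs, vectors)) for j in range(len(vectors[0]))
    )


S = [(1, 0, 1), (0, 1, 1)]
v = (1, 1, -1)
assert all(dot(v, w) == 0 for w in S)  # v is perpendicular to every member of S

# Check a grid of integer coefficients: v . (c1 w1 + c2 w2) should always be 0.
for c1, c2 in itertools.product(range(-3, 4), repeat=2):
    assert dot(v, combination((c1, c2), S)) == 0
print("v is perpendicular to every tested combination")
```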

Three.VI.3.20 True; the only vector orthogonal to itself is the zero vector.

Three.VI.3.21 This is immediate from the statement in Lemma 3.7 that the space is the direct sum of the two.

Three.VI.3.22 The two must be equal, even under only the seemingly weaker condition that they yield the same result on all orthogonal projections. Consider the subspace $M$ spanned by the set $\{\vec v_1, \vec v_2\}$. Since each is in $M$, the orthogonal projection of $\vec v_1$ into $M$ is $\vec v_1$ and the orthogonal projection of $\vec v_2$ into $M$ is $\vec v_2$. For their projections into $M$ to be equal, they must be equal.
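The key fact here is that orthogonal projection into $M$ leaves members of $M$ fixed. A short Python sketch can check it. The sketch computes the projection into the span of a list of vectors in two steps. First it runs Gram-Schmidt to get an orthogonal basis. Then it sums the projections onto the lines that basis spans. The helper names and the sample vectors $\vec v_1 = (1,2,0)$, $\vec v_2 = (0,1,1)$ are assumptions for illustration.

```python
from fractions import Fraction


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def orthogonal_basis(vectors):
    """Gram-Schmidt: an orthogonal basis for the span of `vectors` (zero vectors dropped)."""
    basis = []
    for v in vectors:
        w = [Fraction(a) for a in v]
        for k in basis:
            # Subtract the projection of w onto the line spanned by k.
            c = dot(w, k) / dot(k, k)
            w = [a - c * b for a, b in zip(w, k)]
        if any(a != 0 for a in w):
            basis.append(w)
    return basis


def project_into_span(v, vectors):
    """Orthogonal projection of v into span(vectors): sum of projections onto the basis lines."""
    result = [Fraction(0)] * len(v)
    for k in orthogonal_basis(vectors):
        c = dot(v, k) / dot(k, k)
        result = [r + c * b for r, b in zip(result, k)]
    return result


v1, v2 = (1, 2, 0), (0, 1, 1)
# Each vector lies in M = span{v1, v2}, so each is its own projection.
print(project_into_span(v1, [v1, v2]) == list(v1))
print(project_into_span(v2, [v1, v2]) == list(v2))
```

Because zero vectors are dropped, the same helper also covers Three.VI.3.15. Projecting into the span of $\{\vec 0\}$ returns the zero vector.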

Three.VI.3.23 (a) We will show that the sets are equal by mutual inclusion: $M \subseteq (M^\perp)^\perp$ and $(M^\perp)^\perp \subseteq M$.

For the first, if $\vec m \in M$ then by the definition of the perp operation, $\vec m$ is perpendicular to every $\vec v \in M^\perp$. Therefore, again by the definition of the perp operation, $\vec m \in (M^\perp)^\perp$.

For the other direction, consider $\vec v \in (M^\perp)^\perp$. Lemma 3.7's proof shows that $\mathbb{R}^n = M \oplus M^\perp$. It also shows that we can give an orthogonal basis $\langle \vec\kappa_1, \ldots, \vec\kappa_k, \vec\kappa_{k+1}, \ldots, \vec\kappa_n \rangle$ for the space in which the first $k$ vectors $\langle \vec\kappa_1, \ldots, \vec\kappa_k \rangle$ form a basis for $M$ and the remaining $n-k$ vectors form a basis for $M^\perp$. The proof also checks that each vector in the space is the sum of its orthogonal projections onto the lines spanned by these basis vectors.

$$\vec v = \operatorname{proj}_{[\vec\kappa_1]}(\vec v) + \cdots + \operatorname{proj}_{[\vec\kappa_n]}(\vec v)$$

Because $\vec v \in (M^\perp)^\perp$, it is perpendicular to every vector in $M^\perp$, so the projections onto the last $n-k$ lines are all zero. Thus $\vec v = \operatorname{proj}_{[\vec\kappa_1]}(\vec v) + \cdots + \operatorname{proj}_{[\vec\kappa_k]}(\vec v)$. This is a linear combination of vectors from $M$, and so $\vec v \in M$.

(Remark. Here is a slicker way to do the second direction. Write the space both as $M \oplus M^\perp$ and as $M^\perp \oplus (M^\perp)^\perp$. The first direction showed that $M \subseteq (M^\perp)^\perp$. The two decompositions show that the subspaces $M$ and $(M^\perp)^\perp$ have equal dimensions, since each is $n - \dim M^\perp$. A subspace contained in another of the same dimension equals it, so $M$ equals $(M^\perp)^\perp$.)

(b) Because $M \subseteq N$, any $\vec v$ that is perpendicular to every vector in $N$ is also perpendicular to every vector in $M$. But that sentence simply says that $N^\perp \subseteq M^\perp$.

(c) We will again show that the sets are equal by mutual inclusion.

The first direction is easy. Any $\vec v$ perpendicular to every vector in $M + N = \{\vec m + \vec n \mid \vec m \in M,\ \vec n \in N\}$ is perpendicular to every vector of the form $\vec m + \vec 0$ (that is, every vector in $M$) and to every vector of the form $\vec 0 + \vec n$ (every vector in $N$). So $(M + N)^\perp \subseteq M^\perp \cap N^\perp$.

The second direction is also routine. Any vector $\vec v \in M^\perp \cap N^\perp$ is perpendicular to any vector of the form $c\vec m + d\vec n$, because $\vec v \cdot (c\vec m + d\vec n) = c \cdot (\vec v \cdot \vec m) + d \cdot (\vec v \cdot \vec n) = c \cdot 0 + d \cdot 0 = 0$.

Three.VI.3.24 (a) The representation of

$$\begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix} \overset{f}{\longmapsto} 1v_1 + 2v_2 + 3v_3$$

is this.

$$\operatorname{Rep}_{\mathcal{E}_3,\mathcal{E}_1}(f) = \begin{pmatrix} 1 & 2 & 3 \end{pmatrix}$$

By the definition of $f$,

$$\mathcal{N}(f) = \left\{ \begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix} \;\middle|\; 1v_1 + 2v_2 + 3v_3 = 0 \right\} = \left\{ \begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix} \;\middle|\; \begin{pmatrix} 1 \\ 2 \\ 3 \end{pmatrix} \cdot \begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix} = 0 \right\}$$
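To see the two descriptions of $\mathcal{N}(f)$ agree on an example, the Python sketch below applies $f$ in two ways: by its definition, and as the product of the $1 \times 3$ matrix $\operatorname{Rep}_{\mathcal{E}_3,\mathcal{E}_1}(f) = (1\ 2\ 3)$ with a column vector. The matrix product is the same arithmetic as a dot product with $(1, 2, 3)$. The sketch then checks that combinations of a null-space basis are sent to zero. The basis $(-2,1,0)$, $(-3,0,1)$ was worked out here by hand, taking $v_2$ and $v_3$ as free parameters; it is not given in the source.

```python
import itertools

REP_F = (1, 2, 3)  # the 1x3 matrix Rep_{E3,E1}(f)


def apply_rep(row, v):
    """Multiply a 1xn matrix (given as a row) by a column vector v; this equals row . v."""
    return sum(a * b for a, b in zip(row, v))


def f(v):
    """f(v1, v2, v3) = 1*v1 + 2*v2 + 3*v3, straight from the definition."""
    v1, v2, v3 = v
    return 1 * v1 + 2 * v2 + 3 * v3


# Illustrative basis for N(f), from solving v1 = -2*v2 - 3*v3 with v2, v3 free.
null_basis = [(-2, 1, 0), (-3, 0, 1)]

for c2, c3 in itertools.product(range(-3, 4), repeat=2):
    v = tuple(c2 * a + c3 * b for a, b in zip(*null_basis))
    # Applying f by its definition and by its matrix representation agree ...
    assert f(v) == apply_rep(REP_F, v)
    # ... and every vector in the null space is orthogonal to (1, 2, 3).
    assert apply_rep(REP_F, v) == 0
print("every tested null-space vector is perpendicular to (1, 2, 3)")
```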