Linear Algebra Exercises-n-Answers.pdf


and that $\bigl(\vec v - (c_1\cdot\vec\kappa_1 + \cdots + c_k\cdot\vec\kappa_k)\bigr) \bullet \bigl((c_1\cdot\vec\kappa_1 + \cdots + c_k\cdot\vec\kappa_k) - (d_1\cdot\vec\kappa_1 + \cdots + d_k\cdot\vec\kappa_k)\bigr) = 0$

Linear Algebra, by Hefferon

This first thing is not so bad because the zero vector is by definition orthogonal to every other vector, so we could accept this situation as yielding an orthogonal set (although it of course can’t be normalized), or we could just modify the Gram-Schmidt procedure to throw out any zero vectors. The second thing to worry about if we drop the phrase ‘linearly independent’ from the question is that the set might be infinite. Of course, any subspace of the finite-dimensional $\mathbb{R}^n$ must also be finite-dimensional, so only finitely many of its members are linearly independent, but nonetheless a “process” that examines the vectors in an infinite set one at a time would at least require some more elaboration in this question. A linearly independent subset of $\mathbb{R}^n$ is automatically finite (in fact, of size $n$ or less), so the ‘linearly independent’ phrase obviates these concerns.
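The suggestion to throw out zero vectors can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `gram_schmidt_drop_zeros` and the sample vectors are hypothetical, and exact `Fraction` arithmetic is used so that the test for a zero vector is reliable.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt_drop_zeros(vectors):
    """Gram-Schmidt on a possibly dependent list; zero results are discarded."""
    kappas = []
    for v in vectors:
        w = [Fraction(x) for x in v]
        for k in kappas:
            # Subtract the projection of v onto the line spanned by k.
            coeff = Fraction(dot(v, k)) / dot(k, k)
            w = [wi - coeff * ki for wi, ki in zip(w, k)]
        if any(x != 0 for x in w):  # keep only nonzero vectors
            kappas.append(w)
    return kappas

# Hypothetical input: the second vector is twice the first.
dependent_set = [[1, 1, 0], [2, 2, 0], [1, 0, 1]]
result = gram_schmidt_drop_zeros(dependent_set)
print(len(result))  # prints 2
```

The dependent vector reduces to zero and is dropped, so the output is an orthogonal set with one member fewer than the input.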

Three.VI.2.14 The process leaves the basis unchanged.
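A quick check of this claim, assuming the exercise concerns applying the Gram-Schmidt process to a basis that is already orthogonal. The basis below is a hypothetical example, not one taken from the text.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors):
    """Gram-Schmidt orthogonalization (no normalization) in exact arithmetic."""
    kappas = []
    for v in vectors:
        w = [Fraction(x) for x in v]
        for k in kappas:
            # Subtract the projection of v onto the line spanned by k.
            coeff = Fraction(dot(v, k)) / dot(k, k)
            w = [wi - coeff * ki for wi, ki in zip(w, k)]
        kappas.append(w)
    return kappas

# Hypothetical orthogonal (not orthonormal) basis of R^3.
orthogonal_basis = [[1, 1, 0], [1, -1, 0], [0, 0, 2]]
result = gram_schmidt(orthogonal_basis)
print(result == orthogonal_basis)  # prints True
```

Every projection coefficient is zero because each vector is already orthogonal to the earlier ones, so nothing is subtracted.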

Three.VI.2.15 (a) The argument is as in the $i = 3$ case of the proof of Theorem 2.7. The dot product

$$\vec\kappa_i \bullet \bigl(\vec v - \mathrm{proj}_{[\vec\kappa_1]}(\vec v) - \cdots - \mathrm{proj}_{[\vec\kappa_k]}(\vec v)\bigr)$$

can be written as the sum of terms of the form $-\vec\kappa_i \bullet \mathrm{proj}_{[\vec\kappa_j]}(\vec v)$ with $j \neq i$, and the term $\vec\kappa_i \bullet \bigl(\vec v - \mathrm{proj}_{[\vec\kappa_i]}(\vec v)\bigr)$. The first kind of term equals zero because the $\vec\kappa$'s are mutually orthogonal. The other term is zero because this projection is orthogonal (that is, the projection definition makes it zero: $\vec\kappa_i \bullet \bigl(\vec v - \mathrm{proj}_{[\vec\kappa_i]}(\vec v)\bigr) = \vec\kappa_i \bullet \vec v - \vec\kappa_i \bullet \Bigl(\frac{\vec v \bullet \vec\kappa_i}{\vec\kappa_i \bullet \vec\kappa_i} \cdot \vec\kappa_i\Bigr)$ equals, after all of the cancellation is done, zero).
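The orthogonality argument can be checked numerically. The sketch below assumes a hypothetical pair of mutually orthogonal vectors spanning the xy-plane and a hypothetical $\vec v$ with components 1, 2, 3; none of these values are given in the answer itself.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def proj(v, k):
    """Orthogonal projection of v onto the line spanned by k."""
    coeff = Fraction(dot(v, k), dot(k, k))
    return [coeff * ki for ki in k]

# Assumed data: two mutually orthogonal kappas and a sample v.
kappas = [[1, 1, 0], [1, -1, 0]]
v = [1, 2, 3]

# residual = v - proj_[kappa_1](v) - proj_[kappa_2](v)
residual = list(v)
for k in kappas:
    p = proj(v, k)
    residual = [r - pi for r, pi in zip(residual, p)]

dots = [dot(k, residual) for k in kappas]
print(residual == [0, 0, 3], dots == [0, 0])  # prints True True
```

The residual has no component along either $\vec\kappa$, which is what the cancellation in the argument predicts.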

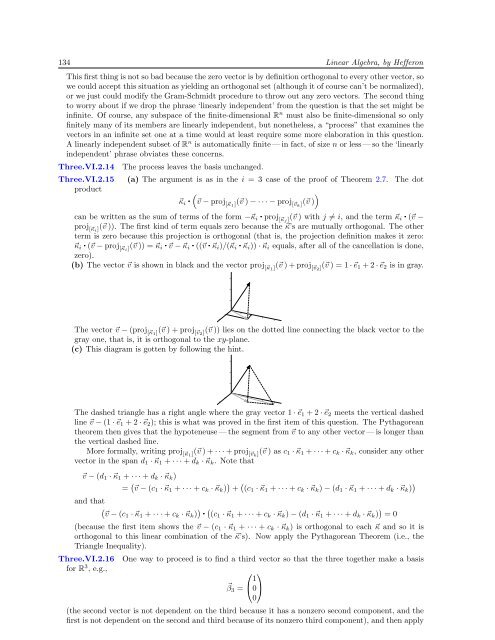

(b) The vector $\vec v$ is shown in black and the vector $\mathrm{proj}_{[\vec\kappa_1]}(\vec v) + \mathrm{proj}_{[\vec\kappa_2]}(\vec v) = 1\cdot\vec e_1 + 2\cdot\vec e_2$ is in gray. The vector $\vec v - \bigl(\mathrm{proj}_{[\vec\kappa_1]}(\vec v) + \mathrm{proj}_{[\vec\kappa_2]}(\vec v)\bigr)$ lies on the dotted line connecting the black vector to the gray one, that is, it is orthogonal to the xy-plane.

(c) This diagram is obtained by following the hint.

The dashed triangle has a right angle where the gray vector $1\cdot\vec e_1 + 2\cdot\vec e_2$ meets the vertical dashed line $\vec v - (1\cdot\vec e_1 + 2\cdot\vec e_2)$; this is what was proved in the first item of this question. The Pythagorean theorem then gives that the hypotenuse (the segment from $\vec v$ to any other vector) is longer than the vertical dashed line.

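More formally, writing $\mathrm{proj}_{[\vec\kappa_1]}(\vec v) + \cdots + \mathrm{proj}_{[\vec\kappa_k]}(\vec v)$ as $c_1\cdot\vec\kappa_1 + \cdots + c_k\cdot\vec\kappa_k$, consider any other vector in the span $d_1\cdot\vec\kappa_1 + \cdots + d_k\cdot\vec\kappa_k$. Note that

$$\vec v - (d_1\cdot\vec\kappa_1 + \cdots + d_k\cdot\vec\kappa_k) = \bigl(\vec v - (c_1\cdot\vec\kappa_1 + \cdots + c_k\cdot\vec\kappa_k)\bigr) + \bigl((c_1\cdot\vec\kappa_1 + \cdots + c_k\cdot\vec\kappa_k) - (d_1\cdot\vec\kappa_1 + \cdots + d_k\cdot\vec\kappa_k)\bigr)$$

and that the two summands on the right are orthogonal (because the first item shows that $\vec v - (c_1\cdot\vec\kappa_1 + \cdots + c_k\cdot\vec\kappa_k)$ is orthogonal to each $\vec\kappa$ and so it is orthogonal to this linear combination of the $\vec\kappa$'s). Now apply the Pythagorean Theorem: the squared length of $\vec v - (d_1\cdot\vec\kappa_1 + \cdots + d_k\cdot\vec\kappa_k)$ is the sum of the squared lengths of the two summands, so it is at least the squared length of $\vec v - (c_1\cdot\vec\kappa_1 + \cdots + c_k\cdot\vec\kappa_k)$.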
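The Pythagorean step can be illustrated with a short exact-arithmetic check. The vectors, the sample coefficient lists, and the helper names `combo` and `sub` are assumptions made for this sketch only.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def combo(coeffs, vectors):
    """Linear combination coeffs[0]*vectors[0] + coeffs[1]*vectors[1] + ..."""
    n = len(vectors[0])
    return [sum(c * vec[j] for c, vec in zip(coeffs, vectors)) for j in range(n)]

def sub(u, v):
    """Componentwise difference u - v."""
    return [a - b for a, b in zip(u, v)]

# Assumed data: orthogonal kappas spanning the xy-plane and a sample v.
kappas = [[1, 1, 0], [1, -1, 0]]
v = [1, 2, 3]

# The c's are the projection coefficients (v . kappa) / (kappa . kappa).
c = [Fraction(dot(v, k), dot(k, k)) for k in kappas]
closest = combo(c, kappas)
best = dot(sub(v, closest), sub(v, closest))  # squared length of v - (c combination)

checks = []
for d in ([0, 0], [1, 1], [Fraction(3, 2), 0], [-2, 5]):
    other = combo(d, kappas)
    total = dot(sub(v, other), sub(v, other))           # squared length of v - (d combination)
    gap = dot(sub(closest, other), sub(closest, other))  # squared length of (c - d) combination
    checks.append(total == best + gap and total >= best)

print(all(checks))  # prints True
```

For each choice of the d's the squared distance splits as the sum of the two squared lengths, so no vector in the span is closer to $\vec v$ than the sum of the projections.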

Three.VI.2.16 One way to proceed is to find a third vector so that the three together make a basis for $\mathbb{R}^3$, e.g.,

$$\vec\beta_3 = \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix}$$

(the second vector is not dependent on the third because it has a nonzero second component, and the first is not dependent on the second and third because of its nonzero third component), and then apply