NIST 800-44 Version 2 Guidelines on Securing Public Web Servers

NIST 800-44 Version 2 Guidelines on Securing Public Web Servers

NIST 800-44 Version 2 Guidelines on Securing Public Web Servers

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

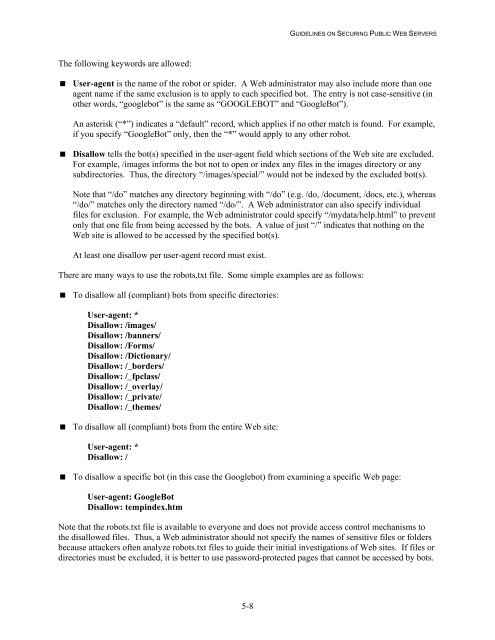

GUIDELINES ON SECURING PUBLIC WEB SERVERS<br />

The following keywords are allowed:<br />

User-agent is the name of the robot or spider. A <strong>Web</strong> administrator may also include more than <strong>on</strong>e<br />

agent name if the same exclusi<strong>on</strong> is to apply to each specified bot. The entry is not case-sensitive (in<br />

other words, “googlebot” is the same as “GOOGLEBOT” and “GoogleBot”).<br />

An asterisk (“*”) indicates a “default” record, which applies if no other match is found. For example,<br />

if you specify “GoogleBot” <strong>on</strong>ly, then the “*” would apply to any other robot.<br />

Disallow tells the bot(s) specified in the user-agent field which secti<strong>on</strong>s of the <strong>Web</strong> site are excluded.<br />

For example, /images informs the bot not to open or index any files in the images directory or any<br />

subdirectories. Thus, the directory “/images/special/” would not be indexed by the excluded bot(s).<br />

Note that “/do” matches any directory beginning with “/do” (e.g. /do, /document, /docs, etc.), whereas<br />

“/do/” matches <strong>on</strong>ly the directory named “/do/”. A <strong>Web</strong> administrator can also specify individual<br />

files for exclusi<strong>on</strong>. For example, the <strong>Web</strong> administrator could specify “/mydata/help.html” to prevent<br />

<strong>on</strong>ly that <strong>on</strong>e file from being accessed by the bots. A value of just “/” indicates that nothing <strong>on</strong> the<br />

<strong>Web</strong> site is allowed to be accessed by the specified bot(s).<br />

At least <strong>on</strong>e disallow per user-agent record must exist.<br />

There are many ways to use the robots.txt file. Some simple examples are as follows:<br />

To disallow all (compliant) bots from specific directories:<br />

User-agent: *<br />

Disallow: /images/<br />

Disallow: /banners/<br />

Disallow: /Forms/<br />

Disallow: /Dicti<strong>on</strong>ary/<br />

Disallow: /_borders/<br />

Disallow: /_fpclass/<br />

Disallow: /_overlay/<br />

Disallow: /_private/<br />

Disallow: /_themes/<br />

To disallow all (compliant) bots from the entire <strong>Web</strong> site:<br />

User-agent: *<br />

Disallow: /<br />

To disallow a specific bot (in this case the Googlebot) from examining a specific <strong>Web</strong> page:<br />

User-agent: GoogleBot<br />

Disallow: tempindex.htm<br />

Note that the robots.txt file is available to every<strong>on</strong>e and does not provide access c<strong>on</strong>trol mechanisms to<br />

the disallowed files. Thus, a <strong>Web</strong> administrator should not specify the names of sensitive files or folders<br />

because attackers often analyze robots.txt files to guide their initial investigati<strong>on</strong>s of <strong>Web</strong> sites. If files or<br />

directories must be excluded, it is better to use password-protected pages that cannot be accessed by bots.<br />

5-8