Machine Learning Module (2011/2012) - Sweet


UNIVERSITY OF AVEIRO

DEPARTMENT OF ELECTRONICS, TELECOMMUNICATIONS AND INFORMATICS

UC II - Machine Learning Module (2011/2012)

Practical Exercises No. 1

Part I - K Nearest Neighbour (KNN) and Naive Bayes classifiers

Fig. 1: block diagram of a classification problem (input: vector x; output: label)

Classification problems are described by the block diagram in Fig. 1. Each entry of the vector x corresponds to a different attribute (or feature) of the object, and label is the name of its class.

Ex. 1. Table 1 describes train arrivals in two classes (on time and late), together with several stored attributes (features).

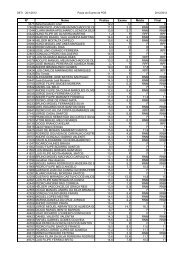

Table 1

a) Map the data set onto the general model of a classification problem:

• What are the possible values of label?

• How many attributes are there? What is the type of each attribute (feature) in the data set?

• What is the dimension of the classification problem?

• What is the size of the training data set?

b) Estimate the following parameters of a Naive Bayes classifier:

• A priori probabilities: $P(C_i)$, where $C_i$ is the class label.

• Conditional probabilities (likelihoods): $P(\text{attribute} = \text{value} \mid C_i)$.

Organize the parameter values into a table.

c) The goal is to classify the following new object (instance):

weekday, winter, high, heavy. What is its class?

d) Estimate $P(x = \text{new object} \mid C_i)$. Explain the assumptions made in this step.

e) What is the final decision about the new instance?

f) Apply a Laplace correction (if necessary).
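The counting in b) to f) can be sketched in Python. This is a minimal illustration under stated assumptions: the training rows are hypothetical (the real values are in Table 1), the attribute order (day, season, wind, rain) is assumed, and the Laplace correction adds 1 to every count while, as one common convention, treating an unseen query value as an extra possible value. Multiplying the per-attribute likelihoods is the conditional-independence assumption asked about in d).

```python
from collections import Counter, defaultdict

# Hypothetical training rows: (day, season, wind, rain) -> class.
# Illustrative only; these are NOT the rows of Table 1.
TRAIN = [
    (("weekday", "spring", "none", "none"), "on time"),
    (("weekday", "winter", "none", "slight"), "on time"),
    (("weekday", "winter", "high", "heavy"), "late"),
    (("saturday", "summer", "normal", "none"), "on time"),
    (("weekday", "autumn", "high", "heavy"), "late"),
    (("sunday", "winter", "normal", "slight"), "on time"),
    (("weekday", "winter", "normal", "heavy"), "on time"),
    (("saturday", "winter", "high", "heavy"), "late"),
]


def train_naive_bayes(data):
    """Count class frequencies and (attribute, value, class) frequencies."""
    class_counts = Counter(label for _, label in data)
    value_counts = Counter()      # (attribute index, value, class) -> count
    domains = defaultdict(set)    # attribute index -> values seen in training
    for features, label in data:
        for i, value in enumerate(features):
            value_counts[(i, value, label)] += 1
            domains[i].add(value)
    return class_counts, value_counts, domains


def class_scores(model, features, laplace=True):
    """Return P(C_i) * prod_k P(A_k = v_k | C_i) for every class C_i."""
    class_counts, value_counts, domains = model
    total = sum(class_counts.values())
    scores = {}
    for label, n_c in class_counts.items():
        score = n_c / total  # a priori probability P(C_i), not smoothed
        for i, value in enumerate(features):
            count = value_counts[(i, value, label)]
            if laplace:
                # Add 1 to the count; the denominator grows by the number of
                # possible values of attribute i (an unseen query value included).
                n_values = len(domains[i] | {value})
                score *= (count + 1) / (n_c + n_values)
            else:
                score *= count / n_c
        scores[label] = score
    return scores


model = train_naive_bayes(TRAIN)
query = ("weekday", "winter", "high", "heavy")
print(class_scores(model, query, laplace=False))
print(class_scores(model, query, laplace=True))
```

With these made-up rows the uncorrected score of "on time" is 0, because wind = high never occurs in that class; after the correction both classes get a non-zero score and "late" has the larger one.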

Ex. 2. The following table describes 20 Portuguese teenagers by weight and height. Given x, where x1 = 60 is the weight and x2 = 165 is the height, decide whether x is a boy or a girl using:

a) a KNN classifier with K = 1 and Euclidean distance;

b) a KNN classifier with K = 3 and Euclidean distance;

c) a Naive Bayes classifier. Formalize the complete model (see the Appendix).
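For a) and b), a minimal KNN sketch. The seven training samples below are hypothetical stand-ins for the 20 teenagers of the exercise table, which is not reproduced here; `math.dist` (Python 3.8+) computes the Euclidean distance.

```python
import math
from collections import Counter

# Hypothetical (weight, height) samples; NOT the 20 teenagers of the exercise.
TRAIN = [
    ((70, 178), "boy"), ((65, 172), "boy"), ((75, 180), "boy"), ((61, 166), "boy"),
    ((52, 160), "girl"), ((55, 163), "girl"), ((58, 166), "girl"),
]


def knn_classify(train, x, k):
    """Label x by majority vote among its k nearest (Euclidean) training samples."""
    neighbours = sorted(train, key=lambda sample: math.dist(sample[0], x))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


x = (60, 165)
print(knn_classify(TRAIN, x, k=1))  # boy
print(knn_classify(TRAIN, x, k=3))  # girl
```

With this made-up data the single nearest neighbour is a boy, while the three nearest neighbours vote 2 to 1 for girl, which shows how the decision can depend on K.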

Ex. 2 Spam detection

Consider the following data set of messages (Table 1), classified as spam and not spam (ham). A new message M = "today is secret" arrives, and we want to calculate the probability that M is spam by applying Bayes' rule: $P(\text{Spam} \mid M) = ?$ Create a "bag of words" and count the occurrences of each word in the spam and non-spam messages. Apply Laplace smoothing if necessary.
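A minimal Python sketch of the bag-of-words computation, using the messages of Table 1 (the third ham message is read as "secret sport events"; the spelling of that word does not change the result). Assumptions: words are lower-cased and split on spaces, the class priors are the plain message frequencies 3/8 and 5/8 (not smoothed), and Laplace smoothing with parameter k is applied to the word likelihoods over the 12-word vocabulary.

```python
from collections import Counter

SPAM = ["offer is secret", "click secret link", "secret sport link"]
HAM = ["play sport today", "went play sport", "secret sport events",
       "sport is today", "sport costs money"]


def bag_of_words(messages):
    """Count every word occurrence over a list of messages."""
    return Counter(word for m in messages for word in m.lower().split())


def p_spam(message, spam=SPAM, ham=HAM, k=1):
    """P(Spam | message) by Bayes' rule with Laplace-smoothed word likelihoods."""
    spam_words, ham_words = bag_of_words(spam), bag_of_words(ham)
    vocab = set(spam_words) | set(ham_words)
    p_s = len(spam) / (len(spam) + len(ham))   # prior P(Spam), not smoothed
    p_h = 1 - p_s                              # prior P(Ham)
    n_s, n_h = sum(spam_words.values()), sum(ham_words.values())
    like_s = like_h = 1.0
    for w in message.lower().split():
        like_s *= (spam_words[w] + k) / (n_s + k * len(vocab))
        like_h *= (ham_words[w] + k) / (n_h + k * len(vocab))
    joint_s, joint_h = p_s * like_s, p_h * like_h
    return joint_s / (joint_s + joint_h)


print(p_spam("today is secret", k=0))            # 0.0
print(round(p_spam("today is secret", k=1), 4))  # 0.4595
```

Without smoothing (k = 0), the word "today", which never appears in a spam message, forces P(Spam | M) to 0. Smoothing the priors as well, as some presentations do, would change the smoothed value.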

Table 1

| Spam | Not spam (Ham) |
|---|---|
| 1. Offer is secret | 1. Play sport today |
| 2. Click secret link | 2. Went play sport |
| 3. Secret sport link | 3. Secret sport events |
| | 4. Sport is today |
| | 5. Sport costs money |

Further Questions

• Estimation of conditional probabilities with qualitative attributes. For instance, $P(\text{attribute} = \text{value} \mid C_i)$ assumes that value occurs in the training set. What happens if value does not occur in any training instance of class $C_i$? Can you propose a solution? (Tip: Laplace correction.)

• How can KNN (K nearest neighbour) be applied to a data set with qualitative attributes? What difficulties need to be overcome?

• Comment on the following: "in a two-class problem, the number of neighbours k of the KNN classifier should be an odd number".

• Comment on the following: "when KNN uses the Euclidean distance, it is convenient to have attributes on a similar scale". Explain with an example.

• Comment on the following: "the KNN classifier needs the training set during the test phase, while Naive Bayes does not".
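To illustrate the scaling question, here is a hypothetical example in which weight is stored in grams and height in metres, so that the weight difference dominates the raw Euclidean distance. Min-max scaling to [0, 1] is one common choice (standardization is another); here the minima and maxima are taken from the training set only.

```python
import math

# Hypothetical samples: (weight in grams, height in metres) -> label.
TRAIN = [
    ((60500, 1.66), "girl"),
    ((60000, 1.80), "boy"),
    ((45000, 1.50), "girl"),
    ((80000, 1.90), "boy"),
]
query = (60000, 1.65)


def nearest_label(train, x):
    """1-NN: label of the training sample closest to x (Euclidean)."""
    return min(train, key=lambda sample: math.dist(sample[0], x))[1]


def min_max_scale(train, x):
    """Rescale every attribute to [0, 1] using the training minima and maxima."""
    columns = list(zip(*(features for features, _ in train)))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]

    def scale(v):
        return tuple((vi - lo) / (hi - lo) for vi, lo, hi in zip(v, lows, highs))

    return [(scale(f), label) for f, label in train], scale(x)


print(nearest_label(TRAIN, query))                 # boy: grams dominate
scaled_train, scaled_query = min_max_scale(TRAIN, query)
print(nearest_label(scaled_train, scaled_query))   # girl
```

On the raw values, a 500 g weight difference outweighs a 15 cm height difference; after scaling, the sample that is close in both attributes becomes the nearest neighbour.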

Appendix

Fig. 2: plots of the teenager data set

The Health System collected the students' data and concluded that both attributes follow a Gaussian distribution:

$$P(A_i \mid C_j) = \frac{1}{\sqrt{2\pi}\,\sigma_{ij}}\, e^{-\frac{(A_i - \mu_{ij})^2}{2\sigma_{ij}^2}} \qquad (1)$$

Eq. (1) serves as the likelihood function of attribute $A_i$ with respect to class $C_j$. Its parameters, the mean $\mu_{ij}$ and the standard deviation $\sigma_{ij}$, are given in the following table, and these values can be used to estimate the likelihood function of each attribute.
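A sketch of part c) of Ex. 2 using Eq. (1). The means, standard deviations and equal priors below are hypothetical placeholders, because the appendix table is not reproduced here; substitute the table's values.

```python
import math

# Hypothetical (mean, standard deviation) per class and attribute.
# These numbers are NOT from the exercise sheet.
PARAMS = {
    "boy":  {"weight": (65.0, 8.0), "height": (172.0, 7.0)},
    "girl": {"weight": (55.0, 7.0), "height": (162.0, 6.0)},
}
PRIORS = {"boy": 0.5, "girl": 0.5}  # assumed equal class priors


def gaussian(a, mu, sigma):
    """Eq. (1): normal density of attribute value a for given mu and sigma."""
    return math.exp(-(a - mu) ** 2 / (2 * sigma ** 2)) / (math.sqrt(2 * math.pi) * sigma)


def posteriors(x):
    """P(C_j | x) assuming attributes are independent given the class."""
    joint = {}
    for c, params in PARAMS.items():
        p = PRIORS[c]
        for attr, value in x.items():
            mu, sigma = params[attr]
            p *= gaussian(value, mu, sigma)
        joint[c] = p
    evidence = sum(joint.values())
    return {c: p / evidence for c, p in joint.items()}


post = posteriors({"weight": 60, "height": 165})
print(max(post, key=post.get), post)
```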

Part II - Introduction to Rapid Miner (RM)

Ex. 3.

3.1 Download the Pima Indians Diabetes data set from the UCI Machine Learning Repository, available at

http://archive.ics.uci.edu/ml/machine-learning-databases/pima-indians-diabetes/

and extract it into a local folder (for example ml2012).

This data set contains data on 768 patients, with and without onset of diabetes within five years.

The attributes in the data set are:

1) Pregnant: number of times pregnant

2) PlasmaGlucose: plasma glucose concentration measured by a two-hour oral glucose tolerance test

3) DiastolicBP: diastolic blood pressure (mmHg)

4) TriceptsSFT: triceps skin fold thickness (mm)

5) SerumInsulin: two-hour serum insulin (μU/ml)

6) BMI: body mass index (weight in kg / (height in m)²)

7) DPF: diabetes pedigree function

8) Age: age of the patient (years)

9) Class: diabetes onset within five years (0 or 1)

3.2. Run Rapid Miner (RM) 5 and create a New Local Repository (in a new folder, for example ML2012\RM_repository) to organize all your data sets and processes.

3.3. (1st way to import data into RM.) From the menu File > Import Data > Import CSV File, follow the wizard's instructions to import pima-indians-diabetes.data.

At step 2, choose the proper column separator. At step 4, give meaningful names to the attributes and set their roles correctly (label or regular attribute). At step 5, choose a name for the data set, for example DiabetesData, and store it in your local Rapid Miner repository.

3.4. (2nd way to import data into RM.) Create a new process (ImportData) that imports the data from the pima-indians-diabetes.data file into the repository, using:

a) the ReadCSV operator to load data from a data file. Be careful to choose the proper column separator and to specify correctly (yes/no) whether the first row contains the attribute names;

b) the Store operator to add the data set to your RM repository. Choose a proper name.
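Outside RM, the same import can be checked with Python's `csv` module. This is a plain-Python analogue, not what RM does internally. It assumes the file has no header row (so the attribute names from 3.1 are attached in code) and that all values are numeric; the sample rows are for illustration only.

```python
import csv
import io

# Attribute names as listed in 3.1 (assumed order of the file's columns).
NAMES = ["Pregnant", "PlasmaGlucose", "DiastolicBP", "TriceptsSFT",
         "SerumInsulin", "BMI", "DPF", "Age", "Class"]


def read_pima(text):
    """Parse comma-separated rows into dicts keyed by attribute name."""
    rows = []
    for fields in csv.reader(io.StringIO(text)):
        if not fields:
            continue  # skip blank lines
        record = dict(zip(NAMES, (float(v) for v in fields)))
        record["Class"] = int(record["Class"])  # the label: 0 or 1
        rows.append(record)
    return rows


# Illustrative rows in the file's comma-separated format.
SAMPLE = "6,148,72,35,0,33.6,0.627,50,1\n1,85,66,29,0,26.6,0.351,31,0\n"
data = read_pima(SAMPLE)
print(len(data), data[0]["BMI"], data[0]["Class"])
```

To read the downloaded file itself, pass its contents (for example `open(path).read()`, with `path` pointing at your local copy) to `read_pima`.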

3.5. Create a new process (DataDescription1) that performs the following tasks:

a) Use the Retrieve operator to load DiabetesData from the repository, or drag the data set icon directly into your process.

b) Run the process, switch to the Results Perspective (Workspace) and explore the Data View and Plot View options. Choose the quartile option to analyze the distribution of some attributes (for example Pregnant and Age). Choose Scatter Matrix (with plots set to class) to observe the data distribution.
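The quartile view summarizes each attribute by its quartiles. As a cross-check, the five-number summary can be computed with `statistics.quantiles`. Several interpolation rules for quartiles exist, so RM's plot may show slightly different values; the ages below are hypothetical.

```python
import statistics


def five_number_summary(values):
    """Minimum, first quartile, median, third quartile and maximum."""
    # Default 'exclusive' interpolation method of statistics.quantiles.
    q1, median, q3 = statistics.quantiles(values, n=4)
    return min(values), q1, median, q3, max(values)


# Hypothetical ages, for illustration only.
ages = [21, 22, 25, 29, 31, 33, 41, 50, 58]
print(five_number_summary(ages))  # (21, 23.5, 31.0, 45.5, 58)
```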

3.6. Create a new process (DataDescription2) that performs the following tasks:

a) Retrieve DiabetesData from the repository.

b) Use the Filter Examples operator to remove the records with missing values in the attributes TriceptsSFT and BMI (in this data set, missing values are recorded as 0). Tip: use a logical expression such as (a = 0 and b = 0).
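Removing records in which TriceptsSFT and BMI are both 0 is not the same as removing records in which either of them is 0. The sketch below does the latter, which is an assumption about what "missing values in attributes TriceptsSFT and BMI" means; it is plain Python, not RM's Filter Examples syntax, and the records are hypothetical.

```python
def drop_missing(records, attrs=("TriceptsSFT", "BMI")):
    """Keep only records in which none of the given attributes is 0 (missing)."""
    return [r for r in records if all(r[a] != 0 for a in attrs)]


# Hypothetical records, for illustration only.
records = [
    {"TriceptsSFT": 35, "BMI": 33.6},
    {"TriceptsSFT": 0, "BMI": 23.3},
    {"TriceptsSFT": 29, "BMI": 0},
    {"TriceptsSFT": 0, "BMI": 0},
]
print(len(drop_missing(records)))  # 1
```

Filtering on "both are 0" would remove only the last record and keep the two records that each have one missing value.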

Ex. 4. Create a new process (tean_classif) to solve the problem of Ex. 2 with RM.

Suggestion: import the training data (file tean_training.txt from Moodle), create and import the test data [60 165], and use the operators Retrieve, k-NN, Naive Bayes and Apply Model.

Remarks: Do not forget that RM is free software, so errors are possible. It is better to add the attribute names directly in the original data files.