From digital image series to 3D models - Arctron


Martin Schaich

From digital image series to 3D models

Possibilities for software application

Three-dimensional computer models offer many advantages for as-built documentation and damage mapping. The software aSPECT 3D generates precise 3D models from a series of digital images and offers an alternative to expensive 3D scanning.

3D models in a restoration context

3D models open up new opportunities for the complex everyday work of restoration and cultural heritage preservation. On the one hand, they support digital documentation: findings and damaged areas can be mapped reliably, which is especially valuable for complex 3D objects. On the other hand, the technology allows works of art to be reproduced virtually. Disseminating these models via the internet and multimedia solutions (publications and presentations, animations and elaborate computer films) opens up further possibilities.

There are different ways to create a 3D model. The most common is the 3D scan, in which the object is recorded without contact. This can be done with laser scanners, triangulation scanners or, for high resolution, structured-light scanners. If necessary, the scan data can be combined with total station or GPS data for referencing.

In practice, a 3D scan requires special equipment and knowledge, which often makes it too laborious and expensive for restorers. For these professionals, the software aSPECT 3D offers an alternative: it generates 3D models from digital image sequences, which can be recorded with commercially available digital cameras.

Photographic recording and image quality

First, a suitable image series of the object has to be recorded. This requires basic photographic knowledge: the relationship between exposure time and aperture, depth of field and the use of different lenses. Strongly distorted images, such as those produced by so-called “fish-eye” lenses and extreme wide-angle lenses, cannot be processed by the algorithm.

In general, calibrated cameras are not needed. Even small, low-cost compact cameras or system cameras deliver usable results. Nonetheless, semi-professional or professional SLR systems are recommended for high-quality survey results.

The software requires well-focused images with sufficient depth of field. Moving shadows or light spots impair the computation, as do reflections (e.g. from a direct flash). While homogeneous lighting is easy to achieve in a studio, it is not always possible in outdoor projects. However, experience has shown that usable results can be derived even from difficult image content such as hard shadows. During the photographic recording, it is important to circle the object as “hemispherically” as possible, with a different vertical angle for each circle (Fig. 1). A single panorama recording, during which the photographer stays in one position, does not produce 3D results.

Fig. 1: aSPECT 3D restoration project “Old Fritz”. Left: Pictures taken with a commercially available camera, shown after bundling with the cameras positioned and oriented in space. The coloured prisms around the images visualize the neighbourhood relations between the images and the focal lengths of the lenses. Right: First “basic 3D result” as a dense, coloured point cloud. © ArcTron 3D GmbH, 2012.

Preselection of images

Before aSPECT 3D can start computing the model, the images have to be checked and sorted according to the criteria described above. Large projects often produce images in several stages with very different perspectives and lighting conditions. In these cases, it is useful to group the images, e.g. all aerial images of a building, all pictures taken from the ground, or by time of day or room. A similar approach applies to archaeological or restoration projects that document stratigraphic layers. For this purpose, the software offers an image database in which the images can be tagged. Furthermore, all internal camera information (EXIF data) on sensor, lens, focal length, shutter speed, ISO value etc. is stored and later used in the computation.

From digital image series to 3D point cloud

After the images have been checked and preselected, the software computes a 3D point cloud from the selected pictures. It uses a technology that has established itself in computer-based image processing: SFM (“Structure From Motion”) [1].

[1] Various providers offer such solutions in the “cloud”. For this, the images have to be uploaded to the respective provider, which is impractical for large image series, sensitive data or work in remote regions. For a general overview, compare: Remondino, F., El-Hakim, S.F., Gruen, A. & Zhang, L. (2008): Turning images into 3D-models. IEEE Signal Processing Magazine 25 (4), pp. 55-65; Kersten, T., Lindstaedt, M., Mechelke, K. & Zobel, K. (2012): Automatische 3D-Objektrekonstruktion aus unstrukturierten digitalen Bilddaten für Anwendungen in Architektur, Denkmalpflege und Archäologie. 32. Wiss.-techn. Jahrestagung der DGPF (March 2012), Potsdam, Germany.

Different algorithms (e.g. SIFT, ASIFT, Hessian-affine, MSER etc. [2]) are used to compute 3D object information. They identify distinctive points (“features”) that appear in several pictures taken from different perspectives. The computation also takes the EXIF data of each picture into account, e.g. which camera was used with which sensor and lens. Photogrammetric procedures then reconstruct the position and orientation of each camera purely by correlating the images with each other.
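The feature-matching idea can be illustrated with a minimal sketch. It assumes that each image has already been reduced to a list of numeric descriptor vectors (a detector such as SIFT would produce these) and pairs them with the nearest-neighbour ratio test described by Lowe (2004). The function name, the toy descriptors and the threshold of 0.8 are illustrative assumptions and say nothing about how aSPECT 3D is implemented.

```python
import math


def match_features(desc_a, desc_b, ratio=0.8):
    """Match descriptors of image A to image B with a nearest-neighbour ratio test.

    desc_a, desc_b: lists of equal-length numeric vectors (hypothetical descriptors).
    Returns a list of (index_in_a, index_in_b) pairs.
    """
    matches = []
    for i, da in enumerate(desc_a):
        # Distance from this descriptor to every descriptor in the other image.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue
        (best, j_best), (second, _) = dists[0], dists[1]
        # Accept only if the best candidate is clearly better than the runner-up.
        if best < ratio * second:
            matches.append((i, j_best))
    return matches


# Toy descriptors: two features visible in both images, one ambiguous feature.
image_a = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.5, 0.5, 0.5)]
image_b = [(1.0, 0.1, 0.0), (0.0, 0.1, 1.0), (0.5, 0.5, 0.4), (0.5, 0.5, 0.6)]
print(match_features(image_a, image_b))
```

The third descriptor of image A has two equally good candidates in image B and is therefore rejected. This mirrors why repetitive or self-similar surfaces are hard to match reliably.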

In the first step, the images are brought into spatial relation to each other using the so-called bundle block adjustment [3]. The result is a 3D point cloud of all identified features. In a subsequent computational step, this basic data can be transformed into an already very detailed, so-called “dense”, coloured point cloud [4] (Fig. 1).
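For readers who want the underlying formulation: bundle adjustment is usually stated as a least-squares problem over all camera parameters and 3D points. The notation below is the generic textbook form and is an assumption made for illustration; the article does not state the exact cost function used by aSPECT 3D.

```latex
\min_{\{C_j\},\,\{X_i\}} \; \sum_{i}\sum_{j} v_{ij}\,
  \bigl\lVert x_{ij} - \pi(C_j, X_i) \bigr\rVert^{2}
```

Here $X_i$ is the i-th 3D feature point, $C_j$ collects the position, orientation and internal parameters of camera j, $\pi$ projects a 3D point into an image, $x_{ij}$ is the observed image position of point i in image j, and $v_{ij}$ is 1 if the point is visible in that image and 0 otherwise.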

SFM technology is suitable for objects with amorphous geometries and/or structured, multi-edged surfaces. Artefacts that offer an abundance of corresponding image points and shades lend themselves to a successful result. Rather unsuited to SFM are unstructured, monochrome, translucent, reflective or evenly patterned surfaces, as well as so-called self-similar surfaces in which structures and characteristics repeat. Such objects produce poor or incomplete results.

Fully automated processes

The computational process for generating 3D point clouds from images is fully automated: after selecting the images, the user does not have to carry out any further steps. Processing time depends on the number and size of the images. Databases containing several hundred or several thousand pictures require very powerful computers. Most importantly, these computers need a multi-processor architecture with 64-bit processors and a lot of main memory, and they must support parallel computation on the graphics card (GPU). Current workstations usually meet these requirements.

Scaling and georeferencing<br />

Initially, the resulting point cloud is neither scaled nor georeferenced, which means that the ratio between the point cloud and reality is unknown. The point cloud has to be assigned to defined coordinates in a superordinate coordinate system. Afterwards, the 3D object is scaled, i.e. its size matches reality within a defined, tolerable deviation.

aSPECT 3D offers different approaches for this procedure. The simplest is to scale the object using a scale bar that was photographed along with it. The GPS and total station interface allows reference points within the point cloud to be matched with independently measured control points. Using a so-called multi-point transformation, the point clouds are transformed into the target coordinate system, whereupon the errors are checked and can be optimized (Fig. 2).

[2] Lowe, D. (2004): Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision 60 (2), pp. 91-110.

[3] Wu, C., Agarwal, S., Curless, B. & Seitz, S. (2011): Multicore Bundle Adjustment. 24th IEEE Conference on Computer Vision and Pattern Recognition. Colorado Springs, USA.

[4] Furukawa, Y. & Ponce, J. (2007): Accurate, dense and robust multiview stereopsis. In: Proc. International Conference on Computer Vision and Pattern Recognition, pp. 1-8.

Fig. 2: Scaling and georeferencing in aSPECT 3D. Left: Photographs of a house façade as an SFM image series. Middle: Individual reference points are recorded with a total station and processed in aSPECT 3D. Right: Using a multi-point transformation, scaling and georeferencing are carried out and the errors are computed. © ArcTron 3D GmbH, 2012.
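The simplest approach described above, scaling with a photographed scale bar, comes down to one ratio. The following sketch assumes that both ends of the scale bar have been picked in the unscaled point cloud; the function names and coordinates are hypothetical and only illustrate the arithmetic.

```python
import math


def scale_factor(p_start, p_end, true_length):
    """Ratio between the real length of a scale bar and its length in model units."""
    model_length = math.dist(p_start, p_end)
    if model_length == 0:
        raise ValueError("scale bar end points coincide")
    return true_length / model_length


def apply_scale(points, factor):
    """Multiply every coordinate of every point by the scale factor."""
    return [tuple(c * factor for c in p) for p in points]


# Hypothetical values: both ends of a 0.50 m scale bar picked in the unscaled cloud.
bar_start = (1.0, 2.0, 0.0)
bar_end = (1.0, 2.0, 0.25)
factor = scale_factor(bar_start, bar_end, true_length=0.50)

cloud = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.25), (3.0, 1.0, 2.0)]
scaled_cloud = apply_scale(cloud, factor)
print(factor, scaled_cloud)
```

A scale bar fixes only the size of the model. Position and orientation in a superordinate coordinate system still require measured control points, which is what the multi-point transformation provides.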

Larger objects (e.g. roofscapes, façades) sometimes consist of several merged point clouds because they were recorded from very different points of view, e.g. from the air and from the ground. For this purpose, aSPECT 3D includes an ICP (Iterative Closest Point) algorithm, which can fuse overlapping point clouds. This is especially useful for image series that overlap very little with each other. Once this step is done, the 3D point model is finished.
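To give an idea of how an ICP algorithm fuses two overlapping point clouds, here is a deliberately simplified sketch. It works in 2D instead of 3D, uses a brute-force nearest-neighbour search and assumes that the clouds are already roughly aligned. All names and the toy data are hypothetical; the actual implementation in aSPECT 3D is not described in the article.

```python
import math


def nearest(p, cloud):
    """Brute-force nearest neighbour of point p in cloud."""
    return min(cloud, key=lambda q: math.dist(p, q))


def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src onto dst."""
    n = len(src)
    cax, cay = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cbx, cby = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    s_cross = s_dot = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - cax, ay - cay, bx - cbx, by - cby
        s_cross += ax * by - ay * bx
        s_dot += ax * bx + ay * by
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    return theta, cbx - (c * cax - s * cay), cby - (s * cax + c * cay)


def transform(cloud, theta, tx, ty):
    """Rotate every point by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in cloud]


def mean_error(src, dst):
    """Mean distance from each src point to its nearest dst point."""
    return sum(math.dist(p, nearest(p, dst)) for p in src) / len(src)


def icp_2d(src, dst, iterations=20, tol=1e-12):
    """Alternate between closest-point pairing and rigid alignment."""
    current = list(src)
    for _ in range(iterations):
        pairs = [nearest(p, dst) for p in current]
        theta, tx, ty = best_rigid_2d(current, pairs)
        current = transform(current, theta, tx, ty)
        if mean_error(current, dst) < tol:
            break
    return current


# Toy data: the source is the target rotated by 2 degrees and slightly shifted.
target = [(0.0, 0.0), (2.0, 0.0), (4.0, 1.0), (1.0, 3.0), (3.0, 4.0)]
source = transform(target, math.radians(2.0), 0.1, -0.05)
aligned = icp_2d(source, target)
print(mean_error(source, target), mean_error(aligned, target))
```

Because every step relies on closest-point pairs, ICP needs a reasonable starting alignment and a shared overlap region between the clouds.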

How precise are 3D models?

For restoration, preservation or archaeological purposes, 3D models have to depict the original object as precisely as possible. Size and shape should deviate as little as possible from the original. The achievable accuracy varies with the survey procedure deployed (total station, laser scanner etc.).

The developers of the software therefore asked themselves whether aSPECT 3D, which generates models from SFM image series, could compete with other 3D methods. To answer this question, ArcTron 3D carried out accuracy analyses in different projects [5]. The results below show that highly precise 3D models can be generated for both small and large objects.

[5] Publications by the author on this topic are currently in print or in preparation. Compare also: Kersten, T. & Mechelke, K.: Fort Al Zubarah in Katar – 3D-Modell aus Scanner- und Bilddaten im Vergleich. In: Luhmann/Müller (Eds.), Photogrammetrie, Laserscanning, Optische 3D-Messtechnik. Beitr. Oldenburger 3D-Tage 2012, pp. 89-98.

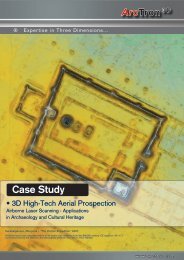

Fig. 3: Project “wall documentation”, Veitsberg, Bad Neustadt a.d. Saale (in cooperation with the University of Jena, Professorship for Prehistoric and Protohistoric Archaeology). Left: Photographic documentation from the air and from the ground. Middle: Reference surveys with total station and laser scanner. Right: Deviation result after a “best fit” registration of the SFM point cloud onto the terrestrial laser scan, with a standard deviation below 1 cm. © ArcTron 3D GmbH, 2012.

Example 1: In cooperation with the University of Jena, ArcTron 3D surveyed a small representative area of wall fragments at Veitsberg containing early medieval Carolingian remains (Fig. 3). For the accuracy analysis, the model generated by aSPECT 3D was compared with reference data obtained by other methods. In one test, various control points placed in the area were recorded with a total station. In a second test, the reference area was scanned with a terrestrial 3D laser scanner, which achieves accuracies of about 3 mm.

The aSPECT 3D model was computed from aerial pictures taken by a so-called “Octocopter” drone and pictures taken from the ground. The comparison of the SFM point cloud with the laser scan showed a very small standard deviation of about 1 cm. If only a total station is used for referencing, the standard deviation still remains below 3 cm. When a reference distance (e.g. a scale bar photographed with the object) is the only means of scaling the point cloud, excavation features of this size yield accuracies of around 5 cm.
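A deviation analysis of this kind can be sketched in a few lines. The example assumes that the SFM cloud has already been registered onto the reference scan and measures, for each SFM point, the distance to the nearest reference point. The coordinates are invented, and the use of unsigned nearest-neighbour distances is a simplification; dedicated inspection software may instead use signed point-to-surface distances.

```python
import math
import statistics


def deviations(test_cloud, reference_cloud):
    """Distance from each test point to its nearest reference point (brute force)."""
    return [min(math.dist(p, q) for q in reference_cloud) for p in test_cloud]


# Hypothetical coordinates in metres; both clouds are assumed to be registered already.
laser_scan = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
sfm_cloud = [(0.0, 0.0, 0.01), (1.0, 0.0, -0.01), (0.0, 1.0, 0.02), (1.0, 1.0, 0.0)]

d = deviations(sfm_cloud, laser_scan)
print("mean deviation [m]:", statistics.mean(d))
print("standard deviation [m]:", statistics.pstdev(d))
```

For real point clouds with millions of points, a spatial index such as a k-d tree would replace the brute-force search.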

Fig. 4: Project “Enigma 3D” (in cooperation with the “celtic + roman museum” Manching). Left: Angular “bread loaf idol” in photorealistic and shaded depiction. Right: The high-precision structured-light scanner PT-M 1280 serves as the reference measuring device. For the automated photographic SFM documentation, two Nikon cameras with macro lenses and a turntable were set up in a room with homogeneous white light. The deviation analysis showed accuracies better than 0.2 mm. © ArcTron 3D GmbH, 2012.

Example 2: Another accuracy test showed that the software is also suited to small objects. It was carried out in cooperation with the “celtic + roman museum” Manching [6] and focused on more than a hundred so-called “bread loaf idols” and other small archaeological objects. Professional camera systems with macro lenses achieved accuracies of 0.2 mm for the resulting SFM point cloud. A high-resolution structured-light scan with a resolution of 0.05 mm served as the reference.

[6] M. Schaich, „Aenigma 3D“. Zur Generierung hochaufgelöster 3D-Modelle mit Fotos einer handelsüblichen Spiegelreflexkamera – eine Fallstudie mit ArcTron’s Software aSPECT 3D am Beispiel der „Brotlaibidole“. In W. David (Ed.), Aenigma. Katalog zur Ausstellung (2012), in preparation.

Processing photorealistic 3D models

In one additional step, point clouds can be processed into polygonally meshed 3D models. First, the coloured point clouds should be filtered and cleared of interfering objects (e.g. scaffold elements). aSPECT 3D offers various tools for reducing noise in 3D data or deleting stray points. Afterwards, a closed 3D mesh is laid over the point cloud using a so-called “Poisson triangulation”, a surface reconstruction method named after the Poisson equation it solves. This 3D mesh consists of numerous polygons, each connecting at least three points. At the same time, the software generates a so-called “vertex texture”: a colour gradient is computed across each triangle from the RGB colours of its points. As a result, the coloured surface of an object with a very dense point cloud already looks realistic.
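The colour gradient of a vertex texture can be pictured as an interpolation between the colours stored at the three corners of a triangle. The sketch below uses barycentric weights for this, which is a common way to blend vertex colours; whether aSPECT 3D does it exactly this way is an assumption, and the triangle, colours and function names are made up for illustration.

```python
def barycentric_weights(p, a, b, c):
    """Barycentric weights of 2D point p with respect to triangle (a, b, c)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        raise ValueError("degenerate triangle")
    wa = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    wb = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return wa, wb, 1.0 - wa - wb


def interpolate_colour(p, triangle, colours):
    """Blend the three vertex colours according to the position of p in the triangle."""
    weights = barycentric_weights(p, *triangle)
    return tuple(
        round(sum(w * col[ch] for w, col in zip(weights, colours)))
        for ch in range(3)
    )


# Hypothetical triangle (in its own 2D plane) with one RGB colour per vertex.
triangle = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
colours = [(200, 0, 0), (0, 200, 0), (0, 0, 200)]

print(interpolate_colour((0.0, 0.0), triangle, colours))  # exactly at the first vertex
print(interpolate_colour((1.0, 1.0), triangle, colours))  # inside the triangle
```

The denser the point cloud, the smaller the triangles, so the blended vertex colours approximate the photographed surface more closely.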

However, a further texturing step is needed to generate the photorealistic 3D model. In this step, the optimized photographs from the SFM process are mapped onto the geometry of the model surface. An apse fresco may serve as an illustrative example (Fig. 5).

Fig. 5: Project “Ninfa 3D” (in cooperation with the German Historical Institute, Rome). Left: SFM documentation of a fresco. Middle: 3D model with vertex texture. Right: 3D model textured with high-resolution photographs. © ArcTron 3D GmbH, 2012.

Combined airborne and terrestrial surveys<br />

Over the past few years, a combination of airborne and terrestrial documentation technologies has proven very suitable for comprehensive 3D surveys at ArcTron 3D. For targeted survey flights, the company deploys an ultra-light paraglider trike.

Up to 90 % of all required information can be gathered by aerial surveys. That is why ArcTron has also been using a remote-controlled “camera drone” since 2010 (Fig. 6). This aerial robot can be controlled fully autonomously via a ground station and is specially programmed to take photogrammetric survey images suited to the software. The system can carry cameras weighing over 2 kg and is equipped with a stabilized, separately controllable camera platform. The technology can replace bulky lifting platforms and can reach even hard-to-access objects very precisely (e.g. the Gothic ornamentation of cathedral spires).

Fig. 6: Project “Ninfa 3D” (in cooperation with the German Historical Institute, Rome). Left: Octocopter camera drone with a fully stabilized camera platform. Right: The technology in practice, deployed for the 3D documentation of the medieval remains of the city of Ninfa (Italy). © ArcTron 3D GmbH, 2012.

Data management and exploitation<br />

The finished photorealistic model can now be further edited and processed using the software’s built-in database.

• Data management<br />

All object geometries can be individually organized and described in the database. Various database structure templates for archaeological, architectural or restoration purposes are available and can be adapted to projects of any complexity. The database provides a hierarchical object system (“part-of” relations) as well as an additional classification tree. If necessary, a completely new global structure can be created.

• Further processing<br />

The software allows additional information, e.g. for damage mapping, to be assigned to the 3D model. Damage mapping can be applied directly onto photorealistic models (Fig. 7). Mapping can also be carried out on site, right next to the original, using a notebook or tablet PC with the respective 3D software. This facilitates precise and reliable documentation in the field, which is essential for complex 3D projects. Furthermore, the 3D models can be linked with additional metadata such as texts, CAD files, images, films, PDFs etc. With the included tools, even complex documentation becomes a logical, step-by-step process.

Fig. 7: Damage mapping of the bronze statue of General Yorck of Wartenberg (1759-1830), Berlin. The complex 3D mapping of all damaged areas with the integrated database was carried out by the restoration office Helmich (Berlin) using the 3D GIS system aSPECT 3D. It enables database queries on any 3D damage mapping and supports restoration planning. Graphics: © Landmark Preservation Authority Berlin & restoration office Helmich, based on a laser scan by the company Laserscan, Berlin. Software screenshots: ArcTron 3D GmbH, 2009.

• Print versions<br />

Printed documentation on paper, CAD maps and high-resolution, scaled and rectified screenshots mosaicked from many individual images (orthophotos) can be derived from different perspectives.

• Presentations<br />

The 3D models can be rotated on screen for presentation purposes. Interior rooms can be entered and walked through in full-screen mode, similar to video games. 3D stereo technologies are also supported if a suitable 3D monitor is available, which allows the objects to be viewed in “real 3D” with 3D glasses.

Future prospects<br />

ArcTron 3D has been developing the presented software continuously for the last 10 years. It now provides tools and applications for restorers, heritage conservators and archaeologists, and aSPECT 3D continues to be developed. Currently, ArcTron 3D is working on a process manager that will guide the user systematically through the complete workflow, from point cloud to textured 3D model. This new development will be presented at the trade fair “denkmal” in November.