MULTIVARIATE RANDOM VARIABLES 1 Joint and marginal densities


Computational Methods and Advanced Statistics Tools

III - MULTIVARIATE RANDOM VARIABLES

A random vector, or multivariate random variable, is a vector of n scalar
random variables. The random vector is written

\[ X = \begin{pmatrix} X_1 \\ X_2 \\ \vdots \\ X_n \end{pmatrix} \tag{1} \]

A random vector is also called an n-dimensional random variable.

1 Joint and marginal densities

The glory of God is man fully alive; moreover, man’s life is the vision of God.
(St. Irenaeus of Lyons, ca. 135-202)

Every random variable has a distribution function.

DEFINITION III.1A - (DISTRIBUTION) - The n-dimensional random
variable X has the distribution function

\[ F(x_1, \dots, x_n) = P\{X_1 \le x_1, \dots, X_n \le x_n\}. \tag{2} \]

A random vector X is called absolutely continuous if there exists a
joint probability density f such that

\[ F(x_1, \dots, x_n) = \int_{-\infty}^{x_1} \cdots \int_{-\infty}^{x_n} f(t_1, \dots, t_n)\, dt_1 \cdots dt_n. \tag{3} \]

A random vector X is called discrete if it takes values on a discrete (i.e.
finite or countable) sample space. This means that $X : \Omega \to \mathbb{R}^n$, where $\Omega$
is discrete. In this case the joint probability function (or, less properly, the
joint discrete density) is defined as

\[ f(x_1, \dots, x_n) = P\{X_1 = x_1, \dots, X_n = x_n\}. \tag{4} \]

The joint distribution and the joint probability function are related by

\[ F(x_1, \dots, x_n) = \sum_{t_1 \le x_1} \cdots \sum_{t_n \le x_n} f(t_1, \dots, t_n). \tag{5} \]

Ex. III.1 (n = 2) The joint distribution function of the random vector
(X, Y) is defined by the relation

\[ F(x, y) = P(X \le x, Y \le y). \]

The function F(x, y) is defined on $\mathbb{R}^2$ and, as a function of each variable, is
monotone non-decreasing and such that

\[ \lim_{x, y \to +\infty} F(x, y) = 1, \qquad \lim_{x \to -\infty} F(x, y) = \lim_{y \to -\infty} F(x, y) = 0. \]

Knowing F(x, y) gives the probability that (X, Y) falls in any given
rectangle $(a, b] \times (c, d]$:

\[ P[(X, Y) \in (a, b] \times (c, d]\,] = F(b, d) - F(a, d) - F(b, c) + F(a, c). \]

Since rectangles approximate any measurable set of $\mathbb{R}^2$, if we know F then we
know $P[(X, Y) \in A]$ for any measurable set $A \subset \mathbb{R}^2$; that is, the distribution
function F(x, y) completely describes the distribution measure of the pair
(X, Y).

If the random vector (X, Y) is absolutely continuous, i.e. if there is an
integrable function f(x, y) such that

\[ f(x, y) \ge 0, \qquad \iint_{\mathbb{R}^2} f(x, y)\, dx\, dy = 1, \qquad F(x, y) = \int_{-\infty}^{x} \int_{-\infty}^{y} f(\xi, \eta)\, d\eta\, d\xi, \]

then

\[ \iint_A f(x, y)\, dx\, dy = P[(X, Y) \in A], \quad \forall \text{ measurable set } A \subset \mathbb{R}^2, \]

which illustrates the meaning of the joint probability density f(x, y).

An example of an absolutely continuous random vector: (X, Y) uniformly
distributed on a disk of radius r centered at the origin, with probability density

\[ f(x, y) = \begin{cases} 1/(\pi r^2), & \text{if } x^2 + y^2 \le r^2 \\ 0, & \text{elsewhere.} \end{cases} \]

For example, F(0, 0) = 1/4.

An example of a discrete random vector is (X, Y) with X = score obtained by tossing
a die, Y = score obtained by tossing another die. Then the joint probability
function has the value 1/36 for any $(x_i, y_j) \in \{1, \dots, 6\}^2$ and zero elsewhere.
The distribution function

\[ F(x, y) = \sum_{x_i \le x} \sum_{y_j \le y} P(X = x_i, Y = y_j) \]

is computed by counting and dividing by 36. For example F(2, 4) = 2/9,
F(3, 3) = 1/4, ..., F(6, 6) = 1. △
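The counting argument of the two-dice example, together with the rectangle formula of Ex. III.1, can be checked with a short Python sketch. The helper names `joint_cdf` and `rectangle_probability` and the rectangle (1, 3] x (2, 5] are illustrative choices, not part of the notes.

```python
from fractions import Fraction

# Joint probability function of two fair dice: 1/36 on {1, ..., 6}^2.
SCORES = range(1, 7)
pmf = {(i, j): Fraction(1, 36) for i in SCORES for j in SCORES}

def joint_cdf(x, y):
    """F(x, y): sum of the joint pmf over all points with x_i <= x and y_j <= y."""
    return sum(p for (i, j), p in pmf.items() if i <= x and j <= y)

def rectangle_probability(a, b, c, d):
    """P[(X, Y) in (a, b] x (c, d]] computed from the distribution function alone."""
    return joint_cdf(b, d) - joint_cdf(a, d) - joint_cdf(b, c) + joint_cdf(a, c)

print(joint_cdf(2, 4))                     # 2/9
print(joint_cdf(3, 3))                     # 1/4
print(rectangle_probability(1, 3, 2, 5))   # 1/6, i.e. 6 points out of 36
```

Exact fractions are used so that the values can be compared directly with those quoted in the example.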

For a sub-vector $S = (X_1, \dots, X_k)^T$ (k < n) of the random vector X, the
marginal density function is

\[ f_S(x_1, \dots, x_k) = \int_{-\infty}^{+\infty} \cdots \int_{-\infty}^{+\infty} f(x_1, \dots, x_n)\, dx_{k+1} \cdots dx_n \tag{6} \]

in the continuous case, and

\[ f_S(x_1, \dots, x_k) = \sum_{x_{k+1}} \cdots \sum_{x_n} f(x_1, \dots, x_n) \tag{7} \]

if X is discrete. In both cases the marginal distribution function is

\[ F_S(x_1, \dots, x_k) = F(x_1, \dots, x_k, \infty, \dots, \infty). \tag{8} \]

For the sake of notation, we will write $f_X(x)$ and $F_X(x)$ instead of f(x) and
F(x), respectively, whenever it is necessary to emphasize to which random
variable the function belongs.

Ex. III.2 As in Ex. III.1, let $f(x, y) = \frac{1}{\pi r^2} \cdot I_{D_r}(x, y)$, where $I_{D_r}$ is the indicator
of the disk of radius r. Let us compute the marginal densities of X, Y:

\[ f_X(x) = \int_{\mathbb{R}} f(x, y)\, dy = \int_{x^2 + y^2 \le r^2} \frac{1}{\pi r^2}\, dy = \frac{1}{\pi r^2} \int_{-\sqrt{r^2 - x^2}}^{\sqrt{r^2 - x^2}} dy = \frac{2\sqrt{r^2 - x^2}}{\pi r^2} \]

for $|x| \le r$, and zero elsewhere. By symmetry

\[ f_Y(y) = \frac{2\sqrt{r^2 - y^2}}{\pi r^2} \]

if $|y| \le r$, and 0 elsewhere.

Another example:

\[ f_{X,Y}(x, y) = \begin{cases} \frac{1}{8}, & 0 \le y \le x \le 4 \\ 0, & \text{else.} \end{cases} \]

Then the marginal density function of X is

\[ f_X(x) = \int_{\mathbb{R}} f_{X,Y}(x, y)\, dy = \begin{cases} \int_0^x \frac{1}{8}\, dy = \frac{1}{8} x, & x \in [0, 4] \\ 0, & x \notin [0, 4]. \end{cases} \]

△
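The marginal density x/8 of the last example can be checked numerically. The following Python sketch integrates the joint density over y with a midpoint rule; the function names, the grid size and the evaluation points are illustrative assumptions.

```python
def joint_density(x, y):
    """Joint density of the example: 1/8 on the triangle 0 <= y <= x <= 4."""
    return 0.125 if 0.0 <= y <= x <= 4.0 else 0.0

def marginal_x(x, lo=0.0, hi=4.0, steps=4000):
    """Approximate f_X(x) = integral of f(x, y) dy over [lo, hi] with the midpoint rule."""
    h = (hi - lo) / steps
    return h * sum(joint_density(x, lo + (k + 0.5) * h) for k in range(steps))

# Compare the numerical marginal with the closed form x / 8.
for x in (0.5, 2.0, 3.5):
    print(x, marginal_x(x), x / 8)
```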

2 Conditional distributions

Does God guide the world and my life? Yes, but in a mysterious way; God guides
everything along paths that he only knows, leading it to its perfection. "Even the
hairs of your head are all numbered" (Mt 10:30)

Let A and B denote some events. If P(B) > 0 then the conditional probability
of A occurring given that B occurs is

\[ P(A \mid B) := \frac{P(A \cap B)}{P(B)}. \tag{9} \]

We interpret $P(A \mid B)$ as "the probability of A given B".

By use of (9) the conditional probability function of a discrete r.v. Y, given
a discrete r.v. X, is defined:

\[ f_{Y|X}(y_j \mid x_i) := \frac{P(X = x_i, Y = y_j)}{P(X = x_i)} \equiv \frac{f(x_i, y_j)}{f_X(x_i)}. \]

Now suppose that the continuous random variables X and Y have joint
density $f_{X,Y}$. If we wish to use (9) to determine the conditional distribution
of Y given that X takes the value x, we have the problem that the probability
$P(Y \le y \mid X = x)$ is undefined, as we may only condition on events which
have a strictly positive probability, and P(X = x) = 0. However, $f_X(x)$ is
positive for some x values. Therefore for both discrete and continuous random
variables we use the following definition:

DEFINITION III.2A (CONDITIONAL DENSITY)
The conditional density function of Y given X = x is

\[ f_{Y|X}(y \mid x) = \frac{f_{X,Y}(x, y)}{f_X(x)}, \quad \forall x : f_X(x) > 0 \tag{10} \]

where $f_{X,Y}$ is the joint density function of X and Y. Both X and Y may
be multivariate random variables.

The conditional distribution function is then found by integration or summation,
as previously described in (3) and (5) for the continuous and discrete case,
respectively.

It follows from (10) that

\[ f_{X,Y}(x, y) = f_{Y|X=x}(y) \cdot f_X(x) \tag{11} \]

and by interchanging X and Y on the right hand side of (11) we get Bayes’
rule

\[ f_{Y|X}(y \mid x) = \frac{f_{X|Y}(x \mid y) \cdot f_Y(y)}{f_X(x)}. \tag{12} \]

Ex. III.3 Let us recall that an exponential variable T with parameter λ > 0
is an absolutely continuous variable with density $f(t) = \lambda e^{-\lambda t} \cdot I_{\mathbb{R}^+}(t)$.
Now let Y be an exponential variable with parameter x > 0, which is in turn
a value of $X \sim \exp(3)$. In other words, the conditional density of Y given
X and the marginal of X are known:

\[ f_{Y|X}(y \mid x) = x \cdot e^{-xy} \cdot I_{\mathbb{R}^+}(y); \qquad f_X(x) = 3 e^{-3x} \cdot I_{\mathbb{R}^+}(x). \]

What is the conditional density of X given Y? The answer is

\[ f_{X|Y}(x \mid y) = \frac{f_{Y|X}(y \mid x) \cdot f_X(x)}{f_Y(y)} \]

where, integrating by parts,

\[ f_Y(y) = \int_0^\infty f_{Y|X}(y \mid x) \cdot f_X(x)\, dx = \int_0^\infty 3x\, e^{-x(3+y)}\, dx \]
\[ = \left\{ \left[ 3x\, \frac{e^{-x(3+y)}}{-(3+y)} \right]_0^\infty - \int_0^\infty \frac{e^{-x(3+y)}}{-(3+y)} \cdot 3\, dx \right\} = \frac{3}{3+y} \int_0^\infty e^{-x(3+y)}\, dx \]
\[ = \frac{3}{3+y} \left[ \frac{e^{-x(3+y)}}{-(3+y)} \right]_0^\infty = \frac{3}{(3+y)^2}, \quad y > 0. \]

So

\[ f_{X|Y}(x \mid y) = x (3+y)^2 e^{-x(3+y)}, \quad x > 0,\ y > 0. \]

DEFINITION III.2B (INDEPENDENCE)
The random vectors X and Y are independent¹ if

\[ f_{X,Y}(x, y) = f_X(x) \cdot f_Y(y), \quad \forall x, y \tag{13} \]

which corresponds to

\[ F_{X,Y}(x, y) = F_X(x) \cdot F_Y(y), \quad \forall x, y. \tag{14} \]

¹ In most textbooks the n-dimensional and m-dimensional r.v. X, Y are said to be
independent if
\[ P(X \in A, Y \in B) = P(X \in A) \cdot P(Y \in B), \quad \forall \text{ measurable } A \subset \mathbb{R}^n,\ B \subset \mathbb{R}^m. \]
A suitable choice of A, B leads directly to the equivalent definition (14).

If X and Y are independent, then

\[ f_{Y|X=x}(y) = f_Y(y). \tag{15} \]

Last but not least: if two random variables are independent, then they are
also uncorrelated, but uncorrelated variables are not necessarily independent.
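The result of Ex. III.3 can be checked numerically. The Python sketch below approximates the marginal of Y by a midpoint rule and compares it with 3/(3 + y)^2; the truncation point `upper`, the number of steps and the value y = 1.5 are illustrative assumptions.

```python
import math

def f_y_given_x(y, x):
    """Conditional density of Y given X = x: exponential with parameter x."""
    return x * math.exp(-x * y) if y > 0 else 0.0

def f_x(x):
    """Marginal density of X: exponential with parameter 3."""
    return 3.0 * math.exp(-3.0 * x) if x > 0 else 0.0

def f_y_numeric(y, upper=20.0, steps=50_000):
    """Midpoint-rule approximation of the integral of f(y | x) f_X(x) dx over (0, upper)."""
    h = upper / steps
    return h * sum(f_y_given_x(y, (k + 0.5) * h) * f_x((k + 0.5) * h) for k in range(steps))

def f_x_given_y(x, y):
    """Closed form obtained in the example: x (3 + y)^2 exp(-x (3 + y))."""
    return x * (3.0 + y) ** 2 * math.exp(-x * (3.0 + y))

y = 1.5
print(f_y_numeric(y), 3.0 / (3.0 + y) ** 2)   # numerical vs closed-form marginal of Y
print(f_x_given_y(0.7, y))                     # conditional density of X at x = 0.7
```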

3 Moments of scalar random variables

Evolution presupposes the existence of something that can develop. The theory says
nothing about where this "something" came from. Furthermore, questions about
the being, essence, dignity, mission, meaning and wherefore of the world and man
cannot be answered in biological terms. (YouCat 42)

An average of the possible values of X, each value being weighted by its
probability,

\[ E[X] = \sum_x x \cdot P\{X = x\}, \]

is ad litteram the notion of expectation for a discrete variable X (provided
that such a series is absolutely convergent). For continuous variables, the sums
are replaced by integrals.

DEFINITION III.3A (EXPECTATION)
The expectation (or mean value) of an absolutely continuous variable X with
density function $f_X$ is

\[ E[X] = \int_{-\infty}^{\infty} x \cdot f_X(x)\, dx \tag{16} \]

provided that the integral is absolutely convergent, i.e. $E[|X|] < \infty$.

If X is a random variable and g is a function, then Y = g(X) is also a
random variable. To calculate the expectation of Y, we could find $f_Y$ and
use (16). However the process of finding $f_Y$ can be complicated; instead, we
can simply use

\[ E[Y] = E[g(X)] = \int_{\mathbb{R}} g(x) f_X(x)\, dx. \tag{17} \]

This provides a method for calculating the "moments" of a distribution.

DEFINITION III.3B (MOMENTS)
The n-th moment of X is

\[ E[X^n] = \int_{\mathbb{R}} x^n f_X(x)\, dx \tag{18} \]

and the n-th central moment is

\[ E[(X - E[X])^n] = \int_{\mathbb{R}} (x - E[X])^n f_X(x)\, dx. \tag{19} \]

The second central moment is also called the variance, and the variance
of X is given by

\[ \mathrm{Var}[X] = E[(X - E[X])^2] = E[X^2] - (E[X])^2. \tag{20} \]

Now let X be an n-dimensional random variable and put Y = g(X), where
g is a function. As in (17) we have

\[ E[Y] = E[g(X)] = \int_{\mathbb{R}^n} g(x_1, \dots, x_n) f_X(x_1, \dots, x_n)\, dx_1 \cdots dx_n. \]

This gives sense to the mean of a linear combination of variables, and to any
type of moments. Since

\[ E[aX_1 + bX_2] = aE[X_1] + bE[X_2], \]

the expectation operator is a linear operator.
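Formulas (17) to (20) can be tried out on the marginal density x/8 on [0, 4] obtained in Section 1. The Python sketch below uses a midpoint rule; the helper names and the number of steps are illustrative assumptions. For this density the exact values are E[X] = 8/3, E[X^2] = 8 and Var[X] = 8/9.

```python
def density(x):
    """Marginal density f_X(x) = x / 8 on [0, 4], taken from the example of Section 1."""
    return x / 8.0 if 0.0 <= x <= 4.0 else 0.0

def expectation(g, lo=0.0, hi=4.0, steps=20_000):
    """E[g(X)] as the integral of g(x) f_X(x) dx, approximated by the midpoint rule."""
    h = (hi - lo) / steps
    return h * sum(g(lo + (k + 0.5) * h) * density(lo + (k + 0.5) * h) for k in range(steps))

mean = expectation(lambda x: x)                     # first moment
second = expectation(lambda x: x ** 2)              # second moment
central = expectation(lambda x: (x - mean) ** 2)    # second central moment

# The last two printed numbers agree, as stated by formula (20).
print(mean, second, central, second - mean ** 2)
```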

Of special interest is the covariance of $X_i$ and $X_j$:

DEF. III.3C - Covariance - The covariance of two scalar r.v.’s $X_1$, $X_2$ is

\[ \mathrm{Cov}[X_1, X_2] = E[(X_1 - E[X_1])(X_2 - E[X_2])] = E[X_1 X_2] - E[X_1]E[X_2]. \tag{21} \]

The covariance gives information about the simultaneous variation of two
variables and is useful for finding interdependencies. Since the expectation
is linear, the covariance is a bilinear operator, i.e. linear in each of the two
arguments, whence its calculation rule:

\[ \mathrm{Cov}[aX_1 + bX_2, cX_3 + dX_4] = ac\, \mathrm{Cov}[X_1, X_3] + ad\, \mathrm{Cov}[X_1, X_4] + bc\, \mathrm{Cov}[X_2, X_3] + bd\, \mathrm{Cov}[X_2, X_4] \tag{22} \]

for constants a, b, c, d and r.v. $X_1, \dots, X_4$.

In particular, for two scalar random variables X, Y we have the formulae:

\[ \mathrm{Cov}(X, Y) = E(XY) - \mu_x \mu_y \tag{23} \]
\[ \mathrm{Var}(X + Y) = \mathrm{Var}(X) + 2 \cdot \mathrm{Cov}(X, Y) + \mathrm{Var}(Y) \tag{24} \]
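Formulas (23) and (24) can be verified exactly on a small discrete example. In the Python sketch below the joint probability function `pmf` is hypothetical, chosen only for illustration; exact fractions avoid rounding.

```python
from fractions import Fraction

# Hypothetical joint probability function of (X, Y), chosen only for illustration.
pmf = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 8),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(1, 2),
}

def expect(g):
    """E[g(X, Y)] as a probability-weighted sum over the support."""
    return sum(p * g(x, y) for (x, y), p in pmf.items())

mu_x, mu_y = expect(lambda x, y: x), expect(lambda x, y: y)
var_x = expect(lambda x, y: (x - mu_x) ** 2)
var_y = expect(lambda x, y: (y - mu_y) ** 2)
cov_xy = expect(lambda x, y: x * y) - mu_x * mu_y            # formula (23)
var_sum = expect(lambda x, y: (x + y - mu_x - mu_y) ** 2)    # Var(X + Y) from the definition

print(cov_xy)                                   # 7/64
print(var_sum, var_x + 2 * cov_xy + var_y)      # both sides of (24): 11/16
```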

DEF. III.3C - Correlation coefficient - The correlation coefficient, or correlation,
of two scalar r.v.’s $X_1$, $X_2$ is

\[ \rho \equiv \rho(X_1, X_2) := E\left[ \frac{X_1 - \mu_1}{\sigma_1} \cdot \frac{X_2 - \mu_2}{\sigma_2} \right]. \]

THM. III.3D - The covariance and the correlation of two scalar r.v.’s X, Y
satisfy

\[ \mathrm{Cov}(X, Y)^2 \le \mathrm{Var}(X)\, \mathrm{Var}(Y) \quad \text{(Schwarz’s inequality)}, \]
\[ -1 \le \rho(X, Y) \le 1, \]

respectively.

Proof
Since the expectation of a nonnegative variable is always nonnegative,

\[ 0 \le E[(\theta |X| + |Y|)^2] = \theta^2 E[X^2] + 2\theta E[|XY|] + E[Y^2], \quad \forall \theta. \]

Thus a polynomial of degree 2 (in θ) does not take negative values, so
that its discriminant must be ≤ 0:

\[ E[|XY|]^2 - E[X^2]E[Y^2] \le 0, \quad \text{or} \quad E[|XY|]^2 \le E[X^2]E[Y^2]. \]

Replacing X and Y by $X - \mu_x$ and $Y - \mu_y$, and using $\left| \int f(t) g(t)\, dt \right| \le \int |f(t) g(t)|\, dt$, this implies

\[ \mathrm{Cov}(X, Y)^2 \le E[|(X - \mu_x)(Y - \mu_y)|]^2 \le E[(X - \mu_x)^2]\, E[(Y - \mu_y)^2] = \mathrm{Var}(X)\, \mathrm{Var}(Y). \]

Since $\rho = \mathrm{Cov}(X, Y) / \sqrt{\mathrm{Var}(X)\, \mathrm{Var}(Y)}$, this also means

\[ [\rho(X, Y)]^2 \le 1, \quad \text{i.e.} \quad -1 \le \rho \le +1. \quad \triangle \]

DEFINITION III.3E - Uncorrelation - Two scalar random variables X, Y are
said to be uncorrelated, or orthogonal, when Cov(X, Y) = 0.

Since $\mathrm{Cov}(X, Y) = E(XY) - \mu_x \mu_y$, and $E(XY) = \mu_x \mu_y$ for independent variables,

X, Y independent ⇒ X, Y uncorrelated.

However,

X, Y uncorrelated does not imply X, Y independent,

as we see in the following example.

Ex. III.4 Let U be uniformly distributed in [0, 1] and consider the r.v.’s

\[ X = \sin 2\pi U, \qquad Y = \cos 2\pi U. \]

X and Y are not independent since Y has only two possible values if X is
known. However they are orthogonal or uncorrelated:

\[ E(X) = \int_0^1 \sin 2\pi t\, dt = 0, \qquad E(Y) = \int_0^1 \cos 2\pi t\, dt = 0, \]

so that

\[ \mathrm{Cov}(X, Y) = E(X \cdot Y) = \int_0^1 \sin 2\pi t \cos 2\pi t\, dt = \frac{1}{2} \int_0^1 \sin 4\pi t\, dt = 0. \]

In this case the covariance is zero, but the variables are not independent.
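The example with X = sin 2πU and Y = cos 2πU can be checked numerically. The Python sketch below averages over a fine grid of U. The comparison of the squares is an added illustration, not part of the example: since X^2 + Y^2 = 1, the covariance of X^2 and Y^2 equals -1/8, whereas it would be zero for independent variables.

```python
import math

def mean_over_unit_interval(g, steps=10_000):
    """Approximate E[g(U)] for U uniform on [0, 1] with the midpoint rule."""
    return sum(g((k + 0.5) / steps) for k in range(steps)) / steps

def x(u):
    return math.sin(2 * math.pi * u)

def y(u):
    return math.cos(2 * math.pi * u)

e_x = mean_over_unit_interval(x)
e_y = mean_over_unit_interval(y)
cov_xy = mean_over_unit_interval(lambda u: x(u) * y(u)) - e_x * e_y

# Dependence shows up in the squares, because X^2 + Y^2 = 1.
e_x2 = mean_over_unit_interval(lambda u: x(u) ** 2)
e_y2 = mean_over_unit_interval(lambda u: y(u) ** 2)
cov_squares = mean_over_unit_interval(lambda u: (x(u) * y(u)) ** 2) - e_x2 * e_y2

print(cov_xy, cov_squares)
```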

4 Moments of multivariate random variables

What role does man play in God’s providence?
The completion of creation through divine providence is not something that happens
above and beyond us. God invites us to collaborate in the completion of creation.

We are interested in defining a (vector) first moment and a (matrix) second
moment of a multivariate random variable.

DEFINITION III.4A (EXPECTATION OF A RANDOM VECTOR)
The expectation (or the mean value) of the random vector X is

\[ \mu = E[X] = \begin{pmatrix} E[X_1] \\ E[X_2] \\ \vdots \\ E[X_n] \end{pmatrix} \tag{25} \]

DEFINITION III.4B (VARIANCE matrix) The variance (matrix) of the
random vector X is

\[ \Sigma_X = \mathrm{Var}[X] = E[(X - \mu)(X - \mu)^T] = \begin{pmatrix} \mathrm{Var}[X_1] & \mathrm{Cov}[X_1, X_2] & \cdots & \mathrm{Cov}[X_1, X_n] \\ \mathrm{Cov}[X_2, X_1] & \mathrm{Var}[X_2] & \cdots & \mathrm{Cov}[X_2, X_n] \\ \vdots & & \ddots & \vdots \\ \mathrm{Cov}[X_n, X_1] & \mathrm{Cov}[X_n, X_2] & \cdots & \mathrm{Var}[X_n] \end{pmatrix} \tag{26} \]

Sometimes we shall use the notation

\[ \begin{pmatrix} \sigma_1^2 & \sigma_{12} & \cdots & \sigma_{1n} \\ \sigma_{21} & \sigma_2^2 & \cdots & \sigma_{2n} \\ \vdots & & \ddots & \vdots \\ \sigma_{n1} & \sigma_{n2} & \cdots & \sigma_n^2 \end{pmatrix} \]

Both $\sigma_{ii}$ and $\sigma_i^2$ are possible notations for the variance of $X_i$.

By the calculation rule (22), the variance of a linear combination of $X_1$, $X_2$
is known from the variance matrix of $(X_1, X_2)$:

\[ \mathrm{Var}[z_1 X_1 + z_2 X_2] = z_1^2\, \mathrm{Var}(X_1) + 2 z_1 z_2\, \mathrm{Cov}(X_1, X_2) + z_2^2\, \mathrm{Var}(X_2), \]

and in general, for any n ≥ 2, it is the quadratic form of Σ calculated in
the coefficients $z_1, \dots, z_n$:

\[ \mathrm{Var}[z_1 X_1 + \dots + z_n X_n] = z^T \Sigma z. \]

Ex. III.4
Let $(X_1, X_2)$ be a random vector with mean (0, 0) and variance matrix

\[ \Sigma = \begin{pmatrix} 2 & -1 \\ -1 & 3 \end{pmatrix}. \]

Compute the variance of the projection of $(X_1, X_2)$ on the direction $(\frac{1}{2}, \frac{\sqrt{3}}{2})$.
We deal with the scalar variance of a linear combination:

\[ \mathrm{Var}\left[(X_1, X_2) \cdot \left(\tfrac{1}{2}, \tfrac{\sqrt{3}}{2}\right)\right] = \mathrm{Var}\left[\tfrac{1}{2} X_1 + \tfrac{\sqrt{3}}{2} X_2\right] \]
\[ = \left(\tfrac{1}{2}\right)^2 \mathrm{Var}[X_1] + 2 \cdot \tfrac{1}{2} \cdot \tfrac{\sqrt{3}}{2}\, \mathrm{Cov}(X_1, X_2) + \left(\tfrac{\sqrt{3}}{2}\right)^2 \mathrm{Var}[X_2] \]
\[ = \tfrac{1}{4} \cdot 2 - 2 \cdot \sqrt{3}/4 + \tfrac{3}{4} \cdot 3 = (11 - 2\sqrt{3})/4. \]

The correlation of two random variables, $X_i$ and $X_j$, is a standardization of
the covariance and can be written

\[ \rho_{ij} = \frac{\mathrm{Cov}[X_i, X_j]}{\sqrt{\mathrm{Var}[X_i]\, \mathrm{Var}[X_j]}} = \frac{\sigma_{ij}}{\sigma_i \sigma_j}. \tag{27} \]

Equations (26), (27) lead to the definition

DEFINITION III.4C
The correlation matrix for X is

\[ R = \begin{pmatrix} 1 & \rho_{12} & \cdots & \rho_{1n} \\ \rho_{21} & 1 & \cdots & \rho_{2n} \\ \vdots & & \ddots & \vdots \\ \rho_{n1} & \rho_{n2} & \cdots & 1 \end{pmatrix} \tag{28} \]
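The variance of the projection in Ex. III.4 and the passage from a variance matrix to a correlation matrix can be sketched in Python as follows. Matrices are stored as nested lists; the function names are illustrative.

```python
import math

def quadratic_form(z, sigma):
    """Return z^T Sigma z for a vector z and a square matrix Sigma (nested lists)."""
    n = len(z)
    return sum(z[i] * sigma[i][j] * z[j] for i in range(n) for j in range(n))

def correlation_matrix(sigma):
    """Standardize a variance matrix: rho_ij = sigma_ij / (sigma_i * sigma_j)."""
    n = len(sigma)
    sd = [math.sqrt(sigma[i][i]) for i in range(n)]
    return [[sigma[i][j] / (sd[i] * sd[j]) for j in range(n)] for i in range(n)]

sigma = [[2.0, -1.0], [-1.0, 3.0]]         # variance matrix of Ex. III.4
direction = [0.5, math.sqrt(3.0) / 2.0]    # the direction (1/2, sqrt(3)/2)

# Both printed numbers are the variance of the projection.
print(quadratic_form(direction, sigma), (11 - 2 * math.sqrt(3.0)) / 4)
print(correlation_matrix(sigma))
```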

THEOREM III.4D
The variance matrix Σ and the correlation matrix R are a) symmetric and
b) positive semi-definite.

Proof. (a) The symmetry is obvious. (b) By definition of variance, $\mathrm{Var}(z_1 X_1 + \dots + z_n X_n) \ge 0$ for any $z \in \mathbb{R}^n$. Hence the quadratic form $z^T \Sigma z \equiv \mathrm{Var}[z^T X] \ge 0$, $\forall z$, i.e. the matrix Σ is positive semidefinite. The same argument applies to R, which is the variance matrix of the standardized variables $(X_i - \mu_i)/\sigma_i$. △

Remark III.4E
If Σ is positive definite, i.e.

\[ z^T \Sigma z \ge 0 \quad \text{and} \quad z^T \Sigma z = 0 \Rightarrow z = (0, \dots, 0), \]

we often write Σ > 0.

DEFINITION III.4F
The covariance matrix of a p-dimensional random vector X with mean μ
and a q-dimensional random vector Y with mean ν is

\[ \Sigma_{XY} = C[X, Y] = E\left[ (X - \mu)(Y - \nu)^T \right] = \begin{pmatrix} \mathrm{Cov}[X_1, Y_1] & \cdots & \mathrm{Cov}[X_1, Y_q] \\ \vdots & & \vdots \\ \mathrm{Cov}[X_p, Y_1] & \cdots & \mathrm{Cov}[X_p, Y_q] \end{pmatrix} \tag{29} \]

In particular $C[X, X] = \mathrm{Var}[X] = \Sigma_X$.

COROLLARY III.4G - Linear transformations of random vectors
Let $X = (X_1, \dots, X_n)^T$ have mean μ and covariance $\Sigma_X$. Now we introduce
the new random variable $Y = (Y_1, \dots, Y_k)^T$ by the linear transformation

\[ Y = a + BX \]

where a is a (k × 1) vector and B is a (k × n) matrix. Then the expectation
and variance matrix of Y are:

\[ E[Y] = E[a + BX] = a + B\, E[X] \tag{30} \]
\[ \Sigma_Y = \mathrm{Var}[a + BX] = \mathrm{Var}[BX] = B\, \mathrm{Var}[X]\, B^T \tag{31} \]

Proof
The first formula is obvious since the expectation operator is linear. As for
the variance of BX we have:

\[ (\mathrm{Var}[a + BX])_{i,j} = \mathrm{Cov}[(BX)_i, (BX)_j] = \mathrm{Cov}\Big[ \sum_l b_{i,l} X_l, \sum_m b_{j,m} X_m \Big] = \sum_{l,m} b_{i,l}\, b_{j,m}\, \mathrm{Cov}(X_l, X_m) = \left( B \Sigma_X B^T \right)_{i,j}. \quad \triangle \]
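Formulas (30) and (31) can be illustrated with a small Python sketch. The vector a, the matrix B and the moments of X below are hypothetical values chosen for illustration: with them, Y1 = X1 + X2 and Y2 = 1 + X1 - X2.

```python
def matmul(a, b):
    """Product of two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    """Transpose of a matrix stored as nested lists."""
    return [list(row) for row in zip(*m)]

def transform_moments(a, b, mu, sigma):
    """Mean a + B mu and variance B Sigma B^T of Y = a + B X."""
    mean = [a[i] + sum(b[i][j] * mu[j] for j in range(len(mu))) for i in range(len(a))]
    variance = matmul(matmul(b, sigma), transpose(b))
    return mean, variance

# Hypothetical numbers for illustration.
mu = [0.0, 0.0]
sigma = [[2.0, -1.0], [-1.0, 3.0]]
a = [0.0, 1.0]
b = [[1.0, 1.0], [1.0, -1.0]]

mean_y, var_y = transform_moments(a, b, mu, sigma)
print(mean_y)   # [0.0, 1.0]
print(var_y)    # [[3.0, -1.0], [-1.0, 7.0]]
```

The diagonal entries agree with formula (24): Var(X1 + X2) = 2 + 3 - 2 = 3 and Var(X1 - X2) = 2 + 3 + 2 = 7.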

5 Conditional expectation

What is heaven? Heaven is God’s milieu, the dwelling place of the angels and
saints, and the goal of creation. Heaven is not a place in the universe. It is a
condition in the next life. Heaven is where God’s will is done without resistance.
Heaven happens when life is present in its greatest intensity and blessedness - a
kind of life that we do not find on earth.

The conditional expectation is the mean of a random variable, given values
of another random variable. Mathematically, it is the mean calculated with the
corresponding conditional density.

DEF. III.5A - Conditional expectation - The conditional expectation, or
conditional mean, of the random variable Y given X = x is

\[ E[Y \mid X = x] := \int_{-\infty}^{+\infty} y\, f_{Y|X}(y \mid x)\, dy \tag{32} \]

where $f_{Y|X}(y \mid x)$ is the conditional probability density of Y given X = x.
The equation $y = E[Y \mid X = x]$ defines a curve which is called the regression
curve of Y on X.

Ex. III.5 Let the conditional density of Y given X = x be known:

\[ f_{Y|X}(y \mid x) = x e^{-xy} \cdot I_{\mathbb{R}^+}(y). \]

Then

\[ E[Y \mid X = x] := \int_0^{+\infty} y \cdot x e^{-xy}\, dy = x \left\{ \left[ y\, \frac{e^{-xy}}{-x} \right]_0^\infty - \int_0^\infty \frac{e^{-xy}}{-x}\, dy \right\} \]
\[ = x \cdot \frac{1}{x} \left[ \frac{e^{-xy}}{-x} \right]_0^\infty = x \cdot \frac{1}{x^2} = \frac{1}{x}. \]

In particular, the regression curve of Y on X is $y = \frac{1}{x}$ for x > 0.

DEF. III.5B - Conditional variance - The conditional variance of Y given X = x
is

\[ \mathrm{Var}[Y \mid X = x] := \int_{-\infty}^{+\infty} (y - E[Y \mid X = x])^2 f_{Y|X}(y \mid x)\, dy. \]
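The regression curve of Ex. III.5 and the conditional variance of DEF. III.5B can be approximated numerically. In the Python sketch below the integration range (0, 50/x) and the number of steps are illustrative assumptions. The value 1/x^2 used for comparison is the standard variance of an exponential variable with parameter x and is not derived in the notes.

```python
import math

def conditional_density(y, x):
    """f(y | x) = x exp(-x y) for y > 0, the conditional density of Ex. III.5."""
    return x * math.exp(-x * y) if y > 0 else 0.0

def conditional_moment(g, x, steps=50_000):
    """Midpoint-rule approximation of the integral of g(y) f(y | x) dy over (0, 50 / x)."""
    upper = 50.0 / x    # the neglected tail has probability exp(-50)
    h = upper / steps
    return h * sum(g((k + 0.5) * h) * conditional_density((k + 0.5) * h, x)
                   for k in range(steps))

def regression_curve(x):
    """E[Y | X = x] computed from the conditional density."""
    return conditional_moment(lambda y: y, x)

def conditional_variance(x):
    """Var[Y | X = x] as the second central moment of the conditional density."""
    m = regression_curve(x)
    return conditional_moment(lambda y: (y - m) ** 2, x)

for x in (0.5, 1.0, 2.0):
    print(x, regression_curve(x), 1.0 / x, conditional_variance(x), 1.0 / x ** 2)
```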

6 The multivariate normal distribution

What is hell? Our faith calls "hell" the condition of the final separation from
God. Anyone who sees love clearly in the face of God and, nevertheless, does not
want it decides freely to have this condition instead.

We assume that $X_1, \dots, X_n$ are independent normal random variables with means
$\mu_1, \dots, \mu_n$ and variances $\sigma_1^2, \dots, \sigma_n^2$. We write $X_i \in N(\mu_i, \sigma_i^2)$. Now, define
the random vector $X = (X_1, \dots, X_n)^T$. Because the random variables are
independent, the joint density is the product of the marginal densities:

\[ f_X(x_1, \dots, x_n) = f_{X_1}(x_1) \cdots f_{X_n}(x_n) = \prod_{i=1}^n \frac{1}{\sigma_i \sqrt{2\pi}} \exp\left[ -\frac{(x_i - \mu_i)^2}{2\sigma_i^2} \right] \]
\[ = \frac{1}{\left( \prod_{i=1}^n \sigma_i \right) (2\pi)^{n/2}} \exp\left[ -\frac{1}{2} \sum_{i=1}^n \left( \frac{x_i - \mu_i}{\sigma_i} \right)^2 \right]. \]

By introducing the mean $\mu = (\mu_1, \dots, \mu_n)^T$ and the covariance

\[ \Sigma_X = \mathrm{Var}[X] = \mathrm{diag}(\sigma_1^2, \dots, \sigma_n^2), \]

this is written

\[ f_X(x) = \frac{1}{(2\pi)^{n/2} \sqrt{\det \Sigma_X}} \exp\left[ -\frac{1}{2} (x - \mu)^T \Sigma_X^{-1} (x - \mu) \right]. \tag{33} \]

A generalization to the case where the covariance matrix is a full matrix leads
to the following

DEF. III.6A - The multivariate normal distribution -
The joint density function for the n-dimensional random variable X with
mean μ and covariance matrix Σ is

\[ f_X(x) = \frac{1}{(2\pi)^{n/2} \sqrt{\det \Sigma}} \exp\left[ -\frac{1}{2} (x - \mu)^T \Sigma^{-1} (x - \mu) \right] \tag{34} \]

where Σ > 0. We write $X \in N(\mu, \Sigma)$. If $X \in N(0, I)$ we say that X is
standardized normally distributed.
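For a diagonal covariance matrix, formula (33) must coincide with the product of the univariate normal densities. The following Python sketch checks this at one point; the values of x, μ and the variances are hypothetical.

```python
import math

def normal_pdf(x, mu, sigma):
    """Univariate N(mu, sigma^2) density."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def diagonal_mvn_pdf(x, mu, variances):
    """Formula (33) for Sigma = diag(variances): determinant and inverse are elementwise."""
    n = len(x)
    det = math.prod(variances)
    quad = sum((xi - mi) ** 2 / v for xi, mi, v in zip(x, mu, variances))
    return math.exp(-0.5 * quad) / ((2.0 * math.pi) ** (n / 2) * math.sqrt(det))

# Hypothetical values for illustration.
x = [0.3, -1.2, 2.0]
mu = [0.0, -1.0, 1.5]
variances = [1.0, 4.0, 0.25]

product = math.prod(normal_pdf(xi, mi, math.sqrt(v)) for xi, mi, v in zip(x, mu, variances))
print(product, diagonal_mvn_pdf(x, mu, variances))
```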

THM. III.6B - In the case of jointly normally distributed variables $X_1, \dots, X_n$, they
are uncorrelated if and only if they are independent.

Proof
$\mathrm{Cov}(X_i, X_j) = 0$, $\forall i \ne j$, implies that the covariance matrix and the quadratic form
in the exponent of f are diagonal, so that the density is the product of the
marginal densities. Conversely, independent variables are always uncorrelated. △

THM. III.6C - Principal components of N(μ; Σ). -
Given $X \in N(\mu; \Sigma)$, with values in $\mathbb{R}^n$, the associated joint density f(x) is
constant on the ellipsoid

\[ \{ x : (x - \mu)^T \Sigma^{-1} (x - \mu) = c^2 \} \tag{35} \]

in $\mathbb{R}^n$, c being a constant. The axes of the ellipsoid are

\[ \pm c \sqrt{\lambda_i}\, e_i \]

where $(\lambda_i, e_i)$ are the eigenvalue-eigenvector pairs of the covariance matrix
Σ (i = 1, ..., n). These ellipsoids are called the contours of the distribution
or the ellipsoids of equal concentration.
An orthogonal matrix G such that $G^T \Sigma G$ is diagonal determines a principal
component transformation

\[ Y = G^T (X - \mu), \tag{36} \]

by which the $X_i$’s are transformed into independent and normally distributed
variables $Y_i$ with mean zero and variance $\lambda_i$.

Proof
The covariance matrix Σ is symmetric and positive definite. Thus by the
spectral decomposition theorem,

\[ G^T \Sigma G = \Lambda \quad (\text{or } G^T \Sigma^{-1} G = \Lambda^{-1}) \]

where $\Lambda = \mathrm{Diag}(\lambda_1, \dots, \lambda_n)$ is the diagonal matrix of (positive) eigenvalues
of Σ, while G is an orthogonal matrix whose columns are the corresponding
normalized eigenvectors. By the principal component transformation

\[ y = G^T (x - \mu), \quad \text{i.e.} \quad x - \mu = G y, \]

the ellipsoid becomes

\[ (Gy)^T \Sigma^{-1} G y = c^2, \quad \text{i.e.} \quad y^T G^T \Sigma^{-1} G y = c^2, \quad \text{i.e.} \quad y^T \Lambda^{-1} y = c^2, \quad \text{i.e.} \quad \sum_{i=1}^n \frac{1}{\lambda_i} y_i^2 = c^2. \]

This is the equation of an ellipsoid with semi-axes of length $c \sqrt{\lambda_i}$.
$y_1, \dots, y_n$ represent the different axes of the ellipsoid. The directions of the
axes are given by the eigenvectors of Σ, since $y = G^T (x - \mu)$ is a rotation
and G is orthogonal with the eigenvectors of Σ as columns. △

EX. III.6D Principal components and contours of a two-dimensional normal
vector.
To obtain the contours of a bivariate normal density we have to find the eigenvalues
and eigenvectors of Σ. Suppose

\[ \mu_1 = 5, \quad \mu_2 = 8, \quad \Sigma = \begin{pmatrix} 12 & 9 \\ 9 & 25 \end{pmatrix}. \]

Here the eigenvalue equation becomes

\[ 0 = |\Sigma - \lambda I| = \det \begin{pmatrix} 12 - \lambda & 9 \\ 9 & 25 - \lambda \end{pmatrix} = (12 - \lambda)(25 - \lambda) - 81. \]

The equation gives $\lambda_1 = 29.602$, $\lambda_2 = 7.398$. The corresponding normalized
eigenvectors are

\[ e_1 = \begin{pmatrix} .455 \\ .890 \end{pmatrix}, \qquad e_2 = \begin{pmatrix} .890 \\ -.455 \end{pmatrix}. \]

The ellipse of constant probability density (35) has center at $\mu = (5, 8)^T$
and axes $\pm 5.44\, c\, e_1$ and $\pm 2.72\, c\, e_2$. The eigenvector $e_1$ lies along the line
which makes an angle of $\cos^{-1}(.455) = 62.92^\circ$ with the $X_1$ axis and passes
through the point (5, 8) in the $(X_1, X_2)$ plane. By the principal component
transformation $y = G^T (x - \mu)$, where $G = (e_1, e_2)$, the ellipse (35) has the
equation

\[ \left\{ y : \sum_{i=1}^{2} \frac{1}{\lambda_i} y_i^2 = c^2 \right\}. \]

The ellipse has center at $(y_1 = 0, y_2 = 0)$ and axes $\pm c \cdot \sqrt{\lambda_i}\, f_i$ where $f_i$, i = 1, 2,
are the eigenvectors of $\Lambda = \mathrm{Diag}(\lambda_1, \lambda_2)$. In terms of the new co-ordinate
axes $Y_1$, $Y_2$, the eigenvectors are $f_1 = (1, 0)^T$, $f_2 = (0, 1)^T$. The lengths of
the axes are $c \sqrt{\lambda_i}$, i = 1, 2. The axes $Y_1$, $Y_2$ are the Principal Components
of Σ. △

> Sigma <- matrix(c(12, 9, 9, 25), 2, 2)  # assignment reconstructed from the matrix given above
> eigen(Sigma)$values
[1] 29.601802  7.398198
> eigen(Sigma)$vectors
          [,1]       [,2]
[1,] 0.4552524  0.8903624
[2,] 0.8903624 -0.4552524
>

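The eigenvalues and eigenvectors of EX. III.6D can also be obtained from the closed form for a symmetric 2 × 2 matrix. The Python sketch below uses only the standard library; the function name is illustrative, and eigenvectors are determined only up to sign, so a numerical library may return them with opposite signs.

```python
import math

def eigen_symmetric_2x2(a, b, d):
    """Eigenvalues (largest first) and unit eigenvectors of [[a, b], [b, d]], assuming b != 0."""
    mean = (a + d) / 2.0
    radius = math.hypot((a - d) / 2.0, b)
    values = (mean + radius, mean - radius)
    vectors = []
    for lam in values:
        # (a - lam) v1 + b v2 = 0 is solved by v = (b, lam - a); then normalize.
        v1, v2 = b, lam - a
        norm = math.hypot(v1, v2)
        vectors.append((v1 / norm, v2 / norm))
    return values, vectors

(lam1, lam2), (e1, e2) = eigen_symmetric_2x2(12.0, 9.0, 25.0)
print(lam1, lam2)                            # eigenvalues of Sigma
print(e1, e2)                                # normalized eigenvectors
print(math.sqrt(lam1), math.sqrt(lam2))      # half-lengths of the axes for c = 1
print(math.degrees(math.acos(abs(e1[0]))))   # angle between e1 and the X1 axis, in degrees
```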
THM. III.6E
Let X be a normal random vector, with dimension n, with mean μ and positive
definite variance Σ. Then X can be written as

\[ X = \mu + C U \]

where $U = (U_1, \dots, U_n)^T \sim N(0; I)$ and C is a nonsingular matrix such that $\Sigma = C C^T$.

Proof
Due to the symmetry of the positive definite matrix Σ, there always exists a nonsingular
real matrix C such that $\Sigma = C C^T$ (a matrix "square root" of Σ). Setting
$U = C^{-1}(X - \mu)$, then from Corollary III.4G:

\[ E[U] = C^{-1} E[X - \mu] = 0 \]
\[ \mathrm{Var}[U] = C^{-1}\, \mathrm{Var}[X]\, (C^{-1})^T = C^{-1} C C^T (C^T)^{-1} = I. \quad \triangle \]
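One possible matrix "square root" is the lower-triangular Cholesky factor. The theorem does not prescribe a particular C, so this choice is an assumption made for illustration. The Python sketch below builds it for the 2 × 2 matrix of EX. III.6D and checks that C C^T reproduces Σ.

```python
import math

def cholesky_2x2(sigma):
    """Lower-triangular C with C C^T = Sigma, for a positive definite 2 x 2 matrix."""
    (s11, s12), (_, s22) = sigma
    c11 = math.sqrt(s11)
    c21 = s12 / c11
    c22 = math.sqrt(s22 - c21 ** 2)
    return [[c11, 0.0], [c21, c22]]

def times_transpose(c):
    """Return C C^T for a 2 x 2 matrix C."""
    return [[sum(c[i][k] * c[j][k] for k in range(2)) for j in range(2)] for i in range(2)]

sigma = [[12.0, 9.0], [9.0, 25.0]]   # variance matrix of EX. III.6D
mu = [5.0, 8.0]
c = cholesky_2x2(sigma)

def to_x(u):
    """Map a standardized vector u to x = mu + C u."""
    return [mu[i] + sum(c[i][k] * u[k] for k in range(2)) for i in range(2)]

print(c)
print(times_transpose(c))   # reproduces Sigma up to rounding
print(to_x([0.0, 0.0]))     # u = 0 is mapped to the mean
```

Feeding independent standard normal draws into `to_x` (for instance from `random.gauss(0, 1)`) would produce draws with mean μ and variance Σ.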

7 Distributions derived from the normal distribution

"I ask myself: What does hell mean? I maintain that it is the inability to love"
(Fyodor M. Dostoyevsky, 1821-1881)

Most of the test quantities used in Statistics are based on distributions derived
from the normal one.
Any linear combination of jointly normally distributed random variables is normal.
If, for instance, $X \in N(\mu; \Sigma)$, then the linear transformation Y = a + BX
defines a normally distributed random variable

\[ Y \in N(a + B\mu, B \Sigma B^T). \tag{37} \]

Compare with Corollary III.4G, where the transformation rule $\Sigma \to B \Sigma B^T$
of the variance of X, for any linear map $X \to a + BX$, is established.
Let $U = (U_1, \dots, U_n)^T$ be a vector of independent N(0; 1) random variables.
The (central) $\chi^2$ distribution with n degrees of freedom is obtained as the
sum of the squares of n independent N(0; 1) random variables, i.e.

\[ X^2 = \sum_{i=1}^n U_i^2 = U^T U \sim \chi^2(n). \tag{38} \]

From this it is clear that if $Y_1, \dots, Y_n$ are independent $N(\mu_i; \sigma_i^2)$ r.v.’s, then

\[ X^2 = \sum_{i=1}^n \left( \frac{Y_i - \mu_i}{\sigma_i} \right)^2 \sim \chi^2(n), \]

since $U_i = (Y_i - \mu_i)/\sigma_i$ is N(0; 1) distributed.

THM. III.7A If $X_i$, for i = 1, ..., n, are independent normal variables from
a population $N(\mu; \sigma^2)$, then the sample mean and the sample variance follow
a normal and a chi squared distribution, respectively:

\[ \bar X = \frac{1}{n} \sum_{i=1}^n X_i \ \Rightarrow\ \bar X \sim N\left(\mu, \frac{\sigma^2}{n}\right) \]
\[ S^2 = \frac{1}{n - 1} \sum_{i=1}^n (X_i - \bar X)^2 \ \Rightarrow\ \frac{(n - 1) S^2}{\sigma^2} \sim \chi^2(n - 1). \]

Proof.
$\bar X$ is normal as a linear transformation of normal variables. By linearity
$E[\bar X] = \frac{1}{n} \sum_i E[X_i] = \frac{1}{n} \cdot n\mu = \mu$ and by independence

\[ \mathrm{Var}\Big[ \frac{1}{n} \sum_{i=1}^n X_i \Big] = \frac{1}{n^2} \sum_i \mathrm{Var}[X_i] = \frac{n \sigma^2}{n^2} = \frac{\sigma^2}{n}, \]

whence $\bar X \sim N(\mu, \sigma^2/n)$.
Now using this fact and the definition of $\chi^2$,

\[ \sum_i \Big( \frac{X_i - \mu}{\sigma} \Big)^2 \sim \chi^2(n), \qquad \Big( \frac{\bar X - \mu}{\sigma / \sqrt{n}} \Big)^2 = \frac{(\bar X - \mu)^2}{\sigma^2 / n} \sim \chi^2(1). \]

Now writing $X_i - \mu = X_i - \bar X + \bar X - \mu$, the following decomposition can be
verified:

\[ \sum_{i=1}^n \Big( \frac{X_i - \mu}{\sigma} \Big)^2 = \sum_{i=1}^n \frac{(X_i - \bar X)^2}{\sigma^2} + n \Big( \frac{\bar X - \mu}{\sigma} \Big)^2 = \frac{(n - 1) S^2}{\sigma^2} + n \Big( \frac{\bar X - \mu}{\sigma} \Big)^2. \]

Therefore the random variable

\[ \frac{(n - 1) S^2}{\sigma^2} \]

is the difference between a $\chi^2(n)$ r.v. and a $\chi^2(1)$ r.v. Since $\bar X$ and $S^2$ are independent for normal samples (a standard fact, not proved here), it is a r.v. with
distribution $\chi^2(n - 1)$. △
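The decomposition used in the proof is a purely algebraic identity, so it can be checked on any data set. In the Python sketch below the sample and the values of μ and σ are hypothetical.

```python
import statistics

def decomposition_terms(sample, mu, sigma):
    """Return (total, within, between) for the sum-of-squares decomposition of the proof."""
    n = len(sample)
    mean = statistics.fmean(sample)
    s2 = statistics.variance(sample)    # sample variance with divisor n - 1
    total = sum(((x - mu) / sigma) ** 2 for x in sample)
    within = (n - 1) * s2 / sigma ** 2
    between = n * ((mean - mu) / sigma) ** 2
    return total, within, between

# Hypothetical data and parameters, chosen only to exercise the identity.
sample = [4.1, 5.3, 6.0, 4.8, 5.9, 5.2]
total, within, between = decomposition_terms(sample, mu=5.0, sigma=0.8)
print(total, within + between)   # the two numbers agree up to rounding
```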

The noncentral $\chi^2$ distribution with n degrees of freedom and noncentrality
parameter λ appears when considering the sum of squared normal independent
variables in which the means are not necessarily zero. Hence,

\[ X^2 = \sum_{i=1}^n V_i^2 = V^T V \sim \chi^2(n; \lambda), \tag{39} \]

where $V_i \sim N(\mu_i; 1)$ and $\lambda = \sum_{i=1}^n \mu_i^2 \equiv \mu^T \mu$.

Let $X_1^2, \dots, X_m^2$ denote independent $\chi^2(n_i, \lambda_i)$ distributed random variables.
Then the reproduction property of the $\chi^2$ distribution is

\[ \sum_{i=1}^m X_i^2 \sim \chi^2\Big( \sum_{i=1}^m n_i,\ \sum_{i=1}^m \lambda_i \Big). \tag{40} \]

THM. III.7B If Y is $N_p(\mu; \Sigma)$, and Σ is non-singular, then

\[ \text{a)} \quad X^2 = (Y - \mu)^T \Sigma^{-1} (Y - \mu) \sim \chi^2(p) \tag{41} \]
\[ \text{b)} \quad Y^T \Sigma^{-1} Y \sim \chi^2(p, \lambda) \tag{42} \]

where $\lambda = \mu^T \Sigma^{-1} \mu$.

Proof
a) Σ is positive definite. By Thm. III.6E there exists a real nonsingular C
such that

\[ Y = \mu + C U \quad (\text{or } U = C^{-1}(Y - \mu)) \quad \text{with } U \sim N(0; I_p). \]

(Such C is the matrix satisfying $C C^T = \Sigma$.) So $U^T U$ is a sum of squares
of p independent N(0, 1) variables and we find:

\[ \chi^2(p) \sim U^T U = (Y - \mu)^T (C^T)^{-1} C^{-1} (Y - \mu) = (Y - \mu)^T (C C^T)^{-1} (Y - \mu) = (Y - \mu)^T \Sigma^{-1} (Y - \mu). \]

b) Omitted. △

PROPOSITION III.7C - The data matrix. The sample mean vector.
Suppose that $X_1, \dots, X_n$ is a multivariate sample, i.e. independent identically
distributed random vectors from a normal population $N_p(\mu, \Sigma)$:

\[ \begin{pmatrix} X_{1,1} & \cdots & X_{1,p} \\ X_{2,1} & \cdots & X_{2,p} \\ \vdots & & \vdots \\ X_{n,1} & \cdots & X_{n,p} \end{pmatrix} \]

Let us consider the sample mean vector

\[ \bar X \equiv (\bar X_1, \dots, \bar X_p)^T, \qquad \bar X_j = \sum_{i=1}^n X_{ij} / n, \quad j = 1, \dots, p. \]

Then the mean and the variance matrix of $\bar X$ are μ and $\frac{1}{n} \Sigma$, respectively, and

\[ \bar X \sim N_p\left(\mu, \tfrac{1}{n} \Sigma\right). \]

Proof
For k, r = 1, ..., p, the (k, r) element of the variance matrix $\mathrm{Var}[\bar X]$ is:

\[ \mathrm{Cov}(\bar X_k, \bar X_r) = \mathrm{Cov}\Big( \frac{1}{n} \sum_i X_{i,k},\ \frac{1}{n} \sum_j X_{j,r} \Big). \]

In such a sum only the i = j terms survive, since $X_i$ and $X_j$ are independent
for i ≠ j. On the other hand $\mathrm{Cov}(X_{i,k}, X_{i,r})$ is the same for i = 1, ..., n, so

\[ \mathrm{Cov}(\bar X_k, \bar X_r) = \frac{1}{n^2} \sum_i \mathrm{Cov}(X_{i,k}, X_{i,r}) = \frac{n}{n^2} \sigma_{k,r}, \quad \text{whence} \quad \Sigma_{\bar X} = \frac{1}{n} \Sigma. \quad \triangle \]

PROPOSITION III.7D - Testing for a multivariate population mean, if $\Sigma$ is known.

Suppose that $X_1, ..., X_n$ are a sample, i.e. independent identically distributed random vectors, from a normal distribution $N_p(\mu, \Sigma)$:

$$\begin{pmatrix} X_{1,1} & \dots & X_{1,p} \\ X_{2,1} & \dots & X_{2,p} \\ \vdots & & \vdots \\ X_{n,1} & \dots & X_{n,p} \end{pmatrix}$$

Then the variance of $\bar X$ is $\frac{1}{n}\Sigma$ and

$$\bar X \sim N_p\left(\mu, \frac{1}{n}\Sigma\right).$$

A test of the null hypothesis $H_\circ : \mu = \mu_\circ$ against $H_1 : \mu = \mu_1$ is performed by the statistic

$$W = n(\bar X - \mu_\circ)^T \Sigma^{-1} (\bar X - \mu_\circ),$$

which follows a $\chi^2(p)$ distribution under $H_\circ$. $H_\circ$ is rejected if

$$W > \chi^2_{1-\alpha}(p)$$

where $\chi^2_{1-\alpha}(p)$ is the $1 - \alpha$ quantile of the chi-square distribution with $p$ degrees of freedom.

If the alternative $\mu = \mu_1$ is true, $W$ follows a noncentral $\chi^2(p; \lambda)$, with $\lambda = n(\mu_1 - \mu_\circ)^T \Sigma^{-1} (\mu_1 - \mu_\circ)$, and the power of the test is given by the probability

$$P\left[\chi^2(p, \lambda) \geq \chi^2_{1-\alpha}(p)\right].$$

Proof

By Prop. III.3C the variance of $\bar X$ is $\frac{1}{n}\Sigma$ and, if $H_\circ$ holds,

$$\bar X \sim N_p\left(\mu_\circ, \frac{1}{n}\Sigma\right).$$

Thus, by Thm. III.7B, the statistic

$$W = (\bar X - \mu_\circ)^T (\Sigma/n)^{-1} (\bar X - \mu_\circ)$$

follows a $\chi^2(p)$ distribution. Hence, at the level $\alpha$, the null hypothesis $H_\circ$ is rejected if the experimental $W$ belongs to the critical region:

$$W > \chi^2_{1-\alpha}(p).$$

Besides, we can recover the power of the test: under the alternative $H_1 : \mu = \mu_1$,

$$(\bar X - \mu_\circ) \sim N_p[(\mu_1 - \mu_\circ), n^{-1}\Sigma].$$

Hence, by the second part of Thm. III.7A, $W$ obeys a $\chi^2(p, \lambda)$ distribution with noncentrality parameter $\lambda = n(\mu_1 - \mu_\circ)^T \Sigma^{-1} (\mu_1 - \mu_\circ)$. The power of the test is

$$1 - \beta = 1 - P\{\chi^2(p, \lambda) < \chi^2_{1-\alpha}(p)\} = P\{\chi^2(p, \lambda) \geq \chi^2_{1-\alpha}(p)\}. \quad \square$$

EX. III.7E -

Five dimensions of 19 sparrows have been measured. They are collected in the following table.

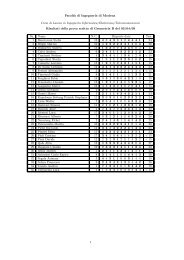

     x1   x2    x3    x4    x5
 1  156  245  31.6  18.5  20.5
 2  154  240  30.4  17.9  19.6
 3  153  240  31.0  18.4  20.6
 4  153  236  30.9  17.7  20.2
 5  155  243  31.5  18.6  20.3
 6  163  247  32.0  19.0  20.9
 7  157  238  30.9  18.4  20.2
 8  155  239  32.8  18.6  21.2
 9  164  248  32.7  19.1  21.1
10  158  238  31.0  18.8  22.0
11  158  240  31.3  18.6  22.0
12  160  244  31.1  18.6  20.5
13  161  246  32.3  19.3  21.8
14  157  245  32.0  19.1  20.0
15  157  235  31.5  18.1  19.8
16  156  237  30.9  18.0  20.3
17  158  244  31.4  18.5  21.6
18  153  238  30.5  18.2  20.9
19  155  236  30.3  18.5  20.1

From that, we extract a data matrix whose columns $X_1, X_2, X_3$ are the first 3 columns of the table ($p = 3$, $n = 19$). The sample mean and the sample variance matrix are:

$$\bar x^T = (157, 241, 31.37), \qquad S = \begin{pmatrix} 10.22 & 9 & 1.36 \\ 9 & 16.66 & 1.84 \\ 1.36 & 1.84 & 0.52 \end{pmatrix}.$$

Suppose we want to test

$$H_\circ : \mu = \mu_\circ = (156, 240, 30.9)^T \quad \text{against the alternative} \quad H_1 : \mu \neq \mu_\circ,$$

assuming the parametric variance to be known, i.e. $\Sigma = S$. The statistic $W$ falls in the critical region:

$$W_{exp.} = 8.99 > 7.81 \equiv \chi^2_{0.95}(3)$$

so that the null hypothesis is rejected. More precisely, the p-value is 0.029 (see footnote 2).

Footnote 2. The p-value is the probability area from the experimental value $W_{exp}$ to infinity: if $p \leq \alpha$, $H_\circ$ is rejected; if $p > \alpha$, $H_\circ$ is not rejected at the level $\alpha$. In other words, $p$ is the smallest significance level at which the experiment rejects $H_\circ$.

If an alternative hypothesis $H_1 : \mu = \mu_1 = (157, 141, 31.37)^T$ is true, the noncentrality parameter $\lambda$ of the chi-square distribution can be computed and the power of the test follows.

> # T is the data frame holding the table above (19 rows, columns x1, ..., x5)
> x1 <- T[,1]
> x2 <- T[,2]
> x3 <- T[,3]
> xbar <- c(mean(x1),mean(x2),mean(x3))
> xbar
[1] 157.00000 241.00000  31.37368
> # covariance of x1 and x2, which have the same length
> sum((x1-mean(x1))*(x2-mean(x2)))/(length(x1)-1)
[1] 9
> # it can be computed directly with the command "cov"
> cov(x1,x2)
[1] 9
> # covariance matrix of x1, x2, x3 (a 3 x 3 matrix)
> Sigma <- matrix(0,nrow=3,ncol=3)
> Sigma[1,1] <- var(x1)
> Sigma[1,2] <- cov(x1,x2)
> Sigma[1,3] <- cov(x1,x3)
> Sigma[2,1] <- cov(x2,x1)
> Sigma[2,2] <- var(x2)
> Sigma[2,3] <- cov(x2,x3)
> Sigma[3,1] <- cov(x3,x1)
> Sigma[3,2] <- cov(x3,x2)
> Sigma[3,3] <- var(x3)
> Sigma
          [,1]      [,2]      [,3]
[1,] 10.222222  9.000000 1.3666667
[2,]  9.000000 16.666667 1.8444444
[3,]  1.366667  1.844444 0.5231579
> # there is also a direct command for the variance matrix:
> X <- matrix(c(x1,x2,x3),ncol=3)
> var(X)
          [,1]      [,2]      [,3]
[1,] 10.222222  9.000000 1.3666667
[2,]  9.000000 16.666667 1.8444444
[3,]  1.366667  1.844444 0.5231579
> # set the null hypothesis and compute the statistic W
> mu0 <- c(156,240,30.9)
> Wsper <- 19*t(xbar-mu0)%*%solve(Sigma)%*%(xbar-mu0)
> Wsper
         [,1]
[1,] 8.993734
> qchisq(0.95,df=3)
[1] 7.814728
> pvalue <- 1-pchisq(as.numeric(Wsper),df=3)
> pvalue
[1] 0.02942414
> # power of the test, assuming an alternative mean mu1
> mu1 <- c(157,141,31.37)
> lambda <- 19*t(mu1-mu0)%*%solve(Sigma)%*%(mu1-mu0)
> lambda
         [,1]
[1,] 25483.11
> potenza <- 1-pchisq(qchisq(0.95,df=3),df=3,ncp=as.numeric(lambda))
> potenza
[1] 1
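The steps of the session above can be collected into two small functions. This is only a sketch under the assumptions of Prop. III.7D ($\Sigma$ treated as known); the names `quad_form`, `chisq_mean_test` and `chisq_mean_power` are hypothetical helpers introduced here, not functions of R or of these notes. The data are the first three columns of the sparrow table.

```r
# First three columns of the sparrow table of Ex. III.7E.
X <- cbind(
  x1 = c(156, 154, 153, 153, 155, 163, 157, 155, 164, 158,
         158, 160, 161, 157, 157, 156, 158, 153, 155),
  x2 = c(245, 240, 240, 236, 243, 247, 238, 239, 248, 238,
         240, 244, 246, 245, 235, 237, 244, 238, 236),
  x3 = c(31.6, 30.4, 31.0, 30.9, 31.5, 32.0, 30.9, 32.8, 32.7, 31.0,
         31.3, 31.1, 32.3, 32.0, 31.5, 30.9, 31.4, 30.5, 30.3)
)

# Quadratic form n * d' Sigma^{-1} d, computed without forming the inverse.
quad_form <- function(d, Sigma, n) n * sum(d * solve(Sigma, d))

# Hypothetical helper: chi-square test of H0: mu = mu0 with Sigma known.
chisq_mean_test <- function(X, mu0, Sigma, alpha = 0.05) {
  n <- nrow(X)
  p <- ncol(X)
  W <- quad_form(colMeans(X) - mu0, Sigma, n)
  crit <- qchisq(1 - alpha, df = p)
  list(W = W, critical = crit,
       p.value = pchisq(W, df = p, lower.tail = FALSE),
       reject = W > crit)
}

# Hypothetical helper: power of the same test against the alternative mu1.
chisq_mean_power <- function(n, mu0, mu1, Sigma, alpha = 0.05) {
  p <- length(mu0)
  lambda <- quad_form(mu1 - mu0, Sigma, n)
  crit <- qchisq(1 - alpha, df = p)
  list(lambda = lambda,
       power = pchisq(crit, df = p, ncp = lambda, lower.tail = FALSE))
}

Sigma <- var(X)               # the example sets Sigma = S
mu0 <- c(156, 240, 30.9)
res <- chisq_mean_test(X, mu0, Sigma)
pow <- chisq_mean_power(nrow(X), mu0, c(157, 141, 31.37), Sigma)
print(res)
print(pow)
```

With the inputs of the example, `res$W` and `pow$lambda` should agree with the values `Wsper` and `lambda` printed in the session.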

DEF. III.7F -

The (Student) t distribution with $n$ degrees of freedom is obtained as

$$T = \frac{Z}{(K/n)^{1/2}} \sim t(n) \tag{43}$$

where $Z \sim N(0; 1)$, $K \sim \chi^2(n)$, and $Z$ and $K$ are independent.

The F distribution with $(n_1, n_2)$ degrees of freedom appears as the following ratio

$$F = \frac{K_1/n_1}{K_2/n_2} \sim F(n_1, n_2) \tag{44}$$

where $K_1 \sim \chi^2(n_1)$, $K_2 \sim \chi^2(n_2)$, and $K_1$, $K_2$ are independent. It is clearly seen from (43) that $T^2 \sim F(1, n)$, since $Z^2 \sim \chi^2(1)$.
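The relation between $T^2$ and $F(1, n)$ can be checked on quantiles: if $T \sim t(n)$, the event $|T| \leq t_{1-\alpha/2}(n)$ is the same as $T^2 \leq F_{1-\alpha}(1, n)$. A minimal sketch, where the values of `n` and `alpha` are arbitrary choices made for illustration:

```r
n <- 18        # degrees of freedom (arbitrary illustrative value)
alpha <- 0.05  # significance level (arbitrary illustrative value)

# Squared two-sided t quantile and the corresponding F(1, n) quantile.
t_quantile_sq <- qt(1 - alpha / 2, df = n)^2
f_quantile <- qf(1 - alpha, df1 = 1, df2 = n)

print(c(t_quantile_sq = t_quantile_sq, f_quantile = f_quantile))
```

The two printed numbers should coincide up to floating-point error.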

PROP. III.7G - For $i = 1, ..., n$, let the $X_i$ be i.i.d. variables from a normal population $N(\mu; \sigma^2)$. If $S^2 = \frac{1}{n-1}\sum_{i=1}^{n}(X_i - \bar X)^2$, then the estimated standardization of the mean

$$T = \frac{\bar X - \mu}{S/\sqrt{n}}$$

obeys a Student t distribution with $n - 1$ degrees of freedom.

If two independent samples $\{X_i\}_i$ and $\{Y_j\}_j$ ($i = 1, ..., n_1$; $j = 1, ..., n_2$) are taken from normal populations with the same variance, the ratio

$$F = S_1^2 / S_2^2$$

obeys a Fisher distribution with $(n_1 - 1, n_2 - 1)$ degrees of freedom.

Proof Since $\bar X \sim N(\mu; \sigma^2/n)$, we have:

$$T = \frac{\bar X - \mu}{S/\sqrt{n}} = \frac{\bar X - \mu}{\sigma/\sqrt{n}} \cdot \frac{\sigma/\sqrt{n}}{S/\sqrt{n}} = \frac{U}{S/\sigma} = \frac{U}{\sqrt{K/(n-1)}}$$

where

$$U = \frac{\bar X - \mu}{\sigma/\sqrt{n}}, \qquad K = \frac{S^2 \cdot (n-1)}{\sigma^2}$$

are independent with $U \sim N(0; 1)$ and $K \sim \chi^2(n - 1)$.

Finally, under the assumption of equal variance $\sigma^2$,

$$\frac{S_1^2}{S_2^2} = \frac{\frac{1}{n_1 - 1} S_1^2 \cdot (n_1 - 1)/\sigma^2}{\frac{1}{n_2 - 1} S_2^2 \cdot (n_2 - 1)/\sigma^2} = \frac{K_1/(n_1 - 1)}{K_2/(n_2 - 1)}$$

where $K_1$ and $K_2$ are independent, $K_1 \sim \chi^2(n_1 - 1)$, $K_2 \sim \chi^2(n_2 - 1)$. $\square$

> # confidence interval for the mean of x1 (first column of the table above)
> t.test(x1)

        One Sample t-test

data:  x1
t = 214.0444, df = 18, p-value < 2.2e-16
alternative hypothesis: true mean is not equal to 0
95 percent confidence interval:
 155.459 158.541
sample estimates:
mean of x
      157

> # one-sided test on a mean: H_0: mu = 155.5; H_1: mu > 155.5
> t.test(x1,alternative="greater",mu=155.5)

        One Sample t-test

data:  x1
t = 2.045, df = 18, p-value = 0.02788
alternative hypothesis: true mean is greater than 155.5
95 percent confidence interval:
 155.7281      Inf
sample estimates:
mean of x
      157

> # "ratio test": test on the ratio of the variances of x2 and x1
> var(x1)/var(x2)
[1] 0.6133333
> var(x2)/var(x1)
[1] 1.630435
> # the 0.95 quantile of Fisher's F is
> qf(0.95,length(x2)-1,length(x1)-1)
[1] 2.217197
> pvalue <- 1-pf(var(x2)/var(x1),length(x2)-1,length(x1)-1)
> pvalue
[1] 0.1545287
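The same variance-ratio test is available through the built-in function `var.test` of R's `stats` package. The sketch below assumes the one-sided alternative "variance of x2 greater than variance of x1", which is the reading under which the p-value of the session above is an upper-tail F probability; `x1` and `x2` are the first two columns of the sparrow table.

```r
# First two columns of the sparrow table of Ex. III.7E.
x1 <- c(156, 154, 153, 153, 155, 163, 157, 155, 164, 158,
        158, 160, 161, 157, 157, 156, 158, 153, 155)
x2 <- c(245, 240, 240, 236, 243, 247, 238, 239, 248, 238,
        240, 244, 246, 245, 235, 237, 244, 238, 236)

# F test on the ratio var(x2)/var(x1), one-sided alternative (assumed here).
res <- var.test(x2, x1, alternative = "greater")

f_stat <- unname(res$statistic)  # var(x2) / var(x1)
p_val  <- res$p.value            # upper-tail probability of F(18, 18)
print(c(F = f_stat, p.value = p_val))
```

Since the p-value is larger than 0.05, the hypothesis of equal variances is not rejected at the 5% level, in agreement with the comparison of the ratio with the 0.95 quantile above.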

Given a sample $x_1, ..., x_n$ from $N_p(\mu, \Sigma)$, we want to test $H_\circ : \mu = \mu_\circ$ against $H_1 : \mu \neq \mu_\circ$.

In Prop. III.7D we used the fact that under $H_\circ : \mu = \mu_\circ$

$$n(\bar x - \mu_\circ)^T \Sigma^{-1} (\bar x - \mu_\circ) \sim \chi^2(p)$$

and hence a critical region for $H_\circ$ is

$$\{x : n(\bar x - \mu_\circ)^T \Sigma^{-1} (\bar x - \mu_\circ) \geq \chi^2_{1-\alpha}(p)\}.$$

That test is realistic for large samples: when $n$ is large enough the sample variance $S$ is nearly equal to the parameter variance $\Sigma$. Now consider the general case of unknown $\Sigma$.

PROP. III.7H - Testing for a multivariate population mean if $\Sigma$ is unknown.

Let $x_1, ..., x_n$ be a random sample from $N_p(\mu, \Sigma)$, with unknown $\Sigma$. Under the hypothesis $H_\circ : \mu = \mu_\circ$, and up to a suitable factor,

$$T_\circ^2 = n(\bar x - \mu_\circ)^T S^{-1} (\bar x - \mu_\circ)$$

obeys an F distribution with $p, n - p$ degrees of freedom:

$$F_\circ = \frac{T_\circ^2}{n - 1} \cdot \frac{n - p}{p} \sim F_{p, n-p}.$$

The null hypothesis $H_\circ$ is rejected if

$$F_\circ \geq F_{1-\alpha}(p, n - p)$$

where $P[F(p, n - p) \leq F_{1-\alpha}(p, n - p)] = 1 - \alpha$.

When $H_\circ$ is false, the F distribution is non-central (see footnote 3) with non-centrality parameter $\lambda = n(\mu - \mu_\circ)^T \Sigma^{-1} (\mu - \mu_\circ)$. Thus

$$\frac{T_\circ^2}{n - 1} \cdot \frac{n - p}{p} \sim F(p, n - p; \lambda).$$

The power of the test for a critical region of size $\alpha$ is

$$P[F(p, n - p; \lambda) \geq F_{1-\alpha}(p, n - p)].$$

Proof. Parimal Mukhopadhyay: Multivariate Statistical Analysis, World Scientific 2009, pages 114 and 131. $\square$

Footnote 3. The non-central F distribution with $(n_1, n_2)$ degrees of freedom and non-centrality parameter $\lambda$ is obtained from

$$F = \frac{K_1/n_1}{K_2/n_2}$$

where $K_1 \sim \chi^2(n_1, \lambda)$ is non-central, $K_2 \sim \chi^2(n_2)$ is central, and $K_1$, $K_2$ are independent. The non-central F distribution is written $F \sim F(n_1, n_2; \lambda)$.

> # test on a multivariate mean with unknown Sigma
> # T is the data frame holding the sparrow table of Ex. III.7E
> x1 <- T[,1]
> x2 <- T[,2]
> x3 <- T[,3]
> # test of H_0: mu=(160,240,30)
> mu0 <- c(160,240,30)
> xbar <- c(mean(x1),mean(x2),mean(x3))
> X <- matrix(c(x1,x2,x3),ncol=3)
> S <- var(X)
> S
          [,1]      [,2]      [,3]
[1,] 10.222222  9.000000 1.3666667
[2,]  9.000000 16.666667 1.8444444
[3,]  1.366667  1.844444 0.5231579
> solve(S)
            [,1]        [,2]       [,3]
[1,]  0.20277502 -0.08342674 -0.2355883
[2,] -0.08342674  0.13271132 -0.2499477
[3,] -0.23558829 -0.24994769  3.4081208
> Tquadro <- 19*t(xbar-mu0)%*%solve(S)%*%(xbar-mu0)
> F0 <- Tquadro*(19-3)/((19-1)*3)
> F0
         [,1]
[1,] 57.10946
> # 0.95 quantile of F(3,19-3)
> qf(0.95,df1=3,df2=16)
[1] 3.238872
> pvalue <- 1-pf(as.numeric(F0),df1=3,df2=16)
> pvalue
[1] 9.089796e-09
> # power of the test taking mu1 = (161,240,31)
> lambda <- ...
> mu1 <- c(161,240,31)
> lambda <- ...
> potenza <- ...
> potenza
[1] 0.2781924
> # power taking mu1 = (161,241,31)
> mu1 <- c(161,241,31)
> lambda <- ...
> potenza <- ...
> potenza
[1] 0.9998438

The test therefore strongly rejects the null hypothesis.
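The test of Prop. III.7H can be sketched as a pair of functions. The names `hotelling_one_sample` and `hotelling_power` are hypothetical helpers introduced here; the power function takes the noncentrality parameter $\lambda$ as an input and evaluates the noncentral F probability of Prop. III.7H through the `ncp` argument of `pf`. The data are again the first three columns of the sparrow table.

```r
# First three columns of the sparrow table of Ex. III.7E.
X <- cbind(
  x1 = c(156, 154, 153, 153, 155, 163, 157, 155, 164, 158,
         158, 160, 161, 157, 157, 156, 158, 153, 155),
  x2 = c(245, 240, 240, 236, 243, 247, 238, 239, 248, 238,
         240, 244, 246, 245, 235, 237, 244, 238, 236),
  x3 = c(31.6, 30.4, 31.0, 30.9, 31.5, 32.0, 30.9, 32.8, 32.7, 31.0,
         31.3, 31.1, 32.3, 32.0, 31.5, 30.9, 31.4, 30.5, 30.3)
)

# Hypothetical helper: test of H0: mu = mu0 with unknown Sigma (Prop. III.7H).
hotelling_one_sample <- function(X, mu0, alpha = 0.05) {
  n <- nrow(X)
  p <- ncol(X)
  d <- colMeans(X) - mu0
  T2 <- n * sum(d * solve(var(X), d))        # n d' S^{-1} d
  F0 <- T2 * (n - p) / ((n - 1) * p)         # rescaled statistic
  crit <- qf(1 - alpha, df1 = p, df2 = n - p)
  list(T2 = T2, F0 = F0, critical = crit,
       p.value = pf(F0, df1 = p, df2 = n - p, lower.tail = FALSE),
       reject = F0 >= crit)
}

# Hypothetical helper: power for a given noncentrality parameter lambda.
hotelling_power <- function(lambda, n, p, alpha = 0.05) {
  crit <- qf(1 - alpha, df1 = p, df2 = n - p)
  pf(crit, df1 = p, df2 = n - p, ncp = lambda, lower.tail = FALSE)
}

res <- hotelling_one_sample(X, mu0 = c(160, 240, 30))
print(res)

# Power as a function of lambda, for a few illustrative values.
print(sapply(c(5, 20, 50), hotelling_power, n = nrow(X), p = ncol(X)))
```

For $\mu_\circ = (160, 240, 30)$ the value `res$F0` should agree with the `F0` printed in the session above.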

PROP. III.7I - CONFIDENCE REGION AND SIMULTANEOUS CONFIDENCE INTERVALS

Let $X_1, ..., X_n$ be a random sample from $N_p(\mu, \Sigma)$. A $(1 - \alpha)$ confidence region for the mean $\mu$ is the ellipsoid defined by

$$\left\{\mu \in R^p : n(\mu - \bar x)^T S^{-1} (\mu - \bar x) \leq \frac{(n - 1)p}{n - p} F_{1-\alpha}(p; n - p)\right\}$$

where $\bar x$ is the observed sample mean.

Simultaneous confidence intervals for the $p$ variables are obtained as projections of the ellipsoid onto the $p$ axes. Alternatively, at the level $\alpha$, Bonferroni's simultaneous confidence intervals for $m$ of the means are obtained from the usual univariate intervals with level $\alpha/m$ instead of $\alpha$.

Proof We know that

$$P\left[n(\bar X - \mu)^T S^{-1} (\bar X - \mu) \leq \frac{(n - 1)p}{n - p} F_{1-\alpha}(p; n - p)\right] = 1 - \alpha.$$

Hence the confidence region is the above ellipsoid with center $\bar x$. Beginning at the center, the axes of the ellipsoid are

$$\pm \sqrt{\lambda_i} \sqrt{\frac{(n - 1)p}{n(n - p)} F_{1-\alpha}(p, n - p)} \; e_i$$

where $(\lambda_i, e_i)$ ($i = 1, ..., p$) are the (eigenvalue, eigenvector) pairs of $S$.

Now let $m \leq p$ and, for $i = 1, ..., m$, let $A_i$ be the confidence statement

$A_i$ : the interval $\bar x_i \pm t_{1-\alpha/2}(n - 1) s_i/\sqrt{n}$ contains $\mu_i$

on the $i$-th component $\mu_i$ of $\mu$. Then $P(A_i) = 1 - \alpha$. By using De Morgan's law and Boole's inequality, i.e.

$$\left(\cap_{i=1}^{m} A_i\right)^C = \cup_{i=1}^{m} A_i^C; \qquad P\left(\cup_{i=1}^{m} A_i\right) \leq \sum_{i=1}^{m} P(A_i),$$

we have

$$P\left(\cap_{i=1}^{m} A_i\right) = 1 - P\left[\left(\cap_{i=1}^{m} A_i\right)^C\right] = 1 - P\left[\cup_{i=1}^{m} A_i^C\right] \geq 1 - \sum_{i=1}^{m} P(A_i^C) = 1 - m\alpha.$$

So the simultaneous confidence of the $m$ intervals is bounded below by $1 - m\alpha$; for instance, for $m$ independent intervals,

$$(1 - \alpha)^m \geq 1 - m\alpha.$$

Therefore we can use $\alpha/m$ in place of $\alpha$ for each $i = 1, ..., m$, which ensures a global confidence of at least $1 - \alpha$ for the $m$ means. For example, denoting by $x, y, z, ...$ the $m$ variables, the simultaneous Bonferroni intervals are:

$$\mu_x = \bar x \pm t_{1-\frac{\alpha}{2m}}(n - 1) \frac{s_x}{\sqrt{n}}, \qquad \mu_y = \bar y \pm t_{1-\frac{\alpha}{2m}}(n - 1) \frac{s_y}{\sqrt{n}}, \quad \text{etc.} \quad \square$$
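The quantities of Prop. III.7I can be computed for the sparrow data as follows. This is a sketch under the assumptions $m = p = 3$ and $\alpha = 0.05$, which are choices made here for illustration. It compares the ordinary univariate intervals, the Bonferroni intervals, and the projections of the ellipsoid onto the axes, whose half-widths are $\sqrt{c^2 s_{ii}}$ with $c^2 = \frac{(n-1)p}{n(n-p)} F_{1-\alpha}(p, n-p)$.

```r
# First three columns of the sparrow table of Ex. III.7E.
X <- cbind(
  x1 = c(156, 154, 153, 153, 155, 163, 157, 155, 164, 158,
         158, 160, 161, 157, 157, 156, 158, 153, 155),
  x2 = c(245, 240, 240, 236, 243, 247, 238, 239, 248, 238,
         240, 244, 246, 245, 235, 237, 244, 238, 236),
  x3 = c(31.6, 30.4, 31.0, 30.9, 31.5, 32.0, 30.9, 32.8, 32.7, 31.0,
         31.3, 31.1, 32.3, 32.0, 31.5, 30.9, 31.4, 30.5, 30.3)
)
n <- nrow(X); p <- ncol(X)
m <- p          # number of simultaneous intervals (assumed: all p means)
alpha <- 0.05   # global level (assumed)

xbar <- colMeans(X)
S <- var(X)
se <- sqrt(diag(S) / n)   # s_i / sqrt(n)

# Ordinary univariate intervals, each at level alpha.
t_single <- qt(1 - alpha / 2, df = n - 1)
single <- cbind(lower = xbar - t_single * se, upper = xbar + t_single * se)

# Bonferroni intervals: alpha / m in place of alpha.
t_bonf <- qt(1 - alpha / (2 * m), df = n - 1)
bonferroni <- cbind(lower = xbar - t_bonf * se, upper = xbar + t_bonf * se)

# Confidence ellipsoid: half-lengths of the axes and projections on the axes.
c2 <- (n - 1) * p / (n * (n - p)) * qf(1 - alpha, df1 = p, df2 = n - p)
eig <- eigen(S, symmetric = TRUE)
half_axes <- sqrt(eig$values * c2)   # along the eigenvectors eig$vectors
proj_half <- sqrt(c2 * diag(S))
projection <- cbind(lower = xbar - proj_half, upper = xbar + proj_half)

print(single); print(bonferroni); print(projection); print(half_axes)
```

The first row of `single` reproduces the 95% interval returned by `t.test(x1)` earlier in these notes. For these data the Bonferroni intervals are wider than the univariate ones and narrower than the projections of the ellipsoid.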