II. FINITE DIFFERENCE METHOD 1 Difference formulae

II. FINITE DIFFERENCE METHOD 1 Difference formulae

II. FINITE DIFFERENCE METHOD 1 Difference formulae

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

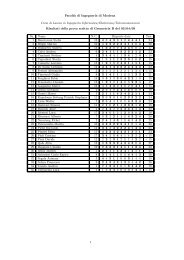

Computational Methods and advanced Statistics Tools

II. FINITE DIFFERENCE METHOD

1 Difference formulae

”God whispers in our joys; he speaks in our conscience. But in our sorrows he<br />

shouts. They are the megaphone with which he awakens a deaf world”.<br />

(Clive Staples Lewis, 1898-1963)<br />

Finite difference formulae are the basis of methods for the numerical solution of differential equations. Assuming that the function $f(x)$ is known at the points $x - h$ and $x + h$, we can estimate $f'(x)$, that is the slope of the curve $y = f(x)$ at the point $x$, by means of the slopes of different secant lines.

Def. (Forward, backward and central differences) Let $f$ be a $C^1$ function defined at least in some neighbourhood of the point $x$. The forward difference (obtained by joining the points $(x, f(x))$ and $(x + h, f(x + h))$)

$$D_+ f := \frac{f(x + h) - f(x)}{h},$$

the backward difference (obtained by joining the points $(x, f(x))$ and $(x - h, f(x - h))$)

$$D_- f := \frac{f(x) - f(x - h)}{h},$$

and the central difference (obtained by joining $(x - h, f(x - h))$ and $(x + h, f(x + h))$)

$$D_c f := \frac{f(x + h) - f(x - h)}{2h}$$

define the difference formulae

$$f'(x) \approx D_+ f, \qquad f'(x) \approx D_- f, \qquad f'(x) \approx D_c f.$$

Proceeding in an analogous way, higher order derivatives can be approximated. For example, approximating the function $f$ by a second degree polynomial through the points $(x - h, f(x - h))$, $(x, f(x))$ and $(x + h, f(x + h))$, it turns out that $D_+(D_- f)$ is a central difference approximation of $f''(x)$:

$$D_+(D_- f) = \frac{1}{h}\left[ D_- f(x + h) - D_- f(x) \right] = \frac{1}{h}\left[ \frac{1}{h}\big(f(x + h) - f(x)\big) - \frac{1}{h}\big(f(x) - f(x - h)\big) \right]$$

$$= \frac{1}{h^2}\left[ f(x + h) - 2f(x) + f(x - h) \right] \approx f''(x).$$
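The four difference quotients above translate directly into R. The following is a minimal sketch; the function names `forward_diff`, `backward_diff`, `central_diff` and `second_diff` are illustrative choices, not names used in the original notes.

```r
# Difference quotients for a function f at the point x with increment h.
forward_diff  <- function(f, x, h) (f(x + h) - f(x)) / h
backward_diff <- function(f, x, h) (f(x) - f(x - h)) / h
central_diff  <- function(f, x, h) (f(x + h) - f(x - h)) / (2 * h)
# Second difference D+(D- f): a central approximation of f''(x).
second_diff   <- function(f, x, h) (f(x + h) - 2 * f(x) + f(x - h)) / h^2

# Example: f = exp at x = 1, where f'(1) = f''(1) = e.
h <- 0.1
approximations <- c(forward  = forward_diff(exp, 1, h),
                    backward = backward_diff(exp, 1, h),
                    central  = central_diff(exp, 1, h),
                    second   = second_diff(exp, 1, h))
errors <- approximations - exp(1)
print(errors)   # errors with respect to the exact value e
```

The central quotient is typically much closer to the exact derivative than the one-sided ones for the same $h$.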

The study of the truncation error can be introduced by an example: if the first derivative of the function $e^x$ is approximated by the forward (respectively central) difference operator at the point $x = 1$, the truncation errors $D_+ e^x - e^x$ (respectively $D_c e^x - e^x$) for increments $h, h/2, h/4, \ldots$ are listed in the following table. The ratio between each error and the subsequent one is denoted by $r$:

| $h$ | $D_+ e^x - e^x$ | $r$ | $D_c e^x - e^x$ | $r$ |
|-------|----------|------|-----------|------|
| 0.4   | 0.624013 | 2.14 | 0.0730696 | 4.02 |
| 0.2   | 0.290893 | 2.06 | 0.0181581 | 4.00 |
| 0.1   | 0.140560 | 2.03 | 0.0045327 | 4.00 |
| 0.05  | 0.069103 | 2.01 | 0.0011327 | 4.00 |
| 0.025 | 0.034263 |      | 0.0002831 |      |

If the forward difference operator is applied, the error is approximately halved when the increment is halved. In the case of the central difference, the error is approximately reduced by a factor 4 when $h$ is halved. Such results suggest that the error behaves linearly, $O(h)$, as $h \to 0$ in the first case, and quadratically, $O(h^2)$, in the other case.
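The table can be recomputed with a few lines of R. This is only a sketch: the data frame layout and the helper `ratio` are illustrative assumptions.

```r
h <- 0.4 / 2^(0:4)                       # 0.4, 0.2, 0.1, 0.05, 0.025
err_forward <- (exp(1 + h) - exp(1)) / h - exp(1)
err_central <- (exp(1 + h) - exp(1 - h)) / (2 * h) - exp(1)

# Ratio between each error and the subsequent one (NA on the last row).
ratio <- function(e) c(e[-length(e)] / e[-1], NA)

tab <- data.frame(h = h,
                  err_forward = err_forward, r_forward = ratio(err_forward),
                  err_central = err_central, r_central = ratio(err_central))
print(tab, digits = 6)
```

The ratios approach 2 and 4 respectively as $h$ decreases, in agreement with the orders $O(h)$ and $O(h^2)$.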

Thm. II.1.1 (On truncation error) Let $f$ be sufficiently regular and let $E_T$ be the truncation error. Then $E_T = O(h)$ in the case of a forward difference and $E_T = O(h^2)$ in the case of a central difference, as $h \to 0$. Besides, for the central difference $D_+(D_-)$ approximating $f''(x)$, the truncation error is $O(h^2)$ as $h \to 0$.

PROOF.

Let us apply Taylor's formula (see footnote 1). If the function is twice continuously differentiable,

$$E_T := \frac{f(x + h) - f(x)}{h} - f'(x) = \frac{1}{h}\left( f(x) + h f'(x) + \frac{h^2}{2} f''(\xi) - f(x) \right) - f'(x) = \frac{h}{2} f''(\xi),$$

where $\xi$ is a suitable point of $(x, x + h)$. If the function is $C^3$ then the central difference approximation gives the truncation error

$$E_T = \frac{1}{2h}\left[ f(x + h) - f(x - h) \right] - f'(x)$$

$$= \frac{1}{2h}\left[ f(x) + h f'(x) + \frac{h^2}{2} f''(x) + \frac{h^3}{6} f'''(\xi_1) - f(x) + h f'(x) - \frac{h^2}{2} f''(x) + \frac{h^3}{6} f'''(\xi_2) \right] - f'(x)$$

$$= \frac{1}{2h}\left[ \frac{h^3}{6} f'''(\xi_1) + \frac{h^3}{6} f'''(\xi_2) \right] = O(h^2).$$

Finally, if $f \in C^4$, we have

$$D_+(D_- f) = \frac{1}{h^2}\left[ f(x + h) - 2f(x) + f(x - h) \right]$$

$$= \frac{1}{h^2}\left[ f(x) + h f'(x) + \frac{h^2}{2} f''(x) + \frac{h^3}{6} f^{(3)}(x) + O(h^4) - 2f(x) + f(x) - h f'(x) + \frac{h^2}{2} f''(x) - \frac{h^3}{6} f^{(3)}(x) + O(h^4) \right]$$

$$= \frac{1}{h^2}\left[ \left( \frac{h^2}{2} + \frac{h^2}{2} \right) f''(x) + O(h^4) \right] = f''(x) + O(h^2). \qquad \square$$

The influence of rounding errors in difference formulae for derivatives is described in the following table, where each central difference is computed for the function $e^x$ at $x = 1$. Computations were performed with precision $\epsilon = 2.22 \times 10^{-16}$. As $h$ decreases, the global error is reduced down to a certain value of $h$, then it increases. Such a behaviour is due to the fact that the values of $e^{1 \pm h}$ are computed approximately, and for small $h$ cancellations take place.

Footnote 1. Recall Taylor's formula: if $f$ is $C^{r+1}$ in some neighbourhood of $x$ then the $r$-th remainder satisfies

$$f(x + h) - \left[ f(x) + h f'(x) + \frac{h^2}{2} f''(x) + \ldots + \frac{h^r}{r!} f^{(r)}(x) \right] = O(h^{r+1}), \quad \text{as } h \to 0.$$

Here Landau's symbol $O(h^m)$ as $h \to 0$ denotes any infinitesimal function $S(h)$ such that $S(h)/h^m$ stays bounded as $h \to 0$. There are several explicit expressions of the remainder: for example, if $f \in C^{r+1}$ there is $\xi \in [x, x + h]$ such that the $r$-th remainder is equal to $\frac{h^{r+1}}{(r+1)!} f^{(r+1)}(\xi)$ (if, say, $h > 0$).

| $h$ | $D_c e^x - e^x$ at $x = 1$ |
|------------|---------------|
| $10^{-1}$  | 0.004532      |
| $10^{-2}$  | 0.0000453     |
| $10^{-3}$  | 0.000000453   |
| $10^{-4}$  | 0.00000000453 |
| $10^{-8}$  | -0.0000000165 |
| $10^{-9}$  | 0.0000000740  |
| $10^{-10}$ | 0.000000224   |
| $10^{-14}$ | -0.002172     |
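The same experiment can be repeated in R, which uses double precision by default. This is a sketch; the values obtained for very small $h$ depend on the machine and on the library implementation of `exp`, so they will generally differ from those printed in the table.

```r
h <- 10^(-(1:14))
# Error of the central difference for exp at x = 1.
err <- (exp(1 + h) - exp(1 - h)) / (2 * h) - exp(1)
print(data.frame(h = h, error = err), digits = 3)
cat("machine epsilon:", .Machine$double.eps, "\n")
```

For moderate $h$ the error decreases like $h^2$; for very small $h$ the cancellation in the numerator dominates and the error grows again.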

Remark II.1A Let us denote by $\tilde f(x \pm h)$ the approximate values of $f(x \pm h)$ and let us suppose that

$$|\tilde f(x \pm h) - f(x \pm h)| \le \epsilon,$$

where $\epsilon$ depends only on the precision of the machine used. Then, denoting by $\tilde D_c$ the numerically computed central difference,

$$|\tilde D_c f(x) - f'(x)| \le |\tilde D_c f(x) - D_c f(x)| + |D_c f(x) - f'(x)| \le \frac{\epsilon}{h} + \frac{h^2}{6} |f^{(3)}(\xi)|.$$

The bound describes the real situation: there is a component of the error which diverges as $h \to 0$. In other words, for decreasing $h$ rounding errors can prevail over the truncation error, with unreliable results. The situation is described in Figure II.1. An optimal choice of $h$ corresponds to the minimum of the function

$$\frac{\epsilon}{h} + \frac{h^2}{6} \max |f^{(3)}|.$$
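The minimiser of this bound has a closed form: setting the derivative $-\epsilon/h^2 + hM/3$ to zero, with $M = \max |f^{(3)}|$, gives $h_{opt} = (3\epsilon/M)^{1/3}$. The sketch below checks this numerically for $f = e^x$ near $x = 1$; the value `M <- exp(1.1)` is an assumed bound chosen for illustration only.

```r
eps <- .Machine$double.eps            # about 2.22e-16
M   <- exp(1.1)                       # assumed bound for |f'''| near x = 1
bound <- function(h) eps / h + h^2 * M / 6

# Closed-form minimiser of the bound.
h_opt <- (3 * eps / M)^(1/3)

# Numerical cross-check, minimising over t = log10(h).
num <- optimize(function(t) bound(10^t), interval = c(-12, -1))
cat("closed form h_opt:", h_opt, "\n")
cat("numerical   h_opt:", 10^num$minimum, "\n")
```

Under these assumptions the optimal increment is of the order of $10^{-6}$ to $10^{-5}$, far larger than the machine precision itself.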

2 Finite difference method<br />

Does science make the Creator superfluous? No. The sentence ”God created the<br />

world” is not an outmoded scientific statement. It is a theological statement that is<br />

concerned with the relation of the world to God. Being created is a lasting quality<br />

in things and a fundamental truth about them. (YouCat 41)<br />

Figure 1: Rounding error and truncation error in the approximation of the derivative by central differences. (Axes: $h$, error($h$); curves: total error, truncation error, rounding error.)

The difference method is based on the choice of subintervals $[x_i, x_{i+1}]$ of $[a, b]$ and the approximation of derivatives by suitable difference operators. We take equal intervals of length $h = (b - a)/n$, so the subdivision points are given by

$$x_i = a + ih, \quad i = 0, 1, \ldots, n.$$

We denote by $\{\bar y_i,\ i = 1, \ldots, n\}$ the discrete approximation of the values $y(x_i)$ of the continuous-variable solution. Let us analyze the method in the case of the linear problem

$$\begin{cases} -y''(x) + q(x) y(x) = f(x), & a < x < b \\ y(a) = \alpha, \quad y(b) = \beta \end{cases} \tag{1}$$

where $q(x)$, $f(x)$ are assigned functions, continuous in the interval $[a, b]$. Under the hypothesis

$$q(x) \ge 0 \tag{2}$$

existence and uniqueness of the solution can be proved for the problem (1). Moreover, the solution is $C^2$ and any further regularity assumption on the data $q(x)$, $f(x)$ implies further regularity of the solution: for example, if $f(x)$, $q(x)$ admit a second derivative, the solution has a fourth derivative. At each node $x_i$, the second derivative is discretized by means of the difference operator

$$y''(x_i) \approx \frac{y(x_{i+1}) - 2y(x_i) + y(x_{i-1})}{h^2}.$$

Inserting this approximation in equation (1) for $i = 1, 2, \ldots, n - 1$ and replacing $y(x_i)$ by $\bar y_i$, we obtain the following linear equations

$$-\frac{\bar y_{i+1} - 2\bar y_i + \bar y_{i-1}}{h^2} + q(x_i)\bar y_i = f(x_i), \quad i = 1, 2, \ldots, n - 1$$

or

$$-\bar y_{i+1} + \bar y_i (2 + h^2 q_i) - \bar y_{i-1} = h^2 f_i, \quad i = 1, 2, \ldots, n - 1 \tag{3}$$

where $q_i = q(x_i)$, $f_i = f(x_i)$. In particular, for $i = 1$ and $i = n - 1$, taking into account the boundary conditions, we have the equations

$$\text{for } i = 1: \quad (2 + h^2 q_1)\bar y_1 - \bar y_2 = \alpha + h^2 f_1 \tag{4}$$

$$\text{for } i = n - 1: \quad (2 + h^2 q_{n-1})\bar y_{n-1} - \bar y_{n-2} = \beta + h^2 f_{n-1} \tag{5}$$

Thm. II.2.1 (Existence and uniqueness of the numerical solution) The difference method applied to the problem (1) leads to a discrete problem which is the linear system of $n - 1$ equations

$$A \bar y = b \tag{6}$$

where

$$\bar y = (\bar y_1, \ldots, \bar y_{n-2}, \bar y_{n-1})^T,$$

$$A = \begin{pmatrix} (2 + h^2 q_1) & -1 & & & 0 \\ -1 & (2 + h^2 q_2) & -1 & & \\ & \ddots & \ddots & \ddots & \\ & & -1 & (2 + h^2 q_{n-2}) & -1 \\ 0 & & & -1 & (2 + h^2 q_{n-1}) \end{pmatrix}$$

and

$$b = (h^2 f_1 + \alpha,\ h^2 f_2,\ \ldots,\ h^2 f_{n-2},\ h^2 f_{n-1} + \beta)^T.$$

Under the hypothesis $q(x) \ge 0$ such a system admits a unique solution.

PROOF

Equations (3), (4), (5) can be written in vector form as stated. Now, from linear algebra, a sufficient condition for non-singularity is

$$z^T A z \ne 0 \ \ \forall z \ne (0, \ldots, 0) \implies A \text{ is non-singular}$$

(if $Az = 0$ for some $z \ne 0$, then $z^T A z = 0$).

By hypothesis (2), $A$ satisfies:

$$z^T A z \ge (z_1, \ldots, z_{n-1}) \begin{pmatrix} 2 & -1 & & & 0 \\ -1 & 2 & -1 & & \\ & \ddots & \ddots & \ddots & \\ & & -1 & 2 & -1 \\ 0 & & & -1 & 2 \end{pmatrix} \begin{pmatrix} z_1 \\ \vdots \\ z_{n-1} \end{pmatrix}$$

$$= (z_1, \ldots, z_{n-1}) \begin{pmatrix} 2z_1 - z_2 \\ -z_1 + 2z_2 - z_3 \\ \vdots \\ -z_{j-1} + 2z_j - z_{j+1} \\ \vdots \\ -z_{n-2} + 2z_{n-1} \end{pmatrix}$$

$$= 2z_1^2 - z_1 z_2 - z_1 z_2 + 2z_2^2 - z_2 z_3 + \ldots - z_{n-2} z_{n-1} + 2z_{n-1}^2$$

$$= z_1^2 + (z_1 - z_2)^2 + \ldots + (z_{n-2} - z_{n-1})^2 + z_{n-1}^2 > 0$$

for any $(z_1, \ldots, z_{n-1}) \ne (0, \ldots, 0)$. Thus $A$ is positive definite, hence nonsingular: the discrete model is therefore solvable under the same hypothesis as the continuous one, and the statement is proved. $\square$

The discrete solution $\bar y_i$, $i = 0, 1, \ldots, n$, depends on the discretization step $h$. An important question is therefore the convergence problem as $h \to 0$: is it possible to approximate the continuous solution, to within a fixed precision, for sufficiently small $h$? The approximation should be pointwise, since the solution of the boundary value problem is continuous. The study of convergence is introduced by the following example.

Example II.2A Let us consider the problem

$$-y''(x) + y(x) = 0, \quad y(0) = 0, \quad y(1) = \sinh(1)$$

which admits the analytic solution $y(x) = \sinh(x)$. For $n = 4$, i.e. $h = 0.25$, the discrete problem consists in solving the system $A \bar y = b$ with unknown $(\bar y_1, \bar y_2, \bar y_3)$ and

$$A = \begin{pmatrix} (2 + h^2) & -1 & 0 \\ -1 & (2 + h^2) & -1 \\ 0 & -1 & (2 + h^2) \end{pmatrix}; \qquad b = \begin{pmatrix} 0 \\ 0 \\ \sinh(1) \end{pmatrix}$$

The numerical computation can be performed by an R program (the R language and environment can be obtained from CRAN, or from www.r-project.org).

[Program listing not reproduced in the source.]
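Since the original listing is not available, the following is a minimal sketch of how the system (6) could be assembled and solved in R. The function name `fd_dirichlet` and its argument list are assumptions made for illustration; `q` and `f` are assumed to be vectorised R functions, and `n` is assumed to be at least 3.

```r
# Solve -y'' + q(x) y = f(x) on [a, b], y(a) = alpha, y(b) = beta,
# with n equal subintervals (hypothetical helper, not the source's program).
fd_dirichlet <- function(q, f, a, b, alpha, beta, n) {
  h  <- (b - a) / n
  x  <- a + (0:n) * h
  xi <- x[2:n]                               # interior nodes x_1, ..., x_{n-1}
  m  <- n - 1
  A  <- diag(2 + h^2 * q(xi), nrow = m)      # main diagonal of (6)
  A[cbind(1:(m - 1), 2:m)] <- -1             # upper diagonal
  A[cbind(2:m, 1:(m - 1))] <- -1             # lower diagonal
  rhs <- h^2 * f(xi)
  rhs[1] <- rhs[1] + alpha                   # boundary terms, as in (4), (5)
  rhs[m] <- rhs[m] + beta
  list(x = x, y = c(alpha, solve(A, rhs), beta))
}

# Example II.2A: -y'' + y = 0, y(0) = 0, y(1) = sinh(1), exact y = sinh(x).
q1 <- function(x) rep(1, length(x))
f0 <- function(x) rep(0, length(x))
n_values <- c(4, 8, 16)
max_err <- sapply(n_values, function(n) {
  s <- fd_dirichlet(q1, f0, 0, 1, 0, sinh(1), n)
  max(abs(s$y - sinh(s$x)))
})
print(data.frame(n = n_values, h = 1 / n_values, max_error = max_err))
```

A dense matrix and `solve` are used only for brevity; for large `n` a tridiagonal solver would be the natural choice.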

Figure 2: The difference method applied to a problem $y''(x) + y(x) = x^2$, with Dirichlet conditions $y(a) = \alpha$, $y(b) = \beta$. (Panel title: y''+y=x^2, y(−1)=3, y(1)=5; axes: x, y.)

EX. II.2B - Let us consider the Dirichlet problem

$$\begin{cases} y''(x) + x^2 y(x) = \sin(x) \\ y(-4\pi) = 0, \quad y(4\pi) = 0 \end{cases}$$

It is solved by a difference scheme, building an R function with output in Figure 4.

As the above table suggests, the following theorem shows that, if the solution<br />

is sufficiently regular, the truncation error of the solution is O(h 2 ).<br />

Thm. II.2.2 (Convergence) Let $y(x)$ be the solution of the problem with Dirichlet conditions

$$-y''(x) + q(x) y(x) = f(x), \quad y(a) = \alpha, \quad y(b) = \beta$$

with $q(x)$ and $f(x)$ continuous functions on $[a, b]$ and $q(x) \ge 0$. Assume, moreover, that the function $y(x)$ has bounded fourth derivative, $|y^{(4)}(x)| \le M$ for any $x \in [a, b]$. If the approximation of $y(x_i)$ obtained by the difference method is denoted by $\bar y_i$, then for some $C$ independent of $h$,

$$|y(x_i) - \bar y_i| \le C h^2, \quad i = 1, 2, \ldots, n - 1,$$

i.e. the method converges with order 2.

Figure 3: The difference method applied to the harmonic oscillator $-y''(x) + (x^2 - 1) y(x) = 0$ with Dirichlet conditions $y(-3) = y(3) = e^{-4.5}$. (Axes: x, y.)

Figure 4: The difference method applied to the forced harmonic oscillator $y''(x) + x^2 y(x) = \sin(x)$ with Dirichlet conditions $y(-4\pi) = 0$, $y(4\pi) = 0$. (Axes: x, y.)

Concepts of the PROOF

Although we do not give a complete proof, we can distinguish two components of the concept of convergence: consistency and stability. On one hand, the quantity

$$\tau_i = -\frac{1}{h^2}\left[ y(x_{i+1}) - 2y(x_i) + y(x_{i-1}) \right] + q(x_i) y(x_i) - f(x_i) \tag{7}$$

is introduced. It represents the local discretization error: the error committed at the node $x_i$ by the true solution when it is inserted in the discrete scheme. By introducing the appropriate Taylor expansions, as in the proof of Thm. II.1.1, we see that for some $\xi$

$$|\tau_i| = \left| -y''(x_i) - \frac{h^2}{12} y^{(4)}(\xi) + q(x_i) y(x_i) - f(x_i) \right| = \left| \frac{h^2}{12} y^{(4)}(\xi) \right| \le \frac{M h^2}{12}. \tag{8}$$

On the other hand, denoting by $u_h$ the vector of the approximations $(\bar y_i)$, and by $u$ the vector of the true values $y(x_i)$ of the solution, convergence concerns the global error of the solution $E := u - u_h$. Now, by the method, $u_h$ obeys the linear system

$$A u_h = b,$$

where in this argument $A$ and $b$ denote the matrix and the right-hand side of (6) divided by $h^2$, so that each row has the form of the scheme appearing in (7). Hence

$$A u - A u_h = A u - b.$$

Now, the right-hand side is just $\tau$, the vector of the local discretization errors $\tau_i$ in (7), so that

$$A(u - u_h) = \tau.$$

Since $A$ is invertible, the error of the solution can be estimated in norm (see footnote 2):

$$\| E \| = \| A^{-1} \tau \| \le \| A^{-1} \| \, \| \tau \| \tag{9}$$

To ensure convergence two facts have to be verified:

1) $\| \tau \| \to 0$ as $h \to 0$, for some norm: this property is the consistency of the numerical method;

2) $\| A^{-1} \| \le C$, with $C$ independent of $h$: this property is the stability of the numerical method; it holds if the matrix $A^{-1}$ does not diverge in norm as $h \to 0$.

If we choose, for example, the norm $\| w \| := \max_i |w_i|$, we have

$$\| \tau \| = \max_i |\tau_i| \le \frac{M h^2}{12} \to 0 \quad \text{as } h \to 0$$

by (8), whence consistency is proved. As regards stability, one must prove that $A$ is nonsingular uniformly with respect to $h$, for example by showing that the lowest eigenvalue is larger than a positive constant independent of $h$. That can be proved; hence the stability property holds, and the convergence of the method follows. $\square$
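The stability property can be observed numerically. The sketch below takes the scheme written as $-(\bar y_{i+1} - 2\bar y_i + \bar y_{i-1})/h^2 = f_i$ on $[0, 1]$ (the case $q = 0$, an assumption made to keep the check simple) and computes the maximum-norm of the inverse matrix for several values of $n$; the helper name `inv_norm` is illustrative.

```r
# Infinity norm of the inverse of A_h = tridiag(-1, 2, -1) / h^2 on [0, 1].
inv_norm <- function(n) {
  h <- 1 / n
  m <- n - 1
  A <- diag(2, m)
  A[cbind(1:(m - 1), 2:m)] <- -1
  A[cbind(2:m, 1:(m - 1))] <- -1
  norm(solve(A / h^2), type = "I")    # "I": maximum absolute row sum
}

n_values <- c(4, 8, 16, 32, 64)
norms <- sapply(n_values, inv_norm)
print(data.frame(n = n_values, inv_norm = norms))
```

The computed norms stay bounded as $n$ grows, which is what the stability condition 2) requires.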

Footnote 2. In these estimates, the norm of a vector can be the usual Euclidean norm ($\| w \| = \sqrt{w_1^2 + \ldots + w_n^2}$), or the simpler (and topologically equivalent) maximum norm: $\| w \| := \max_i |w_i|$. The norm of a matrix is induced by the choice of the vector norm through the definition

$$\| B \| := \sup_{v \ne 0} \frac{\| B v \|}{\| v \|}.$$

If, for example, the maximum norm for vectors is chosen, the induced norm of a matrix turns out to be the maximum among the row sums (in absolute value):

$$\| B \| = \max_i \sum_{j=1}^{n} |b_{ij}|.$$

In any case, after choosing a vector norm and the induced matrix norm, it is guaranteed that

$$\| B v \| \le \| B \| \cdot \| v \|, \quad \forall v.$$

3 Further applications of the difference method

The creation of the world is, so to speak, a "community project" of the Trinitarian God. The Father is the Creator, the Almighty, The Son is the meaning and heart of the world: "All things were created through him and for him" (Col 1:16). We find out that the world is good for only when we come to know Christ and understand that the world is heading for a destination: the truth, goodness and beauty of the Lord. The Holy Spirit holds everything together: he is the one "that gives life" (Jn 6,63).

Let us analyze the finite difference method applied to convection-diffusion problems.

Ex.II.3A (Convection-diffusion problems with Dirichlet conditions)

$$\begin{cases} -y''(x) + p(x) y'(x) + q(x) y(x) = f(x), & a < x < b \\ y(a) = \alpha, \quad y(b) = \beta \end{cases}$$

where $p(x)$, $q(x)$, $f(x)$ are continuous functions on $[a, b]$ with $q(x) \ge 0$. The analytical problem turns out to admit a unique solution in the class $C^2$. Setting $h = (b - a)/n$, for given $n \in \mathbb{N}$, let us consider the following difference scheme obtained by use of central difference operators:

$$-\frac{\bar y_{i+1} - 2\bar y_i + \bar y_{i-1}}{h^2} + p(x_i) \frac{\bar y_{i+1} - \bar y_{i-1}}{2h} + q(x_i) \bar y_i = f(x_i)$$

for $i = 1, \ldots, n - 1$. The boundary conditions are: $\bar y_0 = \alpha$, $\bar y_n = \beta$. The discrete problem is equivalent to a linear system $A \bar y = f$ with a tridiagonal coefficient matrix:

$$A = \begin{pmatrix} (2 + h^2 q_1) & -1 + \frac{h}{2} p_1 & & & \\ \ddots & \ddots & \ddots & & \\ & -1 - \frac{h}{2} p_i & (2 + h^2 q_i) & -1 + \frac{h}{2} p_i & \\ & & \ddots & \ddots & \ddots \\ & & & -1 - \frac{h}{2} p_{n-1} & (2 + h^2 q_{n-1}) \end{pmatrix}$$

Under the following hypothesis

$$\frac{h}{2} |p(x_i)| < 1, \quad i = 1, 2, \ldots, n - 1 \tag{10}$$

the lower and upper diagonal entries are strictly smaller, in absolute value, than the entries of the principal diagonal. Proceeding as in the proof of Thm. II.2.1, and denoting for convenience by $2 + \epsilon$ and $-2$ the diagonal and off-diagonal elements of a suitable comparison matrix,

$$z^T A z \ge z^T \begin{pmatrix} 2 + \epsilon & -2 & & \\ -2 & 2 + \epsilon & -2 & \\ & \ddots & \ddots & \ddots \\ & & -2 & 2 + \epsilon \end{pmatrix} z$$

$$\ge \epsilon z_1^2 + (\sqrt{2} z_1 - \sqrt{2} z_2)^2 + \ldots + (\sqrt{2} z_{n-2} - \sqrt{2} z_{n-1})^2 + \epsilon z_{n-1}^2,$$

which is $> 0$ for any nonzero $z \in \mathbb{R}^{n-1}$. Hence we have the following result.

Thm. II.3.1 (Existence and uniqueness of solution of the discretized problem) The discretized version of the convection-diffusion problem described above admits a unique solution if $h = (b - a)/n$ is chosen sufficiently small with respect to the convection term $p(x)$, as in (10).

Figure 5: The difference method applied to the convection-diffusion problem $-y''(x) + (3x - 2) y'(x) + (x^2 + 1) y(x) = \cos(x/2)$ with Dirichlet conditions $y(-6\pi) = 0$, $y(6\pi) = 0$. (Axes: x, y.)
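A possible R implementation of the central scheme of Ex. II.3A is sketched below. The function name `fd_convdiff_central` and its arguments are illustrative assumptions; `p`, `q`, `f` are assumed to be vectorised functions and `n` at least 3. The test problem uses the manufactured solution $y = x(1 - x)$ with $p = q = 1$ (so $f = 2 + (1 - 2x) + x(1 - x)$), for which central differences are exact up to rounding.

```r
# Central scheme for -y'' + p y' + q y = f on [a, b] with Dirichlet data.
fd_convdiff_central <- function(p, q, f, a, b, alpha, beta, n) {
  h  <- (b - a) / n
  x  <- a + (0:n) * h
  xi <- x[2:n]                                # interior nodes
  m  <- n - 1
  if (any(h / 2 * abs(p(xi)) >= 1))
    warning("condition (10) fails: h/2 * |p(x_i)| >= 1 at some node")
  lower <- -1 - h / 2 * p(xi)                 # coefficient of y_{i-1}
  upper <- -1 + h / 2 * p(xi)                 # coefficient of y_{i+1}
  A <- diag(2 + h^2 * q(xi), nrow = m)
  A[cbind(2:m, 1:(m - 1))] <- lower[2:m]
  A[cbind(1:(m - 1), 2:m)] <- upper[1:(m - 1)]
  rhs <- h^2 * f(xi)
  rhs[1] <- rhs[1] - lower[1] * alpha         # known boundary values are
  rhs[m] <- rhs[m] - upper[m] * beta          # moved to the right-hand side
  list(x = x, y = c(alpha, solve(A, rhs), beta))
}

one <- function(x) rep(1, length(x))
f_test <- function(x) 2 + (1 - 2 * x) + x * (1 - x)
sol <- fd_convdiff_central(one, one, f_test, 0, 1, 0, 0, 10)
err_central_scheme <- max(abs(sol$y - sol$x * (1 - sol$x)))
cat("max error on the quadratic test problem:", err_central_scheme, "\n")
```

The warning is only a reminder of hypothesis (10); the linear system may still be solvable when it fails, but the theorem no longer guarantees it.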

Ex.II.3B (Alternative discretizations are possible) The restriction (10) can be eliminated by discretizing the term $p(x) y'$ in another way. More precisely, we set

$$-\frac{\bar y_{i+1} - 2\bar y_i + \bar y_{i-1}}{h^2} + p(x_i) \frac{\bar y_{i+1} - \bar y_i}{h} + q(x_i) \bar y_i = f(x_i), \quad \text{if } p(x_i) \le 0$$

$$-\frac{\bar y_{i+1} - 2\bar y_i + \bar y_{i-1}}{h^2} + p(x_i) \frac{\bar y_i - \bar y_{i-1}}{h} + q(x_i) \bar y_i = f(x_i), \quad \text{if } p(x_i) > 0$$

This scheme, which is known as the upwind difference method, may be written in the form

$$-\frac{\bar y_{i+1} - 2\bar y_i + \bar y_{i-1}}{h^2} - \frac{(|p_i| - p_i)\bar y_{i+1} - 2|p_i| \bar y_i + (|p_i| + p_i)\bar y_{i-1}}{2h} + q_i \bar y_i = f_i$$

The upwind scheme is less accurate than the preceding one, but it is solvable without restrictions on the choice of $h$. Indeed, the matrix of the corresponding linear system turns out to be diagonally dominant.
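The upwind scheme can be sketched in R in the same way. The function name `fd_convdiff_upwind` is an assumption for illustration, and the test problem ($-y'' + 100\,y' = 0$, $y(0) = 0$, $y(1) = 1$ with $n = 10$, so that (10) is violated) is chosen here only to show that the scheme remains solvable and free of oscillations.

```r
# Upwind scheme for -y'' + p y' + q y = f, multiplied through by h^2.
fd_convdiff_upwind <- function(p, q, f, a, b, alpha, beta, n) {
  h  <- (b - a) / n
  x  <- a + (0:n) * h
  xi <- x[2:n]
  m  <- n - 1
  pp <- p(xi)
  lower <- -1 - h / 2 * (abs(pp) + pp)        # coefficient of y_{i-1}
  upper <- -1 - h / 2 * (abs(pp) - pp)        # coefficient of y_{i+1}
  main  <-  2 + h * abs(pp) + h^2 * q(xi)     # coefficient of y_i
  A <- diag(main, nrow = m)
  A[cbind(2:m, 1:(m - 1))] <- lower[2:m]
  A[cbind(1:(m - 1), 2:m)] <- upper[1:(m - 1)]
  rhs <- h^2 * f(xi)
  rhs[1] <- rhs[1] - lower[1] * alpha
  rhs[m] <- rhs[m] - upper[m] * beta
  list(x = x, y = c(alpha, solve(A, rhs), beta), A = A)
}

p100 <- function(x) rep(100, length(x))
zero <- function(x) rep(0, length(x))
up <- fd_convdiff_upwind(p100, zero, zero, 0, 1, 0, 1, 10)
off_diag <- rowSums(abs(up$A)) - abs(diag(up$A))
cat("diagonally dominant:", all(abs(diag(up$A)) >= off_diag), "\n")
cat("monotone solution:  ", all(diff(up$y) >= -1e-12), "\n")
```

Here $\frac{h}{2}|p| = 5$, far beyond the bound in (10), yet the computed solution stays between the boundary values.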

II.3.C NEWTON'S METHOD

In the scalar case with $f \in C^1$, $f'(x) \ne 0$, the solution of the equation $f(x) = 0$ is approximated by a sequence $x_n$ starting from a (sufficiently near) point $x_0$. The idea, at the $k$-th step, is to replace the curve $f$ by the tangent line at $x_k$, i.e. to solve the linear equation $0 = f(x_k) + f'(x_k)(x - x_k)$:

$$x_{k+1} = x_k - [f'(x_k)]^{-1} f(x_k).$$

In several dimensions suppose that $f$ is regular with a non-singular Jacobian matrix:

$$f : \mathbb{R}^m \to \mathbb{R}^m, \quad f \in C^1, \quad J \text{ non-singular}.$$

Let us take an initial point $x_0$ sufficiently near the true solution of the equation

$$f(x) = 0.$$

At the $k$-th step, the idea is to replace the function $y = f(x)$ by its first-order Taylor formula, i.e. to solve the linear system $0 = f(x_k) + J(x_k)(x - x_k)$, where $J$ is the Jacobian:

$$x_{k+1} = x_k - [J(x_k)]^{-1} f(x_k).$$
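The iteration can be sketched in a few lines of R. The function `newton`, its stopping rule and the two-dimensional test system ($x_1^2 + x_2^2 = 4$, $x_1 x_2 = 1$) are illustrative assumptions, not taken from the notes. Instead of forming the inverse Jacobian, the linear system $J(x_k) v = -f(x_k)$ is solved at each step.

```r
# Newton's method for f(x) = 0 in several dimensions (illustrative sketch).
newton <- function(f, jac, x0, tol = 1e-12, maxit = 50) {
  x <- x0
  for (k in seq_len(maxit)) {
    v <- solve(jac(x), -f(x))        # solve J(x_k) v = -f(x_k)
    x <- x + v                       # x_{k+1} = x_k + v
    if (max(abs(v)) < tol) break
  }
  x
}

# Hypothetical test system: x1^2 + x2^2 = 4, x1 * x2 = 1.
f_sys   <- function(x) c(x[1]^2 + x[2]^2 - 4, x[1] * x[2] - 1)
jac_sys <- function(x) rbind(c(2 * x[1], 2 * x[2]),
                             c(x[2],     x[1]))
root <- newton(f_sys, jac_sys, c(2, 0.5))
print(root)
print(f_sys(root))                   # residual at the computed root
```

Convergence is only local: a poor starting point may lead to divergence or to a different root.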

Ex.II.3D (The difference method applied to a nonlinear problem)

$$\begin{cases} y''(x) = \frac{1}{2}(1 + x + y)^3, & x \in (0, 1) \\ y(0) = y(1) = 0 \end{cases}$$

Setting $f(x, y) := \frac{1}{2}(1 + x + y)^3$, let us notice that

$$\frac{\partial f}{\partial y} = \frac{3}{2}(1 + x + y)^2 \ge 0.$$

Then it can be shown that such a boundary value problem admits a unique solution. Let us discretize the interval $(0, 1)$ by the points $x_i = ih$, with $h = 1/(n + 1)$ and $i = 0, 1, \ldots, n + 1$. Let us denote by $\bar y_i$ the values of the approximate solution at the points $x_i$. By discretizing $y''$ by means of central differences, the following nonlinear system is obtained:

$$\begin{cases} \bar y_0 = 0, \quad \bar y_{n+1} = 0 \\ -\dfrac{\bar y_{i+1} - 2\bar y_i + \bar y_{i-1}}{h^2} + \dfrac{1}{2}(1 + x_i + \bar y_i)^3 = 0, & 1 \le i \le n \end{cases}$$

Eliminating the variables $\bar y_0$, $\bar y_{n+1}$, the system can be written in the following matrix form

$$A \bar y + h^2 B(\bar y) = 0 \tag{11}$$

with $\bar y = (\bar y_1, \ldots, \bar y_n)^T$ and

$$A = \begin{pmatrix} 2 & -1 & & & \\ -1 & 2 & -1 & & \\ & \ddots & \ddots & \ddots & \\ & & -1 & 2 & -1 \\ & & & -1 & 2 \end{pmatrix}, \qquad B(\bar y) = \big( f(x_1, \bar y_1), \ldots, f(x_n, \bar y_n) \big)^T.$$

The nonlinear system (11) can be solved, for example, by Newton's method. Indeed, the Jacobian matrix of the system is given by

$$J = A + h^2 B_y, \qquad B_y = \mathrm{diag}\big( f_y(x_i, \bar y_i) \big),\ i = 1, 2, \ldots, n. \tag{12}$$

By virtue of the property $f_y \ge 0$, such a Jacobian turns out to be diagonally dominant and positive definite. Therefore Newton's method is convergent if the initial values are chosen sufficiently near the solution. The implementation of Newton's method is the following. A plausible initial point $\bar y^{(0)}$ is chosen; the $(r+1)$-th iterate is obtained by solving the linear system

$$\left( A + h^2 B_y \right) v = -A \bar y^{(r)} - h^2 B(\bar y^{(r)})$$

and setting

$$\bar y^{(r+1)} = \bar y^{(r)} + v.$$

As is easily verified, the exact solution of the continuous problem is

$$y(x) = \frac{2}{2 - x} - x - 1.$$

By an R program we obtain $y^{(1)}$ from an initial vector $y^{(0)} = 0$. By the same program we obtain $y^{(2)}$ from $y^{(1)}$, and so on.

[Program listing not reproduced in the source.]
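Since the original listing is not available, here is a minimal sketch of the Newton iteration for system (11) in R. The number of nodes, the stopping tolerance and the variable names are assumptions made for illustration; the Jacobian term $B_y$ is evaluated at the current iterate.

```r
n <- 49
h <- 1 / (n + 1)
x <- (1:n) * h                                  # interior nodes x_1, ..., x_n
A <- diag(2, n)
A[cbind(1:(n - 1), 2:n)] <- -1
A[cbind(2:n, 1:(n - 1))] <- -1

B  <- function(y) 0.5 * (1 + x + y)^3           # vector of f(x_i, y_i)
By <- function(y) diag(1.5 * (1 + x + y)^2)     # diagonal matrix of f_y

y <- rep(0, n)                                  # initial vector y^(0) = 0
for (r in 1:20) {
  v <- solve(A + h^2 * By(y), -A %*% y - h^2 * B(y))
  y <- y + as.vector(v)                         # y^(r+1) = y^(r) + v
  if (max(abs(v)) < 1e-12) break
}

exact <- 2 / (2 - x) - x - 1
residual <- max(abs(A %*% y + h^2 * B(y)))
cat("Newton iterations performed:", r, "\n")
cat("residual of system (11):    ", residual, "\n")
cat("max error vs exact solution:", max(abs(y - exact)), "\n")
```

The remaining difference from the exact solution is the discretization error of the scheme, not an error of Newton's method, which is iterated until the update is negligible.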

above consistence and stability, and using the fact that the nonlinear term<br />

f(x, y) is monotonic.<br />

Ex.II.3E (The difference method applied to a partial differential equation) We already showed the solution of the heat equation on the line $-\infty < x < +\infty$ by using the convolution properties of the Fourier transform. Let us show how to solve numerically the heat equation for $x$ in some bounded interval: the mixed problem (initial value and boundary value problem)

$$\begin{cases} u_t - D u_{xx} = 0, & 0 < x < L,\ 0 < t < T \\ u(x, 0) = f(x), & 0 \le x \le L \\ u(0, t) = g_1(t), \quad u(L, t) = g_2(t), & 0 < t < T \end{cases} \tag{13}$$

where $D$ is the diffusion coefficient, and $f(x)$, $g_1(t)$, $g_2(t)$ are given functions. Let us consider a discretization with nodes $(x_j, t_n)$, with $x_j = jh$, $t_n = nk$ and $h = L/J$, $k = T/N$. Let us denote by $\bar u_{j,n}$ the numerical solution at the node $(x_j, t_n)$. By the above initial condition

$$\bar u_{j,0} = f(x_j), \quad j = 0, 1, \ldots, J$$

while the boundary conditions imply

$$\bar u_{0,n} = g_1(t_n), \quad \bar u_{J,n} = g_2(t_n).$$

For $0 < j < J$, $n > 0$ a possible discretization of (13) at the point $(x_j, t_n)$ is obtained by means of a forward difference for the derivative $u_t$ and a central difference for the second derivative $u_{xx}$:

$$u_t \approx \frac{\bar u_{j,n+1} - \bar u_{j,n}}{k}, \qquad u_{xx} \approx \frac{\bar u_{j+1,n} - 2\bar u_{j,n} + \bar u_{j-1,n}}{h^2}.$$

By substituting in (13), we obtain the following relation for $j = 1, \ldots, J - 1$ and $n = 0, \ldots, N - 1$:

$$\bar u_{j,n+1} = (1 - 2r)\bar u_{j,n} + r(\bar u_{j+1,n} + \bar u_{j-1,n}) \tag{14}$$

where

$$r = Dk/h^2. \tag{15}$$

The method is explicit: at $x_j$ the numerical solution $\bar u$ at time $t_{n+1}$ is obtained from $\bar u$ at the three points $x_{j-1}, x_j, x_{j+1}$ at time $t_n$, by an explicit formula and not by solving a system. Hence in this case the existence of the numerical solution is obvious. However, the convergence of $\bar u$ to the analytic solution $u$ as $h, k \to 0$ is not always true. Indeed, in Figures II.6 and II.7, with a slightly different choice of parameters, we see different behaviours of the numerical solution. Such a numerical example shows the importance of the quantity (15). Actually one can prove that the condition

$$r = Dk/h^2 \le \frac{1}{2} \tag{16}$$

is a necessary condition for stability of the explicit numerical method. In practice, it ensures a sufficiently high velocity of propagation of the discrete signal. As for convergence, it is sufficient to remark that the explicit scheme is consistent. More precisely, one can prove that, for a sufficiently regular function, the local discretization error behaves like $O(k + h^2)$. This implies that the scheme (14) is convergent (since it is stable and consistent) under the condition (16). It is an example of a conditionally convergent scheme.

Figure 6: The explicit method (14) applied to the diffusion mixed problem (13). Here $D = 0.45$ and $L = T = J = N = 20$, so $Dk/h^2 \le 1/2$ and the explicit method is convergent. (Axes: x, t, u.)

Figure 7: The explicit method (14) applied to the diffusion mixed problem (13). Here $D = 0.55$ and $L = T = J = N = 20$, so $Dk/h^2 > 1/2$ and the explicit method is unstable. (Axes: x, t, u.)

Figure 8: The implicit method (18) applied to the diffusion mixed problem (13). Here $D = 1$, $L = 80$, $T = 160$, $J = 40$, $N = 40$. Although $Dk/h^2 > 1/2$, the implicit method is convergent. (Axes: x, t, u.)
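The role of condition (16) can be seen with a short R experiment based on formula (14). The function name `heat_explicit`, the spike-shaped initial datum and the zero boundary values are assumptions chosen for illustration (the data used for Figures 6 and 7 are not given in the text); only the parameters $D$, $L$, $T$, $J$, $N$ follow the figure captions.

```r
# Explicit scheme (14) for u_t - D u_xx = 0 on (0, L) x (0, Tmax).
heat_explicit <- function(f0, g1, g2, D, L, Tmax, J, N) {
  h <- L / J
  k <- Tmax / N
  r <- D * k / h^2
  u <- matrix(0, nrow = J + 1, ncol = N + 1)   # u[j + 1, n + 1] ~ u(x_j, t_n)
  u[, 1] <- f0((0:J) * h)                      # initial condition
  for (n in 1:N) {
    u[2:J, n + 1] <- (1 - 2 * r) * u[2:J, n] +
                     r * (u[3:(J + 1), n] + u[1:(J - 1), n])
    u[1, n + 1]     <- g1(n * k)               # boundary conditions
    u[J + 1, n + 1] <- g2(n * k)
  }
  list(u = u, r = r)
}

spike <- function(x) as.numeric(abs(x - 10) < 0.5)   # 1 at x = 10, else 0
zero  <- function(t) 0

stable   <- heat_explicit(spike, zero, zero, D = 0.45, L = 20, Tmax = 20, J = 20, N = 20)
unstable <- heat_explicit(spike, zero, zero, D = 0.55, L = 20, Tmax = 20, J = 20, N = 20)
cat("r =", stable$r,   " range of u:", range(stable$u),   "\n")
cat("r =", unstable$r, " range of u:", range(unstable$u), "\n")
```

With $r \le 1/2$ every new value is a convex combination of old ones, so the solution stays within the range of the data; with $r > 1/2$ negative values appear already after the first step and oscillations grow in time.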

Unconditionally convergent methods can be obtained using implicit schemes, where a linear system has to be solved at each time level $t_n$. Such a scheme can be obtained by using a backward difference, instead of a forward difference, to approximate the derivative $u_t$. In this way the differential equation (13) is transformed into the difference equation:

$$\frac{1}{k}(\bar u_{j,n} - \bar u_{j,n-1}) - D \frac{1}{h^2}(\bar u_{j+1,n} - 2\bar u_{j,n} + \bar u_{j-1,n}) = 0 \tag{17}$$

whence

$$-r \bar u_{j-1,n} + (1 + 2r)\bar u_{j,n} - r \bar u_{j+1,n} = \bar u_{j,n-1} \tag{18}$$

for $n = 1, 2, \ldots, N$ and $j = 1, 2, \ldots, J - 1$. Since the values $\bar u_{0,n}$ and $\bar u_{J,n}$ are determined by the boundary conditions, (18) is a system of equations in the $J - 1$ unknowns $\bar u_{1,n}, \ldots, \bar u_{J-1,n}$. The matrix of the system

$$\begin{pmatrix} 1 + 2r & -r & & & \\ -r & 1 + 2r & -r & & \\ & \ddots & \ddots & \ddots & \\ & & -r & 1 + 2r & -r \\ & & & -r & 1 + 2r \end{pmatrix}$$

is symmetric and diagonally dominant, as in the one-dimensional boundary problems discussed above. Therefore the matrix is positive definite and the numerical solution exists.
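The implicit scheme (18) can be sketched in R as follows. The function name `heat_implicit`, the spike-shaped initial datum and the zero boundary values are illustrative assumptions; the parameters $D = 1$, $L = 80$, $T = 160$, $J = 40$, $N = 40$ are those quoted in the caption of Figure 8, for which $r = 1 > 1/2$.

```r
# Implicit scheme (18): a tridiagonal system is solved at each time level.
heat_implicit <- function(f0, g1, g2, D, L, Tmax, J, N) {
  h <- L / J
  k <- Tmax / N
  r <- D * k / h^2
  m <- J - 1
  A <- diag(1 + 2 * r, m)
  A[cbind(1:(m - 1), 2:m)] <- -r
  A[cbind(2:m, 1:(m - 1))] <- -r
  u <- matrix(0, nrow = J + 1, ncol = N + 1)   # u[j + 1, n + 1] ~ u(x_j, t_n)
  u[, 1] <- f0((0:J) * h)
  for (n in 1:N) {
    u[1, n + 1]     <- g1(n * k)
    u[J + 1, n + 1] <- g2(n * k)
    rhs <- u[2:J, n]
    rhs[1] <- rhs[1] + r * u[1, n + 1]         # boundary values moved to
    rhs[m] <- rhs[m] + r * u[J + 1, n + 1]     # the right-hand side
    u[2:J, n + 1] <- solve(A, rhs)
  }
  list(u = u, r = r)
}

spike40 <- function(x) as.numeric(abs(x - 40) < 1)   # 1 at x = 40, else 0
zero_bc <- function(t) 0
imp <- heat_implicit(spike40, zero_bc, zero_bc, D = 1, L = 80, Tmax = 160, J = 40, N = 40)
cat("r =", imp$r, " range of u:", range(imp$u), "\n")
```

Even with $r$ above $1/2$ the computed values remain within the range of the initial and boundary data, in contrast with the explicit scheme.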

The convergence problem, as usual, consists of two parts: consistency and stability. Denoting by $u_{j,n} := u(x_j, t_n)$ the values of the exact solution at the nodes, the local discretization error $\tau_{j,n}$ is defined by the following relation

$$\tau_{j,n} := u_t(x_j, t_n) - D u_{xx}(x_j, t_n) - \left[ \frac{u_{j,n} - u_{j,n-1}}{k} - D \frac{u_{j+1,n} - 2u_{j,n} + u_{j-1,n}}{h^2} \right].$$

Thus by Taylor's formula (Section 1 on difference formulae)

$$\tau_{j,n} = \frac{1}{2} u_{tt}(x_j, \bar t_n)\, k + \frac{D}{12} u_{xxxx}(\bar x_j, t_n)\, h^2 \tag{19}$$

where $\bar t_n$ is a suitable point of the interval $(t_{n-1}, t_n)$, and $\bar x_j \in (x_{j-1}, x_{j+1})$. Therefore, if the solution is sufficiently regular,

$$\tau_{j,n} = O(k) + O(h^2).$$

As for stability, defining the global error $e_{j,n} := u_{j,n} - \bar u_{j,n}$, one can prove that

$$(1 + 2r) e_{j,n} = e_{j,n-1} + r(e_{j+1,n} + e_{j-1,n}) - k \tau_{j,n}.$$

Setting $E_n = \max_{0 \le j \le J} |e_{j,n}|$ and $\hat\tau = \max_{j,n} |\tau_{j,n}|$, the global error can be bounded by the following steps:

$$(1 + 2r)|e_{j,n}| \le E_{n-1} + 2r E_n + k \hat\tau.$$

Taking the maximum over $0 \le j \le J$,

$$E_n \le E_{n-1} + k \hat\tau,$$

whence, by recurrence (and since $E_0 = 0$),

$$E_n \le E_0 + n k \hat\tau \le T \hat\tau.$$

This last inequality says that

$$|u_{j,n} - \bar u_{j,n}| \le T \hat\tau,$$

that is, the implicit scheme is stable. Then convergence follows from consistency. $\square$

4 The elements of R<br />

Apart from the Cross there is no other ladder by which we may get to heaven.<br />

(St. Rose of Lima, 1586-1617)<br />

> x <- 4
> x
[1] 4
>
> y <- c(2, 7, 4, 1)
> y
[1] 2 7 4 1        (printed this way, but it is a column vector)
> ls()             # lists all the objects in the workspace
[1] "x" "y"
> x*y              # element-by-element product
[1] 8 28 16 4
> y*y              # element-by-element product
[1] 4 49 16 1
> y^2              # element-by-element square
[1] 4 49 16 1
> t(y)             # transpose of the column vector y
     [,1] [,2] [,3] [,4]
[1,]    2    7    4    1
> t(y)%*%y         # %*% is the row-by-column product
     [,1]          (in this case: the scalar product)
[1,]   70
> y%*%t(y) -> z    # row-by-column product
> z
     [,1] [,2] [,3] [,4]
[1,]    4   14    8    2
[2,]   14   49   28    7
[3,]    8   28   16    4
[4,]    2    7    4    1
> a <- matrix(-5:14, nrow=4, ncol=5)
> a
     [,1] [,2] [,3] [,4] [,5]
[1,]   -5   -1    3    7   11
[2,]   -4    0    4    8   12
[3,]   -3    1    5    9   13
[4,]   -2    2    6   10   14   (note: the matrix is filled by columns)
> t(a)             # transpose
     [,1] [,2] [,3] [,4]
[1,]   -5   -4   -3   -2
[2,]   -1    0    1    2
[3,]    3    4    5    6
[4,]    7    8    9   10
[5,]   11   12   13   14   (this way it has been filled by rows)
>
> u <- c(2, 5, 3)
> u
[1] 2 5 3
> sum(u)/length(u)                      # sample mean of u
[1] 3.333333
> mean(u)                               # sample mean of u
[1] 3.333333
> sum((u-mean(u))^2)/(length(u)-1)      # sample variance of u
[1] 2.333333
> var(u)                                # sample variance of u
[1] 2.333333
> v <- …
> u <- …
> sum((u-mean(u))*(v-mean(v))/(length(u)-1))   # covariance of u, v
[1] 5
> cov(u,v)                              # covariance of u, v
[1] 5
> zz <- seq(1, 4, by=0.33)
> zz
[1] 1.00 1.33 1.66 1.99 2.32 2.65 2.98 3.31 3.64 3.97
> n <- 5; A <- matrix(0, n, n)
> for (i in 1:n)
+ for (j in 1:n)
+ if (i==j)                             # if (condition) statement else statement
+ A[i,j] <- 1 else A[i,j] <- 0
> A
     [,1] [,2] [,3] [,4] [,5]
[1,]    1    0    0    0    0
[2,]    0    1    0    0    0
[3,]    0    0    1    0    0
[4,]    0    0    0    1    0
[5,]    0    0    0    0    1
> getwd()                               # shows the working directory
[1] "C:/Programmi/R/R-2.3.1"

In the working directory, using the plain "Notepad" editor, we write the code file, calling it "sinusoide.txt":

sinusoide <- …
> source("sinusoide.txt")
> x <- …
> plot(x,sinusoide(x,4),ty="l")

In Notepad I write "tabella.txt":

  peso altezza cibo
1   20      70   10
2   15      60    5
3   17      50    8
4   23      67   12
5   25      58   18

In the workspace I read it with "read.table":

> read.table("tabella.txt")
  peso altezza cibo
1   20      70   10
2   15      60    5
3   17      50    8
4   23      67   12
5   25      58   18
> T <- …
> x <- …
> y <- …
> z <- …
> M <- …
> M                (3 columns: i.e. 3 variables)
     [,1] [,2] [,3]   (5 rows: i.e. 5 observed units)
[1,]   20   70   10
[2,]   15   60    5
[3,]   17   50    8
[4,]   23   67   12
[5,]   25   58   18
> media <- …
> media
[1] 20.0 61.0 10.6
> var(M)           # covariance matrix of the sample data in the matrix M,
      [,1]  [,2]  [,3]    # i.e. cov(column i of M, column j of M)
[1,] 17.00 10.25 19.25    # for i,j = 1,2,3
[2,] 10.25 62.00  3.75
[3,] 19.25  3.75 23.80

> pnorm(2.3, mean=0, sd=1)         # distribution function of N(0;1) at 2.3
[1] 0.9892759
> pnorm(5.8, mean=2, sd=3)         # distribution function of N(2;9) at 5.8
[1] 0.8973627
> qnorm(0.897,mean=2, sd=3)        # quantile 0.897 of N(2;9)
[1] 5.793923
> dnorm(2.7,mean=0,sd=5)           # density function of N(0;25) at 2.7
[1] 0.0689636
> x <- …
> hist(x)                          # histogram of the sample data x
> dbinom(10,size=22,prob=3/7)      # probability function, evaluated at 10,
[1] 0.1638484                      # of the Binomial (n=22, p=3/7)
> pbinom(10,size=22,prob=3/7)      # distribution function, evaluated at 10,
[1] 0.6802406                      # of the same Binomial
> qbinom(0.68,size=22,prob=3/7)    # quantile 0.68 of the Binomial (n=22, p=3/7)
[1] 10
> qt(0.95,df=13)                   # quantile 0.95 of Student's "t"
[1] 1.770933                       # (with 13 degrees of freedom)
> qchisq(0.99,df=10)               # quantile 0.99 of the "chi-square"
[1] 23.20925                       # (with 10 degrees of freedom)

-----------------------------------------
# code for -y''(x)+y(x)=0, y(a)=ya, y(b)=yb, with n steps
finite
finiteconvdiff
______________________________________________________
# code for Figure II.6 (with D=0.45, L=T=J=N=20): explicit difference scheme sta
# and for Figure II.7 (with D=0.55, L=T=J=N=20): explicit scheme, unstable because Dk/h^2 >
pdeII7
for (ii in 1:(J-1))    # parentheses needed: 1:J-1 would mean 0:(J-1) in R
for (jj in 1:(J-1))
{
if (ii==jj)
A[ii,jj]