Beginning SQL


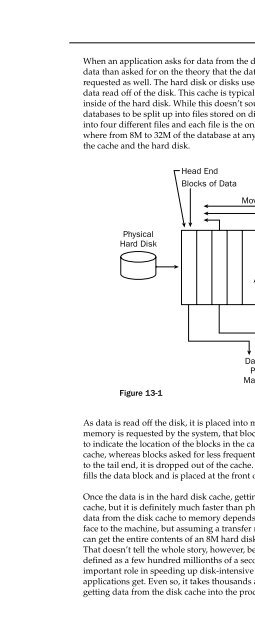



When an application asks for data from the disk subsystem, the disk often reads a much larger block of data than was requested. It does this on the theory that the data surrounding the requested data will eventually be requested as well. Each hard disk used to store data has a small cache that holds large blocks of data read off the disk. This cache is typically 2M or 8M and physically resides on the circuit board inside the hard disk. While this doesn’t sound like a lot, remember that databases are commonly split into files stored on different hard disks. Thus, if a database is physically split into four files and each file is the only thing on its disk, the disk subsystem itself is caching anywhere from 8M (4 × 2M) to 32M (4 × 8M) of the database at any one time. Figure 13-1 illustrates the relationship between the cache and the hard disk.
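The read-ahead idea and the capacity arithmetic in the preceding paragraph can be sketched in Python. This is a minimal illustration, not drive firmware. It assumes an unbounded cache, a hypothetical fixed read-ahead window measured in sectors, and that each database file sits alone on its own disk. The function names are made up for this example.

```python
def physical_reads(requests, read_ahead_sectors):
    """Count how many requests must go to the platters when the drive
    also reads the next `read_ahead_sectors` sectors into its cache.

    Simplifying assumption: the cache never fills up, so nothing that
    was read ahead is ever evicted.
    """
    cached = set()
    reads = 0
    for sector in requests:
        if sector not in cached:
            reads += 1
            # Read the requested sector plus the sectors that follow it.
            cached.update(range(sector, sector + read_ahead_sectors + 1))
    return reads


def aggregate_cache_mb(disk_count, cache_per_disk_mb):
    """Total drive-level cache when each database file has a disk to itself."""
    return disk_count * cache_per_disk_mb


if __name__ == "__main__":
    sequential = list(range(64))  # hypothetical scan of 64 consecutive sectors
    print(physical_reads(sequential, read_ahead_sectors=0))  # 64
    print(physical_reads(sequential, read_ahead_sectors=7))  # 8
    print(aggregate_cache_mb(4, 2), aggregate_cache_mb(4, 8))  # 8 32
```

Without read-ahead, the sequential scan costs 64 physical reads. With a 7-sector read-ahead, it costs 8, which is the payoff the read-ahead theory bets on. A scattered access pattern such as sectors 0, 100, and 200 gains nothing, because the extra sectors are never requested.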

Figure 13-1 (diagram labels: Physical Hard Disk; Blocks of Data; Head End; Tail End; Move to Front; Back to Head of Cache; Bit Bucket (trash); Main Memory; Data Sent To Processor)

As data is read off the disk, it is placed into the cache in blocks, typically the size of a disk sector. When the system requests a block, that block is logically moved to the head of the cache. The block data itself stays put, and pointers track where each block sits in the cache. Thus, frequently requested blocks stay in the cache, whereas less frequently requested blocks drift toward the tail end. A block that reaches the tail end is dropped from the cache. This happens when newly read data fills a block and is placed at the front of the cache, pushing every other block one position closer to the tail.
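The head-to-tail behavior described above is a least-recently-used (LRU) replacement policy. The sketch below models it with Python's `collections.OrderedDict`. The `move_to_end` and `popitem(last=False)` methods reorder and evict entries without copying any block data, in the same spirit as the pointers the text mentions. The class name, the integer block IDs, and the `load_from_disk` callback are hypothetical. Real drive firmware may use a different or more elaborate policy.

```python
from collections import OrderedDict
from typing import Callable


class LRUBlockCache:
    """Fixed-size block cache: hits move to the head, the tail block is dropped."""

    def __init__(self, capacity_blocks: int) -> None:
        if capacity_blocks <= 0:
            raise ValueError("capacity_blocks must be positive")
        self.capacity = capacity_blocks
        # Iteration order runs from the tail (least recently used)
        # to the head (most recently used).
        self._blocks = OrderedDict()
        self.hits = 0
        self.misses = 0
        self.dropped = []  # block IDs sent to the "bit bucket", in order

    def read(self, block_id: int, load_from_disk: Callable[[int], bytes]) -> bytes:
        if block_id in self._blocks:
            self.hits += 1
            self._blocks.move_to_end(block_id)  # move the block back to the head
            return self._blocks[block_id]

        self.misses += 1
        data = load_from_disk(block_id)  # slow path: physical disk access
        self._blocks[block_id] = data  # a newly read block enters at the head
        if len(self._blocks) > self.capacity:
            victim, _ = self._blocks.popitem(last=False)  # drop the tail block
            self.dropped.append(victim)
        return data

    def order_tail_to_head(self) -> list:
        return list(self._blocks)


if __name__ == "__main__":
    def fake_disk(block_id: int) -> bytes:
        return f"block-{block_id}".encode()

    cache = LRUBlockCache(capacity_blocks=3)
    for block_id in [1, 2, 3, 1, 4, 2]:
        cache.read(block_id, fake_disk)
    print(cache.order_tail_to_head())  # [1, 4, 2]
    print(cache.dropped)  # [2, 3]
    print(cache.hits, cache.misses)  # 1 5
```

When block 4 arrives, block 2 is dropped rather than block 1, because block 1 was requested again and moved back to the head. That is the "frequently requested blocks stay in the cache" behavior in miniature.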

Once the data is in the hard disk cache, getting at it is still a lot slower than accessing the processor cache, but it is much faster than physical disk access. The time required to move data from the disk cache to main memory depends on many factors, such as how the disks physically interface to the machine. Assuming a transfer rate of 50M per second, a good ballpark today is that the entire contents of an 8M hard disk cache can be moved to memory in about one-sixth of a second (8M ÷ 50M per second = 0.16 seconds). That doesn’t tell the whole story, however, because you can start to access the data almost instantly, with “instantly” here meaning a few hundred millionths of a second. The hard disk cache therefore plays a very important role in speeding up disk-intensive applications, and a database is about as disk-intensive as applications get. Even so, it takes thousands, perhaps tens of thousands, of processor cycles before data from the disk cache starts reaching the processor itself, where your application can use it.
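The one-sixth-of-a-second figure is simply cache size divided by transfer rate. A small helper makes that arithmetic explicit. The function name is hypothetical, and the 50M per second rate is the text's stated assumption, not a measured value.

```python
def transfer_seconds(size_mb: float, rate_mb_per_s: float) -> float:
    """Time to move `size_mb` megabytes at a sustained `rate_mb_per_s`."""
    if rate_mb_per_s <= 0:
        raise ValueError("rate_mb_per_s must be positive")
    return size_mb / rate_mb_per_s


if __name__ == "__main__":
    # An 8M drive cache at the assumed 50M per second.
    print(f"{transfer_seconds(8, 50):.2f} s")  # 0.16 s, roughly one-sixth (0.167 s)
    # The same cache on a hypothetical interface twice as fast.
    print(f"{transfer_seconds(8, 100):.2f} s")  # 0.08 s
```

This covers only the bulk transfer time. It says nothing about the initial access latency, which the text treats separately.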
