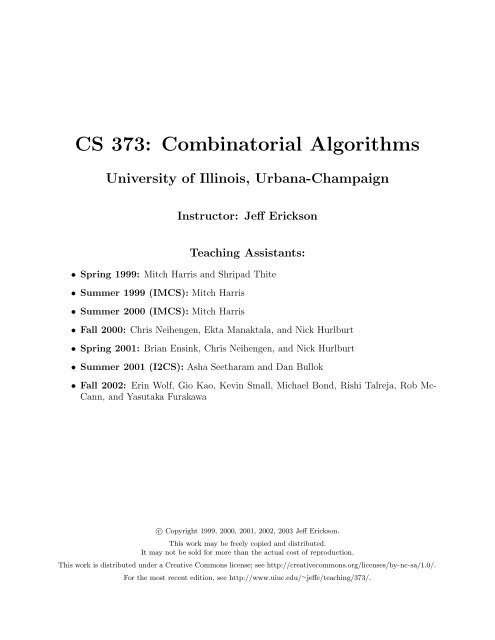

CS 373: Combinatorial Algorithms


<strong>CS</strong> <strong>373</strong>: <strong>Combinatorial</strong> <strong>Algorithms</strong><br />

University of Illinois, Urbana-Champaign<br />

Instructor: Jeff Erickson<br />

Teaching Assistants:<br />

• Spring 1999: Mitch Harris and Shripad Thite<br />

• Summer 1999 (IM<strong>CS</strong>): Mitch Harris<br />

• Summer 2000 (IM<strong>CS</strong>): Mitch Harris<br />

• Fall 2000: Chris Neihengen, Ekta Manaktala, and Nick Hurlburt<br />

• Spring 2001: Brian Ensink, Chris Neihengen, and Nick Hurlburt<br />

• Summer 2001 (I2<strong>CS</strong>): Asha Seetharam and Dan Bullok<br />

• Fall 2002: Erin Wolf, Gio Kao, Kevin Small, Michael Bond, Rishi Talreja, Rob McCann,<br />

and Yasutaka Furakawa<br />

© Copyright 1999, 2000, 2001, 2002, 2003 Jeff Erickson.<br />

This work may be freely copied and distributed.<br />

It may not be sold for more than the actual cost of reproduction.<br />

This work is distributed under a Creative Commons license; see http://creativecommons.org/licenses/by-nc-sa/1.0/.<br />

For the most recent edition, see http://www.uiuc.edu/~jeffe/teaching/373/.

For junior faculty, it may be a choice between a book and tenure.<br />

— George A. Bekey, “The Assistant Professor’s Guide to the Galaxy” (1993)<br />

I’m writing a book. I’ve got the page numbers done.<br />

— Stephen Wright<br />

About These Notes<br />

This course packet includes lecture notes, homework questions, and exam questions from the<br />

course ‘<strong>CS</strong> <strong>373</strong>: <strong>Combinatorial</strong> <strong>Algorithms</strong>’, which I taught at the University of Illinois in Spring<br />

1999, Fall 2000, Spring 2001, and Fall 2002. Lecture notes and videotaped lectures were also used<br />

during Summer 1999, Summer 2000, Summer 2001, and Fall 2002 as part of the UIUC computer<br />

science department’s Illinois Internet Computer Science (I2<strong>CS</strong>) program.<br />

The recurrences handout is based on samizdat, probably written by Ari Trachtenberg, based<br />

on a paper by George Lueker, from an earlier semester taught by Ed Reingold. I wrote most<br />

of the lecture notes in Spring 1999; I revised them and added a few new notes in each following<br />

semester. Except for the infamous Homework Zero, which is entirely my doing, homework and<br />

exam problems and their solutions were written mostly by the teaching assistants: Asha Seetharam,<br />

Brian Ensink, Chris Neihengen, Dan Bullok, Ekta Manaktala, Erin Wolf, Gio Kao, Kevin Small,<br />

Michael Bond, Mitch Harris, Nick Hurlburt, Rishi Talreja, Rob McCann, Shripad Thite, and Yasu<br />

Furakawa. Lecture notes were posted to the course web site a few days (on average) after each<br />

lecture. Homeworks, exams, and solutions were also distributed over the web. I have deliberately<br />

excluded solutions from this course packet.<br />

The lecture notes, homeworks, and exams draw heavily on the following sources, all of which I<br />

can recommend as good references.<br />

• Alfred V. Aho, John E. Hopcroft, and Jeffrey D. Ullman. The Design and Analysis of Computer<br />

<strong>Algorithms</strong>. Addison-Wesley, 1974. (This was the textbook for the algorithms classes<br />

I took as an undergrad at Rice and as a masters student at UC Irvine.)<br />

• Sara Baase and Allen Van Gelder. Computer <strong>Algorithms</strong>: Introduction to Design and Analysis.<br />

Addison-Wesley, 2000.<br />

• Mark de Berg, Marc van Kreveld, Mark Overmars, and Otfried Schwarzkopf. Computational<br />

Geometry: <strong>Algorithms</strong> and Applications. Springer-Verlag, 1997. (This is the required<br />

textbook in my computational geometry course.)<br />

• Thomas Cormen, Charles Leiserson, Ron Rivest, and Cliff Stein. Introduction to <strong>Algorithms</strong>,<br />

second edition. MIT Press/McGraw-Hill, 2000. (This is the required textbook for <strong>CS</strong> <strong>373</strong>,<br />

although I never actually use it in class. Students use it as an educated second opinion. I used<br />

the first edition of this book as a teaching assistant at Berkeley.)<br />

• Michael R. Garey and David S. Johnson. Computers and Intractability: A Guide to the<br />

Theory of NP-Completeness. W. H. Freeman, 1979.<br />

• Michael T. Goodrich and Roberto Tamassia. Algorithm Design: Foundations, Analysis, and<br />

Internet Examples. John Wiley & Sons, 2002.<br />

• Dan Gusfield. <strong>Algorithms</strong> on Strings, Trees, and Sequences: Computer Science and Molecular<br />

Biology. Cambridge University Press, 1997.<br />

• Udi Manber. Introduction to <strong>Algorithms</strong>: A Creative Approach. Addison-Wesley, 1989.<br />

(I used this textbook as a teaching assistant at Berkeley.)

• Rajeev Motwani and Prabhakar Raghavan. Randomized <strong>Algorithms</strong>. Cambridge University<br />

Press, 1995.<br />

• Ian Parberry. Problems on <strong>Algorithms</strong>. Prentice-Hall, 1995. (This was a recommended<br />

textbook for early versions of <strong>CS</strong> <strong>373</strong>, primarily for students who needed to strengthen their<br />

prerequisite knowledge. This book is out of print, but can be downloaded as karmaware from<br />

http://hercule.csci.unt.edu/~ian/books/poa.html.)<br />

• Robert Sedgewick. <strong>Algorithms</strong>. Addison-Wesley, 1988. (This book and its sequels have by<br />

far the best algorithm illustrations anywhere.)<br />

• Robert Endre Tarjan. Data Structures and Network <strong>Algorithms</strong>. SIAM, 1983.<br />

• Class notes from my own algorithms classes at Berkeley, especially those taught by Dick Karp<br />

and Raimund Seidel.<br />

• Various journal and conference papers (cited in the notes).<br />

• Google.<br />

Naturally, everything here owes a great debt to the people who taught me this algorithm stuff<br />

in the first place: Abhiram Ranade, Bob Bixby, David Eppstein, Dan Hirshberg, Dick Karp, Ed<br />

Reingold, George Lueker, Manuel Blum, Mike Luby, Michael Perlman, and Raimund Seidel. I’ve<br />

also been helped immensely by many discussions with colleagues at UIUC—Ed Reingold, Edgar<br />

Ramos, Herbert Edelsbrunner, Jason Zych, Lenny Pitt, Mahesh Viswanathan, Shang-Hua Teng,<br />

Steve LaValle, and especially Sariel Har-Peled—as well as voluminous feedback from the students<br />

and teaching assistants. I stole the overall course structure (and the idea to write up my own<br />

lecture notes) from Herbert Edelsbrunner.<br />

We did the best we could, but I’m sure there are still plenty of mistakes, errors, bugs, gaffes,<br />

omissions, snafus, kludges, typos, mathos, grammaros, thinkos, brain farts, nonsense, garbage,<br />

cruft, junk, and outright lies, all of which are entirely Steve Skiena’s fault. I revise and update<br />

these notes every time I teach the course, so please let me know if you find a bug. (Steve is unlikely<br />

to care.)<br />

When I’m teaching <strong>CS</strong> <strong>373</strong>, I award extra credit points to the first student to post an explanation<br />

and correction of any error in the lecture notes to the course newsgroup (uiuc.class.cs<strong>373</strong>).<br />

Obviously, the number of extra credit points depends on the severity of the error and the quality<br />

of the correction. If I’m not teaching the course, encourage your instructor to set up a similar<br />

extra-credit scheme, and forward the bug reports to <s>Steve</s> me!<br />

Of course, any other feedback is also welcome!<br />

Enjoy!<br />

— Jeff<br />

It is traditional for the author to magnanimously accept the blame for whatever deficiencies<br />

remain. I don’t. Any errors, deficiencies, or problems in this book are somebody else’s fault,<br />

but I would appreciate knowing about them so as to determine who is to blame.<br />

— Steven S. Skiena, The Algorithm Design Manual, Springer-Verlag, 1997, page x.

<strong>CS</strong> <strong>373</strong> Notes on Solving Recurrence Relations<br />

1 Fun with Fibonacci numbers<br />

Consider the reproductive cycle of bees. Each male bee has a mother but no father; each female<br />

bee has both a mother and a father. If we examine the generations we see the following family tree:<br />

[Figure: family tree of a male bee, showing the female (♀) and male (♂) ancestors in each generation.]<br />

We easily see that the number of ancestors in each generation is the sum of the two numbers<br />

before it. For example, our male bee has three great-grandparents, two grandparents, and one<br />

parent, and 3 = 2 + 1. The number of ancestors a bee has in generation n is defined by the<br />

Fibonacci sequence; we can also see this by applying the rule of sum.<br />

As a second example, consider light entering two adjacent panes of glass:<br />

At any meeting surface (between the two panes of glass, or between the glass and air), the light<br />

may either reflect or continue straight through (refract). For example, here is the light bouncing<br />

seven times before it leaves the glass.<br />

In general, how many different paths can the light take if we are told that it bounces n times before<br />

leaving the glass?<br />

The answer to the question (in case you haven’t guessed) rests with the Fibonacci sequence.<br />

We can apply the rule of sum to the event E constituting all paths through the glass in n bounces.<br />

We generate two separate sub-events, E1 and E2, illustrated in the following picture.<br />


Sub-event E1: Let E1 be the event that the first bounce is not at the boundary between<br />

the two panes. In this case, the light must first bounce off the bottom pane, or else we are<br />

dealing with the case of having zero bounces (there is only one way to have zero bounces).<br />

However, the number of remaining paths after bouncing off the bottom pane is the same as<br />

the number of paths entering through the bottom pane and bouncing n − 1 bounces more.<br />

Entering through the bottom pane is the same as entering through the top pane (but flipped<br />

over), so E1 is the number of paths of light bouncing n − 1 times.<br />

Sub-event E2: Let E2 be the event that the first bounce is on the boundary between the<br />

two panes. In this case, we consider the two options for the light after the first bounce: it<br />

can either leave the glass (in which case we are dealing with the case of having one bounce,<br />

and there is only one way for the light to bounce once) or it can bounce yet again on the top<br />

of the upper pane, in which case it is equivalent to the light entering from the top with n − 2<br />

bounces to take along its path.<br />

By the rule of sum, we thus get the following recurrence relation for Fn, the number of paths<br />

in which the light can travel with exactly n bounces. There is exactly one way for the light to<br />

travel with no bounces—straight through—and exactly two ways for the light to travel with only<br />

one bounce—off the bottom and off the middle. For any n > 1, there are Fn−1 paths where the<br />

light bounces off the bottom of the glass, and Fn−2 paths where the light bounces off the middle<br />

and then off the top.<br />

$$F_0 = 1, \qquad F_1 = 2, \qquad F_n = F_{n-1} + F_{n-2} \quad (n > 1)$$

Stump a professor<br />

What is the recurrence relation for three panes of glass? This question once stumped an anonymous<br />

professor<sup>1</sup> in a science discipline, but now you should be able to solve it with a bit of effort. Aren’t<br />

you proud of your knowledge?<br />

1 Not me! —Jeff<br />
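The two-pane recurrence can be checked by brute force. The sketch below is my own illustration, not part of the original handout: it assumes the surfaces are numbered from the top, that the light starts just inside the top surface heading down, and that passing through a surface is free while each reflection uses up one bounce. The names `count_paths` and `fib_like` are made up for this example.

```python
from functools import lru_cache

def count_paths(bounces, surfaces=3):
    """Count light paths with exactly `bounces` reflections.

    Surfaces are numbered 0 (top) to surfaces - 1 (bottom); two panes of
    glass have three surfaces. Gap g lies between surface g and surface g + 1.
    """
    @lru_cache(maxsize=None)
    def go(gap, direction, left):
        # The surface the light reaches next (+1 means heading down).
        surface = gap + 1 if direction == 1 else gap
        total = 0
        if left > 0:
            # Option 1: reflect, which costs one bounce.
            total += go(gap, -direction, left - 1)
        if surface in (0, surfaces - 1):
            # Option 2a: pass through an outer surface and leave the glass.
            total += 1 if left == 0 else 0
        else:
            # Option 2b: pass through an inner surface into the next gap.
            total += go(gap + direction, direction, left)
        return total

    return go(0, 1, bounces)

def fib_like(n):
    """F_n from the recurrence in the text: F_0 = 1, F_1 = 2."""
    a, b = 1, 2
    for _ in range(n):
        a, b = b, a + b
    return a

print([count_paths(n) for n in range(6)])  # [1, 2, 3, 5, 8, 13]
```

Calling `count_paths(n, surfaces=4)` gives numbers to test a guess for the three-pane question against, under the same modeling assumptions.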


2 Sequences, sequence operators, and annihilators<br />

We have shown that several different problems can be expressed in terms of Fibonacci sequences,<br />

but we don’t yet know how to explicitly compute the nth Fibonacci number, or even (and more<br />

importantly) roughly how big it is. We can easily write a program to compute the nth Fibonacci<br />

number, but that doesn’t help us much here. What we really want is a closed form solution for the<br />

Fibonacci recurrence—an explicit algebraic formula without conditionals, loops, or recursion.<br />

In order to solve recurrences like the Fibonacci recurrence, we first need to understand operations<br />

on infinite sequences of numbers. Although these sequences are formally defined as functions of<br />

the form $A : \mathbb{N} \to \mathbb{R}$, we will write them either as $A = \langle a_0, a_1, a_2, a_3, a_4, \ldots \rangle$ when we want to<br />

emphasize the entire sequence<sup>2</sup>, or as $A = \langle a_i \rangle$ when we want to emphasize a generic element. For<br />

example, the Fibonacci sequence is $\langle 0, 1, 1, 2, 3, 5, 8, 13, 21, \ldots \rangle$.<br />

We can naturally define several sequence operators:<br />

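We can add or subtract any two sequences:<br />

$$\langle a_i \rangle + \langle b_i \rangle = \langle a_0, a_1, a_2, \ldots \rangle + \langle b_0, b_1, b_2, \ldots \rangle = \langle a_0 + b_0, a_1 + b_1, a_2 + b_2, \ldots \rangle = \langle a_i + b_i \rangle$$

$$\langle a_i \rangle - \langle b_i \rangle = \langle a_0, a_1, a_2, \ldots \rangle - \langle b_0, b_1, b_2, \ldots \rangle = \langle a_0 - b_0, a_1 - b_1, a_2 - b_2, \ldots \rangle = \langle a_i - b_i \rangle$$

We can multiply any sequence by a constant:<br />

$$c \cdot \langle a_i \rangle = c \cdot \langle a_0, a_1, a_2, \ldots \rangle = \langle c \cdot a_0, c \cdot a_1, c \cdot a_2, \ldots \rangle = \langle c \cdot a_i \rangle$$

We can shift any sequence to the left by removing its initial element:<br />

$$E\langle a_i \rangle = E\langle a_0, a_1, a_2, a_3, \ldots \rangle = \langle a_1, a_2, a_3, a_4, \ldots \rangle = \langle a_{i+1} \rangle$$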

Example: We can understand these operators better by looking at some specific examples, using<br />

the sequence T of powers of two.<br />

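$$\begin{aligned}
T &= \langle 2^0, 2^1, 2^2, 2^3, \ldots \rangle = \langle 2^i \rangle \\
ET &= \langle 2^1, 2^2, 2^3, 2^4, \ldots \rangle = \langle 2^{i+1} \rangle \\
2T &= \langle 2 \cdot 2^0, 2 \cdot 2^1, 2 \cdot 2^2, 2 \cdot 2^3, \ldots \rangle = \langle 2^1, 2^2, 2^3, 2^4, \ldots \rangle = \langle 2^{i+1} \rangle \\
2T - ET &= \langle 2^1 - 2^1, 2^2 - 2^2, 2^3 - 2^3, 2^4 - 2^4, \ldots \rangle = \langle 0, 0, 0, 0, \ldots \rangle = \langle 0 \rangle
\end{aligned}$$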
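The same operators are easy to play with in code. Here is a minimal sketch, assuming we model an infinite sequence as a Python function from an index to a value; the helper names (`shift`, `scale`, `subtract`, `prefix`) are mine, not notation from these notes.

```python
def shift(seq):
    """The operator E: (E a)_i = a_{i+1}."""
    return lambda i: seq(i + 1)

def scale(c, seq):
    """Multiply every element of the sequence by the constant c."""
    return lambda i: c * seq(i)

def subtract(a, b):
    """Elementwise difference of two sequences."""
    return lambda i: a(i) - b(i)

def prefix(seq, n=5):
    """The first n elements, for inspection."""
    return [seq(i) for i in range(n)]

T = lambda i: 2 ** i                       # the powers of two

print(prefix(T))                           # [1, 2, 4, 8, 16]
print(prefix(shift(T)))                    # [2, 4, 8, 16, 32]
print(prefix(scale(2, T)))                 # [2, 4, 8, 16, 32]
print(prefix(subtract(scale(2, T), shift(T))))  # [0, 0, 0, 0, 0]
```

Of course a finite prefix of zeros proves nothing about the whole sequence; it is only a sanity check on the algebra above.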

2.1 Properties of operators<br />

It turns out that the distributive property holds for these operators, so we can rewrite ET − 2T as<br />

(E − 2)T . Since (E − 2)T = 〈0, 0, 0, 0, . . .〉, we say that the operator (E − 2) annihilates T , and we<br />

call (E − 2) an annihilator of T . Obviously, we can trivially annihilate any sequence by multiplying<br />

it by zero, so as a technical matter, we do not consider multiplication by 0 to be an annihilator.<br />

What happens when we apply the operator (E − 3) to our sequence T ?<br />

$$(E - 3)T = ET - 3T = \langle 2^{i+1} \rangle - 3\langle 2^i \rangle = \langle 2^{i+1} - 3 \cdot 2^i \rangle = \langle -2^i \rangle = -T$$

The operator (E − 3) did very little to our sequence T ; it just flipped the sign of each number in<br />

the sequence. In fact, we will soon see that only (E − 2) will annihilate T , and all other simple<br />

operators will affect T in very minor ways. Thus, if we know how to annihilate the sequence, we<br />

know what the sequence must look like.<br />

<sup>2</sup> It really doesn’t matter whether we start a sequence with $a_0$ or $a_1$ or $a_5$ or even $a_{-17}$. Zero is often a convenient<br />

starting point for many recursively defined sequences, so we’ll usually start there.<br />

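In general, (E − c) annihilates any geometric sequence $A = \langle a_0, a_0 c, a_0 c^2, a_0 c^3, \ldots \rangle = \langle a_0 c^i \rangle$:<br />

$$(E - c)\langle a_0 c^i \rangle = E\langle a_0 c^i \rangle - c\langle a_0 c^i \rangle = \langle a_0 c^{i+1} \rangle - \langle c \cdot a_0 c^i \rangle = \langle a_0 c^{i+1} - a_0 c^{i+1} \rangle = \langle 0 \rangle$$

To see that this is the only operator of this form that annihilates A, let’s see the effect of operator<br />

(E − d) for some d ≠ c:<br />

$$(E - d)\langle a_0 c^i \rangle = E\langle a_0 c^i \rangle - d\langle a_0 c^i \rangle = \langle a_0 c^{i+1} - d a_0 c^i \rangle = \langle (c - d) a_0 c^i \rangle = (c - d)\langle a_0 c^i \rangle$$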

So we have a more rigorous confirmation that an annihilator annihilates exactly one type of sequence,<br />

but multiplies other similar sequences by a constant.<br />

We can use this fact about annihilators of geometric sequences to solve certain recurrences. For<br />

example, consider the sequence R = 〈r0, r1, r2, . . .〉 defined recursively as follows:<br />

r0 = 3<br />

ri+1 = 5ri<br />

We can easily prove that the operator (E − 5) annihilates R:<br />

(E − 5)〈ri〉 = E〈ri〉 − 5〈ri〉 = 〈ri+1〉 − 〈5ri〉 = 〈ri+1 − 5ri〉 = 〈0〉<br />

Since (E − 5) is an annihilator for R, we must have the closed form solution $r_i = r_0 5^i = 3 \cdot 5^i$. We<br />

can easily verify this by induction, as follows:<br />

$$\begin{aligned}
r_0 &= 3 \cdot 5^0 = 3 && \text{[definition]} \\
r_i &= 5 r_{i-1} && \text{[definition]} \\
&= 5 \cdot (3 \cdot 5^{i-1}) && \text{[induction hypothesis]} \\
&= 5^i \cdot 3 && \text{[algebra]}
\end{aligned}$$

2.2 Multiple operators<br />

An operator is a function that transforms one sequence into another. Like any other function, we can<br />

apply operators one after another to the same sequence. For example, we can multiply a sequence<br />

〈ai〉 by a constant d and then by a constant c, resulting in the sequence c(d〈ai〉) = 〈c·d·ai〉 = (cd)〈ai〉.<br />

Alternatively, we may multiply the sequence by a constant c and then shift it to the left to get<br />

E(c〈ai〉) = E〈c · ai〉 = 〈c · ai+1〉. This is exactly the same as applying the operators in the<br />

reverse order: c(E〈ai〉) = c〈ai+1〉 = 〈c · ai+1〉. We can also shift the sequence twice to the left:<br />

$E(E\langle a_i \rangle) = E\langle a_{i+1} \rangle = \langle a_{i+2} \rangle$. We will write this in shorthand as $E^2 \langle a_i \rangle$. More generally, the<br />

operator $E^k$ shifts a sequence k steps to the left: $E^k \langle a_i \rangle = \langle a_{i+k} \rangle$.<br />

We now have the tools to solve a whole host of recurrence problems. For example, what<br />

annihilates $C = \langle 2^i + 3^i \rangle$? Well, we know that (E − 2) annihilates $\langle 2^i \rangle$ while leaving $\langle 3^i \rangle$ essentially<br />

unscathed. Similarly, (E − 3) annihilates $\langle 3^i \rangle$ while leaving $\langle 2^i \rangle$ essentially unscathed. Thus, if we<br />

apply both operators one after the other, we see that (E − 2)(E − 3) annihilates our sequence C.<br />

In general, for any integers a ≠ b, the operator (E − a)(E − b) annihilates any sequence of the<br />

form $\langle c_1 a^i + c_2 b^i \rangle$ but nothing else. We will often ‘multiply out’ the operators into the shorthand<br />

notation $E^2 - (a + b)E + ab$. It is left as an exhilarating exercise to the student to verify that this<br />

shorthand actually makes sense: the operators (E − a)(E − b) and $E^2 - (a + b)E + ab$ have the<br />

same effect on every sequence.<br />
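Before doing the exhilarating exercise by hand, it may help to watch it work numerically. The sketch below again treats sequences as functions; `E_minus`, `compose`, and `quadratic` are hypothetical helper names introduced only for this illustration.

```python
def E_minus(c):
    """Return the operator (E - c), which maps <a_i> to <a_{i+1} - c * a_i>."""
    return lambda seq: (lambda i: seq(i + 1) - c * seq(i))

def compose(*ops):
    """compose(X, Y) applies Y first and then X, like the product XY."""
    def apply(seq):
        for op in reversed(ops):
            seq = op(seq)
        return seq
    return apply

def quadratic(a, b):
    """The multiplied-out operator E^2 - (a + b)E + ab."""
    return lambda seq: (lambda i: seq(i + 2) - (a + b) * seq(i + 1) + a * b * seq(i))

C = lambda i: 2 ** i + 3 ** i
S = lambda i: i ** 3 + 7            # an arbitrary test sequence

both = compose(E_minus(2), E_minus(3))
swapped = compose(E_minus(3), E_minus(2))

print([both(C)(i) for i in range(5)])          # [0, 0, 0, 0, 0]
print([E_minus(2)(C)(i) for i in range(5)])    # [1, 3, 9, 27, 81]: <3^i> survives
```

On the test sequence `S`, the three operators `both`, `swapped`, and `quadratic(2, 3)` produce the same values, which is what the shorthand claims. This checks a prefix only; the real argument is the algebra.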

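We now finally know enough to solve the recurrence for Fibonacci numbers. Specifically, notice<br />

that the recurrence $F_i = F_{i-1} + F_{i-2}$ is annihilated by $E^2 - E - 1$:<br />

$$\begin{aligned}
(E^2 - E - 1)\langle F_i \rangle &= E^2 \langle F_i \rangle - E\langle F_i \rangle - \langle F_i \rangle \\
&= \langle F_{i+2} \rangle - \langle F_{i+1} \rangle - \langle F_i \rangle \\
&= \langle F_{i+2} - F_{i+1} - F_i \rangle \\
&= \langle 0 \rangle
\end{aligned}$$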

Factoring $E^2 - E - 1$ using the quadratic formula, we obtain<br />

$$E^2 - E - 1 = (E - \phi)(E - \hat\phi)$$

where $\phi = (1 + \sqrt{5})/2 \approx 1.618034$ is the golden ratio and $\hat\phi = (1 - \sqrt{5})/2 = 1 - \phi = -1/\phi$. Thus,<br />

the operator $(E - \phi)(E - \hat\phi)$ annihilates the Fibonacci sequence, so $F_i$ must have the form<br />

$$F_i = c\phi^i + \hat{c}\hat\phi^i$$

for some constants $c$ and $\hat{c}$. We call this the generic solution to the recurrence, since it doesn’t<br />

depend at all on the base cases. To compute the constants $c$ and $\hat{c}$, we use the base cases $F_0 = 0$<br />

and $F_1 = 1$ to obtain a pair of linear equations:<br />

$$\begin{aligned}
F_0 &= 0 = c + \hat{c} \\
F_1 &= 1 = c\phi + \hat{c}\hat\phi
\end{aligned}$$

Solving this system of equations gives us $c = 1/(2\phi - 1) = 1/\sqrt{5}$ and $\hat{c} = -1/\sqrt{5}$.<br />

We now have a closed-form expression for the ith Fibonacci number:<br />

$$F_i = \frac{\phi^i - \hat\phi^i}{\sqrt{5}} = \frac{1}{\sqrt{5}}\left(\frac{1 + \sqrt{5}}{2}\right)^i - \frac{1}{\sqrt{5}}\left(\frac{1 - \sqrt{5}}{2}\right)^i$$

With all the square roots in this formula, it’s quite amazing that Fibonacci numbers are integers.<br />

However, if we do all the math correctly, all the square roots cancel out when i is an integer. (In<br />

fact, this is pretty easy to prove using the binomial theorem.)<br />
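To watch the square roots cancel without trusting floating point, we can do the arithmetic exactly. The sketch below is an illustration of mine, not the binomial-theorem proof: it assumes we store a number of the form a + b√5 as a pair of fractions (a, b), and the helper names are invented for the example.

```python
from fractions import Fraction

def fib_iter(i):
    """F_i straight from the recurrence, with F_0 = 0 and F_1 = 1."""
    a, b = 0, 1
    for _ in range(i):
        a, b = b, a + b
    return a

def mul(x, y):
    """Multiply a + b*sqrt(5) by c + d*sqrt(5); numbers are pairs (a, b)."""
    (a, b), (c, d) = x, y
    return (a * c + 5 * b * d, a * d + b * c)

def power(x, i):
    """x raised to the i-th power by repeated multiplication."""
    result = (Fraction(1), Fraction(0))
    for _ in range(i):
        result = mul(result, x)
    return result

def fib_closed(i):
    """Evaluate (phi^i - phi_hat^i) / sqrt(5) exactly."""
    half = Fraction(1, 2)
    p = power((half, half), i)       # phi^i
    q = power((half, -half), i)      # phi_hat^i
    rational, root = p[0] - q[0], p[1] - q[1]
    assert rational == 0             # the rational parts cancel
    return root                      # (root * sqrt(5)) / sqrt(5)

print(fib_closed(10), fib_iter(10))  # 55 55
```

The difference φ^i − φ̂^i is always a pure multiple of √5, so dividing by √5 leaves a rational number, and that number turns out to be the integer F_i.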

2.3 Degenerate cases<br />

We can’t quite solve every recurrence yet. In our above formulation of (E − a)(E − b), we assumed<br />

that a ≠ b. What about the operator $(E - a)(E - a) = (E - a)^2$? It turns out that this operator<br />

annihilates sequences such as $\langle i a^i \rangle$:<br />

$$\begin{aligned}
(E - a)\langle i a^i \rangle &= \langle (i + 1)a^{i+1} - a \cdot i a^i \rangle \\
&= \langle (i + 1)a^{i+1} - i a^{i+1} \rangle \\
&= \langle a^{i+1} \rangle \\
(E - a)^2 \langle i a^i \rangle &= (E - a)\langle a^{i+1} \rangle = \langle 0 \rangle
\end{aligned}$$

More generally, the operator $(E - a)^k$ annihilates any sequence $\langle p(i) \cdot a^i \rangle$, where $p(i)$ is any<br />

polynomial in i of degree k − 1. As an example, $(E - 1)^3$ annihilates the sequence $\langle i^2 \cdot 1^i \rangle = \langle i^2 \rangle =$<br />

$\langle 0, 1, 4, 9, 16, 25, \ldots \rangle$, since $p(i) = i^2$ is a polynomial of degree k − 1 = 2.<br />

As a review, try to explain the following statements:<br />


• $(E - 1)$ annihilates any constant sequence $\langle \alpha \rangle$.<br />

• $(E - 1)^2$ annihilates any arithmetic sequence $\langle \alpha + \beta i \rangle$.<br />

• $(E - 1)^3$ annihilates any quadratic sequence $\langle \alpha + \beta i + \gamma i^2 \rangle$.<br />

• $(E - 3)(E - 2)(E - 1)$ annihilates any sequence $\langle \alpha + \beta 2^i + \gamma 3^i \rangle$.<br />

• $(E - 3)^2 (E - 2)(E - 1)$ annihilates any sequence $\langle \alpha + \beta 2^i + \gamma 3^i + \delta i 3^i \rangle$.<br />
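Explaining these statements is the real exercise, but a quick numerical check can catch a wrong guess. In the sketch below (my own, with arbitrarily chosen constants), a sequence is cut down to a finite prefix and each factor (E − r) is applied in turn; `apply_factor` and `annihilates` are hypothetical names.

```python
def apply_factor(c, values):
    """Apply (E - c) to a finite prefix; the result is one element shorter."""
    return [nxt - c * cur for cur, nxt in zip(values, values[1:])]

def annihilates(roots, seq, n=12):
    """True if the product of (E - r) over `roots` zeroes out a prefix of seq."""
    values = [seq(i) for i in range(n + len(roots))]
    for r in roots:
        values = apply_factor(r, values)
    return all(v == 0 for v in values)

print(annihilates([1], lambda i: 7))                                   # True
print(annihilates([1, 1], lambda i: 4 + 3 * i))                        # True
print(annihilates([1, 1, 1], lambda i: 4 + 3 * i + 5 * i ** 2))        # True
print(annihilates([3, 2, 1], lambda i: 4 + 5 * 2 ** i - 2 * 3 ** i))   # True
print(annihilates([3, 2, 1], lambda i: i * 3 ** i))                    # False
```

The last line shows why the degenerate case needs the squared factor: one copy of (E − 3) is not enough to kill ⟨i·3^i⟩, while the roots `[3, 3, 2, 1]` are.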

2.4 Summary<br />

In summary, we have learned several operators that act on sequences, as well as a few ways of<br />

combining operators.<br />

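$$\begin{array}{ll}
\textbf{Operator} & \textbf{Definition} \\
\text{Addition} & \langle a_i \rangle + \langle b_i \rangle = \langle a_i + b_i \rangle \\
\text{Subtraction} & \langle a_i \rangle - \langle b_i \rangle = \langle a_i - b_i \rangle \\
\text{Scalar multiplication} & c\langle a_i \rangle = \langle c a_i \rangle \\
\text{Shift} & E\langle a_i \rangle = \langle a_{i+1} \rangle \\
\text{Composition of operators} & (X + Y)\langle a_i \rangle = X\langle a_i \rangle + Y\langle a_i \rangle \\
 & (X - Y)\langle a_i \rangle = X\langle a_i \rangle - Y\langle a_i \rangle \\
 & XY\langle a_i \rangle = X(Y\langle a_i \rangle) = Y(X\langle a_i \rangle) \\
k\text{-fold shift} & E^k \langle a_i \rangle = \langle a_{i+k} \rangle
\end{array}$$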

Notice that we have not defined a multiplication operator for two sequences. This is usually<br />

accomplished by convolution:<br />

$$\langle a_i \rangle * \langle b_i \rangle = \left\langle \sum_{j=0}^{i} a_j b_{i-j} \right\rangle.$$

Fortunately, convolution is unnecessary for solving the recurrences we will see in this course.<br />
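For the curious, here is what the convolution formula computes on finite prefixes. This is only a sketch for illustration; the function name `convolve` and the example inputs are my own choices.

```python
def convolve(a, b):
    """Term i of the result is the sum of a[j] * b[i - j] for j = 0..i."""
    n = min(len(a), len(b))
    return [sum(a[j] * b[i - j] for j in range(i + 1)) for i in range(n)]

ones = [1, 1, 1, 1, 1]
powers = [1, 2, 4, 8, 16]

print(convolve(ones, ones))     # [1, 2, 3, 4, 5]
print(convolve(powers, ones))   # [1, 3, 7, 15, 31]
```

Convolving with the all-ones sequence produces running sums, which is one reason convolution shows up when sequences are multiplied as power series.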

We have also learned some things about annihilators, which can be summarized as follows:<br />

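$$\begin{array}{ll}
\textbf{Sequence} & \textbf{Annihilator} \\
\langle \alpha \rangle & E - 1 \\
\langle \alpha a^i \rangle & E - a \\
\langle \alpha a^i + \beta b^i \rangle & (E - a)(E - b) \\
\langle \alpha_0 a_0^i + \alpha_1 a_1^i + \cdots + \alpha_n a_n^i \rangle & (E - a_0)(E - a_1) \cdots (E - a_n) \\
\langle \alpha i + \beta \rangle & (E - 1)^2 \\
\langle (\alpha i + \beta) a^i \rangle & (E - a)^2 \\
\langle (\alpha i + \beta) a^i + \gamma b^i \rangle & (E - a)^2 (E - b) \\
\langle (\alpha_0 + \alpha_1 i + \cdots + \alpha_{n-1} i^{n-1}) a^i \rangle & (E - a)^n
\end{array}$$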

If X annihilates 〈ai〉, then X also annihilates c〈ai〉 for any constant c.<br />

If X annihilates 〈ai〉 and Y annihilates 〈bi〉, then XY annihilates 〈ai〉 ± 〈bi〉.<br />

3 Solving Linear Recurrences<br />

3.1 Homogeneous Recurrences<br />

The general expressions in the annihilator box above are really the most important things to<br />

remember about annihilators because they help you to solve any recurrence for which you can<br />

write down an annihilator. The general method is:<br />


1. Write down the annihilator for the recurrence<br />

2. Factor the annihilator<br />

3. Determine the sequence annihilated by each factor<br />

4. Add these sequences together to form the generic solution<br />

5. Solve for constants of the solution by using initial conditions<br />

Example: Let’s show the steps required to solve the following recurrence:<br />

r0 = 1<br />

r1 = 5<br />

r2 = 17<br />

ri = 7ri−1 − 16ri−2 + 12ri−3<br />

1. Write down the annihilator. Since $r_{i+3} - 7r_{i+2} + 16r_{i+1} - 12r_i = 0$, the annihilator is $E^3 - 7E^2 + 16E - 12$.<br />

2. Factor the annihilator. $E^3 - 7E^2 + 16E - 12 = (E - 2)^2 (E - 3)$.<br />

3. Determine sequences annihilated by each factor. $(E - 2)^2$ annihilates $\langle (\alpha i + \beta) 2^i \rangle$ for any<br />

constants α and β, and (E − 3) annihilates $\langle \gamma 3^i \rangle$ for any constant γ.<br />

4. Combine the sequences. $(E - 2)^2 (E - 3)$ annihilates $\langle (\alpha i + \beta) 2^i + \gamma 3^i \rangle$ for any constants<br />

α, β, γ.<br />

5. Solve for the constants. The base cases give us three equations in the three unknowns α, β, γ:<br />

$$\begin{aligned}
r_0 &= 1 = (\alpha \cdot 0 + \beta) 2^0 + \gamma \cdot 3^0 = \beta + \gamma \\
r_1 &= 5 = (\alpha \cdot 1 + \beta) 2^1 + \gamma \cdot 3^1 = 2\alpha + 2\beta + 3\gamma \\
r_2 &= 17 = (\alpha \cdot 2 + \beta) 2^2 + \gamma \cdot 3^2 = 8\alpha + 4\beta + 9\gamma
\end{aligned}$$

We can solve these equations to get α = 1, β = 0, γ = 1. Thus, our final solution is<br />

$r_i = i 2^i + 3^i$, which we can verify by induction.<br />
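Step 5 is plain linear algebra, so it can be mechanized. The sketch below assumes a small hand-rolled exact Gauss-Jordan solver (`solve` is a hypothetical helper, not a library call) and then compares the resulting closed form against the recurrence itself.

```python
from fractions import Fraction

def solve(matrix, rhs):
    """Solve a small square linear system exactly by Gauss-Jordan elimination."""
    n = len(rhs)
    rows = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(matrix, rhs)]
    for col in range(n):
        # Find a row with a nonzero entry in this column and move it into place.
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        rows[col] = [x / rows[col][col] for x in rows[col]]
        for r in range(n):
            if r != col:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    return [row[n] for row in rows]

# Generic solution (alpha*i + beta) * 2^i + gamma * 3^i, evaluated at i = 0, 1, 2.
basis = [lambda i: i * 2 ** i, lambda i: 2 ** i, lambda i: 3 ** i]
alpha, beta, gamma = solve([[f(i) for f in basis] for i in range(3)], [1, 5, 17])

def r_closed(i):
    return (alpha * i + beta) * 2 ** i + gamma * 3 ** i

def r_rec(n):
    """r_n computed directly from r_i = 7 r_{i-1} - 16 r_{i-2} + 12 r_{i-3}."""
    r = [1, 5, 17]
    for i in range(3, n + 1):
        r.append(7 * r[i - 1] - 16 * r[i - 2] + 12 * r[i - 3])
    return r[n]

print(alpha, beta, gamma)        # 1 0 1
print(r_closed(3), r_rec(3))     # 51 51
```

Agreement on a prefix is not a proof, but it is a cheap way to catch a wrong factorization or a sign error before attempting the induction.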

3.2 Non-homogeneous Recurrences<br />

A height balanced tree is a binary tree, where the heights of the two subtrees of the root differ by<br />

at most one, and both subtrees are also height balanced. To ground the recursive definition, the<br />

empty set is considered a height balanced tree of height −1, and a single node is a height balanced<br />

tree of height 0.<br />

Let Tn be the smallest height-balanced tree of height n—how many nodes does Tn have? Well,<br />

one of the subtrees of Tn has height n − 1 (since Tn has height n) and the other has height either<br />

n − 1 or n − 2 (since Tn is height-balanced and as small as possible). Since both subtrees are<br />

themselves height-balanced, the two subtrees must be Tn−1 and Tn−2.<br />

We have just derived the following recurrence for tn, the number of nodes in the tree Tn:<br />

t−1 = 0 [the empty set]<br />

t0 = 1 [a single node]<br />

tn = tn−1 + tn−2 + 1<br />


The final ‘+1’ is for the root of Tn.<br />

We refer to the terms in the equation involving ti’s as the homogeneous terms and the rest<br />

as the non-homogeneous terms. (If there were no non-homogeneous terms, we would say that the<br />

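recurrence itself is homogeneous.) We know that $E^2 - E - 1$ annihilates the homogeneous part<br />

$t_n = t_{n-1} + t_{n-2}$. Let us try applying this annihilator to the entire equation:<br />

$$\begin{aligned}
(E^2 - E - 1)\langle t_i \rangle &= E^2 \langle t_i \rangle - E\langle t_i \rangle - 1\langle t_i \rangle \\
&= \langle t_{i+2} \rangle - \langle t_{i+1} \rangle - \langle t_i \rangle \\
&= \langle t_{i+2} - t_{i+1} - t_i \rangle \\
&= \langle 1 \rangle
\end{aligned}$$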

The leftover sequence 〈1, 1, 1, . . .〉 is called the residue. To obtain the annihilator for the entire<br />

recurrence, we compose the annihilator for its homogeneous part with the annihilator of its residue.<br />

Since E − 1 annihilates 〈1〉, it follows that $(E^2 - E - 1)(E - 1)$ annihilates $\langle t_n \rangle$. We can factor the<br />

annihilator into<br />

$$(E - \phi)(E - \hat\phi)(E - 1),$$

so our annihilator rules tell us that<br />

$$t_n = \alpha\phi^n + \beta\hat\phi^n + \gamma$$

for some constants α, β, γ. We call this the generic solution to the recurrence. Different recurrences<br />

can have the same generic solution.<br />

To solve for the unknown constants, we need three equations in three unknowns. Our base cases<br />

give us two equations, and we can get a third by examining the next nontrivial case $t_1 = 2$:<br />

$$\begin{aligned}
t_{-1} &= 0 = \alpha\phi^{-1} + \beta\hat\phi^{-1} + \gamma = \alpha/\phi + \beta/\hat\phi + \gamma \\
t_0 &= 1 = \alpha\phi^0 + \beta\hat\phi^0 + \gamma = \alpha + \beta + \gamma \\
t_1 &= 2 = \alpha\phi^1 + \beta\hat\phi^1 + \gamma = \alpha\phi + \beta\hat\phi + \gamma
\end{aligned}$$

Solving these equations, we find that $\alpha = \frac{\sqrt{5}+2}{\sqrt{5}}$, $\beta = \frac{\sqrt{5}-2}{\sqrt{5}}$, and $\gamma = -1$. Thus,<br />

$$t_n = \frac{\sqrt{5}+2}{\sqrt{5}}\left(\frac{1+\sqrt{5}}{2}\right)^n + \frac{\sqrt{5}-2}{\sqrt{5}}\left(\frac{1-\sqrt{5}}{2}\right)^n - 1$$

Here is the general method for non-homogeneous recurrences:<br />

1. Write down the homogeneous annihilator, directly from the recurrence<br />

1½. ‘Multiply’ by the annihilator for the residue<br />

2. Factor the annihilator<br />

3. Determine what sequence each factor annihilates<br />

4. Add these sequences together to form the generic solution<br />

5. Solve for constants of the solution by using initial conditions<br />
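The steps above can be traced numerically on the height-balanced tree recurrence. This sketch is mine: it works on a finite prefix of ⟨t_n⟩, uses floating point for the closed form (so it rounds before comparing), and the helper names are invented for the example.

```python
from math import sqrt

def t_prefix(n_max):
    """Return [t_{-1}, t_0, ..., t_{n_max}] from t_n = t_{n-1} + t_{n-2} + 1."""
    t = [0, 1]                        # t_{-1} = 0 and t_0 = 1
    while len(t) < n_max + 2:
        t.append(t[-1] + t[-2] + 1)
    return t

def apply_fib(values):
    """Apply the homogeneous annihilator E^2 - E - 1 to a finite prefix."""
    return [c - b - a for a, b, c in zip(values, values[1:], values[2:])]

def apply_factor(c, values):
    """Apply (E - c) to a finite prefix."""
    return [nxt - c * cur for cur, nxt in zip(values, values[1:])]

PHI = (1 + sqrt(5)) / 2
PHI_HAT = (1 - sqrt(5)) / 2
ALPHA = (sqrt(5) + 2) / sqrt(5)
BETA = (sqrt(5) - 2) / sqrt(5)

def t_closed(n):
    """The closed form from the text, evaluated in floating point."""
    return ALPHA * PHI ** n + BETA * PHI_HAT ** n - 1

t = t_prefix(12)
residue = apply_fib(t)                # step 1 leaves the residue <1, 1, 1, ...>
killed = apply_factor(1, residue)     # step 1 1/2: (E - 1) kills the residue

print(t[:6])         # [0, 1, 2, 4, 7, 12]
print(residue[:4])   # [1, 1, 1, 1]
print(killed[:4])    # [0, 0, 0, 0]
```

Note that index 0 of the list `t` holds t₋₁, so `t[n + 1]` is the value to compare against `round(t_closed(n))`.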


3.3 Some more examples<br />

In each example below, we use the base cases a0 = 0 and a1 = 1.<br />

$a_n = a_{n-1} + a_{n-2} + 2$<br />

– The homogeneous annihilator is $E^2 - E - 1$.<br />

– The residue is the constant sequence 〈2, 2, 2, . . .〉, which is annihilated by E − 1.<br />

– Thus, the annihilator is $(E^2 - E - 1)(E - 1)$.<br />

– The annihilator factors into $(E - \phi)(E - \hat\phi)(E - 1)$.<br />

– Thus, the generic solution is $a_n = \alpha\phi^n + \beta\hat\phi^n + \gamma$.<br />

– The constants α, β, γ satisfy the equations<br />

$$\begin{aligned}
a_0 &= 0 = \alpha + \beta + \gamma \\
a_1 &= 1 = \alpha\phi + \beta\hat\phi + \gamma \\
a_2 &= 3 = \alpha\phi^2 + \beta\hat\phi^2 + \gamma
\end{aligned}$$

– Solving the equations gives us $\alpha = \frac{\sqrt{5}+2}{\sqrt{5}}$, $\beta = \frac{\sqrt{5}-2}{\sqrt{5}}$, and $\gamma = -2$.<br />

– So the final solution is<br />

$$a_n = \frac{\sqrt{5}+2}{\sqrt{5}}\left(\frac{1+\sqrt{5}}{2}\right)^n + \frac{\sqrt{5}-2}{\sqrt{5}}\left(\frac{1-\sqrt{5}}{2}\right)^n - 2$$

(In the remaining examples, I won’t explicitly enumerate the steps like this.)<br />

$a_n = a_{n-1} + a_{n-2} + 3$<br />

The homogeneous annihilator $(E^2 - E - 1)$ leaves a constant residue 〈3, 3, 3, . . .〉, so the<br />

annihilator is $(E^2 - E - 1)(E - 1)$, and the generic solution is $a_n = \alpha\phi^n + \beta\hat\phi^n + \gamma$. Solving<br />

the equations<br />

$$\begin{aligned}
a_0 &= 0 = \alpha + \beta + \gamma \\
a_1 &= 1 = \alpha\phi + \beta\hat\phi + \gamma \\
a_2 &= 4 = \alpha\phi^2 + \beta\hat\phi^2 + \gamma
\end{aligned}$$

gives us the final solution<br />

$$a_n = \frac{3+\sqrt{5}}{2}\left(\frac{1+\sqrt{5}}{2}\right)^n + \frac{3-\sqrt{5}}{2}\left(\frac{1-\sqrt{5}}{2}\right)^n - 3$$

$a_n = a_{n-1} + a_{n-2} + 2^n$<br />

The homogeneous annihilator $(E^2 - E - 1)$ leaves an exponential residue $\langle 4, 8, 16, 32, \ldots \rangle = \langle 2^{i+2} \rangle$,<br />

which is annihilated by E − 2. Thus, the annihilator is $(E^2 - E - 1)(E - 2)$, and<br />

the generic solution is $a_n = \alpha\phi^n + \beta\hat\phi^n + \gamma 2^n$. The constants α, β, γ satisfy the following<br />

equations:<br />

$$\begin{aligned}
a_0 &= 0 = \alpha + \beta + \gamma \\
a_1 &= 1 = \alpha\phi + \beta\hat\phi + 2\gamma \\
a_2 &= 5 = \alpha\phi^2 + \beta\hat\phi^2 + 4\gamma
\end{aligned}$$


an = an−1 + an−2 + n<br />

The homogeneous annihilator (E 2 − E − 1) leaves a linear residue 〈2, 3, 4, 5 . . .〉 = 〈i + 2〉,<br />

which is annihilated by (E − 1) 2 . Thus, the annihilator is (E 2 − E − 1)(E − 1) 2 , and the<br />

generic solution is an = αφ n + β ˆ φ n + γ + δn. The constants α, β, γ, δ satisfy the following<br />

equations:<br />

an = an−1 + an−2 + n 2<br />

a0 = 0 = α + β + γ<br />

a1 = 1 = αφ + β ˆ φ + γ + δ<br />

a2 = 3 = αφ 2 + β ˆ φ 2 + γ + 2δ<br />

a3 = 7 = αφ 3 + β ˆ φ 3 + γ + 3δ<br />

The homogeneous annihilator (E 2 − E − 1) leaves a quadratic residue 〈4, 9, 16, 25 . . .〉 =<br />

〈(i + 2) 2 〉, which is annihilated by (E − 1) 3 . Thus, the annihilator is (E 2 − E − 1)(E − 1) 3 ,<br />

and the generic solution is an = αφ n + β ˆ φ n + γ + δn + εn 2 . The constants α, β, γ, δ, ε satisfy<br />

the following equations:<br />

an = an−1 + an−2 + n 2 − 2 n<br />

a0 = 0 = α + β + γ<br />

a1 = 1 = αφ + β ˆ φ + γ + δ + ε<br />

a2 = 5 = αφ 2 + β ˆ φ 2 + γ + 2δ + 4ε<br />

a3 = 15 = αφ 3 + β ˆ φ 3 + γ + 3δ + 9ε<br />

a4 = 36 = αφ 4 + β ˆ φ 4 + γ + 4δ + 16ε<br />

The homogeneous annihilator (E 2 − E − 1) leaves the residue 〈(i + 2) 2 − 2 i−2 〉. The quadratic<br />

part of the residue is annihilated by (E − 1) 3 , and the exponential part is annihilated by<br />

(E − 2). Thus, the annihilator for the whole recurrence is (E 2 − E − 1)(E − 1) 3 (E − 2), and<br />

so the generic solution is an = αφ n + β ˆ φ n + γ + δn + εn 2 + η2 i . The constants α, β, γ, δ, ε, η<br />

satisfy a system of six equations in six unknowns determined by a0, a1, . . . , a5.<br />

$a_n = a_{n-1} + a_{n-2} + \phi^n$

The annihilator is $(E^2 - E - 1)(E - \phi) = (E - \phi)^2 (E - \hat\phi)$, so the generic solution is $a_n = \alpha\phi^n + \beta n\phi^n + \gamma\hat\phi^n$. (Other recurrence solving methods will have an “interference” problem with this equation, while the operator method does not.)

Our method does not work on recurrences like $a_n = a_{n-1} + \frac{1}{n}$ or $a_n = a_{n-1} + \lg n$, because the functions $\frac{1}{n}$ and $\lg n$ do not have annihilators. Our tool, as it stands, is limited to linear recurrences.
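The annihilator claims in the examples above can be checked mechanically. The following Python sketch is an illustration only, not part of the original method; the helper names `sequence`, `fib_operator`, and `shift_minus` are made up here. It builds each sequence from the base cases $a_0 = 0$, $a_1 = 1$ and applies the operators to confirm that the residue is annihilated.

```python
def sequence(forcing, length):
    """First `length` terms of a_n = a_{n-1} + a_{n-2} + forcing(n), with a_0 = 0, a_1 = 1."""
    a = [0, 1]
    for n in range(2, length):
        a.append(a[n - 1] + a[n - 2] + forcing(n))
    return a


def fib_operator(a):
    """Apply (E^2 - E - 1): the i-th output is a[i+2] - a[i+1] - a[i]."""
    return [a[i + 2] - a[i + 1] - a[i] for i in range(len(a) - 2)]


def shift_minus(a, c):
    """Apply (E - c): the i-th output is a[i+1] - c * a[i]."""
    return [a[i + 1] - c * a[i] for i in range(len(a) - 1)]


# a_n = a_{n-1} + a_{n-2} + n: the residue <i + 2> is killed by (E - 1)^2.
linear = sequence(lambda n: n, 12)
linear_residue = fib_operator(linear)
linear_killed = shift_minus(shift_minus(linear_residue, 1), 1)

# a_n = a_{n-1} + a_{n-2} + n^2 - 2^n: the residue is killed by (E - 1)^3 (E - 2).
mixed = sequence(lambda n: n * n - 2 ** n, 14)
mixed_residue = fib_operator(mixed)
mixed_killed = mixed_residue
for c in (1, 1, 1, 2):
    mixed_killed = shift_minus(mixed_killed, c)
```

If the annihilators are right, `linear_killed` and `mixed_killed` contain only zeros.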

4 Divide and Conquer Recurrences and the Master Theorem<br />

Divide and conquer algorithms often give us running-time recurrences of the form<br />

T (n) = a T (n/b) + f(n) (1)<br />

where a and b are constants and f(n) is some other function. The so-called ‘Master Theorem’ gives us a general method for solving such recurrences when f(n) is a simple polynomial.


Unfortunately, the Master Theorem doesn’t work for all functions f(n), and many useful recurrences<br />

don’t look like (1) at all. Fortunately, there’s a general technique to solve most divide-and-conquer

recurrences, even if they don’t have this form. This technique is used to prove the Master<br />

Theorem, so if you remember this technique, you can forget the Master Theorem entirely (which<br />

is what I did). Throw off your chains!<br />

I’ll illustrate the technique using the generic recurrence (1). We start by drawing a recursion<br />

tree. The root of the recursion tree is a box containing the value f(n); it has a children, each of which is the root of a recursion tree for T (n/b). Equivalently, a recursion tree is a complete a-ary tree where each node at depth i contains the value $f(n/b^i)$, so the nodes at depth i sum to $a^i f(n/b^i)$. The recursion stops when we

get to the base case(s) of the recurrence. Since we’re looking for asymptotic bounds, it turns out<br />

not to matter much what we use for the base case; for purposes of illustration, I’ll assume that<br />

T (1) = f(1).<br />

[Figure: A recursion tree for the recurrence T (n) = a T (n/b) + f(n). The root holds f(n) and has a children, each holding f(n/b); the level sums, from top to bottom, are $f(n)$, $a f(n/b)$, $a^2 f(n/b^2)$, $a^3 f(n/b^3)$, ..., $a^L f(1)$.]

Now T (n) is just the sum of all values stored in the tree. Assuming that each level of the tree<br />

is full, we have<br />

$$T(n) = f(n) + a f(n/b) + a^2 f(n/b^2) + \cdots + a^i f(n/b^i) + \cdots + a^L f(n/b^L)$$

where L is the depth of the recursion tree. We easily see that $L = \log_b n$, since $n/b^L = 1$. Since $f(1) = \Theta(1)$, the last non-zero term in the summation is $\Theta(a^L) = \Theta(a^{\log_b n}) = \Theta(n^{\log_b a})$.
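As a sanity check on the level-sum formula, here is a small Python sketch (the function names are illustrative, and it assumes n is an exact power of the integer b and that T(1) = f(1), as in the text). It evaluates the recurrence directly and also lists the level sums, so the two can be compared.

```python
def solve_recursively(a, b, f, n):
    """Evaluate T(n) = a * T(n/b) + f(n) with T(1) = f(1); n must be a power of b."""
    if n == 1:
        return f(1)
    return a * solve_recursively(a, b, f, n // b) + f(n)


def level_sums(a, b, f, n):
    """Return [a^i * f(n / b^i) for i = 0 .. L], where n = b^L."""
    sums = []
    weight = 1  # a^i, the number of nodes at the current depth
    while True:
        sums.append(weight * f(n))
        if n == 1:
            return sums
        n //= b
        weight *= a


# Mergesort-style recurrence: every level sums to n.
mergesort_levels = level_sums(2, 2, lambda m: m, 16)
# Karatsuba-style recurrence: level sums grow by a factor of 3/2.
karatsuba_levels = level_sums(3, 2, lambda m: m, 8)
```

For n = 16 the mergesort levels are five copies of 16, and in both cases the levels add up to the value returned by `solve_recursively`.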

Now we can easily state and prove the Master Theorem, in a slightly different form than it’s<br />

usually stated.<br />

The Master Theorem. The recurrence T (n) = aT (n/b) + f(n) can be solved as follows.<br />

If $a f(n/b) = \kappa f(n)$ for some constant $\kappa < 1$, then $T(n) = \Theta(f(n))$.

If $a f(n/b) = K f(n)$ for some constant $K > 1$, then $T(n) = \Theta(n^{\log_b a})$.

If $a f(n/b) = f(n)$, then $T(n) = \Theta(f(n) \log_b n)$.

Proof: If f(n) is a constant factor larger than a f(n/b), then by induction, the sum is a descending geometric series. The sum of any geometric series is a constant times its largest term. In this case, the largest term is the first term f(n).

If f(n) is a constant factor smaller than a f(n/b), then by induction, the sum is an ascending geometric series. The sum of any geometric series is a constant times its largest term. In this case, this is the last term, which by our earlier argument is $\Theta(n^{\log_b a})$.


Finally, if a f(n/b) = f(n), then by induction, each of the L + 1 terms in the summation is

equal to f(n). <br />

Here are a few canonical examples of the Master Theorem in action:<br />

Randomized selection: T (n) = T (3n/4) + n<br />

Here a f(n/b) = 3n/4 is smaller than f(n) = n by a factor of 4/3, so T (n) = Θ(n)<br />

Karatsuba’s multiplication algorithm: T (n) = 3T (n/2) + n<br />

Here a f(n/b) = 3n/2 is bigger than f(n) = n by a factor of 3/2, so $T(n) = \Theta(n^{\log_2 3})$

Mergesort: T (n) = 2T (n/2) + n<br />

Here a f(n/b) = f(n), so T (n) = Θ(n log n)<br />

T (n) = 4T (n/2) + n lg n<br />

In this case, we have a f(n/b) = 2n lg n−2n, which is not quite twice f(n) = n lg n. However,<br />

for sufficiently large n (which is all we care about with asymptotic bounds) we have 2f(n) ><br />

a f(n/b) > 1.9f(n). Since the level sums are bounded both above and below by ascending<br />

geometric series, the solution is $T(n) = \Theta(n^{\log_2 4}) = \Theta(n^2)$. (This trick will not work in the

second or third cases of the Master Theorem!)<br />
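The comparison between a f(n/b) and f(n) in these examples is easy to tabulate. The Python sketch below uses a hypothetical helper, `level_ratio`, that evaluates the ratio at a single large n. It is only a numerical hint about which case applies, not a proof.

```python
import math


def level_ratio(a, b, f, n):
    """Ratio a * f(n/b) / f(n) between the second and first level sums."""
    return a * f(n / b) / f(n)


n = 2.0 ** 40
selection = level_ratio(1, 4 / 3, lambda m: m, n)           # T(n) = T(3n/4) + n
karatsuba = level_ratio(3, 2, lambda m: m, n)               # T(n) = 3T(n/2) + n
mergesort = level_ratio(2, 2, lambda m: m, n)               # T(n) = 2T(n/2) + n
n_lg_n = level_ratio(4, 2, lambda m: m * math.log2(m), n)   # T(n) = 4T(n/2) + n lg n
```

A ratio below 1 suggests a descending series, a ratio above 1 an ascending one, and a ratio of exactly 1 equal level sums. For the last example the ratio stays strictly between 1.9 and 2 at this n, matching the bound used in the text.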

Using the same recursion-tree technique, we can also solve recurrences where the Master Theorem<br />

doesn’t apply.<br />

T (n) = 2T (n/2) + n/ lg n<br />

We can’t apply the Master Theorem here, because a f(n/b) = n/(lg n − 1) isn’t equal to<br />

f(n) = n/ lg n, but the difference isn’t a constant factor. So we need to compute each of the<br />

level sums and compute their total in some other way. It’s not hard to see that the sum of<br />

all the nodes in the ith level is n/(lg n − i). In particular, this means the depth of the tree is<br />

at most lg n − 1.<br />

$$T(n) = \sum_{i=0}^{\lg n - 1} \frac{n}{\lg n - i} = \sum_{j=1}^{\lg n} \frac{n}{j} = n H_{\lg n} = \Theta(n \lg\lg n)$$
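The re-indexing step in this sum (substituting j = lg n − i) can be confirmed with exact arithmetic. This Python sketch assumes n = 2^k so that lg n is an integer; the function names are illustrative.

```python
from fractions import Fraction


def level_sum_total(k):
    """Sum of n / (lg n - i) for i = 0 .. lg n - 1, where n = 2^k."""
    n = 2 ** k
    return sum(Fraction(n, k - i) for i in range(k))


def harmonic(k):
    """The k-th harmonic number H_k = 1 + 1/2 + ... + 1/k."""
    return sum(Fraction(1, j) for j in range(1, k + 1))
```

For every k, `level_sum_total(k)` equals `2 ** k * harmonic(k)`, which is the identity $T(n) = n H_{\lg n}$.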

Randomized quicksort: T (n) = T (3n/4) + T (n/4) + n<br />

In this case, nodes in the same level of the recursion tree have different values. This makes<br />

the tree lopsided; different leaves are at different levels. However, it’s not too hard to see that the nodes in any complete level (i.e., above any of the leaves) sum to n, so this is like the last case of the Master Theorem, and that every leaf has depth between $\log_4 n$ and $\log_{4/3} n$. To derive an upper bound, we overestimate T (n) by ignoring the base cases and extending the tree downward to the level of the deepest leaf. Similarly, to derive a lower bound, we underestimate T (n) by counting only nodes in the tree up to the level of the shallowest leaf. These observations give us the upper and lower bounds $n \log_4 n \le T(n) \le n \log_{4/3} n$. Since these bounds differ by only a constant factor, we have $T(n) = \Theta(n \log n)$.


Deterministic selection: T (n) = T (n/5) + T (7n/10) + n<br />

Again, we have a lopsided recursion tree. If we look only at complete levels of the tree, we<br />

find that the level sums form a descending geometric series T (n) = n+9n/10+81n/100+· · · ,<br />

so this is like the first case of the master theorem. We can get an upper bound by ignoring<br />

the base cases entirely and growing the tree out to infinity, and we can get a lower bound by<br />

only counting nodes in complete levels. Either way, the geometric series is dominated by its<br />

largest term, so T (n) = Θ(n) .<br />
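The linear bound can be observed numerically. The sketch below is an assumption-laden illustration: it rounds the subproblem sizes down and uses the base case T(0) = 0, neither of which is specified in the text. Under those assumptions, induction gives n ≤ T(n) ≤ 10n, where 10n is the sum of the infinite geometric series n + 9n/10 + 81n/100 + ···.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def selection_time(n):
    """T(n) = T(floor(n/5)) + T(floor(7n/10)) + n, with the assumed base case T(0) = 0."""
    if n == 0:
        return 0
    return selection_time(n // 5) + selection_time(7 * n // 10) + n
```

Evaluating `selection_time(n) / n` for growing n shows the ratio staying between 1 and 10.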

T (n) = √ n · T ( √ n) + n<br />

In this case, we have a complete recursion tree, but the degree of the nodes is no longer<br />

constant, so we have to be a bit more careful. It’s not hard to see that the nodes in any<br />

level sum to n, so this is like the third Master case. The depth L satisfies the identity<br />

$n^{2^{-L}} = 2$ (we can’t get all the way down to 1 by taking square roots), so $L = \lg\lg n$ and

T (n) = Θ(n lg lg n) .<br />

T (n) = 2 √ n · T ( √ n) + n<br />

We still have at most lg lg n levels, but now the nodes in level i sum to 2 i n. We have an<br />

increasing geometric series of level sums, like the second Master case, so T (n) is dominated<br />

by the sum over the deepest level: $T(n) = \Theta(2^{\lg\lg n} n) = \Theta(n \log n)$

T (n) = 4 √ n · T ( √ n) + n<br />

Now the nodes in level i sum to 4 i n. Again, we have an increasing geometric series, like the<br />

second Master case, so we only care about the leaves: $T(n) = \Theta(4^{\lg\lg n} n) = \Theta(n \log^2 n)$. Ick!
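The depth $L = \lg\lg n$ used in the last three examples is easy to confirm for inputs of the form $n = 2^{2^k}$, where every square root is an exact integer. The helper name `sqrt_depth` in this Python sketch is made up for illustration.

```python
import math


def sqrt_depth(n):
    """Number of times we can take the square root of n before reaching 2."""
    depth = 0
    while n > 2:
        n = math.isqrt(n)
        depth += 1
    return depth


n = 2 ** (2 ** 5)               # lg n = 32, lg lg n = 5
depth = sqrt_depth(n)
deepest_level_factor = 2 ** depth  # the factor 2^L in the second recurrence
```

Here `depth` is 5 and `deepest_level_factor` is 32 = lg n, which is why the deepest level of $T(n) = 2\sqrt{n}\,T(\sqrt{n}) + n$ sums to about n lg n.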

5 Transforming Recurrences<br />

5.1 An analysis of mergesort: domain transformation<br />

Previously we gave the recurrence for mergesort as T (n) = 2T (n/2) + n, and obtained the solution<br />

T (n) = Θ(n log n) using the Master Theorem (or the recursion tree method if you, like me, can’t<br />

remember the Master Theorem). This is fine if n is a power of two, but for other values of

n, this recurrence is incorrect. When n is odd, then the recurrence calls for us to sort a fractional<br />

number of elements! Worse yet, if n is not a power of two, we will never reach the base case<br />

T (1) = 0.<br />

To get a recurrence that’s valid for all integers n, we need to carefully add ceilings and floors:<br />

T (n) = T (⌈n/2⌉) + T (⌊n/2⌋) + n.<br />

We have almost no hope of getting an exact solution here; the floors and ceilings will eventually<br />

kill us. So instead, let’s just try to get a tight asymptotic upper bound for T (n) using a technique<br />

called domain transformation. A domain transformation rewrites a function T (n) with a difficult<br />

recurrence as a nested function S(f(n)), where f(n) is a simple function and S() has an easier<br />

recurrence.<br />

First we overestimate the time bound, once by pretending that the two subproblem sizes are<br />

equal, and again to eliminate the ceiling:<br />

$$T(n) \le 2T(\lceil n/2 \rceil) + n \le 2T(n/2 + 1) + n.$$


Now we define a new function S(n) = T (n + α), where α is an unknown constant, chosen so that

S(n) satisfies the Master-ready recurrence S(n) ≤ 2S(n/2) + O(n). To figure out the correct value<br />

of α, we compare two versions of the recurrence for the function T (n + α):<br />

S(n) ≤ 2S(n/2) + O(n) =⇒ T (n + α) ≤ 2T (n/2 + α) + O(n)<br />

T (n) ≤ 2T (n/2 + 1) + n =⇒ T (n + α) ≤ 2T ((n + α)/2 + 1) + n + α<br />

For these two recurrences to be equal, we need n/2 + α = (n + α)/2 + 1, which implies that α = 2.<br />

The Master Theorem now tells us that S(n) = O(n log n), so<br />

T (n) = S(n − 2) = O((n − 2) log(n − 2)) = O(n log n).<br />

A similar argument gives a matching lower bound T (n) = Ω(n log n). So T (n) = Θ(n log n) after<br />

all, just as though we had ignored the floors and ceilings from the beginning!<br />

Domain transformations are useful for removing floors, ceilings, and lower order terms from the<br />

arguments of any recurrence that otherwise looks like it ought to fit either the Master Theorem or<br />

the recursion tree method. But now that we know this, we don’t need to bother grinding through<br />

the actual gory details!<br />
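For readers who want to see the effect of the floors and ceilings anyway, here is a short Python sketch (function names are illustrative) that evaluates the exact mergesort recurrence with the base case T(1) = 0 and compares it against n lg n with the logarithm rounded down and up.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def mergesort_time(n):
    """T(n) = T(ceil(n/2)) + T(floor(n/2)) + n, with T(1) = 0."""
    if n <= 1:
        return 0
    return mergesort_time((n + 1) // 2) + mergesort_time(n // 2) + n


def floor_lg(n):
    """floor(lg n) for a positive integer n."""
    return n.bit_length() - 1


def ceil_lg(n):
    """ceil(lg n) for a positive integer n."""
    return (n - 1).bit_length()
```

On every input tried, the exact value is sandwiched between `n * floor_lg(n)` and `n * ceil_lg(n)`, and it equals n lg n exactly when n is a power of two.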

5.2 A less trivial example<br />

There is a data structure in computational geometry called ham-sandwich trees, where the cost<br />

of doing a certain search operation obeys the recurrence T (n) = T (n/2) + T (n/4) + 1. This<br />

doesn’t fit the Master theorem, because the two subproblems have different sizes, and using the<br />

recursion tree method only gives us the loose bounds √ n ≪ T (n) ≪ n.<br />

Domain transformations save the day. If we define the new function t(k) = T (2 k ), we have a<br />

new recurrence<br />

t(k) = t(k − 1) + t(k − 2) + 1<br />

which should immediately remind you of Fibonacci numbers. Sure enough, after a bit of work, the<br />

annihilator method gives us the solution t(k) = Θ(φ k ), where φ = (1 + √ 5)/2 is the golden ratio.<br />

This implies that<br />

$$T(n) = t(\lg n) = \Theta(\phi^{\lg n}) = \Theta(n^{\lg \phi}) \approx \Theta(n^{0.69424}).$$

It’s possible to solve this recurrence without domain transformations and annihilators—in fact,<br />

the inventors of ham-sandwich trees did so—but it’s much more difficult.<br />
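A quick numerical look at the transformed recurrence supports the growth rate. In this Python sketch the base cases t(0) = t(1) = 1 are an assumption made only for illustration (the text does not give them); the asymptotic ratio does not depend on that choice.

```python
import math


def ham_sandwich_steps(k, base=1):
    """t(k) = t(k-1) + t(k-2) + 1, with assumed base cases t(0) = t(1) = base."""
    prev, cur = base, base
    for _ in range(k - 1):
        prev, cur = cur, prev + cur + 1
    return cur if k >= 1 else prev


PHI = (1 + math.sqrt(5)) / 2
EXPONENT = math.log2(PHI)  # the exponent lg(phi) in T(n) = Theta(n^(lg phi))
growth = ham_sandwich_steps(61) / ham_sandwich_steps(60)
```

The ratio `growth` of consecutive terms is numerically indistinguishable from φ, and `EXPONENT` rounds to 0.69424.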

5.3 Secondary recurrences<br />

Consider the recurrence $T(n) = 2T\left(\frac{n}{3} - 1\right) + n$ with the base case $T(1) = 1$. We already know how to use domain transformations to get the tight asymptotic bound $T(n) = \Theta(n)$, but how would we obtain an exact solution?

First we need to figure out how the parameter n changes as we get deeper and deeper into the<br />

recurrence. For this we use a secondary recurrence. We define a sequence $n_i$ so that

$$T(n_i) = 2T(n_{i-1}) + n_i,$$

so $n_i$ is the argument of $T()$ when we are i recursion steps away from the base case $n_0 = 1$. The original recurrence gives us the following secondary recurrence for $n_i$:

$$n_{i-1} = \frac{n_i}{3} - 1 \implies n_i = 3n_{i-1} + 3.$$


The annihilator for this recurrence is $(E - 1)(E - 3)$, so the generic solution is $n_i = \alpha 3^i + \beta$. Plugging in the base cases $n_0 = 1$ and $n_1 = 6$, we get the exact solution

$$n_i = \frac{5}{2} \cdot 3^i - \frac{3}{2}.$$

Notice that our original function T (n) is only well-defined if n = ni for some integer i ≥ 0.<br />

Now to solve the original recurrence, we do a range transformation. If we set $t_i = T(n_i)$, we have the recurrence $t_i = 2t_{i-1} + \frac{5}{2} \cdot 3^i - \frac{3}{2}$, which by now we can solve using the annihilator method. The annihilator of the recurrence is $(E - 2)(E - 3)(E - 1)$, so the generic solution is $\alpha' 3^i + \beta' 2^i + \gamma'$. Plugging in the base cases $t_0 = 1$, $t_1 = 8$, $t_2 = 37$, we get the exact solution

$$t_i = \frac{15}{2} \cdot 3^i - 8 \cdot 2^i + \frac{3}{2}$$

Finally, we need to substitute to get a solution for the original recurrence in terms of n, by inverting the solution of the secondary recurrence. If $n = n_i = \frac{5}{2} \cdot 3^i - \frac{3}{2}$, then (after a little algebra) we have

$$i = \log_3\left(\frac{2}{5}n + \frac{3}{5}\right).$$

Substituting this into the expression for ti, we get our exact, closed-form solution.<br />

$$\begin{aligned}
T(n) &= \frac{15}{2} \cdot 3^i - 8 \cdot 2^i + \frac{3}{2} \\
&= \frac{15}{2} \cdot 3^{\log_3\left(\frac{2}{5}n + \frac{3}{5}\right)} - 8 \cdot 2^{\log_3\left(\frac{2}{5}n + \frac{3}{5}\right)} + \frac{3}{2} \\
&= \frac{15}{2}\left(\frac{2}{5}n + \frac{3}{5}\right) - 8 \cdot \left(\frac{2}{5}n + \frac{3}{5}\right)^{\log_3 2} + \frac{3}{2} \\
&= 3n - 8 \cdot \left(\frac{2}{5}n + \frac{3}{5}\right)^{\log_3 2} + 6
\end{aligned}$$

Isn’t that special? Now you know why we stick to asymptotic bounds for most recurrences.<br />
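The closed form is messy enough that a mechanical check is reassuring. This Python sketch (with illustrative function names) evaluates the original recurrence on the legal arguments $n_i$ and compares the result with both the formula for $t_i$ and the final expression in n. The last comparison uses floating point, so it is only approximate.

```python
import math
from fractions import Fraction


def n_seq(i):
    """n_i = (5/2) * 3^i - 3/2, the i-th legal argument of T."""
    return (5 * 3 ** i - 3) // 2


def T(n):
    """T(n) = 2 T(n/3 - 1) + n with T(1) = 1; n must equal some n_i."""
    if n == 1:
        return 1
    return 2 * T(n // 3 - 1) + n


def t_closed(i):
    """t_i = (15/2) * 3^i - 8 * 2^i + 3/2, computed exactly."""
    return Fraction(15, 2) * 3 ** i - 8 * 2 ** i + Fraction(3, 2)


def T_closed(n):
    """3n - 8 * ((2n + 3)/5)^(log_3 2) + 6, evaluated in floating point."""
    return 3 * n - 8 * ((2 * n + 3) / 5) ** math.log(2, 3) + 6
```

For example, `n_seq(5)` is 606, and both `T(606)` and `t_closed(5)` give 1568.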

6 References<br />

Methods for solving recurrences by annihilators, domain transformations, and secondary recurrences<br />

are nicely outlined in G. Lueker, Some Techniques for Solving Recurrences, ACM Computing<br />

Surveys 12(4):419-436, 1980. The master theorem is presented in sections 4.3 and 4.4 of CLR.<br />

Sections 1–3 and 5 of this handout were written by Ed Reingold and Ari Trachtenberg and<br />

substantially revised by Jeff Erickson. Section 4 is entirely Jeff’s fault.<br />


<strong>CS</strong> <strong>373</strong> Lecture 0: Introduction Fall 2002<br />

0 Introduction (August 29)<br />

0.1 What is an algorithm?<br />

Partly because of his computational skills, Gerbert, in his later years, was made Pope by<br />

Otto the Great, Holy Roman Emperor, and took the name Sylvester II. By this time, his gift<br />

in the art of calculating contributed to the belief, commonly held throughout Europe, that<br />

he had sold his soul to the devil.<br />

— Dominic Olivastro, Ancient Puzzles, 1993<br />

This is a course about algorithms, specifically combinatorial algorithms. An algorithm is a set of<br />

simple, unambiguous, step-by-step instructions for accomplishing a specific task. Note that the<br />

word “computer” doesn’t appear anywhere in this definition; algorithms don’t necessarily have<br />

anything to do with computers! For example, here is an algorithm for singing that annoying song<br />

‘99 Bottles of Beer on the Wall’ for arbitrary values of 99:<br />

BottlesOfBeer(n):
    For i ← n down to 1
        Sing “i bottles of beer on the wall, i bottles of beer,”
        Sing “Take one down, pass it around, i − 1 bottles of beer on the wall.”
    Sing “No bottles of beer on the wall, no bottles of beer,”
    Sing “Go to the store, buy some more, x bottles of beer on the wall.”

Algorithms have been with us since the dawn of civilization. Here is an algorithm, popularized

(but almost certainly not discovered) by Euclid about 2500 years ago, for multiplying or dividing<br />

numbers using a ruler and compass. The Greek geometers represented numbers using line segments<br />

of the right length. In the pseudo-code below, Circle(p, q) represents the circle centered at a point<br />

p and passing through another point q; hopefully the other instructions are obvious.<br />

〈〈Construct the line perpendicular to ℓ and passing through P .〉〉
RightAngle(ℓ, P ):
    Choose a point A ∈ ℓ
    A, B ← Intersect(Circle(P, A), ℓ)
    C, D ← Intersect(Circle(A, B), Circle(B, A))
    return Line(C, D)

〈〈Construct a point Z such that |AZ| = |AC||AD|/|AB|.〉〉
MultiplyOrDivide(A, B, C, D):
    α ← RightAngle(Line(A, C), A)
    E ← Intersect(Circle(A, B), α)
    F ← Intersect(Circle(A, D), α)
    β ← RightAngle(Line(E, C), F )
    γ ← RightAngle(β, F )
    return Intersect(γ, Line(A, C))

Multiplying or dividing using a compass and straight-edge.<br />


This algorithm breaks down the difficult task of multiplication into simple primitive steps:<br />

drawing a line between two points, drawing a circle with a given center and boundary point, and so<br />

on. The primitive steps need not be quite this primitive, but each primitive step must be something<br />

that the person or machine executing the algorithm already knows how to do. Notice in this example<br />

that we have made constructing a right angle a primitive operation in the MultiplyOrDivide<br />

algorithm by writing a subroutine.<br />

As a bad example, consider “Martin’s algorithm”: 1<br />

BecomeAMillionaireAndNeverPayTaxes:
    Get a million dollars.
    Don’t pay taxes.
    If you get caught,
        Say “I forgot.”

Pretty simple, except for that first step; it’s a doozy. A group of billionaire CEOs would consider<br />

this an algorithm, since for them the first step is both unambiguous and trivial. But for the rest<br />

of us poor slobs who don’t have a million dollars handy, Martin’s procedure is too vague to be<br />

considered an algorithm. [On the other hand, this is a perfect example of a reduction—it reduces<br />

the problem of being a millionaire and never paying taxes to the ‘easier’ problem of acquiring a<br />

million dollars. We’ll see reductions over and over again in this class. As hundreds of businessmen<br />

and politicians have demonstrated, if you know how to solve the easier problem, a reduction tells<br />

you how to solve the harder one.]<br />

Although most of the previous examples are algorithms, they’re not the kind of algorithms<br />

that computer scientists are used to thinking about. In this class, we’ll focus (almost!) exclusively<br />

on algorithms that can be reasonably implemented on a computer. In other words, each step in<br />

the algorithm must be something that either is directly supported by your favorite programming<br />

language (arithmetic, assignments, loops, recursion, etc.) or is something that you’ve already<br />

learned how to do in an earlier class (sorting, binary search, depth first search, etc.).<br />

For example, here’s the algorithm that’s actually used to determine the number of congressional<br />

representatives assigned to each state. 2 The input array P [1 .. n] stores the populations of the n<br />

states, and R is the total number of representatives. (Currently, n = 50 and R = 435.)<br />

ApportionCongress(P [1 .. n], R):
    H ← NewMaxHeap
    for i ← 1 to n
        r[i] ← 1
        Insert(H, i, P [i]/√2)
    R ← R − n
    while R > 0
        s ← ExtractMax(H)
        r[s] ← r[s] + 1
        Insert(H, s, P [s]/√(r[s](r[s] + 1)))
        R ← R − 1
    return r[1 .. n]

1 S. Martin, “You Can Be A Millionaire”, Saturday Night Live, January 21, 1978. Reprinted in Comedy Is Not Pretty, Warner Bros. Records, 1979.

2 The congressional apportionment algorithm is described in detail at http://www.census.gov/population/www/censusdata/apportionment/computing.html, and some earlier algorithms are described at http://www.census.gov/population/www/censusdata/apportionment/history.html.


Note that this description assumes that you know how to implement a max-heap and its basic operations<br />

NewMaxHeap, Insert, and ExtractMax. Moreover, the correctness of the algorithm<br />

doesn’t depend at all on how these operations are implemented. 3 The Census Bureau implements<br />

the max-heap as an unsorted array, probably inside an Excel spreadsheet. (You should have learned<br />

a more efficient solution in CS 225.)
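To make the role of the heap concrete, here is a minimal Python sketch of ApportionCongress. It is one possible implementation, not the Census Bureau's: it assumes Python's standard `heapq` module as the priority queue (a min-heap, so priorities are negated), uses 0-based state indices, and the function name `apportion_congress` is made up here.

```python
import heapq
from math import sqrt


def apportion_congress(populations, seats):
    """Assign `seats` representatives to states with the given populations."""
    n = len(populations)
    if seats < n:
        raise ValueError("every state needs at least one representative")
    reps = [1] * n
    # heapq is a min-heap, so store negated priorities to extract the maximum.
    heap = [(-p / sqrt(2), i) for i, p in enumerate(populations)]
    heapq.heapify(heap)
    for _ in range(seats - n):
        _, s = heapq.heappop(heap)
        reps[s] += 1
        priority = populations[s] / sqrt(reps[s] * (reps[s] + 1))
        heapq.heappush(heap, (-priority, s))
    return reps
```

For instance, `apportion_congress([100, 200, 300], 6)` hands the three extra seats to the third, second, and third states in that order, giving `[1, 2, 3]`.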

So what’s a combinatorial algorithm? The distinction is fairly artificial, but basically, this means<br />

something distinct from a numerical algorithm. Numerical algorithms are used to approximate<br />

computation with ideal real numbers on finite precision computers. For example, here’s a numerical<br />

algorithm to compute the square root of a number to a given precision. (This algorithm works<br />

remarkably quickly—every iteration doubles the number of correct digits.)<br />

SquareRoot(x, ε):<br />

s ← 1<br />

while |s − x/s| > ε<br />

s ← (s + x/s)/2<br />

return s<br />

The output of a numerical algorithm is necessarily an approximation to some ideal mathematical<br />

object. Any number that’s close enough to the ideal answer is a correct answer. Combinatorial

algorithms, on the other hand, manipulate discrete objects like arrays, lists, trees, and graphs that<br />

can be represented exactly on a digital computer.<br />
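For comparison with the pseudocode, the SquareRoot iteration above translates almost line for line into Python. This sketch assumes x > 0 and uses ordinary floating-point numbers, so the precision ε cannot usefully be smaller than the machine precision.

```python
def square_root(x, eps):
    """Approximate the square root of x > 0 by repeatedly averaging s and x/s."""
    s = 1.0
    while abs(s - x / s) > eps:
        s = (s + x / s) / 2
    return s
```

Calling `square_root(2, 1e-12)` returns a value that agrees with the true square root of 2 to roughly twelve digits after only a handful of iterations.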

0.2 Writing down algorithms<br />

Algorithms are not programs; they should never be specified in a particular programming language.

The whole point of this course is to develop computational techniques that can be used in any programming<br />

language. 4 The idiosyncratic syntactic details of C, Java, Visual Basic, ML, Smalltalk,<br />

Intercal, or Brainfunk 5 are of absolutely no importance in algorithm design, and focusing on them<br />

will only distract you from what’s really going on. What we really want is closer to what you’d<br />

write in the comments of a real program than the code itself.<br />

On the other hand, a plain English prose description is usually not a good idea either. Algorithms have a lot of structure (especially conditionals, loops, and recursion) that is far too easily

hidden by unstructured prose. Like any language spoken by humans, English is full of ambiguities,<br />

subtleties, and shades of meaning, but algorithms must be described as accurately as possible.<br />

Finally and more seriously, many people have a tendency to describe loops informally: “Do this<br />

first, then do this second, and so on.” As anyone who has taken one of those ‘what comes next in<br />

this sequence?’ tests already knows, specifying what happens in the first couple of iterations of a<br />

loop doesn’t say much about what happens later on. 6 Phrases like ‘and so on’ or ‘do X over and<br />

over’ or ‘et cetera’ are a good indication that the algorithm should have been described in terms of<br />

3 However, as several students pointed out, the algorithm’s correctness does depend on the populations of the states being not too different. If Congress had only 384 representatives, then the 2000 Census data would have given Rhode Island only one representative! If state populations continue to change at their current rates, the United States will have a serious constitutional crisis right after the 2030 Census. I can’t wait!

4<br />

See http://www.ionet.net/˜timtroyr/funhouse/beer.html for implementations of the BottlesOfBeer algorithm<br />

in over 200 different programming languages.<br />

5<br />

Pardon my thinly bowdlerized Anglo-Saxon. Brainfork is the well-deserved name of a programming language<br />

invented by Urban Mueller in 1993. Brainflick programs are written entirely using the punctuation characters<br />

+-,.[], each representing a different operation (roughly: shift left, shift right, increment, decrement, input, output,<br />

begin loop, end loop). See http://www.catseye.mb.ca/esoteric/bf/ for a complete definition of Brainfooey, sample<br />

Brainfudge programs, a Brainfiretruck interpreter (written in just 230 characters of C), and related shit.<br />

6<br />

See http://www.research.att.com/˜njas/sequences/.<br />


loops or recursion, and the description should have specified what happens in a generic iteration<br />

of the loop. 7<br />

The best way to write down an algorithm is using pseudocode. Pseudocode uses the structure<br />

of formal programming languages and mathematics to break the algorithm into one-sentence steps,<br />

but those sentences can be written using mathematics, pure English, or some mixture of the two.<br />

Exactly how to structure the pseudocode is a personal choice, but here are the basic rules I follow:<br />

• Use standard imperative programming keywords (if/then/else, while, for, repeat/until, case,<br />

return) and notation (array[index], pointer→field, function(args), etc.)<br />

• The block structure should be visible from across the room. Indent everything carefully and<br />

consistently. Don’t use syntactic sugar (like C/C++/Java braces or Pascal/Algol begin/end<br />

tags) unless the pseudocode is absolutely unreadable without it.<br />

• Don’t typeset keywords in a different font or style. Changing type style emphasizes the<br />

keywords, making the reader think the syntactic sugar is actually important—it isn’t!<br />

• Each statement should fit on one line, each line should contain only one statement. (The only<br />

exception is extremely short and similar statements like i ← i + 1; j ← j − 1; k ← 0.)<br />

• Put each structuring statement (for, while, if) on its own line. The order of nested loops<br />

matters a great deal; make it absolutely obvious.<br />

• Use short but mnemonic algorithm and variable names. Absolutely never use pronouns!<br />

A good description of an algorithm reveals the internal structure, hides irrelevant details, and<br />

can be implemented easily by any competent programmer in any programming language, even if<br />

they don’t understand why the algorithm works. Good pseudocode, like good code, makes the<br />

algorithm much easier to understand and analyze; it also makes mistakes much easier to spot. The<br />

algorithm descriptions in the textbooks and lecture notes are good examples of what we want to<br />

see on your homeworks and exams.

0.3 Analyzing algorithms<br />

It’s not enough just to write down an algorithm and say ‘Behold!’ We also need to convince<br />

ourselves (and our graders) that the algorithm does what it’s supposed to do, and that it does it<br />

quickly.<br />

Correctness: In the real world, it is often acceptable for programs to behave correctly most of<br />

the time, on all ‘reasonable’ inputs. Not in this class; our standards are higher 8 . We need to prove<br />

that our algorithms are correct on all possible inputs. Sometimes this is fairly obvious, especially<br />

for algorithms you’ve seen in earlier courses. But many of the algorithms we will discuss in this<br />

course will require some extra work to prove. Correctness proofs almost always involve induction.<br />

We like induction. Induction is our friend. 9<br />

Before we can formally prove that our algorithm does what we want it to, we have to formally<br />

state what we want the algorithm to do! Usually problems are given to us in real-world terms,<br />

7<br />

Similarly, the appearance of the phrase ‘and so on’ in a proof is a good indication that the proof should have<br />

been done by induction!<br />

8<br />

or at least different<br />

9<br />

If induction is not your friend, you will have a hard time in this course.<br />


not with formal mathematical descriptions. It’s up to us, the algorithm designers, to restate the

problem in terms of mathematical objects that we can prove things about: numbers, arrays, lists,<br />

graphs, trees, and so on. We also need to determine if the problem statement makes any hidden<br />

assumptions, and state those assumptions explicitly. (For example, in the song “n Bottles of Beer<br />

on the Wall”, n is always a positive integer.) Restating the problem formally is not only required<br />

for proofs; it is also one of the best ways to really understand what the problem is asking for. The

hardest part of solving a problem is figuring out the right way to ask the question!<br />

An important distinction to keep in mind is the distinction between a problem and an algorithm.<br />

A problem is a task to perform, like “Compute the square root of x” or “Sort these n numbers”<br />

or “Keep n algorithms students awake for t minutes”. An algorithm is a set of instructions that<br />

you follow if you want to execute this task. The same problem may have hundreds of different<br />

algorithms.<br />

Running time: The usual way of distinguishing between different algorithms for the same problem<br />

is by how fast they run. Ideally, we want the fastest possible algorithm for our problem. In<br />

the real world, it is often acceptable for programs to run efficiently most of the time, on all ‘reasonable’<br />

inputs. Not in this class; our standards are different. We require algorithms that always<br />

run efficiently, even in the worst case.<br />

But how do we measure running time? As a specific example, how long does it take to sing the<br />

song BottlesOfBeer(n)? This is obviously a function of the input value n, but it also depends<br />

on how quickly you can sing. Some singers might take ten seconds to sing a verse; others might<br />

take twenty. Technology widens the possibilities even further. Dictating the song over a telegraph<br />

using Morse code might take a full minute per verse. Ripping an mp3 over the Web might take a<br />

tenth of a second per verse. Duplicating the mp3 in a computer’s main memory might take only a<br />

few microseconds per verse.<br />

Nevertheless, what’s important here is how the singing time changes as n grows. Singing<br />

BottlesOfBeer(2n) takes about twice as long as singing BottlesOfBeer(n), no matter what<br />

technology is being used. This is reflected in the asymptotic singing time Θ(n). We can measure<br />

time by counting how many times the algorithm executes a certain instruction or reaches a certain<br />

milestone in the ‘code’. For example, we might notice that the word ‘beer’ is sung three times in<br />

every verse of BottlesOfBeer, so the number of times you sing ‘beer’ is a good indication of<br />

the total singing time. For this question, we can give an exact answer: BottlesOfBeer(n) uses<br />

exactly 3n + 3 beers.<br />
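
The count of 3n + 3 can be checked mechanically. The sketch below is only an illustration: it assumes the usual lyrics (two lines per verse, plus a closing "no bottles" verse), and the function names `bottles_of_beer` and `count_beers` are made up for this example.

```python
def bottles_of_beer(n):
    """Return the lines of the song as a list (lyrics assumed, see lead-in)."""
    lines = []
    for i in range(n, 0, -1):
        lines.append(f"{i} bottles of beer on the wall, {i} bottles of beer,")
        lines.append(f"Take one down, pass it around, {i - 1} bottles of beer on the wall.")
    # Closing verse: three more beers.
    lines.append("No bottles of beer on the wall, no bottles of beer,")
    lines.append(f"Go to the store, buy some more, {n} bottles of beer on the wall.")
    return lines


def count_beers(n):
    """Count how many times the word 'beer' is sung."""
    return sum(line.count("beer") for line in bottles_of_beer(n))


if __name__ == "__main__":
    for n in (1, 10, 99):
        print(n, count_beers(n), 3 * n + 3)
```

Counting one milestone word stands in for wall-clock time: doubling n roughly doubles the count, whatever the singer's speed.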

There are plenty of other songs that have non-trivial singing time. This one is probably familiar<br />

to most English-speakers:<br />

NDaysOfChristmas(gifts[2 .. n]):<br />
    for i ← 1 to n<br />
        Sing “On the ith day of Christmas, my true love gave to me”<br />
        for j ← i down to 2<br />
            Sing “j gifts[j]”<br />
        if i > 1<br />
            Sing “and”<br />
        Sing “a partridge in a pear tree.”<br />

The input to NDaysOfChristmas is a list of n − 1 gifts. It’s quite easy to show that the<br />

singing time is $\Theta(n^2)$; in particular, the singer mentions the name of a gift $\sum_{i=1}^{n} i = n(n+1)/2$<br />

times (counting the partridge in the pear tree). It’s also easy to see that during the first n days<br />

of Christmas, my true love gave to me exactly $\sum_{i=1}^{n} \sum_{j=1}^{i} j = n(n+1)(n+2)/6 = \Theta(n^3)$ gifts.<br />
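
Both closed forms are easy to confirm by brute force. The following sketch mirrors the loop structure of NDaysOfChristmas but counts instead of singing; the function name `christmas_counts` is an assumption made for this illustration.

```python
def christmas_counts(n):
    """Return (gift mentions, total gifts given) over the first n days."""
    mentions = 0
    total_gifts = 0
    for i in range(1, n + 1):
        for j in range(i, 1, -1):
            mentions += 1      # Sing "j gifts[j]"
            total_gifts += j   # ... which is j actual gifts
        mentions += 1          # the partridge in a pear tree
        total_gifts += 1
    return mentions, total_gifts


if __name__ == "__main__":
    n = 12
    mentions, total = christmas_counts(n)
    print(mentions, n * (n + 1) // 2)            # quadratic: gift mentions
    print(total, n * (n + 1) * (n + 2) // 6)     # cubic: gifts received
```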



Other songs that take quadratic time to sing are “Old MacDonald”, “There Was an Old Lady Who<br />

Swallowed a Fly”, “Green Grow the Rushes O”, “The Barley Mow” (which we’ll see in Homework 1),<br />

“Echad Mi Yode’a” (“Who knows one?”), “Alouette”, “Ist das nicht ein Schnitzelbank?”,¹⁰ etc.<br />

For details, consult your nearest pre-schooler.<br />

For a slightly more complicated example, consider the algorithm ApportionCongress. Here<br />

the running time obviously depends on the implementation of the max-heap operations, but we<br />

can certainly bound the running time as O(N + RI + (R − n)E), where N is the time for a<br />

NewMaxHeap, I is the time for an Insert, and E is the time for an ExtractMax. Under the<br />

reasonable assumption that R > 2n (on average, each state gets at least two representatives), this<br />

simplifies to O(N + R(I + E)). The Census Bureau uses an unsorted array of size n, for which<br />

N = I = Θ(1) (since we know a priori how big the array is), and E = Θ(n), so the overall running<br />

time is Θ(Rn). This is fine for the federal government, but if we want to be more efficient, we can<br />

implement the heap as a perfectly balanced n-node binary tree (or a heap-ordered array). In this<br />

case, we have N = Θ(1) and I = E = O(log n), so the overall running time is Θ(R log n).<br />
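
To make the operation counts concrete, here is a hedged sketch of a heap-based apportionment loop. The ApportionCongress pseudocode is not reproduced in this section, so the details below are assumptions: every state starts with one seat, and the priority is the Huntington-Hill value Pop/√(r(r+1)) for a state currently holding r seats. The name `apportion` and the operation counters are made up for this example; Python's `heapq` is a min-heap, so priorities are negated.

```python
import heapq
from math import sqrt


def apportion(pop, R):
    """Assign R seats to len(pop) states; also count heap operations."""
    n = len(pop)
    reps = [1] * n                      # assumed: every state starts with one seat
    heap = []                           # NewMaxHeap
    inserts = extracts = 0
    for s in range(n):
        heapq.heappush(heap, (-pop[s] / sqrt(2), s))
        inserts += 1
    for _ in range(R - n):
        _, s = heapq.heappop(heap)      # ExtractMax
        extracts += 1
        reps[s] += 1
        priority = pop[s] / sqrt(reps[s] * (reps[s] + 1))
        heapq.heappush(heap, (-priority, s))
        inserts += 1
    return reps, inserts, extracts


if __name__ == "__main__":
    reps, inserts, extracts = apportion([100, 200, 300, 400], 10)
    print(reps, inserts, extracts)
```

The counters show where the bound O(N + RI + (R − n)E) comes from: one heap creation, R insertions, and R − n extractions. With a binary heap each insertion and extraction costs O(log n).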

Incidentally, there is a faster algorithm for apportioning Congress. I’ll give extra credit to the<br />

first student who can find the faster algorithm, analyze its running time, and prove that it always<br />

gives exactly the same results as ApportionCongress.<br />

Sometimes we are also interested in other computational resources: space, disk swaps, concurrency,<br />

and so forth. We use the same techniques to analyze those resources as we use for running<br />

time.<br />

0.4 Why are we here, anyway?<br />

We will try to teach you two things in CS 373: how to think about algorithms and how to talk<br />

about algorithms. Along the way, you’ll pick up a bunch of algorithmic facts—mergesort runs in<br />

Θ(n log n) time; the amortized time to search in a splay tree is O(log n); the traveling salesman<br />

problem is NP-hard—but these aren’t the point of the course. You can always look up facts in a<br />

textbook, provided you have the intuition to know what to look for. That’s why we let you bring<br />

cheat sheets to the exams; we don’t want you wasting your study time trying to memorize all the<br />

facts you’ve seen. You’ll also practice a lot of algorithm design and analysis skills—finding useful<br />

(counter)examples, developing induction proofs, solving recurrences, using big-Oh notation, using<br />

probability, and so on. These skills are useful, but they aren’t really the point of the course either.<br />

At this point in your educational career, you should be able to pick up those skills on your own,<br />

once you know what you’re trying to do.<br />

The main goal of this course is to help you develop algorithmic intuition. How do various<br />

algorithms really work? When you see a problem for the first time, how should you attack it?<br />

How do you tell which techniques will work at all, and which ones will work best? How do you<br />

judge whether one algorithm is better than another? How do you tell if you have the best possible<br />

solution?<br />

The flip side of this goal is developing algorithmic language. It’s not enough just to understand<br />

how to solve a problem; you also have to be able to explain your solution to somebody else. I don’t<br />

mean just how to turn your algorithms into code—despite what many students (and inexperienced<br />

programmers) think, ‘somebody else’ is not just a computer. Nobody programs alone. Perhaps<br />

more importantly in the short term, explaining something to somebody else is one of the best ways<br />

of clarifying your own understanding.<br />

¹⁰ Wakko: Ist das nicht Otto von Schnitzelpusskrankengescheitmeyer?<br />

Yakko and Dot: Ja, das ist Otto von Schnitzelpusskrankengescheitmeyer!!<br />


You’ll also get a chance to develop brand new algorithms and algorithmic techniques on your<br />

own. Unfortunately, this is not the sort of thing that we can really teach you. All we can really do<br />

is lay out the tools, encourage you to practice with them, and give you feedback.<br />

Good algorithms are extremely useful, but they can also be elegant, surprising, deep, even<br />

beautiful. But most importantly, algorithms are fun!! Hopefully this class will inspire at least a<br />

few of you to come play!<br />


CS 373 Lecture 1: Divide and Conquer Fall 2002<br />

1 Divide and Conquer (September 3)<br />

1.1 MergeSort<br />

The control of a large force is the same principle as the control of a few men:<br />

it is merely a question of dividing up their numbers.<br />

— Sun Zi, The Art of War (c. 400 B.C.E.), translated by Lionel Giles (1910)<br />

Mergesort is one of the earliest algorithms proposed for sorting. According to Knuth, it was<br />

suggested by John von Neumann as early as 1945.<br />

1. Divide the array A[1 .. n] into two subarrays A[1 .. m] and A[m + 1 .. n], where m = ⌊n/2⌋.<br />

2. Recursively mergesort the subarrays A[1 .. m] and A[m + 1 .. n].<br />

3. Merge the newly-sorted subarrays A[1 .. m] and A[m + 1 .. n] into a single sorted list.<br />

Input: S O R T I N G E X A M P L<br />

Divide: S O R T I N | G E X A M P L<br />

Recurse: I N O R S T | A E G L M P X<br />

Merge: A E G I L M N O P R S T X<br />

A Mergesort example.<br />

The first step is completely trivial; we only need to compute the median index m. The second<br />

step is also trivial, thanks to our friend the recursion fairy. All the real work is done in the final<br />

step; the two sorted subarrays A[1 .. m] and A[m + 1 .. n] can be merged using a simple linear-time<br />

algorithm. Here’s a complete specification of the Mergesort algorithm; for simplicity, we separate<br />

out the merge step as a subroutine.<br />

MergeSort(A[1 .. n]):<br />
    if (n > 1)<br />
        m ← ⌊n/2⌋<br />
        MergeSort(A[1 .. m])<br />
        MergeSort(A[m + 1 .. n])<br />
        Merge(A[1 .. n], m)<br />

Merge(A[1 .. n], m):<br />
    i ← 1; j ← m + 1<br />
    for k ← 1 to n<br />
        if j > n<br />
            B[k] ← A[i]; i ← i + 1<br />
        else if i > m<br />
            B[k] ← A[j]; j ← j + 1<br />
        else if A[i] < A[j]<br />
            B[k] ← A[i]; i ← i + 1<br />
        else<br />
            B[k] ← A[j]; j ← j + 1<br />
    for k ← 1 to n<br />
        A[k] ← B[k]<br />
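
For readers who want to run the algorithm, here is one possible Python rendering of the pseudocode above. It is a sketch, not the only reasonable translation: it uses 0-based half-open ranges `a[lo:hi]` instead of A[1 .. n], passes index bounds rather than subarrays, and the names `merge_sort` and `merge` are chosen for this example.

```python
def merge(a, lo, mid, hi):
    """Merge the sorted runs a[lo:mid] and a[mid:hi] back into a[lo:hi]."""
    b = []                       # plays the role of the scratch array B
    i, j = lo, mid
    for _ in range(hi - lo):
        if j >= hi:              # right run exhausted
            b.append(a[i]); i += 1
        elif i >= mid:           # left run exhausted
            b.append(a[j]); j += 1
        elif a[i] < a[j]:
            b.append(a[i]); i += 1
        else:
            b.append(a[j]); j += 1
    a[lo:hi] = b                 # copy B back into A


def merge_sort(a, lo=0, hi=None):
    """Sort a[lo:hi] in place."""
    if hi is None:
        hi = len(a)
    if hi - lo > 1:
        mid = lo + (hi - lo) // 2    # m = floor(n/2), relative to lo
        merge_sort(a, lo, mid)
        merge_sort(a, mid, hi)
        merge(a, lo, mid, hi)


if __name__ == "__main__":
    letters = list("SORTINGEXAMPL")
    merge_sort(letters)
    print(" ".join(letters))
```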

To prove that the algorithm is correct, we use our old friend induction. We can prove that<br />

Merge is correct using induction on the total size of the two subarrays A[i .. m] and A[j .. n] left to<br />

be merged into B[k .. n]. The base case, where at least one subarray is empty, is straightforward; the<br />

algorithm just copies it into B. Otherwise, the smallest remaining element is either A[i] or A[j],<br />

since both subarrays are sorted, so B[k] is assigned correctly. The remaining subarrays—either<br />


A[i + 1 .. m] and A[j .. n], or A[i .. m] and A[j + 1 .. n]—are merged correctly into B[k + 1 .. n] by the<br />

inductive hypothesis.¹ This completes the proof.<br />

Now we can prove MergeSort correct by another round of straightforward induction.² The<br />

base cases n ≤ 1 are trivial. Otherwise, by the inductive hypothesis, the two smaller subarrays<br />

A[1 .. m] and A[m+1 .. n] are sorted correctly, and by our earlier argument, merged into the correct<br />

sorted output.<br />

What’s the running time? Since we have a recursive algorithm, we’re going to get a recurrence<br />

of some sort. Merge clearly takes linear time, since it’s a simple for-loop with constant work per<br />

iteration. We get the following recurrence for MergeSort:<br />

$T(1) = O(1), \qquad T(n) = T(\lceil n/2 \rceil) + T(\lfloor n/2 \rfloor) + O(n).$<br />
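
A quick numerical experiment gives a feel for how this recurrence grows. The sketch below has to pick concrete constants, which the O(·) notation leaves unspecified: it assumes T(1) = 1 and a merge cost of exactly n. Under those assumptions the ratio T(n) / (n log₂ n) stays bounded as n grows.

```python
from functools import lru_cache
from math import log2


@lru_cache(maxsize=None)
def T(n):
    """Mergesort recurrence with assumed constants: T(1) = 1, merge cost n."""
    if n <= 1:
        return 1
    return T((n + 1) // 2) + T(n // 2) + n   # ceil(n/2) and floor(n/2)


if __name__ == "__main__":
    for n in (10, 100, 1000, 10**6):
        print(n, T(n), round(T(n) / (n * log2(n)), 3))
```

This is evidence rather than proof; the bound still has to be established by induction or by solving the recurrence.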

1.2 Aside: Domain Transformations<br />

Except for the floor and ceiling, this recurrence falls into case (b) of the Master Theorem [CLR,<br />

§4.3]. If we simply ignore the floor and ceiling, the Master Theorem suggests the solution T (n) =<br />

O(n log n). We can easily check that this answer is correct using induction, but there is a simple<br />

method for solving recurrences like this directly, called domain transformation.<br />

First we overestimate the time bound, once by pretending that the two subproblem sizes are<br />

equal, and again to eliminate the ceiling:<br />

$T(n) \le 2T(\lceil n/2 \rceil) + O(n) \le 2T(n/2 + 1) + O(n).$<br />