Robot Vision.pdf



Robot Vision

Edited by Aleš Ude

In-Tech
intechweb.org

Published by In-Teh
In-Teh, Olajnica 19/2, 32000 Vukovar, Croatia

Abstracting and non-profit use of the material is permitted with credit to the source. Statements and opinions expressed in the chapters are those of the individual contributors and not necessarily those of the editors or publisher. No responsibility is accepted for the accuracy of information contained in the published articles. The publisher assumes no responsibility or liability for any damage or injury to persons or property arising out of the use of any materials, instructions, methods or ideas contained inside. After this work has been published by In-Teh, authors have the right to republish it, in whole or in part, in any publication of which they are an author or editor, and to make other personal use of the work.

© 2010 In-teh<br />

www.intechweb.org<br />

Additional copies can be obtained from:<br />

publication@intechweb.org<br />

First published March 2010<br />

Printed in India<br />

Technical Editor: Martina Peric<br />

Cover designed by Dino Smrekar<br />

<strong>Robot</strong> <strong>Vision</strong>,<br />

Edited by Aleš Ude<br />

p. cm.<br />

ISBN 978-953-307-077-3


Preface<br />

The purpose of robot vision is to enable robots to perceive the external world in order to<br />

perform a large range of tasks such as navigation, visual servoing for object tracking and<br />

manipulation, object recognition and categorization, surveillance, and higher-level decision-making.<br />

Among different perceptual modalities, vision is arguably the most important one. It<br />

is therefore an essential building block of a cognitive robot. Most of the initial research in robot<br />

vision has been industrially oriented and while this research is still ongoing, current works<br />

are more focused on enabling the robots to autonomously operate in natural environments<br />

that cannot be fully modeled and controlled. A long-term goal is to open new applications<br />

to robotics such as robotic home assistants, which can only come into existence if the robots<br />

are equipped with significant cognitive capabilities. In pursuit of this goal, current research<br />

in robot vision benefits from studies in human vision, which is still by far the most powerful<br />

existing vision system. It also emphasizes the role of active vision, which in case of humanoid<br />

robots does not limit itself to active eyes only any more, but rather employs the whole<br />

body of the humanoid robot to support visual perception. By combining these paradigms<br />

with modern advances in computer vision, especially with many of the recently developed<br />

statistical approaches, powerful new robot vision systems can be built.<br />

This book presents a snapshot of the wide variety of work in robot vision that is currently<br />

going on in different parts of the world.<br />

March 2010<br />

Aleš Ude

VII<br />

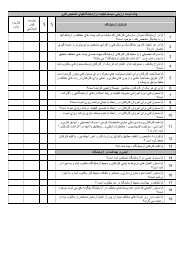

Contents<br />

Preface

1. Design and fabrication of soft zoom lens applied in robot vision
Wei-Cheng Lin, Chao-Chang A. Chen, Kuo-Cheng Huang and Yi-Shin Wang

2. Methods for Reliable Robot Vision with a Dioptric System
E. Martínez and A.P. del Pobil

3. An Approach for Optimal Design of Robot Vision Systems
Kanglin Xu

4. Visual Motion Analysis for 3D Robot Navigation in Dynamic Environments
Chunrong Yuan and Hanspeter A. Mallot

5. A Visual Navigation Strategy Based on Inverse Perspective Transformation
Francisco Bonin-Font, Alberto Ortiz and Gabriel Oliver

6. Vision-based Navigation Using an Associative Memory
Mateus Mendes

7. Vision Based Robotic Navigation: Application to Orthopedic Surgery
P. Gamage, S. Q. Xie, P. Delmas and W. L. Xu

8. Navigation and Control of Mobile Robot Using Sensor Fusion
Yong Liu

9. Visual Navigation for Mobile Robots
Nils Axel Andersen, Jens Christian Andersen, Enis Bayramoğlu and Ole Ravn

10. Interactive object learning and recognition with multiclass support vector machines
Aleš Ude

11. Recognizing Human Gait Types
Preben Fihl and Thomas B. Moeslund

12. Environment Recognition System for Biped Robot Walking Using Vision Based Sensor Fusion
Tae-Koo Kang, Hee-Jun Song and Gwi-Tae Park

13. Non Contact 2D and 3D Shape Recognition by Vision System for Robotic Prehension
Bikash Bepari, Ranjit Ray and Subhasis Bhaumik

14. Image Stabilization in Active Robot Vision
Angelos Amanatiadis, Antonios Gasteratos, Stelios Papadakis and Vassilis Kaburlasos

15. Real-time Stereo Vision Applications
Christos Georgoulas, Georgios Ch. Sirakoulis and Ioannis Andreadis

16. Robot vision using 3D TOF systems
Stephan Hussmann and Torsten Edeler

17. Calibration of Non-SVP Hyperbolic Catadioptric Robotic Vision Systems
Bernardo Cunha, José Azevedo and Nuno Lau

18. Computational Modeling, Visualization, and Control of 2-D and 3-D Grasping under Rolling Contacts
Suguru Arimoto, Morio Yoshida and Masahiro Sekimoto

19. Towards Real Time Data Reduction and Feature Abstraction for Robotics Vision
Rafael B. Gomes, Renato Q. Gardiman, Luiz E. C. Leite, Bruno M. Carvalho and Luiz M. G. Gonçalves

20. LSCIC Pre coder for Image and Video Compression
Muhammad Kamran, Shi Feng and Wang YiZhuo

21. The robotic visual information processing system based on wavelet transformation and photoelectric hybrid
DAI Shi-jie and HUANG-He

22. Direct visual servoing of planar manipulators using moments of planar targets
Eusebio Bugarin and Rafael Kelly

23. Industrial robot manipulator guarding using artificial vision
Fevery Brecht, Wyns Bart, Boullart Luc, Llata García José Ramón and Torre Ferrero Carlos

24. Remote Robot Vision Control of a Flexible Manufacturing Cell
Silvia Anton, Florin Daniel Anton and Theodor Borangiu

25. Network-based Vision Guidance of Robot for Remote Quality Control
Yongjin (James) Kwon, Richard Chiou, Bill Tseng and Teresa Wu

26. Robot Vision in Industrial Assembly and Quality Control Processes
Niko Herakovic

27. Testing Stereoscopic Vision in Robot Teleguide
Salvatore Livatino, Giovanni Muscato and Christina Koeffel

28. Embedded System for Biometric Identification
Ahmad Nasir Che Rosli

29. Multi-Task Active-Vision in Robotics
J. Cabrera, D. Hernandez, A. Dominguez and E. Fernandez

30. An Approach to Perception Enhancement in Robotized Surgery using Computer Vision
Agustín A. Navarro, Albert Hernansanz, Joan Aranda and Alícia Casals

1. Design and fabrication of soft zoom lens applied in robot vision

Wei-Cheng Lin (a), Chao-Chang A. Chen (b), Kuo-Cheng Huang (a) and Yi-Shin Wang (b)
(a) Instrument Technology Research Center, National Applied Research Laboratories, Taiwan
(b) National Taiwan University of Science and Technology, Taiwan

1. Introduction<br />

A traditional zoom lens uses mechanical motion to precisely adjust the separations between individual lenses or groups of lenses; it is therefore complicated and requires multiple optical elements. Conventionally, zoom lenses are divided into optical zoom and digital zoom. Because of the demands placed on the opto-mechanical elements, the zoom ability of a lens system has been dominated by optical and mechanical design technique. In recent years, zoom lenses have been applied in compact imaging devices, which are widely used in notebooks, PDAs, mobile phones, etc. Miniaturizing the zoom lens while keeping excellent zoom ability and high imaging quality has become a key subject of efficient zoom lens design. In the past decade, several novel zoom lens technologies have been presented; they can be classified into the following four types:

(1) Electro-wetting liquid lens:

The electro-wetting liquid lens is the earliest liquid lens. It contains two immiscible liquids of equal density but different refractive index: one is an electrically conductive liquid (water) and the other is a drop of oil (silicone oil), both contained in a short tube with transparent end caps. When a voltage is applied, the electrostatic effect changes the shape of the interface between oil and water, which alters the focal length. The best known electro-wetting liquid lens is the Varioptic liquid lens.

(2) MEMS process liquid lens:

This type of liquid lens usually contains a micro channel, a liquid chamber and a PDMS membrane, all of which can be made in a MEMS process; a micro-pump or actuator then pumps liquid into or out of the liquid chamber. In this way the liquid lens can take a plano-concave or plano-convex shape, or even a bi-concave, bi-convex, meniscus convex or meniscus concave shape. More than one such liquid lens can also be combined to enlarge the field of view (FOV) and the zoom ratio of the system.

(3) Liquid crystal lens:

Liquid crystals are excellent electro-optic materials with electrical and optical anisotropies. Their optical properties can easily be varied by an external electric field. When a voltage is applied, the liquid crystal molecules are reoriented by the inhomogeneous electric field, which generates a centro-symmetric refractive index distribution. The focal length then changes with the applied voltage.

(4) Solid tunable lens:

One non-liquid tunable lens is made of PDMS and is actuated by heating a silicon conducting ring: as the temperature rises, the PDMS lens is deformed by the mismatch in thermal expansion coefficient and stiffness between PDMS and silicon. Alternatively, the zoom lens can be fabricated from soft or flexible materials such as PDMS. The shape of a soft lens can be changed by an external force, either a mechanical force such as a contact force or a magnetic force; the focal length is therefore altered without any fluid pump.

All of the lenses presented above share a common characteristic: they perform optical zoom by changing the lens shape, without any transmission mechanism. The earliest deformable lens can be traced to the theory of "adaptive optics", a technology used to improve the performance of optical systems and most often used in astronomy to reduce the effects of atmospheric distortion. Adaptive optics usually uses a MEMS process to fabricate flexible membranes and combines them with an actuator to give the lens tilt and shift motion. However, there is not yet a PDMS zoom lens whose curvature is controlled by fluid pressure. In this chapter, PDMS is used to fabricate the lens of a soft zoom lens (SZL), and the effective focal length (EFL) of the lens system is changed using pneumatic pressure.

2. PDMS properties<br />

PDMS (Dow Corning SYLGARD 184) is used because of its good light transmittance. It is a two-part elastomer (base and curing agent) that is liquid at room temperature and cures after the base and curing agent are mixed, taking 24 hours at 25 °C. Heating shortens the curing time: 1 hour at 100 °C, or only 15 minutes at 150 °C. PDMS also has a low glass transition temperature (-123 °C) and good chemical and thermal stability, and it retains its flexibility and elasticity from -50 to 200 °C. Since different curing parameters produce a different Young's Modulus and refractive index, this section introduces the material properties of PDMS, including Young's Modulus, refractive index (n) and Abbe number (ν), under different curing parameters.

2.1 PDMS mechanical property (Young's Modulus)<br />

Young's Modulus is the key mechanical property in the analysis of the maximum displacement and deformed shape of the PDMS lens. A tensile test is used to find the relationship between the curing parameters and Young's Modulus; the main curing parameters are curing time, curing temperature and mixing ratio.

The geometry of the test sample is defined by the standard ASTM D412 98a. The mixing ratios are 10:1 and 15:1, and the samples are cured at 100 °C for 30, 45 and 60 minutes and at 150 °C for 15, 20 and 25 minutes. As a result, at the same mixing ratio, higher curing temperature and long


Fig. 1. Components of the soft zoom lens system.<br />


3.2 SZL system<br />

The SZL system is assembled in the steps shown in Fig. 2: (1) the PDMS lens is put into the barrel, (2) the spacer, which fixes the distance between the PDMS lens and the BK7 lens, is packed, (3) the O-ring is fixed to prevent air from escaping, (4) the BK7 lens is loaded and mounted with the retainer, and (5) a BK7 protective lens is fitted at both ends of the SZL. Finally, the SZL system is completed by combining the SZL unit with the pneumatic control unit, which consists of a pump (NITTO Inc.), a regulator, a pressure gauge and a switch.

Fig. 2. Flow chart of SZL assembly process.

Fig. 3. Soft zoom lens system containing the pneumatic supply device for the control unit.

Fig. 4 shows the zoom process of the SZL system under various applied pressures. The SZL system uses pneumatic pressure to change the shape and curvature of the lens. The gas is supplied by the NITTO pump, with a maximum pressure of 0.03 MPa. The regulator and pressure gauge control the magnitude of the applied pressure, and there is a switch at each end of the SZL: one is the input valve and the other is the output valve.

Fig. 4. Zoom process of the SZL during the pneumatic pressure applied.
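The link between the pressure-induced curvature change and the focal length can be illustrated with a rough thin-lens estimate. The sketch below is not the authors' optical model: it assumes that the deformed PDMS surface is a spherical cap, that the lens behaves as a thin plano-convex lens in air, and it uses an assumed nominal refractive index (`N_PDMS`) and a hypothetical semi-aperture (`SEMI_APERTURE_MM`). It only shows the trend that a larger central sag gives a smaller radius of curvature and hence a shorter focal length.

```python
# Thin-lens sketch: how the sag of a pressurised PDMS surface maps to focal length.
# Assumptions (not from the chapter): spherical-cap deformation, thin plano-convex
# lens in air, nominal refractive index and a hypothetical 5 mm semi-aperture.

N_PDMS = 1.41            # assumed nominal refractive index of PDMS
SEMI_APERTURE_MM = 5.0   # hypothetical clear semi-aperture of the lens


def cap_radius(semi_aperture_mm, sag_mm):
    """Radius of curvature of a spherical cap from its semi-aperture and central sag."""
    if sag_mm <= 0:
        raise ValueError("sag must be positive")
    return (semi_aperture_mm ** 2 + sag_mm ** 2) / (2.0 * sag_mm)


def plano_convex_focal_length(radius_mm, n):
    """Thin-lens focal length of a plano-convex lens in air: f = R / (n - 1)."""
    return radius_mm / (n - 1.0)


if __name__ == "__main__":
    # Larger sag (higher pressure) -> smaller radius -> shorter focal length.
    for sag_mm in (0.5, 1.0, 2.0):
        radius = cap_radius(SEMI_APERTURE_MM, sag_mm)
        focal = plano_convex_focal_length(radius, N_PDMS)
        print(f"sag {sag_mm:.1f} mm -> R {radius:.2f} mm, f {focal:.2f} mm")
```

In the real SZL the PDMS lens works together with the BK7 lens, so the system EFL would have to come from a full optical model rather than from this single-surface estimate.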


3.3 PDMS lens mold and PDMS soft lens processing<br />

The lens mold contains two pairs of mold inserts: one pair for the PDMS spherical lens and another for the PDMS flat lens. Each pair has an upper and a lower mold insert. The mold inserts are made of copper (Moldmax XL) and are fabricated on an ultra-precision diamond turning machine. Fig. 4 shows the fabrication process. Since the PDMS lens is fabricated by casting, the parting line between the upper and lower mold inserts needs an air trap to take up the overflow of excess PDMS. Four guiding pins at the corners of the mold align the mold plates.

The fabrication process is shown in Fig. 5. The PDMS lens is made by mixing, stirring, degassing and heating for curing. First, base A and curing agent B are mixed at 10:1 by weight and stirred, then degassed with a vacuum pump. The prepared PDMS is cast slowly and carefully into the mold cavity, and the mold is closed and put into an oven for curing. At a curing temperature of 100 °C, the PDMS lens is formed after 60 min. Fig. 6 shows the PDMS lenses of the SZL unit: a spherical lens and a flat lens for experimental tests, both fabricated with the same molding procedure.

(a) PDMS cast into mold cavity (b) Mold cavity full of PDMS<br />

(c) Curing in oven (d) After curing<br />

Fig. 5. Fabrication processes of PDMS lens.<br />

(a) PDMS flat lens (b) PDMS sphere lens<br />

Fig. 6. PDMS lens of soft zoom lens system.


3.4 Deformation analysis of PDMS lens<br />

In the deformation analysis of the PDMS lens, the measured Young’s Modulus and Poisson’s Ratio of PDMS are entered into the analysis software, and the mesh type, boundary conditions and loading are then set. The boundary condition constrains the contact surfaces between the PDMS lens and mechanical parts such as the barrel and spacer, and the load is the pressure applied by the pneumatic supply device. Fig. 7 shows the analysis procedure. Comparing the SZL with a PDMS flat lens and with a PDMS spherical lens, the analysis shows that the flat lens deforms more when both lenses have the same curing parameters. Fig. 8 shows the maximum displacement of the PDMS flat and spherical lenses for different values of Young’s Modulus.

Fig. 7. Flow chart of PDMS lens deformation analysis.<br />

[Plot: PDMS flat lens vs. spherical lens. Vertical axis: max. displacement (mm), 0 to 6; horizontal axis: pressure (MPa), 0.01 to 0.03. Curves: flat and spherical lenses, each at E = 1, 1.2 and 1.4 MPa.]

Fig. 8. Relationship of PDMS lens Young’s Modulus and maximum displacement.


thickness of PDMS soft lens. The SZL system has been verified with the function of zoom<br />

ability.<br />

In future work, the deformed shape of the PDMS lens under applied pressure needs to be analyzed so that the altered lens shape can be fitted and calculated. The fitted shape can then be imported into optical software to check the EFL variation after the pressure is applied and to compare it with the inspection data. Furthermore, shape analysis of the PDMS lens under pneumatic pressure can be used to find an optimized design, so that a corrected lens can be obtained to improve the optical imaging properties. As for the operating principle, an actuator other than a fluid pump should be found to change the shape of the PDMS lens and the EFL of the SZL with external pressure in zoom lens devices. Further research will address the integration of the SZL system with imaging systems for mobile devices or robot vision applications.

6. Future work<br />

The pneumatically controlled soft zoom lens provides a simple proof of our idea that the effective focal length of a zoom lens can be altered through deformation driven by changes in pneumatic pressure. However, a deformable lens driven by a pneumatic system or a MEMS micro-pump system is not convenient to apply in compact imaging devices. To overcome this drawback, we propose another type of soft zoom lens whose effective focal length can be tuned without an external force. Fig. 16 shows one such idea: a hollow ball lens is fabricated from flexible material and then filled with a transparent liquid or gel. The structure resembles the human eye, and its shape can be altered by an adjustable ring, which changes the focal length.

Fig. 16. The structure of artificial eyes.<br />

Another improvement of the pneumatically controlled soft zoom lens is shown in Fig. 17. This zoom lens can combine one or more soft lens units; each unit has at least one soft lens at both ends, is filled with a transparent liquid or gel, and is then sealed. A zoom lens with two or more soft lens units forms a successive soft zoom lens that moves like a piston: when the adjustable mechanism is moved, the fluid filling the lens is drawn along and the lens unit takes the form of a concave or convex lens. Depending on the combination, the focal length changes from that of the original configuration. These two types of soft zoom lens will be integrated into imaging systems that can be applied in robot vision.


Fig. 17. The structure of successive soft zoom lens.<br />


2. Methods for Reliable Robot Vision with a Dioptric System

E. Martínez and A.P. del Pobil
Robotic Intelligence Lab., Jaume-I University, Castellón, Spain
Interaction Science Dept., Sungkyunkwan University, Seoul, S. Korea

1. Introduction

There is growing interest in Robotics research in building robots which behave and even look like human beings. Thus, moving on from industrial robots, which act in a restricted, controlled, well-known environment, today’s robot development aims to emulate living beings in their natural environment, that is, a real environment which might be dynamic and unknown. When mimicking human behaviors, a key issue is how to perform manipulation tasks, such as picking up or carrying objects. However, as these actions involve interaction with the environment, they should be performed in such a way that the safety of all elements present in the robot workspace is guaranteed at all times, especially when those elements are human beings.

Although some devices have been developed to avoid collisions, such as cages, laser fencing or visual-acoustic signals, they considerably restrict the autonomy and flexibility of the system. Thus, to avoid those constraints, a robot-embedded sensor might be suitable for our goal. Among the available sensors, cameras are a good alternative since they are an important source of information. On the one hand, they allow a robot system to identify objects of interest, that is, objects it must interact with. On the other hand, in a human-populated, everyday environment, visual input makes it possible to build an environment representation from which a collision-free path might be generated. Nevertheless, it is not straightforward to deal successfully with this safety issue using traditional cameras, because of their limited field of view. That constraint cannot be removed by combining several images captured by rotating a camera or by strategically positioning a set of cameras, since feature correspondences would have to be established between many images at all times. This processing entails a high computational cost, which makes such approaches fail for real-time tasks.

Although combining mirrors with conventional imaging systems, known as catadioptric sensors (Svoboda et al., 1998; Wei et al., 1998; Baker & Nayar, 1999), might be an effective solution, these devices unfortunately exhibit a dead area in the centre of the image, which can be an important drawback in some applications. For that reason, a dioptric system is proposed. Dioptric systems, also called fisheye cameras, combine a fisheye lens with a conventional camera (Baker & Nayar, 1998; Wood, 2006). The conventional lens is replaced by a fisheye lens, whose short focal length allows the camera to see objects in a whole hemisphere. Although fisheye devices present several advantages over catadioptric sensors, such as the absence of dead areas in the captured images, no unique model exists for this kind of camera, unlike for central catadioptric ones (Geyer & Daniilidis, 2000).

So, with the aim of designing a dependable, autonomous manipulation robot system, a fast, robust vision system that covers the full robot workspace is presented. Two different stages have been considered:

• moving object detection
• target tracking

First of all, a new robust adaptive background model has been designed. It allows the system to adapt to unexpected changes in the scene such as sudden illumination changes, blinking computer screens, shadows, or changes induced by camera motion or sensor noise. Then, a tracking process takes place. Finally, the distance between the system and the detected objects is estimated with an additional method: information about the 3D localization of the detected objects with respect to the system is obtained from a dioptric stereo system.

Thus, the structure of this paper is as follows: the new robust adaptive background model is<br />

described in Section 2, while in Section 3 the tracking process is introduced. An epipolar<br />

geometry study of a dioptric stereo system is presented in Section 4. Some experimental<br />

results are presented in Section 5, and discussed in Section 6.<br />

2. Moving Object Detection: A New Background Maintenance Approach<br />

As presented in (Cervera et al., 2008), adaptive background modelling combined with a global illumination change detection method is used to properly detect any moving object in a robot workspace and its surrounding area. That approach can be summarized as follows:

• In a first phase, a background model is built. This model associates a statistical distribution, defined by its mean color value and its variance, with each pixel of the image. It is important to note that the implemented method obtains the initial background model without any bootstrapping restrictions.
• In a second phase, two different processing stages take place:
  o First, each image is processed at pixel level: the background model is used to classify pixels as foreground or background depending on whether or not they fit the built model.
  o Second, the raw classification based on the background model is improved at frame level.

Moreover, when a global change in illumination occurs, it is detected at frame level and the background model is adapted accordingly.

Thus, when a human or another moving object enters a room where the robot is, it is detected by means of the background model at pixel level. This is possible because each pixel belonging to the moving object has an intensity value which does not fit the background model. Then, the resulting binary image is refined by using a combination of subtraction techniques at frame level. Moreover, two consecutive morphological operations are applied to erase isolated points or lines caused by the dynamic factors mentioned above. The next step is to update the statistical model with the values of the pixels classified as background, in order to adapt it to small changes that do not represent targets.


At the same time, a process for detecting sudden illumination changes is performed at frame level. This step is necessary because the model is based on intensity values, and a change in illumination alters them. A new adaptive background model is built when an event of this type occurs; otherwise, the application would detect background pixels as if they were moving objects.
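The pixel-level part of this scheme can be sketched as a per-pixel mean and variance model. The code below is a minimal illustration and not the implementation of (Cervera et al., 2008): it works on grayscale frames stored as nested lists rather than color images, the thresholds `k`, `min_std`, `alpha` and `ratio` are hypothetical values, and the frame-level test is a simple assumed heuristic (the share of foreground pixels) standing in for the illumination change detector. The frame-level subtraction refinement and the morphological operations are omitted.

```python
# Per-pixel Gaussian background model on grayscale frames (lists of rows).
# All thresholds are illustrative assumptions, not values from the chapter.

def build_background(frames):
    """Estimate the per-pixel mean and variance from a list of frames."""
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    mean = [[sum(f[y][x] for f in frames) / n for x in range(width)]
            for y in range(height)]
    var = [[sum((f[y][x] - mean[y][x]) ** 2 for f in frames) / n
            for x in range(width)] for y in range(height)]
    return mean, var


def classify(frame, mean, var, k=2.5, min_std=2.0):
    """Return a binary mask: 1 where a pixel does not fit the model (foreground)."""
    mask = []
    for y, row in enumerate(frame):
        mask_row = []
        for x, value in enumerate(row):
            std = max(var[y][x] ** 0.5, min_std)  # floor avoids a zero threshold
            mask_row.append(1 if abs(value - mean[y][x]) > k * std else 0)
        mask.append(mask_row)
    return mask


def update_background(frame, mask, mean, var, alpha=0.05):
    """Blend pixels classified as background into the model, in place."""
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if mask[y][x] == 0:
                diff = value - mean[y][x]
                mean[y][x] += alpha * diff
                var[y][x] = (1.0 - alpha) * var[y][x] + alpha * diff * diff


def is_global_change(mask, ratio=0.5):
    """Assumed frame-level heuristic: most pixels flagged suggests a lighting change."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total > ratio
```

When `is_global_change` fires, a caller would rebuild the model with `build_background` on fresh frames instead of calling `update_background`, mirroring the rebuild step described above.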

3. Tracking Process<br />

Once targets are detected by using a background maintenance model, the next step is to track each target. A widely used approach in Computer Vision to deal with this problem is the Kalman Filter (Kalman & Bucy, 1961; Bar-Shalom & Li, 1998; Haykin, 2001; Zarchan & Mussof, 2005; Grewal et al., 2008). It is an efficient recursive filter that has two distinct phases: Predict and Update. The predict phase uses the state estimate from the previous timestep to produce an estimate of the state at the current timestep. This predicted state estimate is also known as the a priori state estimate because, although it is an estimate of the state at the current timestep, it does not include observation information from the current timestep. In the update phase, the current a priori prediction is combined with current observation information to refine the state estimate. This improved estimate is termed the a posteriori state estimate.

The current observation information is obtained by means of an image correspondence approach. One of the best-known methods of this kind is the Scale Invariant Feature Transform (SIFT) approach (Lowe, 1999; Lowe, 2004), which shares many features with neuron responses in primate vision. Basically, it is a 4-stage filtering approach that provides a feature description of an object. That feature array allows a system to locate a target in an image containing many other objects. Thus, after calculating feature vectors, known as SIFT keys, a nearest-neighbor approach is used to identify possible objects in an image. Moreover, that array of features is not affected by many of the complications experienced in other methods, such as object scaling and/or rotation. However, some disadvantages made us discard SIFT for our purpose:

• It uses a varying number of features to describe an image, and sometimes that number might not be enough<br />

• Detecting substantial levels of occlusion requires a large number of SIFT keys, which can result in a high computational cost<br />

• Large collections of keys can be space-consuming when many targets have to be tracked<br />

• It was designed for perspective cameras, not for fisheye ones<br />
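The predict/update cycle of the Kalman filter described above can be illustrated with a minimal scalar sketch. It is not the tracker used in this chapter: the random-walk motion model, the function names and the noise values `q` and `r` are assumptions chosen only to make the two phases explicit.

```python
def kalman_predict(x_post, p_post, q):
    """Predict phase: build the a priori estimate for the current timestep.

    Assumption of this sketch: a random-walk model, so the state is carried
    over unchanged and only its variance grows by the process noise q.
    """
    x_prior = x_post
    p_prior = p_post + q
    return x_prior, p_prior


def kalman_update(x_prior, p_prior, z, r):
    """Update phase: combine the a priori estimate with the observation z."""
    gain = p_prior / (p_prior + r)            # Kalman gain, between 0 and 1
    x_post = x_prior + gain * (z - x_prior)   # a posteriori state estimate
    p_post = (1.0 - gain) * p_prior           # a posteriori variance
    return x_post, p_post


def track(observations, x0=0.0, p0=1.0, q=1e-3, r=0.25):
    """Run one predict/update cycle per observation and return the estimates."""
    x, p = x0, p0
    estimates = []
    for z in observations:
        x, p = kalman_predict(x, p, q)
        x, p = kalman_update(x, p, z, r)
        estimates.append(x)
    return estimates
```

In a tracking setting, `z` would be one coordinate of the target position delivered by the image correspondence step; a full tracker would use a vector state (position and velocity) with matrix-valued covariances.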

All these approaches have been developed for perspective cameras. Although some research<br />

has been carried out to adapt them to omnidirectional devices (Fiala, 2005; Tamimi et al.,<br />

2006), a common solution is to apply a transformation to the omnidirectional image in order<br />

to obtain a panoramic one and to be able to use a traditional approach (Cielniak et al., 2003;<br />

Liu et al., 2003; Potúcek, 2003; Zhu et al., 2004; Mauthner et al., 2006; Puig et al., 2008).<br />

However, this might give rise to a high computational cost and/or mismatching errors. For<br />

all those reasons, we propose a new method which is composed of three different steps (see<br />

Fig. 1):<br />

1. The minimum bounding rectangle that encloses each detected target is computed<br />

2. Each area described by a minimum rectangle identifying a target, is transformed<br />

into a perspective image. For that, the following transformation is used:

$$\theta = c / R_{med} \tag{1}$$<br />

$$R_{med} = (R_{min} + R_{max}) / 2 \tag{2}$$<br />

$$c_1 = c_0 + (R_{min} + r) \sin\theta \tag{3}$$<br />

$$r_1 = r_0 + (R_{min} + r) \cos\theta \tag{4}$$<br />

where $(c_0, r_0)$ represent the image center coordinates in terms of column and row<br />

respectively, while $(c_1, r_1)$ are the coordinates in the new perspective image; $\theta$ is the<br />

angle between the Y-axis and the ray from the image center to the considered pixel;<br />

and, Rmin and Rmax are, respectively, the minimum and maximum radius of a torus<br />

which encloses the area to be transformed. These radii are obtained from the four<br />

corners of the minimum rectangle that encloses the target to be transformed.<br />

However, there is a special situation to be taken into account. It is produced when<br />

the center of the image is in the minimum rectangle. This situation, once it is<br />

detected, is solved by setting Rmin to 0.<br />

On the other hand, note that only the detected targets are transformed into their<br />

cylindrical panoramic images, not the whole image. This allows the system to reduce<br />

the computational cost and time consumption. Moreover, the orientation problem<br />

is also solved, since all the resulting cylindrical panoramic images have the same<br />

orientation. In this way, it is easier to compare two different images of the same<br />

object of interest<br />

3. The cylindrical panoramic images obtained for the detected targets are compared with<br />

the ones obtained in the previous frame. A similarity likelihood criterion is used for<br />

matching different images of the same interest object. Moreover, in order to reduce<br />

computational cost, images to be compared must have a centroid distance lower than a<br />

threshold. This distance is measured in the fisheye image and is described by means of<br />

a circle of possible situations of the target having as center its current position and as<br />

radius the maximum distance allowed. In that way, the occlusion problem is solved,<br />

since all parts of the cylindrical panoramic images are being analyzed.<br />
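The transformation of step 2 can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the angle symbol is written `theta`, and the pair `(c, r)` is read as a pixel of the panoramic image whose source location `(c1, r1)` is looked up with the formulas of step 2. Following the text, the radii are taken from the four rectangle corners, and `r_min` is set to 0 when the image center lies inside the rectangle.

```python
import math


def torus_radii(corners, center):
    """Return (r_min, r_max) of the torus enclosing a bounding rectangle.

    corners: four (column, row) corners of the minimum rectangle.
    center:  (c0, r0), the image center.
    """
    c0, r0 = center
    dists = [math.hypot(c - c0, r - r0) for c, r in corners]
    cols = [c for c, _ in corners]
    rows = [r for _, r in corners]
    # Special case from the text: the image center is inside the rectangle.
    inside = min(cols) <= c0 <= max(cols) and min(rows) <= r0 <= max(rows)
    return (0.0 if inside else min(dists)), max(dists)


def transform_pixel(c, r, center, r_min, r_max):
    """Apply the four formulas of step 2 to one pixel (c, r)."""
    c0, r0 = center
    r_med = (r_min + r_max) / 2.0
    theta = c / r_med
    c1 = c0 + (r_min + r) * math.sin(theta)
    r1 = r0 + (r_min + r) * math.cos(theta)
    return c1, r1
```

A complete unwarping routine would loop over every pixel of the target's panoramic image and sample the fisheye image at the returned location, for instance with nearest-neighbor or bilinear interpolation.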

Fig. 1. Tracking Process: (a) original image; (b) image from the segmentation process; (c) minimum rectangle that encloses the target; (d) corresponding cylindrical panoramic image; (e) matching process<br />

This method is valid for matching targets in a monocular video sequence. When a<br />

dioptric stereo system is provided, the processing changes. When an object of interest first<br />

appears in an image, it is necessary to establish its corresponding image in the other camera<br />

of the stereo pair. For that, a disparity estimation approach is used. That estimation is<br />

carried out until the object of interest is in the field of view of both stereo cameras. At that<br />

moment, the same identifier is assigned to both images of the object of interest. Then, matching<br />

is done as in the monocular case, in parallel for each camera, but as both images have the same identifier<br />

it is possible to estimate the object's distance from the system whenever necessary. As the matching is<br />

performed in parallel and the processing is not time-consuming, real-time performance<br />

is obtained.

Methods for Reliable <strong>Robot</strong> <strong>Vision</strong> with a Dioptric System 17<br />

4. Experimental Results<br />

For the experiments carried out, a mobile manipulator was used which incorporates a visual<br />

system composed of 2 fisheye cameras mounted on the robot base, pointing upwards to the<br />

ceiling, to guarantee safety throughout its workspace. Fig. 2 depicts our experimental<br />

setup, which consists of a mobile Nomadic XR4000 base, a Mitsubishi PA10 arm, and two<br />

fisheye cameras (Sony SSC-DC330P 1/3-inch color cameras with fish-eye vari-focal lenses Fujinon<br />

YV2.2x1.4A-2, which provide 185-degree field of view). Images to be processed were<br />

acquired in 24-bit RGB color space with a 640x480 resolution.<br />

Fig. 2. Experimental Set-up (figure labels: fisheye vari-focal lens; 24-bit RGB, 640x480 images)<br />

Several experiments were carried out, with good performance in all of them. Some<br />

of the experimental results are depicted in Fig. 3, where different parts of the whole process<br />

are shown. The first column shows the image captured by a fisheye camera; the<br />

binary image generated by the proposed approach appears in the next column. The three<br />

remaining columns show several phases of the tracking process, that is, the generation<br />

of the minimum bounding rectangle and the cylindrical panoramic images. Note that in all<br />

cases, both the detection approach and the proposed tracking process were successful in<br />

their purpose, even though some occlusions, rotation and/or scaling occurred.<br />

5. Conclusions & Future Work<br />

We have presented a dioptric system for reliable robot vision by focusing on the tracking<br />

process. Dioptric cameras have the clear advantage of covering the whole workspace<br />

without resulting in a time-consuming application, but there is little previous work on<br />

this kind of device. Consequently, we had to implement novel techniques to achieve our<br />

goal. Thus, on the one hand, a process to detect moving objects within the observed scene<br />

was designed. The proposed adaptive background modeling approach combines moving<br />

object detection with global illumination change identification. It is composed of two<br />

different phases, which consider several factors which may affect the detection process, so<br />

that constraints in illumination conditions do not exist, and neither is it necessary to wait for<br />

some time for collecting enough data before starting to process.

Fig. 3. Some Experimental Results<br />

On the other hand, a new matching process has been proposed. Basically, it obtains a<br />

panoramic cylindrical image for each detected target from an image in which identified<br />

targets were enclosed in the minimum bounding rectangle. In this way, the rotation<br />

problem has disappeared. Next, each panoramic cylindrical image is compared with all the<br />

ones obtained in the previous frame whenever they are in its proximity. As all previous<br />

panoramic cylindrical images are used, the translation problem is eliminated. Finally, a<br />

similarity likelihood criterion is used for matching target images at two different times. With<br />

this method the occlusion problem is also solved. As time consumption is a critical issue in<br />

robotics, when a dioptric system is used, this processing is performed in a parallel way such<br />

that correspondence between two different panoramic cylindrical images of the same target<br />

taken at the same time for both cameras is established by means of a disparity map.<br />

Therefore, a complete real-time surveillance system has been presented.<br />

6. Acknowledgements<br />

This research was partly supported by WCU (World Class University) program through the<br />

National Research Foundation of Korea funded by the Ministry of Education, Science and<br />

Technology (Grant No. R31-2008-000-10062-0), by the European Commission’s Seventh<br />

Framework Programme FP7/2007-2013 under grant agreement 217077 (EYESHOTS project),<br />

by Ministerio de Ciencia e Innovación (DPI-2008-06636), by Generalitat Valenciana<br />

(PROMETEO/2009/052) and by Fundació Caixa Castelló-Bancaixa (P1-1B2008-51).<br />

7. References<br />

Baker, S. & Nayar, S.K. (1998). A Theory of Catadioptric Image Formation. Proceedings of<br />

International Conference on Computer <strong>Vision</strong> (ICCV), pp. 35–42, 1998, Bombay, India<br />

Baker, S. & Nayar, S.K. (1999). A Theory of Single-Viewpoint Catadioptric Image Formation.<br />

International Journal on Computer <strong>Vision</strong>, Vol. 2, No. 2, (1999), page numbers (175-<br />

196)<br />

Bar-Shalom, Y. and Li X-R (1998). Estimation and Tracking: Principles, Techniques and Software,<br />

YBS Publishing, ISBN: 096483121X<br />

Cervera, E.; Garcia-Aracil, N.; Martínez, E.; Nomdedeu, L. & del Pobil, A. Safety for a <strong>Robot</strong><br />

Arm Moving Amidst Humans by Using Panoramic <strong>Vision</strong>. Proceedings of IEEE<br />

International Conference on <strong>Robot</strong>ics & Automation (ICRA), pp. 2183–2188,<br />

ISBN: 978-4244-1646-2, May 2008, Pasadena, California<br />

Cielniak, G.; Miladinovic, M.; Hammarin, D. & Göranson, L. (2003). Appearance-based<br />

Tracking of Persons with an Omnidirectional <strong>Vision</strong> Sensor. Proceedings of the 4th<br />

IEEE Workshop on Omnidirectional <strong>Vision</strong> (OmniVis), 2003, Madison, Wisconsin, USA<br />

Fiala, M. (2005). Structure from Motion using SIFT Features and the PH Transform with<br />

Panoramic Imagery. Proceedings of the 2nd Canadian Conference on Computer and <strong>Robot</strong><br />

<strong>Vision</strong> (CRV), pp. 506–513, 2005<br />

Geyer, C. & Daniilidis, K. (2000). A Unifying Theory for Central Panoramic Systems and<br />

Practical Applications. Proceedings of the 6th European Conference on Computer <strong>Vision</strong><br />

(ECCV), pp. 445–461, ISBN: 3-540-67686-4, June 2000, Dublin, Ireland<br />

Grewal, M., Andrews, S. and Angus, P. (2008) Kalman Filtering Theory and Practice Using<br />

MATLAB, Wiley-IEEE Press, ISBN: 978-0-470-17366-4


Haykin, S. (2001). Kalman Filtering and Neural Networks. John Wiley & Sons, Inc.<br />

ISBN: 978-0-471-36998-1<br />

Kalman, R.E. and Bucy, R.S. (1961). New Results in Linear Filtering and Prediction Theory.<br />

Journal of Basic Engineering (1961)<br />

Liu, H.; Wenkai, P.I. & Zha, H. (2003) Motion Detection for Multiple Moving Targets by<br />

Using an Omnidirectional Camera. Proceedings of IEEE International Conference on<br />

<strong>Robot</strong>ics, Intelligent Systems and Signal Processing, pp. 422–426, October 2003,<br />

Changsha, China<br />

Lowe, D.G. (1999). Object recognition from local scale-invariant features, Proceedings of<br />

International Conference on Computer <strong>Vision</strong>, pp. 1150–1157, September 1999, Corfu,<br />

Greece<br />

Lowe, D.G. (2004). Distinctive image features from Scale-Invariant Keypoints. International<br />

Journal of Computer <strong>Vision</strong>, Vol. 60, No. 2, (2004), page numbers (91-110)<br />

Mauthner, T.; Fraundorfer, F. & Bischof, H. (2006). Region Matching for Omnidirectional<br />

Images Using Virtual Camera Planes. Proceedings of Computer <strong>Vision</strong> Winter<br />

Workshop, February 2006, Czech Republic<br />

Murillo, A.C.; Sagüés, C.; Guerrero, J.J.; Goedemé, T.; Tuytelaars, T. and Van Gool, L. (2006).<br />

From Omnidirectional Images to Hierarchical Localization. <strong>Robot</strong>ics and Autonomous<br />

Systems, Vol. 55, No. 5, (December 2006), page numbers (372 – 382)<br />

Potúcek, I. (2003). Person Tracking using Omnidirectional View. Proceedings of the 9th<br />

International Student EEICT Conference and Competition, pp. 603–607, ISBN: 80-214-<br />

2370, 2003, Brno, Czech Republic<br />

Puig, L.; Guerrero, J. & Sturm, P. (2008). Matching of Omnidirectional and Perspective<br />

Images using the Hybrid Fundamental Matrix. Proceedings of the Workshop on<br />

Omnidirectional <strong>Vision</strong>, Camera Networks and Non-Classical Cameras, October 2008,<br />

Marseille, France<br />

Svoboda, T.; Pajdla, T. & Hlavác, V. (1998). Epipolar Geometry for Panoramic Cameras.<br />

Proceedings of the 5th European Conference on Computer <strong>Vision</strong> (ECCV), pp. 218–231,<br />

ISBN: 3-540-64569-1, June 1998, Freiburg, Germany<br />

Tamimi, H.; Andreasson, H.; Treptow, A.; Duckett, T. & Zell, A. (2006). Localization of<br />

Mobile <strong>Robot</strong>s with Omnidirectional <strong>Vision</strong> using Particle Filter and Iterative SIFT.<br />

<strong>Robot</strong>ics and Autonomous Systems, Vol. 54 (2006), page numbers (758-765)<br />

Wei, S.C.; Yagi, Y. & Yachida, M. (1998). Building Local Floor Map by Use of Ultrasonic and<br />

Omni-directional <strong>Vision</strong> Sensor. Proceedings of IEEE International Conference on<br />

<strong>Robot</strong>ics & Automation (ICRA), pp. 2548–2553, ISBN: 0-7803-4300-X, May 1998,<br />

Leuven, Belgium<br />

Wood, R.W. (1906). Fish-eye Views. Philosophical Magazine, Vol. 12, No. 6, (1906), page<br />

numbers (159–162)<br />

Zarchan, P. and Musoff, H. (2005) Fundamentals of Kalman Filtering: A Practical Approach,<br />

American Institute of Aeronautics and Astronautics (AIAA), ISBN: 978-1563474552<br />

Zhu, Z.; Karuppiah, D.R.; Riseman, E.M. & Hanson, A.R. (2004). Keeping Smart,<br />

Omnidirectional Eyes on You [Adaptive Panoramic Stereo<strong>Vision</strong>]. IEEE <strong>Robot</strong>ics<br />

and Automation Magazine – Special Issue on Panoramic <strong>Robot</strong>ics, Vol. 11, No. 4,<br />

(December 2004), page numbers (69–78), ISSN: 1070-9932

03<br />

An Approach for Optimal Design<br />

of <strong>Robot</strong> <strong>Vision</strong> Systems<br />

Kanglin Xu<br />

New Mexico Tech<br />

USA<br />

1. Introduction<br />

The accuracy of a robot manipulator’s position in an application environment is dependent on<br />

the manufacturing accuracy and the control accuracy. Unfortunately, there always exist both<br />

manufacturing error and control error. Calibration is an approach to identifying the accurate<br />

geometry of the robot. In general, robots must be calibrated to improve their accuracy. A calibrated<br />

robot has a higher absolute positioning accuracy. However, calibration involves robot<br />

kinematic modeling, pose measurement, parameter identification and accuracy compensation.<br />

These steps are laborious and time-consuming. For an active vision system, a<br />

robot device for controlling the motion of cameras based on visual information, the kinematic<br />

calibrations are even more difficult. As a result, even though calibration is fundamental, most<br />

existing active vision systems are not accurately calibrated (Shih et al., 1998). To address this<br />

problem, many researchers select self-calibration techniques. In this article, we apply a more<br />

active approach, that is, we reduce the kinematic errors at the design stage instead of at the<br />

calibration stage. Furthermore, we combine the model described in this article with a cost-tolerance<br />

model to implement an optimal design for active vision systems so that they can be<br />

used more widely in enterprise. We begin to build the model using the relation between two<br />

connecting joint coordinates defined by a DH homogeneous transformation. We then use the<br />

differential relationship between these two connecting joint coordinates to extend the model<br />

so that it relates the kinematic parameter errors of each link to the pose error of the last link.<br />

Given this model, we can implement an algorithm for estimating depth using stereo cameras,<br />

extending the model to handle an active stereo vision system. Based on these two models, we<br />

have developed a set of C++ class libraries. Using this set of libraries, we can estimate robot<br />

pose errors or depth estimation errors based on kinematic errors. Furthermore, we can apply<br />

these libraries to find the key factors that affect accuracy. As a result, more reasonable minimum<br />

tolerances or manufacturing requirements can be defined so that the manufacturing cost<br />

is reduced while retaining relatively high accuracy. Besides providing an approach to find the<br />

key factors and best settings of key parameters, we demonstrate how to use a cost-tolerance<br />

model to evaluate the settings. In this way, we can implement optimal design for manufacturing (DFM)<br />

in enterprises. Because our models are derived from the Denavit-Hartenberg transformation<br />

matrix, differential changes for the transformation matrix and link parameters, and<br />

the fundamental algorithm for estimating depth using stereo cameras, they are suitable for<br />

any manipulator or stereo active vision system. The remainder of this article is organized<br />

as follows. Section 2 derives the model for analyzing the effect of parameter errors on robot


poses. Section 3 introduces the extended kinematic error model for an active vision system.<br />

It should be noted that this extended model is the main contribution of our article and that<br />

we integrate the robot differential kinematics into an active vision system. Section 4 provides<br />

more detailed steps describing how to use our approach. Section 5 discusses some issues related<br />

to the design of active vision systems and DFM. Section 6 presents a case study for a real<br />

active vision system and cost evaluation using a cost-tolerance model. Finally, Section 7 offers<br />

concluding remarks.<br />

2. Kinematic Differential Model Derived from DH Transformation Matrix<br />

A serial link manipulator consists of a sequence of links connected together by actuated<br />

joints (Paul, 1981). The kinematical relationship between any two successive actuated joints is<br />

defined by the DH (Denavit-Hartenberg) homogeneous transformation matrix. The DH homogeneous<br />

transformation matrix is dependent on the four link parameters, that is, $\theta_i$, $\alpha_i$, $r_i$,<br />

and $d_i$. For the generic robot forward kinematics, only one of these four parameters is variable.<br />

If joint $i$ is rotational, $\theta_i$ is the joint variable and $d_i$, $\alpha_i$, and $r_i$ are constants. If joint<br />

$i$ is translational, $d_i$ is the joint variable and $\theta_i$, $\alpha_i$, and $r_i$ are constants. Since there always<br />

exist errors for these four parameters, we also need a differential relationship between any<br />

two successive actuated joints. This relationship is defined by the matrix $dA_i$, which is dependent<br />

on $d\theta_i$, $d\alpha_i$, $dr_i$, and $dd_i$ as well as $\theta_i$, $\alpha_i$, $r_i$, and $d_i$. Given the relationship between two successive<br />

joints $A_i$ and the differential relationship between two successive joints $dA_i$, we can derive<br />

an equation to calculate the accurate position and orientation of the end-effector with respect<br />

to the world coordinate system for a manipulator with N degrees of freedom (N-DOF).<br />

In this section, we will first derive the differential changes between two successive frames in<br />

subsection 2.1. We then give the error model for a manipulator of N degrees of freedom with<br />

respect to the world coordinate system in subsection 2.2.<br />

2.1 The Error Relation between Two Frames<br />

For an N-DOF manipulator described by the Denavit-Hartenberg definition, the homogeneous<br />

transformation matrix $A_i$ which relates the $(i-1)$th joint to the $i$th joint is (Paul, 1981)<br />

$$A_i = \begin{bmatrix} c\theta_i & -s\theta_i\, c\alpha_i & s\theta_i\, s\alpha_i & r_i\, c\theta_i \\ s\theta_i & c\theta_i\, c\alpha_i & -c\theta_i\, s\alpha_i & r_i\, s\theta_i \\ 0 & s\alpha_i & c\alpha_i & d_i \\ 0 & 0 & 0 & 1 \end{bmatrix} \tag{1}$$<br />

where $s$ and $c$ refer to sine and cosine functions, and $\theta_i$, $\alpha_i$, $r_i$, and $d_i$ are link parameters.<br />

Given the individual transformation matrix $A_i$, the end of an N-DOF manipulator can be<br />

represented as<br />

$$T_N = A_1 A_2 \cdots A_{N-1} A_N \tag{2}$$<br />

We will also use the following definitions. We define $U_i = A_i A_{i+1} \cdots A_N$ with $U_{N+1} = I$, and<br />

a homogeneous matrix<br />

$$A_i = \begin{bmatrix} n_i & o_i & a_i & p_i \\ 0 & 0 & 0 & 1 \end{bmatrix} \tag{3}$$<br />

where $n_i$, $o_i$, $a_i$ and $p_i$ are 3 × 1 vectors.<br />

Given the $i$th actual coordinate frame $A_i$ and the $i$th nominal frame $A_i^0$, we can obtain an<br />

additive differential transformation $dA_i$<br />

$$dA_i = A_i - A_i^0. \tag{4}$$<br />
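As a concrete illustration of equations (1) and (2), the following minimal sketch builds the DH matrix of one link and chains the link matrices into the end pose. The function names and the list-of-lists matrix representation are choices made for this example only; they are not the C++ class libraries mentioned in the introduction.

```python
import math


def dh_matrix(theta, alpha, r, d):
    """Homogeneous DH transformation A_i of equation (1)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, r * ct],
        [st, ct * ca, -ct * sa, r * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(links):
    """T_N = A_1 A_2 ... A_N of equation (2).

    links: sequence of (theta, alpha, r, d) tuples, one per joint.
    """
    t = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, alpha, r, d in links:
        t = matmul(t, dh_matrix(theta, alpha, r, d))
    return t
```

For example, a planar two-link arm with unit link lengths and joint angles of +90 and -90 degrees, `forward_kinematics([(math.pi / 2, 0, 1, 0), (-math.pi / 2, 0, 1, 0)])`, places the end of the arm at x = 1, y = 1 in the base frame.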


If we represent the $i$th additive differential transformation $dA_i$ as the $i$th differential transformation<br />

$\delta A_i$ right multiplying the transformation $A_i$, we can write<br />

$$dA_i = A_i\, \delta A_i. \tag{5}$$<br />

In this case, the changes are with respect to coordinate frame $A_i$.<br />

Assuming the link parameters are continuous and differentiable, we can represent $dA_i$ in another<br />

way, that is<br />

$$dA_i = \frac{\partial A_i}{\partial \theta_i} d\theta_i + \frac{\partial A_i}{\partial \alpha_i} d\alpha_i + \frac{\partial A_i}{\partial r_i} dr_i + \frac{\partial A_i}{\partial d_i} dd_i. \tag{6}$$<br />

Comparing (5) with (6), we obtain<br />

$$\delta A_i = A_i^{-1} \left( \frac{\partial A_i}{\partial \theta_i} d\theta_i + \frac{\partial A_i}{\partial \alpha_i} d\alpha_i + \frac{\partial A_i}{\partial r_i} dr_i + \frac{\partial A_i}{\partial d_i} dd_i \right). \tag{7}$$<br />

For the homogeneous matrix, the inverse matrix of $A_i$ is<br />

$$A_i^{-1} = \begin{bmatrix} n_i^t & -p_i \cdot n_i \\ o_i^t & -p_i \cdot o_i \\ a_i^t & -p_i \cdot a_i \\ 0_{1 \times 3} & 1 \end{bmatrix} \tag{8}$$<br />

By differentiating all the elements of equation (1) with respect to $\theta_i$, $\alpha_i$, $r_i$ and $d_i$ respectively,<br />

we obtain<br />

$$\frac{\partial A_i}{\partial \theta_i} = \begin{bmatrix} -s\theta_i & -c\theta_i\, c\alpha_i & c\theta_i\, s\alpha_i & -r_i\, s\theta_i \\ c\theta_i & -s\theta_i\, c\alpha_i & s\theta_i\, s\alpha_i & r_i\, c\theta_i \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \end{bmatrix} \tag{9}$$<br />

$$\frac{\partial A_i}{\partial \alpha_i} = \begin{bmatrix} 0 & s\theta_i\, s\alpha_i & s\theta_i\, c\alpha_i & 0 \\ 0 & -c\theta_i\, s\alpha_i & -c\theta_i\, c\alpha_i & 0 \\ 0 & c\alpha_i & -s\alpha_i & 0 \\ 0 & 0 & 0 & 0 \end{bmatrix} \tag{10}$$<br />

$$\frac{\partial A_i}{\partial r_i} = \begin{bmatrix} 0 & 0 & 0 & c\theta_i \\ 0 & 0 & 0 & s\theta_i \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \end{bmatrix} \tag{11}$$<br />

$$\frac{\partial A_i}{\partial d_i} = \begin{bmatrix} 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \end{bmatrix} \tag{12}$$<br />

Substituting equations (8), (9), (10), (11) and (12) into (7), we obtain<br />

$$\delta A_i = \begin{bmatrix} 0 & -c\alpha_i\, d\theta_i & s\alpha_i\, d\theta_i & dr_i \\ c\alpha_i\, d\theta_i & 0 & -d\alpha_i & u \\ -s\alpha_i\, d\theta_i & d\alpha_i & 0 & v \\ 0 & 0 & 0 & 0 \end{bmatrix} \tag{13}$$<br />


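where $u = r_i\, c\alpha_i\, d\theta_i + s\alpha_i\, dd_i$ and $v = -r_i\, s\alpha_i\, d\theta_i + c\alpha_i\, dd_i$. Since $dA_i = A_i\, \delta A_i$, therefore (Paul,<br />

1981)<br />

$$\begin{pmatrix} dx_i \\ dy_i \\ dz_i \end{pmatrix} = \begin{pmatrix} dr_i \\ r_i\, c\alpha_i\, d\theta_i + s\alpha_i\, dd_i \\ -r_i\, s\alpha_i\, d\theta_i + c\alpha_i\, dd_i \end{pmatrix} \tag{14}$$<br />

$$\begin{pmatrix} \delta x_i \\ \delta y_i \\ \delta z_i \end{pmatrix} = \begin{pmatrix} d\alpha_i \\ s\alpha_i\, d\theta_i \\ c\alpha_i\, d\theta_i \end{pmatrix} \tag{15}$$<br />

where $(dx_i\; dy_i\; dz_i)^t$ is the differential translation vector and $(\delta x_i\; \delta y_i\; \delta z_i)^t$ is the differential<br />

rotation vector with respect to frame $A_i$. Let $d_i = (dx_i\; dy_i\; dz_i)^t$ and $\delta_i = (\delta x_i\; \delta y_i\; \delta z_i)^t$. The differential vectors $d_i$ and $\delta_i$ can be<br />

represented as a linear combination of the parameter changes, which are<br />

$$d_i = k_i^1\, d\theta_i + k_i^2\, dd_i + k_i^3\, dr_i \tag{16}$$<br />

and<br />

$$\delta_i = k_i^2\, d\theta_i + k_i^3\, d\alpha_i \tag{17}$$<br />

where $k_i^1 = (0\;\; r_i c\alpha_i\;\; -r_i s\alpha_i)^t$, $k_i^2 = (0\;\; s\alpha_i\;\; c\alpha_i)^t$ and $k_i^3 = (1\;\; 0\;\; 0)^t$.<br />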
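The linear relations of this subsection can be checked numerically. The sketch below (hypothetical function names, plain nested lists) computes the differential translation and rotation vectors of one link from the three k vectors, and, for comparison, evaluates the first-order definition of the differential transformation, the inverse of the nominal link matrix times the difference between the perturbed and nominal link matrices, by finite differences. For small parameter errors the two should agree up to second-order terms.

```python
import math


def dh_matrix(theta, alpha, r, d):
    """DH link matrix with link length r and link offset d."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [[ct, -st * ca, st * sa, r * ct],
            [st, ct * ca, -ct * sa, r * st],
            [0.0, sa, ca, d],
            [0.0, 0.0, 0.0, 1.0]]


def invert_homogeneous(a):
    """Inverse of a homogeneous matrix: rows n^t, o^t, a^t and -p.n, -p.o, -p.a."""
    rt = [[a[j][i] for j in range(3)] for i in range(3)]
    p = [a[i][3] for i in range(3)]
    inv = [rt[i] + [-sum(rt[i][k] * p[k] for k in range(3))] for i in range(3)]
    inv.append([0.0, 0.0, 0.0, 1.0])
    return inv


def link_differentials(alpha, r, dtheta, dalpha, dr, dd):
    """Differential translation d_i and rotation delta_i from the k vectors."""
    ca, sa = math.cos(alpha), math.sin(alpha)
    k1 = (0.0, r * ca, -r * sa)
    k2 = (0.0, sa, ca)
    k3 = (1.0, 0.0, 0.0)
    d = tuple(k1[j] * dtheta + k2[j] * dd + k3[j] * dr for j in range(3))
    delta = tuple(k2[j] * dtheta + k3[j] * dalpha for j in range(3))
    return d, delta


def numeric_delta_a(theta, alpha, r, d, dtheta, dalpha, dr, dd):
    """Finite-difference estimate of inverse(A) times (A_perturbed - A)."""
    a0 = dh_matrix(theta, alpha, r, d)
    a1 = dh_matrix(theta + dtheta, alpha + dalpha, r + dr, d + dd)
    da = [[a1[i][j] - a0[i][j] for j in range(4)] for i in range(4)]
    inv = invert_homogeneous(a0)
    return [[sum(inv[i][k] * da[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]
```

In the numeric matrix, the last column holds the translation part, while the entries at (row 3, column 2), (row 1, column 3) and (row 2, column 1) hold the x, y and z components of the rotation part, matching the skew-symmetric layout of the differential transformation.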

2.2 Kinematic Differential Model of a Manipulator<br />

Wu (1984) has developed a kinematic error model of an N-DOF robot manipulator based<br />

on the differential changes $dA_i$ and the error matrix $\delta A_i$ due to four small kinematic errors at<br />

an individual joint coordinate frame.<br />

Let $d^N$ and $\delta^N$ denote the three translation errors and rotation errors of the end of a manipulator<br />

respectively, where $d^N = (dx^N\; dy^N\; dz^N)$ and $\delta^N = (\delta_x^N\; \delta_y^N\; \delta_z^N)$. From (Wu, 1984), we<br />

obtain<br />

$$\begin{bmatrix} d^N \\ \delta^N \end{bmatrix} = \begin{bmatrix} M_1 \\ M_2 \end{bmatrix} d\theta + \begin{bmatrix} M_2 \\ 0 \end{bmatrix} dd + \begin{bmatrix} M_3 \\ 0 \end{bmatrix} dr + \begin{bmatrix} M_4 \\ M_3 \end{bmatrix} d\alpha \tag{18}$$<br />

where<br />

$d\theta = (d\theta_1\; d\theta_2\; \ldots\; d\theta_N)^t$<br />

$dr = (dr_1\; dr_2\; \ldots\; dr_N)^t$<br />

$dd = (dd_1\; dd_2\; \ldots\; dd_N)^t$<br />

$d\alpha = (d\alpha_1\; d\alpha_2\; \ldots\; d\alpha_N)^t$<br />

and $M_1$, $M_2$, $M_3$ and $M_4$ are all 3 × N matrices whose components are functions of the N joint<br />

variables. The $i$th column of $M_1$, $M_2$, $M_3$ and $M_4$ can be expressed as follows:<br />

$$M_1^i = \begin{bmatrix} n_{i+1}^u \cdot k_i^1 + (p_{i+1}^u \times n_{i+1}^u) \cdot k_i^2 \\ o_{i+1}^u \cdot k_i^1 + (p_{i+1}^u \times o_{i+1}^u) \cdot k_i^2 \\ a_{i+1}^u \cdot k_i^1 + (p_{i+1}^u \times a_{i+1}^u) \cdot k_i^2 \end{bmatrix}$$<br />

$$M_2^i = \begin{bmatrix} n_{i+1}^u \cdot k_i^2 \\ o_{i+1}^u \cdot k_i^2 \\ a_{i+1}^u \cdot k_i^2 \end{bmatrix}$$<br />

$$M_3^i = \begin{bmatrix} n_{i+1}^u \cdot k_i^3 \\ o_{i+1}^u \cdot k_i^3 \\ a_{i+1}^u \cdot k_i^3 \end{bmatrix}$$<br />

$$M_4^i = \begin{bmatrix} (p_{i+1}^u \times n_{i+1}^u) \cdot k_i^3 \\ (p_{i+1}^u \times o_{i+1}^u) \cdot k_i^3 \\ (p_{i+1}^u \times a_{i+1}^u) \cdot k_i^3 \end{bmatrix}$$<br />

where $n_{i+1}^u$, $o_{i+1}^u$, $a_{i+1}^u$ and $p_{i+1}^u$ are the four 3 × 1 vectors of matrix $U_{i+1}$, which is defined by<br />

$U_i = A_i A_{i+1} \cdots A_N$ with $U_{N+1} = I$.<br />

Using the above equations, the manipulator's differential changes with respect to the base can<br />

be represented as<br />

$$dT_N = \begin{bmatrix} dn & do & da & dp \\ 0 & 0 & 0 & 0 \end{bmatrix} \tag{19}$$<br />

where<br />

$dn = o_1^u\, \delta_z^N - a_1^u\, \delta_y^N$<br />

$do = -n_1^u\, \delta_z^N + a_1^u\, \delta_x^N$<br />

$da = n_1^u\, \delta_y^N - o_1^u\, \delta_x^N$<br />

$dp = n_1^u\, dx^N + o_1^u\, dy^N + a_1^u\, dz^N$<br />

and $n_1^u$, $o_1^u$ and $a_1^u$ are 3 × 1 vectors of matrix $U_1$.<br />

Finally, the real position and orientation at the end of the manipulator can be calculated by<br />

$$T_N^R = T_N + dT_N \tag{20}$$<br />

where $T_N = A_1 A_2 \cdots A_N$.<br />
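To make the error model of this subsection concrete, the following sketch accumulates the end-effector translation and rotation errors link by link, which is equivalent to multiplying the columns of the four M matrices by the corresponding parameter errors, and then forms the first-order real pose. It is an illustrative sketch only: the function names are hypothetical, matrices are nested lists, and the differential matrix is written with a zero bottom row so that adding it to the nominal pose keeps a valid homogeneous matrix.

```python
import math


def dh(theta, alpha, r, d):
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [[ct, -st * ca, st * sa, r * ct],
            [st, ct * ca, -ct * sa, r * st],
            [0.0, sa, ca, d],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def chain(links):
    """Product of the DH matrices of the given links (identity if empty)."""
    t = [[float(i == j) for j in range(4)] for i in range(4)]
    for link in links:
        t = matmul(t, dh(*link))
    return t


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def end_effector_error(links, errors):
    """Return (d_N, delta_N) for links (theta, alpha, r, d) and
    per-link errors (dtheta, dalpha, dr, dd)."""
    d_n, delta_n = [0.0] * 3, [0.0] * 3
    for i, ((_, alpha, r, _), (dth, dal, dr, dd)) in enumerate(zip(links, errors)):
        ca, sa = math.cos(alpha), math.sin(alpha)
        k1, k2, k3 = (0.0, r * ca, -r * sa), (0.0, sa, ca), (1.0, 0.0, 0.0)
        d_i = [k1[j] * dth + k2[j] * dd + k3[j] * dr for j in range(3)]
        delta_i = [k2[j] * dth + k3[j] * dal for j in range(3)]
        u = chain(links[i + 1:])                 # product of all later links
        p = [u[row][3] for row in range(3)]
        for axis in range(3):                    # columns n, o, a of that product
            v = [u[row][axis] for row in range(3)]
            d_n[axis] += dot(v, d_i) + dot(cross(p, v), delta_i)
            delta_n[axis] += dot(v, delta_i)
    return d_n, delta_n


def real_pose(links, errors):
    """First-order real pose: nominal pose plus its differential change."""
    t = chain(links)
    (dx, dy, dz), (ex, ey, ez) = end_effector_error(links, errors)
    big_delta = [[0.0, -ez, ey, dx],
                 [ez, 0.0, -ex, dy],
                 [-ey, ex, 0.0, dz],
                 [0.0, 0.0, 0.0, 0.0]]
    dt = matmul(t, big_delta)                    # columns dn, do, da, dp
    return [[t[i][j] + dt[i][j] for j in range(4)] for i in range(4)]
```

A simple sanity check is to compare `real_pose(links, errors)` with the exact pose obtained by chaining the perturbed link parameters; for small errors the difference is of second order.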

3. Extended Model for an Active Visual System<br />

An active vision system, which has become an increasingly important research topic, is a<br />

robot device for controlling the motion of cameras based on visual information. The primary<br />

advantage of directed vision is its ability to use camera redirection to look at widely separated<br />

areas of interest at fairly high resolution instead of using a single sensor or array of cameras to<br />

cover the entire visual field with uniform resolution. It is able to interact with the environment<br />

actively by altering its viewpoint rather than observing it passively. Like an end effector, a<br />

camera can also be connected by a fixed homogeneous transformation to the last link. In<br />

addition, the structure and mechanism are similar to those of robots. Since an active visual<br />

system can kinematically be handled like a manipulator of N degrees of freedom, we can use<br />

the derived solutions in the last section directly.<br />

In this section, we will first introduce the camera coordinate system corresponding to the standard<br />

coordinate system definition for the approaches used in the computer vision literature<br />

and describe a generic algorithm for location estimation with stereo cameras. We will then<br />

integrate them with the kinematic error model of a manipulator.<br />


[Figure: camera frame with axes X, Y and Z, the image plane at distance f, an object point P = (x, y, z) and its image point (u, v)]<br />

Fig. 1. The camera coordinate system whose x- and y-axes form a basis for the image plane,<br />

whose z-axis is perpendicular to the image plane (along the optical axis), and whose origin is<br />

located at distance f behind the image plane, where f is the focal length of the camera lens.<br />

3.1 Pose Estimation with Stereo Cameras<br />

We assign the camera coordinate system with x- and y-axes forming a basis for the image<br />

plane, the z-axis perpendicular to the image plane (along the optical axis), and with its origin<br />

located at distance $f$ behind the image plane, where $f$ is the focal length of the camera lens.<br />

This is illustrated in Fig. 1. A point ${}^c p = (x, y, z)^t$, whose coordinates are expressed with<br />

respect to the camera coordinate frame C, will project onto the image plane with coordinates<br />

$p_i = (u, v)^t$ given by<br />

$$\pi(x, y, z) = \begin{bmatrix} u \\ v \end{bmatrix} = \frac{f}{z} \begin{bmatrix} x \\ y \end{bmatrix} \tag{21}$$<br />

If the coordinates of the point, ${}^x p$, are expressed relative to coordinate frame X, we must first perform<br />

the coordinate transformation<br />

$${}^c p = {}^c T_x\, {}^x p. \tag{22}$$<br />

Let ${}^a T_{c1}$ represent the pose of the first camera relative to the arbitrary reference coordinate<br />

frame A. By inverting this transformation, we can obtain ${}^{c1} T_a$, where<br />

$${}^{c1} T_a = \begin{bmatrix} {}^{c1}r_{11} & {}^{c1}r_{12} & {}^{c1}r_{13} & {}^{c1}t_x \\ {}^{c1}r_{21} & {}^{c1}r_{22} & {}^{c1}r_{23} & {}^{c1}t_y \\ {}^{c1}r_{31} & {}^{c1}r_{32} & {}^{c1}r_{33} & {}^{c1}t_z \\ 0 & 0 & 0 & 1 \end{bmatrix} \tag{23}$$<br />

For convenience, let<br />

$${}^{c1}R_1 = ({}^{c1}r_{11}\;\; {}^{c1}r_{12}\;\; {}^{c1}r_{13}) \tag{24}$$<br />

$${}^{c1}R_2 = ({}^{c1}r_{21}\;\; {}^{c1}r_{22}\;\; {}^{c1}r_{23}) \tag{25}$$<br />

$${}^{c1}R_3 = ({}^{c1}r_{31}\;\; {}^{c1}r_{32}\;\; {}^{c1}r_{33}) \tag{26}$$<br />


(a) (b)<br />

Fig. 2. The TRICLOPS Active <strong>Vision</strong> System has four axes: the pan axis, the tilt axis, the left vergence<br />

axis and the right vergence axis. The pan axis can rotate around a vertical axis through the center of the<br />

base. The tilt axis can rotate around a horizontal line that intersects the base rotation axis. The left<br />

and right vergence axes intersect and are perpendicular to the tilt axis. These two pictures come from<br />

Wavering, Schneiderman, and Fiala (Wavering et al.). We would like to thank the paper’s authors.<br />

Given the image coordinates, Hutchinson, Hager and Corke (Hutchinson et al., 1996) have<br />

presented an approach to find the object location with respect to the frame A using the following<br />

equations:<br />

$$A_1 \cdot {}^a p = b_1 \qquad (27)$$<br />

where<br />

$$A_1 = \begin{pmatrix} f_1\,{}^{c1}R_1 - u_1\,{}^{c1}R_3 \\ f_1\,{}^{c1}R_2 - v_1\,{}^{c1}R_3 \end{pmatrix} \qquad (28)$$<br />

$$b_1 = \begin{pmatrix} u_1\,{}^{c1}t_z - f_1\,{}^{c1}t_x \\ v_1\,{}^{c1}t_z - f_1\,{}^{c1}t_y \end{pmatrix} \qquad (29)$$<br />

Given a second camera at location ${}^a X_{c2}$, we can compute ${}^{c2} X_a$, $A_2$ and $b_2$ similarly. Finally we have an over-determined system for finding ${}^a p$:<br />

$$\begin{pmatrix} A_1 \\ A_2 \end{pmatrix} {}^a p = \begin{pmatrix} b_1 \\ b_2 \end{pmatrix} \qquad (30)$$<br />

where $A_1$ and $b_1$ are defined by (28) and (29) while $A_2$ and $b_2$ are defined as follows:<br />

$$A_2 = \begin{pmatrix} f_2\,{}^{c2}R_1 - u_2\,{}^{c2}R_3 \\ f_2\,{}^{c2}R_2 - v_2\,{}^{c2}R_3 \end{pmatrix} \qquad (31)$$<br />

$$b_2 = \begin{pmatrix} u_2\,{}^{c2}t_z - f_2\,{}^{c2}t_x \\ v_2\,{}^{c2}t_z - f_2\,{}^{c2}t_y \end{pmatrix}. \qquad (32)$$<br />
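The construction in Equations (27) to (32) can be sketched as follows. The helper names `camera_rows` and `stack`, the camera placement and the test point are hypothetical choices for illustration; the sketch only checks that a known point satisfies the stacked constraints.<br />

```python
def camera_rows(T_ca, u, v, f):
    """Return (A_i, b_i) for one camera, following Equations (28)-(29).

    T_ca: 4x4 transform from reference frame A to the camera frame.
    (u, v): measured image coordinates; f: focal length.
    """
    R1, R2, R3 = (T_ca[i][:3] for i in range(3))
    tx, ty, tz = (T_ca[i][3] for i in range(3))
    A = [[f * r1 - u * r3 for r1, r3 in zip(R1, R3)],
         [f * r2 - v * r3 for r2, r3 in zip(R2, R3)]]
    b = [u * tz - f * tx, v * tz - f * ty]
    return A, b


def stack(cameras):
    """Stack per-camera blocks into the over-determined system of Equation (30)."""
    A, b = [], []
    for T_ca, (u, v), f in cameras:
        A_i, b_i = camera_rows(T_ca, u, v, f)
        A += A_i
        b += b_i
    return A, b


# Hypothetical setup: two parallel cameras with unit focal length; camera 2 sits
# one unit along the x axis of frame A, so its A-to-camera translation is (-1, 0, 0).
T_c1a = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
T_c2a = [[1.0, 0.0, 0.0, -1.0], [0.0, 1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
p_true = [0.5, 0.2, 4.0]
obs1 = (p_true[0] / p_true[2], p_true[1] / p_true[2])          # (u1, v1)
obs2 = ((p_true[0] - 1.0) / p_true[2], p_true[1] / p_true[2])  # (u2, v2)

A, b = stack([(T_c1a, obs1, 1.0), (T_c2a, obs2, 1.0)])
# With noise-free measurements the true point satisfies every row: A p - b = 0.
residual = [sum(a * x for a, x in zip(row, p_true)) - b_i for row, b_i in zip(A, b)]
```

Each camera contributes two rows, so two cameras give four equations in the three unknown coordinates of ${}^a p$.<br />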


3.2 Pose Estimation Based on an Active <strong>Vision</strong> System<br />

As mentioned before, assuming that the camera frames are assigned as shown in Fig. 1 and that the projective geometry of the camera is modeled by perspective projection, a point ${}^c p = ({}^c x \;\; {}^c y \;\; {}^c z)^t$, whose coordinates are expressed with respect to the camera coordinate frame, will project onto the image plane with coordinates $(u \;\; v)^t$ given by<br />

$$\begin{pmatrix} u \\ v \end{pmatrix} = \frac{f}{{}^c z} \begin{pmatrix} {}^c x \\ {}^c y \end{pmatrix} \qquad (33)$$<br />

For convenience, we suppose that $f$, the focal length of the lens, does not have an error. This assumption is only for simplicity and we will discuss this issue again in Section 5.<br />

Another problem is that the difference between the real pose of the camera and its nominal pose will affect the image coordinates. This problem is difficult to solve because the image coordinates are dependent on the depth of the object which is unknown. If we assume $f / {}^c z \ll 1$, we can regard them as high order error terms and ignore them. From these assumptions, we can obtain the real position and orientation of the left camera coordinate frame which is<br />

$${}^a T_{c1} = T^R_1 A_{c1} \qquad (34)$$<br />

and those of the right camera coordinate frame which is<br />

$${}^a T_{c2} = T^R_2 A_{c2} \qquad (35)$$<br />

In the above two equations, $T^R_1$, $T^R_2$ are the real poses of the end links and $A_{c1}$, $A_{c2}$ are two operators which relate the camera frames to their end links.<br />

Given Equations (34) and (35), we can invert them to get ${}^{c1}T_a$ and ${}^{c2}T_a$. Then we can obtain an over-determined system using the method mentioned before. This system can be solved by the least squares approach as follows (Lawson & Hanson, 1995):<br />

$${}^a p = (A^T A)^{-1} A^T b \qquad (36)$$<br />

where<br />

$$A = \begin{pmatrix} f_1\,{}^{c1}R_1 - u_1\,{}^{c1}R_3 \\ f_1\,{}^{c1}R_2 - v_1\,{}^{c1}R_3 \\ f_2\,{}^{c2}R_1 - u_2\,{}^{c2}R_3 \\ f_2\,{}^{c2}R_2 - v_2\,{}^{c2}R_3 \end{pmatrix} \qquad (37)$$<br />

$$b = \begin{pmatrix} u_1\,{}^{c1}t_z - f_1\,{}^{c1}t_x \\ v_1\,{}^{c1}t_z - f_1\,{}^{c1}t_y \\ u_2\,{}^{c2}t_z - f_2\,{}^{c2}t_x \\ v_2\,{}^{c2}t_z - f_2\,{}^{c2}t_y \end{pmatrix} \qquad (38)$$<br />

If the superscript a in equations (34) and (35) indicates the world frame, we can calculate the position of P in world space.<br />
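A minimal sketch of the least squares step in Equation (36) is given below, written in plain Python so that the normal equations are visible. The function name `solve_normal_equations` and the numerical values of `A` and `b` are assumptions for illustration (two hypothetical parallel cameras one unit apart, unit focal length). In practice a QR- or SVD-based routine such as `numpy.linalg.lstsq` is usually better conditioned than forming $A^T A$ explicitly.<br />

```python
def solve_normal_equations(A, b):
    """Solve min ||A p - b|| via the normal equations (A^T A) p = A^T b."""
    n = len(A[0])
    # N = A^T A (n x n) and y = A^T b (length n).
    N = [[sum(row[i] * row[j] for row in A) for j in range(n)] for i in range(n)]
    y = [sum(row[i] * b_k for row, b_k in zip(A, b)) for i in range(n)]

    # Gaussian elimination with partial pivoting on the augmented matrix [N | y].
    M = [N[i] + [y[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("A^T A is singular; the point is not constrained")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]

    # Back substitution.
    p = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * p[j] for j in range(i + 1, n))
        p[i] = (M[i][n] - s) / M[i][i]
    return p


# Hypothetical stacked system in the form of Equations (37) and (38).
A = [[1.0, 0.0, -0.125],
     [0.0, 1.0, -0.05],
     [1.0, 0.0, 0.125],
     [0.0, 1.0, -0.05]]
b = [0.0, 0.0, 1.0, 0.0]
p = solve_normal_equations(A, b)   # approximately (0.5, 0.2, 4.0)
```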


4. Optimal Structure Design for Active <strong>Vision</strong> Systems<br />

In the previous section, we derived an equation to calculate the real position when using an active vision system with kinematic errors to estimate the location of a point P in the world space. Given this equation, it is straightforward to design the structure of an active vision system optimally. First, we can use $T_1$ and $T_2$ to replace $T^R_1$ and $T^R_2$ in equations (34) and (35). We then invert the resulting ${}^a T_{c1}$ and ${}^a T_{c2}$ to get ${}^{c1}T_a$ and ${}^{c2}T_a$. Finally we solve the over-determined system by the least squares approach to obtain the nominal location estimate $P_N$. The difference between these two results, i.e.<br />

$$E = P - P_N, \qquad (39)$$<br />

is the estimation error.<br />

Note that the estimation errors are a function of the joint variables. Consequently, a series of estimation errors can be obtained for different poses of the stereo vision system, and a curve describing the relationship between estimation errors and joint variables can be drawn. This curve can help us to analyze the estimation error or to design an active vision system. Inspired by the eyes of human beings and animals, we usually select a mechanical architecture with bilateral symmetry when we design a binocular or stereo active vision system. In this way, we can also simplify our design and manufacturing procedures, and thus reduce our design work and cost. We summarize the method described in the previous sections as follows:<br />

1. Calculate the transformation matrix $A_i$ for each link based on the nominal size of the system and use them to calculate $U_1, U_2, \ldots, U_{N+1}$, where $U_i = A_i A_{i+1} \cdots A_N$, $U_{N+1} = I$ and $T_1 = U_1$.<br />

2. Calculate the operator $A_{c1}$ which relates the camera frame to its end link and multiply it with $T_1$ to obtain the nominal position and orientation of the camera coordinate frame $T_{c1}$.<br />

3. Repeat the above two steps to obtain the nominal position and orientation of the other camera coordinate frame $T_{c2}$.<br />

4. Invert frames $T_{c1}$ and $T_{c2}$ to obtain ${}^{c1}T$ and ${}^{c2}T$. Here we assume that $T_{c1}$ represents the pose of the first camera relative to the world frame. Since they are homogeneous matrices, we can guarantee that their inverse matrices exist.<br />

5. Derive Equation (36), an over-determined system, using ${}^{c1}T$ and ${}^{c2}T$ and solve it by the least squares approach to find the nominal location estimate $P_N$.<br />

6. Calculate the four $3 \times N$ matrices $M_1$, $M_2$, $M_3$ and $M_4$ in Equation (18), which are determined by the elements of the matrices $U_i$ obtained in the first step. Since $U_i$ is dependent on the $i$th link parameters $\theta_i$, $\alpha_i$, $r_i$ and $d_i$, these four matrices are functions of the system link geometrical parameters as well as of the joint variable $\theta$.<br />

7. Based on performance requirements, machining capability and manufacturing costs, distribute tolerances to the four parameters of each link. Basically, the three geometrical tolerances $d\alpha$, $dd$, and $dr$ affect manufacturing and assembly costs while the joint variable tolerance $d\theta$ affects control cost.<br />

8. Given the four matrices $M_1$, $M_2$, $M_3$, $M_4$ and the tolerances for each link, we can use Equation (19) to compute the total error with respect to the base frame. By adding it to the $T_1$ obtained in the first step, we obtain $T^R_1$, the real position and orientation of the end link for the first camera. Similar to Step 2, we need to use the operator $A_{c1}$ to do one more transformation to find the real position and orientation of the camera coordinate frame $T^R_{c1}$.<br />


Fig. 3. Coordinate Frames for TRICLOPS - Frame 0 for world frame; Frame 1 for Pan; Frame 2(left) for left tilt; Frame 2(right) for right tilt; Frame 3(left) for left vergence; Frame 3(right) for right vergence. There are also two camera frames C1 and C2 whose origins are located at distance f behind the image planes.<br />

9. Repeating Step 6, Step 7 and Step 8 for the second camera, we can obtain $T^R_{c2}$. As mentioned above, we usually have a symmetric structure for a stereo vision system, so we assign the same tolerances $d\alpha$, $d\theta$, $dd$ and $dr$ to the two subsystems. Otherwise, the subsystem with lower precision will dominate the performance of the whole system, and the manufacturing cost spent on the higher-precision subsystem will be wasted.<br />

10. Similar to Step 4 and Step 5, we can obtain the inverse matrices of $T^R_{c1}$ and $T^R_{c2}$, and use them to build another over-determined system. Solving it, we obtain the real position $P$ with respect to the world frame. The difference between $P$ and $P_N$ is the estimation error.<br />

11. Repeat the above ten steps using another group of joint variables $\theta_1, \theta_2, \ldots, \theta_N$ until we exhaust all the joint variables in the $\Theta$ domain.<br />

12. After exhausting all the joint variables in the $\Theta$ domain, we have the maximum estimation error for the assigned tolerances. If this maximum does not satisfy the performance requirement, we need to tighten the tolerances to improve the system precision and then go to Step 1 to simulate the result again. On the other hand, if the estimation errors are much smaller than required, we have some room to relax the tolerance requirements. In this case, we also adjust the tolerances in order to reduce the manufacturing cost and go to Step 1 to start the simulation again. After some iterations, we can find an optimal solution.<br />
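The outer loop of Steps 11 and 12 can be sketched as below. This is only a skeleton under stated assumptions: `max_estimation_error`, the two estimator callables, the toy pan-angle model, the constant 0.01 rad pan error and the 0.02 requirement are all hypothetical stand-ins, and the real estimators would implement Steps 1 to 5 (nominal) and Steps 6 to 10 (with tolerances) of the procedure.<br />

```python
import math
from itertools import product


def max_estimation_error(joint_grid, estimate_nominal, estimate_real):
    """Sweep joint configurations and return (worst error norm, configuration).

    joint_grid: one iterable of sample values per joint (a discretized Theta domain).
    estimate_nominal / estimate_real: callables mapping a joint tuple to the
    3-D point estimates P_N and P respectively.
    """
    worst, worst_theta = 0.0, None
    for theta in product(*joint_grid):
        P_N = estimate_nominal(theta)
        P = estimate_real(theta)
        err = math.dist(P, P_N)  # ||E|| with E = P - P_N, Equation (39)
        if worst_theta is None or err > worst:
            worst, worst_theta = err, theta
    return worst, worst_theta


# Toy stand-ins (hypothetical): a point at unit range seen under pan angle theta,
# with the "real" system suffering a constant 0.01 rad pan error.
def nominal(theta):
    (pan,) = theta
    return (math.cos(pan), math.sin(pan), 0.0)


def real(theta):
    (pan,) = theta
    return (math.cos(pan + 0.01), math.sin(pan + 0.01), 0.0)


grid = [[math.radians(a) for a in range(-45, 46, 15)]]
worst, at = max_estimation_error(grid, nominal, real)
meets_requirement = worst <= 0.02  # hypothetical performance requirement
```

If `meets_requirement` is false the tolerances would be tightened, and if the worst error is far below the requirement they could be relaxed, before the sweep is run again.<br />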

As in many engineering designs, we can learn from trial and error, but those trials should be informed by something more than random chance and should begin from a well thought out design. For example, we can initialize the tolerances based on previous design experience or our knowledge of the manufacturing industry. The theory and model described in this article provide a tool for the design of such an active vision system.<br />


Plan # dθ dα dd dr<br />

1 (.8 ◦ .8 ◦ .8 ◦ ) T (.8 ◦ .8 ◦ .8 ◦ ) T (.005 .005 .005) T (.005 .005 .005) T<br />

2 (.8 ◦ .8 ◦ .5 ◦ ) T (.8 ◦ .8 ◦ .5 ◦ ) T (.005 .005 .005) T (.005 .005 .005) T<br />

3 (.8 ◦ .5 ◦ .8 ◦ ) T (.8 ◦ .5 ◦ .8 ◦ ) T (.005 .005 .005) T (.005 .005 .005) T<br />