ENTANGLEMENT OF GAUSSIAN STATES Gerardo Adesso

ENTANGLEMENT OF GAUSSIAN STATES Gerardo Adesso

ENTANGLEMENT OF GAUSSIAN STATES Gerardo Adesso

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

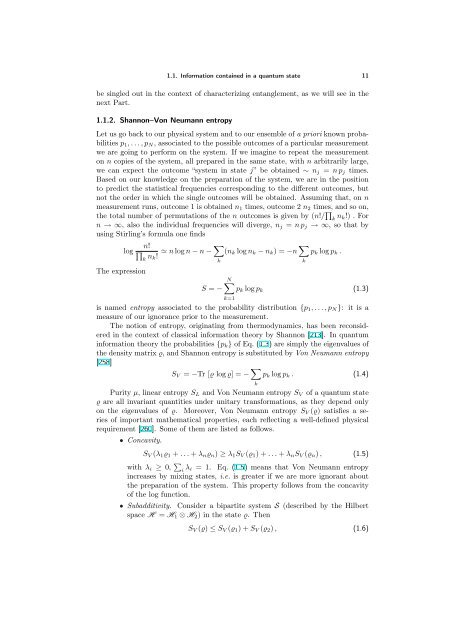

1.1. Information contained in a quantum state

be singled out in the context of characterizing entanglement, as we will see in the next Part.

1.1.2. Shannon–Von Neumann entropy

Let us go back to our physical system and to our ensemble of a priori known probabilities $p_1, \ldots, p_N$, associated with the possible outcomes of a particular measurement we are going to perform on the system. If we imagine repeating the measurement on $n$ copies of the system, all prepared in the same state, with $n$ arbitrarily large, we can expect the outcome "system in state $j$" to be obtained $n_j \sim n p_j$ times. Based on our knowledge of the preparation of the system, we are in a position to predict the statistical frequencies of the different outcomes, but not the order in which the single outcomes will be obtained. Assuming that, in $n$ measurement runs, outcome 1 is obtained $n_1$ times, outcome 2 is obtained $n_2$ times, and so on, the number of distinct orderings of the $n$ outcomes is given by the multinomial coefficient $n!/\prod_k n_k!$. For $n \to \infty$ the individual frequencies also diverge, $n_j = n p_j \to \infty$, so that by using Stirling's formula, $\log m! \simeq m \log m - m$ for large $m$, one finds

$$
\log \frac{n!}{\prod_k n_k!} \simeq n \log n - n - \sum_k \left( n_k \log n_k - n_k \right) = -n \sum_k p_k \log p_k ,
$$

where the last step uses $n_k = n p_k$ and $\sum_k n_k = n$. The expression

$$
S = -\sum_{k=1}^{N} p_k \log p_k \tag{1.3}
$$

is called the entropy associated with the probability distribution $\{p_1, \ldots, p_N\}$: it is a measure of our ignorance prior to the measurement.
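As a numerical illustration of the counting argument above, the following Python sketch compares $(1/n)\log(n!/\prod_k n_k!)$, computed with `math.lgamma`, against the entropy $S$ of Eq. (1.3) for growing $n$. It assumes the natural logarithm (entropy in nats) and takes $n_k$ as the integer nearest to $n p_k$. The function names are hypothetical and chosen only for illustration.

```python
import math

def shannon_entropy(probs):
    """S = -sum_k p_k log p_k, Eq. (1.3), with the natural log (nats).

    Terms with p_k = 0 are skipped, following the convention 0 log 0 = 0.
    """
    return -sum(p * math.log(p) for p in probs if p > 0)

def log_multinomial_per_run(probs, n):
    """(1/n) log(n! / prod_k n_k!) with n_k the integer nearest to n * p_k.

    math.lgamma(m + 1) equals log(m!), which avoids overflowing for large m.
    The rounded counts are summed again so the total stays consistent.
    """
    counts = [round(n * p) for p in probs]
    total = sum(counts)
    log_orderings = math.lgamma(total + 1) - sum(math.lgamma(c + 1) for c in counts)
    return log_orderings / total

probs = [0.5, 0.25, 0.25]
print("S =", shannon_entropy(probs))
for n in (4, 40, 400, 40_000, 4_000_000):
    print(n, log_multinomial_per_run(probs, n))
```

For $p = (1/2, 1/4, 1/4)$ the printed values approach $S = \tfrac{3}{2}\log 2$ from below as $n$ grows. The residual gap shrinks roughly like $(\log n)/n$, which is the size of the terms dropped in the Stirling approximation.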

The notion of entropy, which originated in thermodynamics, was reconsidered in the context of classical information theory by Shannon [213]. In quantum information theory, the probabilities $\{p_k\}$ of Eq. (1.3) are simply the eigenvalues of the density matrix $\varrho$, and the Shannon entropy is replaced by the Von Neumann entropy [258]

$$
S_V = -\mathrm{Tr}\,[\varrho \log \varrho] = -\sum_k p_k \log p_k . \tag{1.4}
$$
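The following sketch evaluates Eq. (1.4) for a single qubit. In that case the eigenvalues of a $2\times 2$ Hermitian density matrix have a closed form, so no linear-algebra library is needed. The natural logarithm and the helper names are assumptions made for illustration. Eigenvalues below a small tolerance are dropped, following the convention $0 \log 0 = 0$.

```python
import math

def qubit_eigenvalues(rho):
    """Eigenvalues of a 2x2 Hermitian matrix [[a, b], [conj(b), d]].

    They are (a + d)/2 +/- sqrt(((a - d)/2)**2 + |b|**2).
    """
    a, d, b = rho[0][0].real, rho[1][1].real, rho[0][1]
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return [mean + radius, mean - radius]

def von_neumann_entropy(rho, tol=1e-12):
    """S_V = -Tr[rho log rho] = -sum_k p_k log p_k over the eigenvalues p_k.

    Eigenvalues below `tol` are dropped (0 log 0 = 0), which also discards
    tiny negative values caused by rounding.
    """
    return -sum(p * math.log(p) for p in qubit_eigenvalues(rho) if p > tol)

pure = [[0.5, 0.5], [0.5, 0.5]]               # projector onto (|0> + |1>)/sqrt(2)
mixed = [[0.5, 0.0], [0.0, 0.5]]              # maximally mixed qubit
rho = [[0.7, 0.2 - 0.1j], [0.2 + 0.1j, 0.3]]  # eigenvalues 0.8 and 0.2
for name, state in [("pure", pure), ("maximally mixed", mixed), ("rho", rho)]:
    print(name, qubit_eigenvalues(state), von_neumann_entropy(state))
```

For larger Hilbert spaces one would instead diagonalize $\varrho$ numerically, for instance with `numpy.linalg.eigvalsh`, and then apply the same sum over eigenvalues.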

The purity $\mu$, linear entropy $S_L$ and Von Neumann entropy $S_V$ of a quantum state $\varrho$ are all invariant under unitary transformations $\varrho \mapsto U \varrho U^\dagger$, since they depend only on the eigenvalues of $\varrho$. Moreover, the Von Neumann entropy $S_V(\varrho)$ satisfies a series of important mathematical properties, each reflecting a well-defined physical requirement [260]. Some of them are listed below.
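To make the unitary invariance concrete, the sketch below rotates a qubit state as $\varrho \mapsto U\varrho U^\dagger$ and checks that the purity $\mu = \mathrm{Tr}\,\varrho^2$ and $S_V$ are unchanged. The parametrization `unitary(theta, phi)` is a hypothetical choice made for illustration, as are the other helper names. $S_L$ is not computed separately, since it is a function of $\mu$ alone.

```python
import cmath
import math

def matmul(x, y):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(x):
    """Conjugate transpose of a 2x2 matrix."""
    return [[x[j][i].conjugate() for j in range(2)] for i in range(2)]

def rotate(rho, u):
    """Unitary transformation rho -> U rho U^dagger."""
    return matmul(matmul(u, rho), dagger(u))

def unitary(theta, phi):
    """Hypothetical two-parameter family of 2x2 unitaries (determinant 1)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[complex(c), -s * cmath.exp(-1j * phi)],
            [s * cmath.exp(1j * phi), complex(c)]]

def purity(rho):
    """mu = Tr[rho^2]."""
    sq = matmul(rho, rho)
    return (sq[0][0] + sq[1][1]).real

def von_neumann_entropy(rho, tol=1e-12):
    """S_V from the closed-form eigenvalues of a 2x2 Hermitian matrix (nats)."""
    a, d, b = rho[0][0].real, rho[1][1].real, rho[0][1]
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return -sum(p * math.log(p) for p in (mean + radius, mean - radius) if p > tol)

rho = [[0.7 + 0j, 0.2 - 0.1j], [0.2 + 0.1j, 0.3 + 0j]]
print("original:", purity(rho), von_neumann_entropy(rho))
for theta, phi in [(0.3, 1.1), (1.2, -0.4), (2.5, 3.0)]:
    rotated = rotate(rho, unitary(theta, phi))
    # The matrix entries change, but mu and S_V agree up to rounding error.
    print(theta, phi, purity(rotated), von_neumann_entropy(rotated))
```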

• Concavity.

$$
S_V(\lambda_1 \varrho_1 + \ldots + \lambda_n \varrho_n) \geq \lambda_1 S_V(\varrho_1) + \ldots + \lambda_n S_V(\varrho_n) , \tag{1.5}
$$

with $\lambda_i \geq 0$ and $\sum_i \lambda_i = 1$. Eq. (1.5) means that the Von Neumann entropy cannot decrease when states are mixed, i.e., it is larger the more ignorant we are about the preparation of the system. This property follows from the concavity of the function $x \mapsto -x \log x$.

• Subadditivity. Consider a bipartite system $S$ (described by the Hilbert space $\mathcal{H} = \mathcal{H}_1 \otimes \mathcal{H}_2$) in the state $\varrho$. Then

$$
S_V(\varrho) \leq S_V(\varrho_1) + S_V(\varrho_2) , \tag{1.6}
$$