- Page 1 and 2:

Eckhard Bick ♠ THE PARSING SYSTEM

- Page 3 and 4:

Abstract The dissertation describes

- Page 5 and 6:

3.7.2. Making the most of the lexic

- Page 7 and 8:

Inflexion tags 451 Syntactic tags 4

- Page 9 and 10:

first, - but soon, it would move th

- Page 11 and 12:

disambiguated), same level tags (to

- Page 13 and 14:

chain, but rather the linguistic co

- Page 15 and 16:

2 The lexicomorphological level: St

- Page 17 and 18:

The core of PALMORF is written in C

- Page 19 and 20:

(d) as part of words. For instance,

- Page 21 and 22:

(1) binary search technique: a . .

- Page 23 and 24:

acapitã#=#####B(orn)###413 acara#a

- Page 25 and 26:

Words with graphical accents often

- Page 27 and 28:

2.2.3.2 The inflexional endings lex

- Page 29 and 30:

2.2.3.3 The suffix lexicon (1) 1

- Page 31 and 32:

Suffix combination rules is also us

- Page 33 and 34:

an V a DERP a- [ANT] brad Vi as HV

- Page 35 and 36:

"inimigo" ADJ M P "inimigo" N M P

- Page 37 and 38:

variable word forms receive '_'- li

- Page 39 and 40:

The remaining 2 words of the 4-word

- Page 41 and 42:

appear truncated or not, depending

- Page 43 and 44:

comparison to the substring consist

- Page 45 and 46:

My present linguistic solution 28 i

- Page 47 and 48:

In some non-personal proper nouns,

- Page 49 and 50:

(i.e., not too complex) derivationa

- Page 51 and 52:

2.2.4.5 Abbreviations and sentence

- Page 53 and 54:

Another case, where meaning bearing

- Page 55 and 56:

2.2.4.6 The human factor: variation

- Page 57 and 58:

circumflex-) accented words without

- Page 59 and 60:

the tagger tries to identify a word

- Page 61 and 62:

xxxar-#1##AaiD######54578 endings-s

- Page 63 and 64:

"sombrancelha" N F P '=sobrancelha

- Page 65 and 66:

(6) Word class distribution and par

- Page 67 and 68:

VFIN 24.96 16 7.77 9 2.46 - - 25 3.

- Page 69 and 70:

the present participle by derivatio

- Page 71 and 72:

Inflexion tags combine with word cl

- Page 73 and 74:

2.2.5.2 The individual word classes

- Page 75 and 76:

SPEC "specifiers": independent pron

- Page 77 and 78:

WORD FORM and LEXEME CATEGORIES gen

- Page 79 and 80:

WORD FORM and LEXEME CATEGORIES dea

- Page 81 and 82:

mood VFIN finite IND indicative SUB

- Page 83 and 84: prefixal derivation in verbs comple

- Page 85 and 86: graph. def. characteristics: hyphen

- Page 87 and 88: PP prepositional group de=aluguel

- Page 89 and 90: 2.2.5.3 Portuguese particles By ‘

- Page 91 and 92: Deictic adverbs refer to discourse

- Page 93 and 94: algo, algum=tanto, nada, nadinha?,

- Page 95 and 96: quando når, da hvornår quanto [QU

- Page 97 and 98: (1) Language distribution and error

- Page 99 and 100: 3 Morphosyntactic disambiguation: T

- Page 101 and 102: (4) "quando" conjunction or adverb

- Page 103 and 104: both for the simple ambiguities intr

- Page 105 and 106: case of a 3.person possessor, the u

- Page 107 and 108: The fact that these semantic distin

- Page 109: In (Karlsson et al., 1995:19ff) an

- Page 112 and 113: meaning- scope someone loves everyb

- Page 114 and 115: • 5. Tags can be integrated as me

- Page 116 and 117: 19 0 0 - - - - >= 20 15 1 - - - - t

- Page 118 and 119: Portuguese non-name words. Apart fr

- Page 120 and 121: words: 40.8 10.5 17.9 2.9 0.5 3.1 8

- Page 122 and 123: quite distinct, and few irregular e

- Page 124 and 125: 3.3 Borderline ambiguity: The limit

- Page 126 and 127: (5b) They [saw(see) [the girl with

- Page 128 and 129: Choose 'saw', if within the same cl

- Page 130 and 131: ambiguous words), moving them to "l

- Page 132 and 133: (3) modernização "modernizar" N

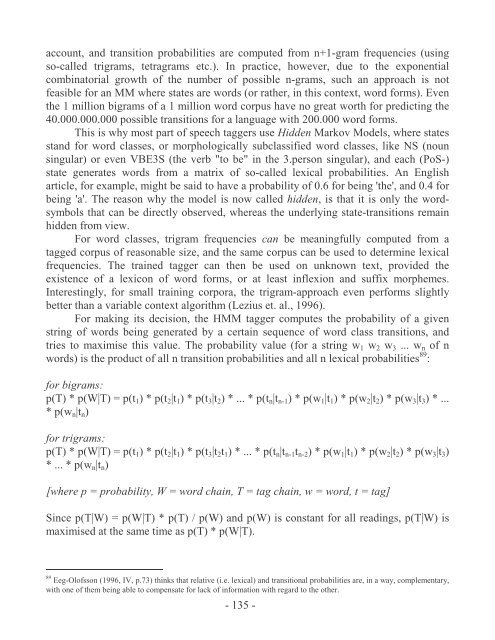

- Page 136 and 137: In a brute force approach, for an a

- Page 138 and 139: While undeniably involving more con

- Page 140 and 141: Generative Grammar, introduced and

- Page 142 and 143: node without branches matching the

- Page 144 and 145: example is a grammar of one termina

- Page 146 and 147: wide coverage tagging and parsing t

- Page 148 and 149: ambiguity, Constraint Grammar can a

- Page 150 and 151: syntactic reach of ordinary hand-cr

- Page 152 and 153: “base form-1” .. .. WORD CLAS

- Page 154 and 155: Base forms ("...") are tags like al

- Page 156 and 157: play after base form rules targetin

- Page 158 and 159: The above sequences are examples of

- Page 160 and 161: 3.7.2 Making the most of the lexico

- Page 162 and 163: (1) number of CG rules containing a

- Page 164 and 165: Intuitively, one might assume (a) t

- Page 166 and 167: constructions. Something similar is

- Page 168 and 169: are shaded, as well as the sum-colu

- Page 170 and 171: For global context syntactic rules

- Page 172 and 173: In table (4), three types of contex

- Page 174 and 175: rules appear to be slightly more com

- Page 176 and 177: (5a), continued number of con

- Page 178 and 179: in the sequential “understanding

- Page 180 and 181: all - 356 978 492 2219 C-percent 39

- Page 182 and 183: 3.8 Mapping: From word class to syn

- Page 184 and 185:

@ADVL [N-temp ADV PRP #ICL #FS #AS]

- Page 186 and 187:

@#AS-A< [ADV-rel] @#AS- @#AS-

- Page 188 and 189:

During the project period I have do

- Page 190 and 191:

syntactic errors around it, by prov

- Page 192 and 193:

1. Orthography and layout normalisa

- Page 194 and 195:

Of course, the use of dishesion mar

- Page 196 and 197:

S2 para aquele ... está perfeitame

- Page 198 and 199:

Parser performance on speech data (

- Page 200 and 201:

4 The syntactic level: A dependency

- Page 202 and 203:

subjects, but the second subject ca

- Page 204 and 205:

(7) SUBJ o [o] DET M S @>N 'the' b

- Page 206 and 207:

4.1.2 Dependency relation types: Cl

- Page 208 and 209:

(1) Table: Constituent structure ty

- Page 210 and 211:

Any further, more detailed, syntact

- Page 212 and 213:

discussion are inspired by Perini (

- Page 214 and 215:

Thus, we get ”Comeu peixe/bananas

- Page 216 and 217:

type-4 adverbials. Second, adverbia

- Page 218 and 219:

they all share one, and only one, c

- Page 220 and 221:

AB todos estes ambos os uns, uma,

- Page 222 and 223:

from personal pronouns, are not mut

- Page 224 and 225:

noun. Only very few adjectives (the

- Page 226 and 227:

4.2.2 The adpositional group head a

- Page 228 and 229:

However, adnominals can point to a

- Page 230 and 231:

(g) isso existe (até/nem (nos Esta

- Page 232 and 233:

4.3 The verb chain 4.3.1 The predic

- Page 234 and 235:

* aspect estar/andar/continuar/segu

- Page 236 and 237:

Ordinarily, 'o' is understood as su

- Page 238 and 239:

second verb's object 152 ), with so

- Page 240 and 241:

different subjects of matrix and su

- Page 242 and 243:

case function ± fronting ÷ fronti

- Page 244 and 245:

Quando ”quando” ADV @#FS-ADVL

- Page 246 and 247:

abalançar a kaste sig ud i at acab

- Page 248 and 249:

fazer xt, xd xdr få til at, lade s

- Page 250 and 251:

4.4 Clause types and clause functio

- Page 252 and 253:

@#ICL- non-finite subclause (combin

- Page 254 and 255:

While it is one of the clausality t

- Page 256 and 257:

(6) ..., tudo @S< pago @NN mão @

- Page 258 and 259:

(1g) Não [queria [comer outro bolo

- Page 260 and 261:

Apart from the presence of predicat

- Page 262 and 263:

demais [demais] DET M/F S/P @>N ‘

- Page 264 and 265:

element is a comparator and the sec

- Page 266 and 267:

without a predicator's valency info

- Page 268 and 269:

quantifying) cases, indicating adve

- Page 270 and 271:

4.5 Under the magnifying glass: Spe

- Page 272 and 273:

O assunto eram as ditaduras 2. F E

- Page 274 and 275:

The examples show that the normal "

- Page 276 and 277:

focusing function to the syntactic

- Page 278 and 279:

the quantifying adverbs mais and me

- Page 280 and 281:

qual DET @SC> @#FS-KOMP< antes era

- Page 282 and 283:

o [o] DET M S @AS< ‘that’ de [d

- Page 284 and 285:

[P=predicator, O=object, A=adverbia

- Page 286 and 287:

mais=de [mais=de] ADV @>A ‘more

- Page 288 and 289:

(2k) Ficou [ficar] V PS 3S IND V

- Page 290 and 291:

interno [interno] ADJ M S @N< '-'

- Page 292 and 293:

a preposition, por, then allowing f

- Page 294 and 295:

4.5.3 Tagging the quantifier 'todo'

- Page 296 and 297:

connection with personal pronouns -

- Page 298 and 299:

4.5.4 Adverbial function 4.5.4.1 Ar

- Page 300 and 301:

explanation for this may be that a

- Page 302 and 303:

a [a] DET F S @>N ‘the’ pergun

- Page 304 and 305:

carro [carro] N M S @SUBJ> ‘car

- Page 306 and 307:

pass the second test ('*De seu novo

- Page 308 and 309:

Therefore, the @ADV-@ADVL distincti

- Page 310 and 311:

@PIV, @ADV and @SC are - unlike @AD

- Page 312 and 313:

heads a "material" PP. The followin

- Page 314 and 315:

4.5.4.3 Intensifier adverbs Intensi

- Page 316 and 317:

4.5.4.4 Complementiser adverbs: Int

- Page 318 and 319:

complementiser in nominal FS or ICL

- Page 320 and 321:

hooked AS-relatives (3) they even a

- Page 322 and 323:

4.5.4.5 Adverb disambiguation and o

- Page 324 and 325:

position: adverb class: A) set oper

- Page 326 and 327:

(5b) fits fairly well into the (A)

- Page 328 and 329:

antes/depois+de Depois [depois] AD

- Page 330 and 331:

While (2c-e) can be described as pr

- Page 332 and 333:

In (4d) ter ('have') does have mono

- Page 334 and 335:

When the potential subject is post-

- Page 336 and 337:

(1) Table: functions of pronominal

- Page 338 and 339:

ditador [ditador] N M S @N ‘-’

- Page 340 and 341:

In other contexts, para is not usua

- Page 342 and 343:

life that the work of different gra

- Page 344 and 345:

SPEC DET/SPEC ¬ @#FS/AS-N< artic

- Page 346 and 347:

4.6.3 Tree structures for constitue

- Page 348 and 349:

dono de restaurante que pilota a pr

- Page 350 and 351:

|-@N

- Page 352 and 353:

4.7 Elegant underspecification An i

- Page 354 and 355:

5 The uses of valency: A bridge fro

- Page 356 and 357:

Returning to a research view concer

- Page 358 and 359:

5.2 Valency instantiation: From sec

- Page 360 and 361:

Note that valency is treated as a f

- Page 362 and 363:

REMOVE (@%vt) (0 @%vK) (*1C @

- Page 364 and 365:

word order can't be counted on to m

- Page 366 and 367:

than by asking ‘is it an A?’ or

- Page 368 and 369:

hemisphere membership 218 , - ‘SW

- Page 370 and 371:

disambiguate the senses ‘suit’

- Page 372 and 373:

In all, the parser uses 16 atomic s

- Page 374 and 375:

‘+ uninferable positive feature +

- Page 376 and 377:

6.5 A semantic Constraint Grammar I

- Page 378 and 379:

capable - by “negative instantiat

- Page 380 and 381:

LIST = (@=c @=v @=p @=s @=t) ; LIS

- Page 382 and 383:

um verão ‘a summer’ * ok. * f

- Page 384 and 385:

An example of argument-head polysem

- Page 386 and 387:

The program module implementing the

- Page 388 and 389:

REMOVE (@=X) (*1 @%jn BARRIER @NON-

- Page 390 and 391:

@AUX, 'know how to, can' @MV, 'ta

- Page 392 and 393:

Note that the MT engine will choose

- Page 394 and 395:

Even where none of the semantic rul

- Page 396 and 397:

A casa revista PCP @N< revista PC

- Page 398 and 399:

O Itamarati +HUM @SUBJ The Itamara

- Page 400 and 401:

and a morphological CG module may c

- Page 402 and 403:

• V-trees - vertical tree structu

- Page 404 and 405:

descriptional complexity: 'full tag

- Page 406 and 407:

Here, each line of the CG-notation

- Page 408 and 409:

7.2 Grammar teaching on the Interne

- Page 410 and 411:

system's disposal. After acquiring

- Page 412 and 413:

the reach of "subject"-ness or "obj

- Page 414 and 415:

provided and what choices can be ma

- Page 416 and 417:

terminological confusion ? term not

- Page 418 and 419:

The tree structure of the sample se

- Page 420 and 421:

(5) Syntactic tree structures (VISL

- Page 422 and 423:

two function tags, since the studen

- Page 424 and 425:

7.3 Corpus research In the case of

- Page 430 and 431:

The NURC speech corpus (“Norma ur

- Page 432 and 433:

7.4 Machine translation 7.4.1. A te

- Page 434 and 435:

processors used by translators or l

- Page 436 and 437:

The following diagram shows how dif

- Page 438 and 439:

8 Conclusion: The advantages of inc

- Page 442 and 443:

Sources: English: EngCG: Karlsson e

- Page 444 and 445:

(4) Table: syntactic tree structure

- Page 446 and 447:

lexicon which ensure removal of fal

- Page 448 and 449:

8.2 CG Progressive Level Parsing: G

- Page 450 and 451:

Methodologically, it is commonly cl

- Page 452 and 453:

$ the dollar sign is used to mark n

- Page 454 and 455:

@#ICL-AUX< argument verb in verb ch

- Page 456 and 457:

"unit" noun (e.g. "20 metros") att

- Page 458 and 459:

SEMANTIC TAGS The tables below prov

- Page 460 and 461:

ANIMATE NON-HUMAN, NON-MOVING bo 13

- Page 462 and 463:

stof 136 fabric seda ‘silke’ ma

- Page 464 and 465:

(-CONCRETE, - MASS, +COUNT) ‘modu

- Page 466 and 467:

ax 25 plus below: (-CONCRETE, -MASS

- Page 468 and 469:

cI 199 process (-CONTROL, imperfect

- Page 470 and 471:

root search lexicon search takes a w

- Page 472 and 473:

translation, prepares bilingual lex

- Page 474 and 475:

in a typical van/von-name chain con

- Page 476 and 477:

Appendix: Example sentences The fol

- Page 478 and 479:

é [ser] V PR 3S IND VFIN @FMV quas

- Page 480 and 481:

será [ser] V FUT 3S IND VFIN @FMV

- Page 482 and 483:

julgamento [julgamento] N M S @P< $

- Page 484 and 485:

Os [o] DET M P @>N sabonetes [sabo

- Page 486 and 487:

parte [parte] N F S @P< de [de] PR

- Page 488 and 489:

inesquecíveis [inesquecível] ADJ

- Page 490 and 491:

Literature Almeida, Napoleão Mende

- Page 492 and 493:

Lindberg, Nikolaj, “Learning Cons

- Page 494 and 495:

Alphabetical index Page numbers in

- Page 496 and 497:

averbal subclauses;254 finite subcl

- Page 498 and 499:

attributive;249 nominal;248 finite

- Page 500 and 501:

for auxiliary definition;235;237 pa

- Page 502 and 503:

subject identity test;236 subjects
