Assessing and reporting performances on pre-sessional EAP courses

Assessing and reporting performances on pre-sessional EAP courses

Assessing and reporting performances on pre-sessional EAP courses

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

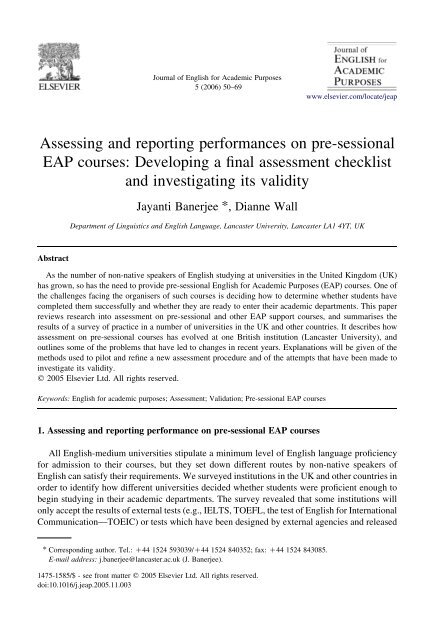

Assessing and reporting performances on pre-sessional EAP courses: Developing a final assessment checklist and investigating its validity

Journal of English for Academic Purposes 5 (2006) 50–69

Jayanti Banerjee *, Dianne Wall

Department of Linguistics and English Language, Lancaster University, Lancaster LA1 4YT, UK

Abstract

As the number of non-native speakers of English studying at universities in the United Kingdom (UK) has grown, so has the need to provide pre-sessional English for Academic Purposes (EAP) courses. One of the challenges facing the organisers of such courses is deciding how to determine whether students have completed them successfully and whether they are ready to enter their academic departments. This paper reviews research into assessment on pre-sessional and other EAP support courses, and summarises the results of a survey of practice in a number of universities in the UK and other countries. It describes how assessment on pre-sessional courses has evolved at one British institution (Lancaster University), and outlines some of the problems that have led to changes in recent years. Explanations will be given of the methods used to pilot and refine a new assessment procedure and of the attempts that have been made to investigate its validity.

© 2005 Elsevier Ltd. All rights reserved.

Keywords: English for academic purposes; Assessment; Validation; Pre-sessional EAP courses

1. Assessing and reporting performance on pre-sessional EAP courses


All English-medium universities stipulate a minimum level of English language proficiency for admission to their courses, but they set down different routes by which non-native speakers of English can satisfy their requirements. We surveyed institutions in the UK and other countries in order to identify how different universities decided whether students were proficient enough to begin studying in their academic departments. The survey revealed that some institutions will only accept the results of external tests (e.g., IELTS, TOEFL, and the Test of English for International Communication, TOEIC) or tests which have been designed by external agencies and released for institutional purposes (e.g., the Institutional TOEFL). Some institutions, in addition to demanding a minimum level of performance on an external test, require students to take an in-house test. The results of this test are used to determine whether the students need further language support before they begin their academic programme or, in some institutions, while they follow their programme. In other institutions (particularly those in the UK), students who meet all the other requirements for admission but whose performance on the external test falls short of that required for unconditional admission can be admitted provided that they successfully complete a pre-sessional EAP course.

* Corresponding author. Tel.: +44 1524 593039/+44 1524 840352; fax: +44 1524 843085.

E-mail address: j.banerjee@lancaster.ac.uk (J. Banerjee).

1475-1585/$ - see front matter © 2005 Elsevier Ltd. All rights reserved.

doi:10.1016/j.jeap.2005.11.003

There is no common approach to assessing and reporting performance on pre-sessional EAP courses. In the case of institutions that will only accept the results of external tests, students are required to re-take the tests at the end of their pre-sessional EAP courses. One recently reported example is that of Australian universities where pre-sessional EAP courses serve not only to prepare students for academic study but also for the IELTS test (Moore & Morton, 2005). At least one institution uses the results of an external test that has been piloted on its students; others design their own tests, which may or may not be modelled on tests created elsewhere for other purposes. Some institutions prefer to judge students on their in-course performance, combining internal test scores, performance on written assignments (projects and course assignments, sometimes collected together in portfolios), formal presentations, and classroom participation. The weighting given to these elements varies, but it is common to give more weight to tasks that are completed near the end of the course.

Most institutions are required to send individual reports to the central admissions office or the receiving department, either with a test result (from one or more of the tests described above), a grade determined by a combination of test results and other assessments, or a pass-fail judgement which is based either on tutors’ impressions or on a more straightforward criterion such as attendance. An estimated IELTS score is required in some institutions. A recent development is the use of ‘can-do’ scales, which consist of lists of performance objectives and columns where EAP tutors indicate whether or not students are able to achieve each objective.

The history of assessment on Lancaster University’s pre-sessional EAP courses reveals that we have adopted a number of these approaches at different times. In the early 1990s, we were asked to provide estimated IELTS scores. The admissions officers found these estimates useful as they could interpret them in the same way they had processed genuine test results when making their initial decisions. We, however, became increasingly concerned about the lack of validity and reliability in our judgements because:

(i) We did not have access to the official rating scales for speaking and writing.

(ii) Estimating reading and listening abilities was problematic, since this depended on inferences about students’ ‘inner processes’ rather than analyses of products such as essays or oral presentations, and

(iii) None of the teaching team had been trained as IELTS examiners.

We abandoned this practice in favour of writing a profile report for each student, commenting on their general ability in listening, oral presentations, group discussions, reading and writing. The report was written by the student’s main tutor, who had at least 8 hours of contact with the student every week and also had access to feedback sheets and comments from the student’s other tutors. The main tutor would also give an overall judgement of the student’s ability. The report was discussed with the student before being passed on to the course co-ordinators. The course co-ordinators reviewed and edited it to standardise the style and to ensure that it was a summary of the student’s progress and ability rather than a collection of anecdotes. Copies were sent to the University’s admissions officers, the departments and to the students themselves.

An example of a completed profile report can be found in Appendix A.

1.1. Problems with the reporting system

This system continued until 2001 without too many objections or queries, but there were a number of problems:

(i) The reports went to two different audiences: the administrative audience, consisting of the central university admissions officers and the students’ departmental admissions officers, and the students themselves. It was difficult to write in a way which satisfied the needs of two such distinct audiences, and in particular to say what needed to be said without discouraging the students.

(ii) Tutors had varying interpretations of what constituted readiness for entry into a university department and there were few opportunities in either pre-course or in-course staff meetings to discuss definitions of the key criteria. Table 1 contains a compilation of the types of features that tutors mentioned in the “Writing” section of a sample of 50 final reports produced in 2001. The left-hand column contains comments which relate to some notion of writing proficiency. It is interesting to note that features which often figure in textbooks on writing, such as cohesiveness, coherence, and checking drafts, occur rarely. The comments about linguistic performance (under “Language”) are general rather than detailed. It is a matter of debate whether the comments in the right-hand column (which relate to other facets of the students’ performance) reveal anything substantial about the students’ ability to express themselves in formal writing and to conform to standard academic conventions.

(iii) The brevity of the courses, the need to give fair coverage to all skill areas, and a course approach which emphasised out-of-class preparation and in-class group discussions meant that there were many aspects of performance that were either not visible in the classroom or that manifested themselves when students were talking with one another rather than with the tutor. This affected the depth of knowledge any tutor had of a student’s ability.

(iv) We have already explained that all the course reports were edited by the course co-ordinators in order to standardise the style and to lift the level of commentary from the more specific to the more general. It was inevitable that those doing the editing changed the meaning of some of the reports, either on purpose (toning them down) or inadvertently, through a lack of understanding of the original text. As a result of changes that made the language more diplomatic, admissions officers sometimes had problems with interpretation, which made their job harder rather than easier, thus undermining one of the purposes of the exercise.

It is clear from the survey of other institutions that Lancaster University’s practices before 2001 were not unusual. However, we remained concerned about the validity and reliability of our exit assessments. We wanted to ensure that our practice was well grounded in theory, that it was transparent to users (our students and admissions personnel), and that it was practical in terms of tutors’ skills and time.

Table 1

Final report 2001: the types of comments recorded in the section for writing

| Related to writing | Interesting, but are they related to writing? |
|---|---|
| GENERAL | Confident |
| Excellent writing | Has improved |
| Thoughtful writing (3) | Feels has improved |
| Transmits ideas clearly | Will improve |
| Cohesive (4) | No improvement in first 3 weeks, then sudden improvement |
| Coherent (6) | Raised self-awareness |
| Fluent | Has become aware of the need to write well |
| CONTENT | |
| Related to reading: | Aware of difficulties and trying to overcome (2) |
| Reveals she’s understood reading | Has taken note of principles (3) |
| Can analyse data | Puts in effort (2) |
| Can respond critically to data (10) | Has applied self |
| Argumentation: | |
| Good argumentation (11) | Responds to feedback (9) |
| Does not hesitate to give own views | Found module helpful (2) |
| Needs to read widely and critically | Has enjoyed this module |
| Style: | |
| Reader-friendly (9) | Settled down quickly |
| Academic | I have no worries |
| Concise expression | Should be able to cope with demands (2) |
| Verbose | I hope she will continue to improve |
| Conventions: | |
| Has explored conventions of academic writing | Weaknesses likely to disappear soon |
| Can convey message in own words | Weak in xxx but once that improves I hope |
| Editing: | |
| Developing editing skills | Will benefit from continued academic support |
| Less than rigorous when checking drafts (2) | |
| ORGANISATION (31) | |
| LANGUAGE | |
| General comment about language (13) | |
| Grammatical problems (5) | |
| Good word choice | |

2. Research into EAP assessment

One obvious option when restructuring the EAP exit assessment was to require the students to re-take an external EAP test such as IELTS or TOEFL. However, there are a number of drawbacks in this approach. The first is practical in that there is a time delay between the taking of the test and the release of the official results. The pre-sessional EAP courses finish just before the academic year begins so this means that students would need to take the external test before the pre-sessional course ended. Since the shortest (and largest) of Lancaster University’s pre-sessional EAP courses is only four weeks, the students would probably have to take the external test mid-way through the programme, possibly before they were able to make measurable advances in their language proficiency.

The second, and stronger, argument is made by research that has analysed the demands of university writing tasks, particularly research that has compared these demands to those on external EAP tests. Horowitz (1986) and Canseco and Byrd (1989), the latter looking specifically at the writing demands in postgraduate degree programmes, report that university students need to be able to select relevant data from sources, reorganise the data to answer the question set, and also need to write their text using academic register. Other important writing skills include the ability to present information in tables and graphs, and to revise and edit drafts. Students are rarely required to refer to personal experience. This, as Moore and Morton (2005) point out, marks an important difference between the demands of the target language situation and the writing demanded by at least one major external test, the IELTS. IELTS Task 2 typically requires students to draw upon their personal experience for examples to support claims that they make rather than to draw on reading sources or primary data.

Other differences documented by Moore and Morton (2005) include:

(i) IELTS Task 2 falls within a restricted genre of a written argument or case whereas university writing tasks cover reviews, case study reports, research reports, research proposals, summaries, exercises and short answer tasks.

(ii) The predominant rhetorical functions in IELTS Task 2 are evaluation and hortation. Though evaluation is the predominant rhetorical function of university writing tasks, other rhetorical functions such as description, summarisation and comparison are also common and hortation is relatively rare.

Consequently, Moore and Morton argue that it is not possible to combine preparation for the IELTS test with preparation for university study.

Another possible reason for lack of fit between EAP tests and the language demands students face at university may lie in the criteria used for assessment in EAP contexts. If the assessment criteria used in EAP tests do not reflect the criteria against which the students’ performance will be judged in academic contexts, then the scores achieved are less easily interpretable with reference to the students’ ability to perform tasks in those contexts. For instance, Thorp and Kennedy (2003) have reported that ‘idiomaticity’ rather than the ability to write within the academic genre is rewarded on the IELTS writing test. Jacoby and McNamara (1999) comment that the assessment criteria in specific purposes tests usually employ only language-focused categories whereas performances in a specific purposes context are not wholly language based. This last point is problematic because the main purpose of a pre-sessional EAP course is to boost students’ ability to perform in English in an academic environment so it can be argued that the focus should therefore be upon language. However, this would be to ignore the complexity of language for specific purposes contexts. It appears, therefore, that external EAP tests such as IELTS and TOEFL (in their current forms) [2005], while suitable as a pre-entry measure, are insufficiently representative of the construct of EAP (particularly EAP writing) to be used as an exit measure for pre-sessional EAP courses.

With some notable exceptions (e.g., Cho, 2003), there is little published on EAP-course final-assessment procedures. However, the construct of EAP assessment has been addressed from a number of other perspectives (see Blue, Milton, & Saville, 2000 for a compendium of recent work). Studies like Horowitz (1986), Canseco and Byrd (1989) and Moore and Morton (2005) have analysed the writing demands placed upon university students. Others have looked more broadly at the language and academic needs of university students. Weir’s (1983) groundbreaking work focused upon the needs of overseas students. More recently, as part of the development programme for the TOEFL internet-based test (TOEFL iBT), the Educational Testing Service has published a series of commissioned papers covering the reading, writing, speaking and listening demands on university students (see Bejar, Douglas, Jamieson, Nissan, & Turner, 2000; Butler, Eignor, Jones, McNamara, & Suomi, 2000; Cumming, Kantor, Powers, Santos, & Taylor, 2000; Enright, Grabe, Koda, Mosenthal, Mulcahy-Ernt, & Schedl, 2000; Hamp-Lyons & Kroll, 1997; Jamieson, Jones, Kirsch, Mosenthal, & Taylor, 2000; Waters, 1996). Though the primary purpose of these papers was to define the initial construct of the revised TOEFL test, they also form an excellent resource for the EAP construct in all four language skills.

Another useful resource is Ginther and Grant’s (1996) meta-analysis of research into the academic needs of native English-speaking university students in the US. This provides information about the language demands placed on all students (not only international students). They note that speaking and listening needs have not been investigated (possibly because these skills are taken for granted among native speakers or because they are rarely formally assessed in an academic context) and that reading is often commented on in relation to writing. Their meta-analysis suggests a number of reading and writing features that are key factors in good academic performance. These are listed in Table 2.

Table 2

Key factors in successful academic performance, taken from Ginther and Grant (1996)

| Reading | Writing |
|---|---|
| Understanding the main idea of their reading | Organisation |
| Reaching valid conclusions | Summarisation |
| Making critical evaluations of content | Well-formed sentences |
| Comprehending significant detail | Vocabulary |
| Understanding explicitly stated information | Usage |
| Detecting inferences between the lines | Research skills |
| | Economy |
| | Clarity |
| | Providing sufficient evidence |
| | Grammatical |
| | Correctly punctuated |
| | Ability to use ‘standard’ academic discourse |
| | Knowing what your tutor–examiner values (and giving that to him/her) |

Yet other researchers have explored the criteria applied by subject tutors to university students’ performances. For instance, as part of a study to design suitable writing tasks for post-graduate students, Wall (1981) analysed the criteria that Economics tutors applied to the students’ written work. She found that the tutors applied some or all of the following criteria when assessing students’ finished work: knowledge of subject matter, critical ability, structuring of the essay, answering the question, and the use of sources. Tutors responded negatively to verbosity/irrelevance and carelessness/lack of rewriting. They made relatively few comments about specific language features. Wall, Nickson, Jordan, Allwright and Houghton (1988) arrived at a similar finding when they compared the reactions of an academic tutor to a student essay with those of three EAP tutors.


Table 3

Factors influencing lecturers’ judgements of student writing, from Errey (2000, p. 156)

| | |
|---|---|
| Content | Layout |
| Use of sources | Punctuation |
| Coherence | Understanding of reading |
| Organisation | Appropriate register |
| Addresses topic | Appropriate expression |
| Pre-writing strategies | Accurate vocabulary |
| Development of ideas | Essential vocabulary |
| Completion of task | Length |
| General linguistic accuracy | Paragraphing |
| Grammatical accuracy | L1 interference |
| Fluency/style | Legibility |
| Spelling | Cohesion |

Although the <strong>EAP</strong> tutors differed in their approaches to helping the student they all seemed to share<br />

the opini<strong>on</strong> of the academic tutor that “a higher return to language teacher input can be achieved by a<br />

shift in teaching emphasis towards the wider questi<strong>on</strong>s of essay structure in general <str<strong>on</strong>g>and</str<strong>on</strong>g> the art of<br />

logical <strong>pre</strong>sentati<strong>on</strong> in particular” (1988, p. 127). More recently, Hartill (2000) <str<strong>on</strong>g>and</str<strong>on</strong>g> Errey (2000)<br />

have investigated the assessment dem<str<strong>on</strong>g>and</str<strong>on</strong>g>s faced by students at two UK universities. Hartill reports<br />

Table 4<br />

‘Cost’ factors listed in Banerjee (2003, pp. 335 <str<strong>on</strong>g>and</str<strong>on</strong>g> 343)<br />

Language related source of “cost” Course related source of “cost”<br />

Inability to underst<str<strong>on</strong>g>and</str<strong>on</strong>g> the lecturers’ accents The structure of the course<br />

Inability to underst<str<strong>on</strong>g>and</str<strong>on</strong>g> native-speaking colleagues Course c<strong>on</strong>tent<br />

Inability to underst<str<strong>on</strong>g>and</str<strong>on</strong>g> local residents –Theoretical orientati<strong>on</strong><br />

Inability to underst<str<strong>on</strong>g>and</str<strong>on</strong>g> other n<strong>on</strong>-native speakers –Inadequate coverage<br />

Difficulty in following radio broadcasts –Rigid (insufficient allowance for student c<strong>on</strong>tributi<strong>on</strong>s)<br />

Need to translate into L1 in order to underst<str<strong>on</strong>g>and</str<strong>on</strong>g> c<strong>on</strong>cepts –British centred examples<br />

Need to translate from L1 when speaking or writing –No focus <strong>on</strong> current affairs<br />

Difficulty in making themselves understood –Mathematics<br />

Restricted/inadequate lexical resources Course delivery<br />

Inability to retrieve lexical resources quickly<br />

(particularly problematic under examinati<strong>on</strong> c<strong>on</strong>diti<strong>on</strong>s)<br />

–Poor lecturing<br />

Negative percepti<strong>on</strong> of their ability to communicate C<strong>on</strong>flict between <strong>pre</strong>ferred study method <str<strong>on</strong>g>and</str<strong>on</strong>g> the<br />

dem<str<strong>on</strong>g>and</str<strong>on</strong>g>s of the course<br />

Slow reading speed C<strong>on</strong>flict between study aims <str<strong>on</strong>g>and</str<strong>on</strong>g> the dem<str<strong>on</strong>g>and</str<strong>on</strong>g>s of the<br />

course<br />

Difficulty in/inability to identify key points in reading Volume of assessment<br />

British academic writing c<strong>on</strong>venti<strong>on</strong>s Lack of variety in assessment<br />

Avoidance of resources in their L1 because of a<br />

perceived incompatibility between the two languages<br />

Lack of background in the subject<br />

Preference for resources in their L1 Relati<strong>on</strong>ship with supervisor<br />

Inability to memorise easily (particularly problematic Behaviour of course colleagues<br />

under examinati<strong>on</strong> c<strong>on</strong>diti<strong>on</strong>s)<br />

Poor examinati<strong>on</strong> performance

J. Banerjee, D. Wall / Journal of English for Academic Purposes 5 (2006) 50–69 57<br />

that departments tend to value “communicative effectiveness,” but that this does not necessarily<br />

encompass linguistic accuracy. Errey’s introspective verbal report study with lecturers from five<br />

different departments at Oxford Brookes University has revealed 24 factors that influence the<br />

lecturers’ judgements of student writing. These factors are <strong>pre</strong>sented in Table 3.<br />

A few researchers (e.g., Geoghegan, 1983; Banerjee, 2003) have explored study needs from the students’ perspective by drawing on their on-going study experiences. Banerjee (2003) conducted a longitudinal study of 25 postgraduate students, interviewing them at a number of different points during their Masters degrees. She found that they experienced difficulties (she termed this “cost”) stemming from factors related to both language and the courses they were following (Table 4). She also found that students experienced difficulties in adjusting to the British system of education, particularly the lecture-seminar structure of their courses, the emphasis on learner independence, the demand for critical and independent thought, academic conventions, the criteria used for marking (particularly the way they were applied), the role of the teacher, and the emphasis in many courses on group work.

To summarise, our previous experience of reporting student performance on the pre-sessional EAP course and the studies reported above narrowed our options with respect to the reporting mechanisms that we could consider. It was clear that the use of an external EAP test would risk construct under-representativeness and would undermine the usefulness of the report for the admitting departments and the students. Our experience of using profile reports based on tutors’ personal criteria was similarly unsatisfactory. We wanted to devise a report form which would incorporate key features from the research we have reviewed and, because much of this focused on writing, would also incorporate features from scales such as those found in the English Speaking Union (ESU) framework (Carroll & West, 1989). We would complement this with our own experience, not only as pre-sessional and in-sessional tutors but also as members of an academic department.

In the sections that follow, we describe both the design of an exit assessment checklist and the initial stages in its validation.

3. Developing a final assessment checklist

We took a number of decisions in order to ensure that the new exit assessment procedure provided more useful guidance to tutors, students and the admissions officers; was more practical; and explicitly reflected theories of the EAP construct. These decisions were:

(i) Function and audience: The main function of the report would be to give the admissions officers an accurate picture of the student’s abilities. Students would receive a copy of the report, and it was hoped they would benefit from receiving a frank account of their strengths and weaknesses, but we would not edit the reports to soften the blow for students who were not performing adequately.

(ii) Coverage: The report form would explicitly reflect current EAP theory. It would specify the skills and strategies that had emerged from our analysis of previous research, the report forms prepared in 2001, and our previous experience as EAP teachers and academic tutors. We decided that it would be inappropriate to comment on attitude, aptitude, motivation, awareness, or any other quality which was a feature of personality rather than of linguistic or EAP ability.

(iii) Evidence: We wanted to make it clear that we were only commenting on features that we had evidence for. We also wanted to make clear the limits of our judgements. For instance, we stated explicitly that the tasks that students performed during the pre-sessional course approximated (but could not fully replicate) the demands students were likely to encounter in their study contexts. We would indicate how students had performed on the tasks set during the course rather than predicting whether they would do well in their future settings. Our intention was to provide our primary users, the admissions personnel, with an evidential basis for deciding whether or not a student was ready to begin studying on a particular programme. This allowed admissions tutors for different degree programmes to interpret the evidence differently, depending on the aspects of EAP proficiency they considered more important for their fields of study.

(iv) Format: It was clear that prose reports were difficult for the tutors to write and time-consuming to edit. They were also difficult for the end-users (primarily university admissions personnel) to interpret. One way of eliminating the problems of composing, editing and interpreting was to devise a checklist where tutors would only have to place ticks in columns to indicate whether the student had or had not demonstrated certain abilities.

The procedure for devising the checklist was as follows:

1. We drew up an exhaustive list of the features of academic reading, writing, listening and speaking abilities.

2. We went through an iterative process of combining some items that were similar and, in contrast, breaking down some broad descriptors into sub-categories. For instance, we split an item taken from Errey (2000), “content”, into two sub-categories: “can analyse the topic of the assignment” and “can produce relevant content”.

3. We grouped items according to the language skill they represented. At times these decisions were complex. For example, “providing sufficient evidence” (Ginther & Grant, 1996) is a writing skill but is also dependent on the student’s ability to understand and make use of reading. We resolved this particular problem by breaking the skill down into two components: “can analyse argumentation in academic texts” (classified in the checklist as a reading skill) and “can reproduce others’ ideas using his/her own words” (classified as a writing skill).

4. The checklist was formatted to include two columns for each can-do descriptor: “yes” (the student can do this) or “must pay attention to” (this skill or strategy).

5. The draft checklist was then presented to the University’s postgraduate admissions officer and to the pre-sessional course tutors. Changes were made on the basis of their feedback.

6. The revised checklist was piloted by the pre-sessional course tutors on three of their students each (a strong student, a weak student, and one whose ability fell between these two extremes), approximately 27 students in total. On the basis of this pilot procedure, further changes were made.

7. Taking account of recommendations by Weigle (1994) for rater familiarisation with the adopted rating scale, there was extensive opportunity, at each point during the consultation and piloting phase, for discussion of what the can-do descriptors meant and how they might be applied. Indeed, the raters (the pre-sessional course tutors) contributed to the content, wording and final format of the exit assessment checklist. The details of this consultation are presented in Section 3.1 (below).

8. We also met with the students in order to introduce the checklist to them as well as to elicit their feedback on the items included. Changes were made on the basis of this feedback.

9. The final form of the exit assessment checklist (Appendix B) was used during the last week of the pre-sessional course. Each student received their report and a copy was sent to the University’s postgraduate admissions officer and the admissions personnel in admitting departments.

3.1. Consulting the stakeholders and piloting the checklist

As has already been stated, the aims of the consultation and piloting phase were threefold:

• To provide some of our stakeholders (the University’s admissions personnel and the pre-sessional course tutors) with an opportunity to comment on the initial draft and suggest changes or additions before the piloting stage (we consulted students during the piloting stage).

• To pilot the revised instrument in order to ascertain its usability and coverage.

• To maximise the course tutors’ opportunities to familiarise themselves with the checklist and to arrive at shared interpretations of each of the ‘can-do’ statements.

During the consultation and piloting phase, we received valuable feedback in a number of areas:

(i) Usability of the report form: The tutors found the report form quick to complete and, despite some suggestions for changes, they believed that they understood the can-do statements. The University’s Postgraduate Admissions Officer noted that the form provided judgements in four skill areas, commenting that this paralleled the report formats of language proficiency test scores like the IELTS. She thought this was a step forward, arguing that this form was more transparent than its predecessor and that the information was easy to retrieve because it was not presented in prose. She believed that course directors in the academic departments would be able to return to this form at a later date in order to compare the students’ on-going performance in their departments with what was reported at the end of the EAP course.

(ii) Format of the overall report: The tutors initially argued that if a student seemed to be satisfactory in all respects it would be sufficient to present a summary judgement of his/her performance on the course. They suggested that the form for such students should consist of a single page with a box to tick for each of the four skills (listening, speaking, reading and writing). We resisted this suggestion for two reasons. The first was that we needed to be sure (inasmuch as this is possible) that all the tutors shared the same understanding of the construct for each skill area. We felt that if they had to place a tick opposite each aspect listed under each skill they would be obliged to consider all the traits that we considered to be important. The second reason had to do with accountability and, ultimately, face validity: we felt it unlikely that the University would accept our judgements on the adequacy or otherwise of students’ performances unless we demonstrated how we had arrived at our decisions.

Nevertheless, the course tutors prevailed upon us to include a summary judgement page (this became the final page of the exit assessment form). They argued that they would feel more comfortable being “honest” in the checklist if they could also indicate how seriously they viewed a student’s particular difficulties. They would not worry about ticking individual boxes if they could indicate in the summary section that they were “generally satisfied with the student’s performance”. This would allow them to give their students specific feedback and guidance without suggesting that they had not “successfully completed” the course.

(iii) Wording of the judgement: The tutors argued that some of the diplomacy of the original reporting format had been lost and that, in order to correct the apparent baldness of the claims being made, the descriptors needed to be presented within the context in which the judgements took place. The outcome of this discussion was that all the judgements are prefaced with the phrase “Our evidence suggests that.”

The tutors also argued that it should be possible to indicate when there was no evidence for a particular judgement. For instance, a skill may have been taught but the student may not have taken advantage of opportunities to demonstrate it. This resulted in the inclusion of the third column, “We have no evidence.” It should be noted at this point that the tutors’ request for this column proved somewhat problematic when the report was used in its final form (see below).

Thirdly, in line with the spirit of the new exit assessment, the tutors rejected the formulations “will have difficulty” and even “has difficulty” as they implied a prediction of future performance. They preferred instead to locate their judgements within the period of the course, hence the formulation “has had difficulty”.

(iv) Evidence available on which to base a judgement: Though the tutors considered the report form easy to use and transparent, they were concerned about the extent to which they could make detailed judgements about the students’ listening and reading abilities. They argued that since the processes involved in listening and reading are internal, they would be reliant on student self-report (which might not be reliable) or on their interpretation of students’ listening and reading abilities based on what they produced when speaking and writing. This problem has not been fully or satisfactorily resolved. Indeed, it remains one of the difficulties in any assessment of receptive skills.

The tutors also argued that students with good language skills might fail to give evidence for certain can-do statements, such as those describing strategies like “asking for clarification,” since they might never be in a position where they have misunderstood and have to ask for clarification. The solution we have adopted has been to orientate the students to the criteria by which they are to be judged. When the students register they receive a self-assessment checklist which requires them to make judgements about their language abilities at the start of the course. This checklist replicates the can-do statements used in the exit assessment checklist and has proved a useful consciousness-raising tool, particularly since students discuss their self-assessments during their first consultation with their tutor. Nevertheless, we are still concerned that students might fail to fully demonstrate their abilities according to the “can-do” statements and we plan to explore this area in a later study.

4. Investigating the validity of the checklist

The final version of the checklist incorporated as much of the feedback as was feasible from the course tutors and the University’s Postgraduate Admissions Officer. It was then used to assess 86 students, all of whom completed the 4-week pre-sessional EAP course. In a further stage of developing and reviewing this new assessment format, we investigated the validity of the exit assessment in two phases:

• Content relevance and coverage

• Interpretation and use of the new instrument

4.1. Content relevance and coverage

The aims of this validation phase were to determine where evidence for items on the checklist might be available from assessment opportunities during the programme and what opportunities for collecting evidence were being missed. The first step in this process was to list all the assessment instruments that were used during the course, when they were used, what function they performed, what they consisted of, and where the results of the assessment were kept for reference. A table containing the details of 12 instruments can be found in Appendix C.

We distributed this list and copies of all the instruments to a seminar of colleagues working in language testing, and asked them to analyse the instruments to determine whether there were features on the final checklist for which no evidence was being collected during the course and whether there was evidence being collected on the course for which there were no features mentioned in the checklist.

The main conclusion emerging from the discussion was that there was a great deal of assessment on the pre-sessional course, both formal and informal, but the overall system was not integrated. Each instrument had its own function and its own rationale but the instruments did not fit together in a coherent whole. The following types of problems were identified:

• Entry on checklist but no evidence available through instruments: for example, the checklist asks for a judgement concerning the student’s ability to participate in small group discussions, but there is no structured way of gathering this evidence.

• Evidence available but no entry on checklist: for example, the tutor feedback form for oral presentations includes several features of oral performance which do not appear on the checklist.

• Terminology: some of the terminology in the checklist and in the instruments was not defined, so it was not clear whether they were referring to the same thing. For example, the TEEP (Test of English for Educational Purposes) attribute writing scale, which is used to assess the students’ first piece of writing, contains a criterion called “cohesion”. There is no equivalent term on the checklist. The nearest notion seems to be “Can make appropriate use of heading and subheadings,” but this may have been quite different from what the TEEP designers originally had in mind.

• What do these things look like in the classroom? The checklist contained several notions such as “Can cope with heavy reading load” and “Can understand gist” but it was not clear what the teachers should look for to confirm whether students were able to do these things.

It was to be expected that there would be inconsistencies within the overall system, given that different instruments and practices were designed at different times in the history of the development of the programme. We have begun to harmonise all of the components so that evidence is available for all the features specifically mentioned in the checklist.


4.2. Interpretation and use of the checklist

In the second phase of our validity investigation, we explored how the pre-sessional course tutors used the checklist to make judgements about individual students. Since the course tutors had been so closely involved in the development of the instrument, we were particularly interested in the extent to which the co-operative development process had benefited them in the way they used it.

Three tutors were selected for individual interviews (out of a teaching team of nine) based on their general teaching experience and their experience of teaching EAP (particularly, the Lancaster University pre-sessional course). One was a teacher with many years of experience in both teaching and course management. He had worked on the teaching team the previous year and had used the previous reporting format. The second was a tutor who had also worked on the teaching team the previous year but who was less experienced. The third tutor selected had no previous EAP teaching experience and was teaching on this course for the first time. She had therefore not used the old reporting format.

Each interview followed a three-part structure. In the first stage, the tutors were asked for<br />
their general impressions of the form and the process of using it. They were then shown a blank<br />
copy of the form and asked to work through it, pointing out any difficulties they faced in<br />
completing it. In the final stage, they were given the report forms they had previously completed<br />
for their class. They were asked to select three students (the top student, the bottom student, and<br />
one in the middle) and then asked to recount the process they had gone through when making<br />
judgements about them.<br />

The interviews revealed three perceived strengths of the report form. First, the tutors were<br />
impressed by the amount of time saved in using this report form. The tutor who was teaching<br />
on the course for the first time commented that she could not imagine having to write prose<br />
reports in addition to the other pressures on tutors’ time. The tutors also appreciated the<br />
transparency of the report form. One tutor commented that he did not “have to be creative.”<br />
He also argued that it was clearer for departments, who no longer had “to wade through ...<br />
diplomatically couched language to find out what the tutor was really getting at.” Finally, the<br />
tutors were in favour of the disclaimer on the front page which delimited the judgements<br />
being made to the context of the course.<br />

The interviews also revealed that the tutors were able to identify the behaviours on which<br />
they had based their judgements and that they had used both the more formal opportunities<br />
for monitoring progress (such as essays and oral presentations) and the learning<br />
opportunities provided in class (such as group discussions and poster presentations) to<br />
gather evidence of students’ language ability. Clearly, they found it easiest to comment on<br />
language areas for which they had formal evidence. This was particularly true of writing<br />
because, as one tutor commented, “it is the skill we gather the most evidence for”.<br />
Listening and reading definitely presented problems, not least because the tutors were<br />
unable to observe their students in all the contexts for which they were making judgements.<br />
For instance, though the students attended lectures each week (delivered by invited<br />
members of academic departments), the tutors responsible for judging their progress were<br />
not necessarily present and so did not have an opportunity to observe them.<br />

Where tutors did not have direct access to a particular ability such as “Can understand a<br />
variety of non-native speaker accents,” they found that they were able to consult with the<br />
students. This is a particularly interesting outcome because, during the consultation and<br />
piloting process, the tutors had expressed some concern about how much they should rely on<br />
student self-assessment. Since the exit assessment report represented high stakes for the<br />
students, they might well have described themselves in a way that they thought appropriate in<br />
order to successfully complete the course. However, the tutors we interviewed felt that their<br />
students had largely been forthright in their self-assessments and individual tutorials. In fact,<br />
one tutor commented that the students “were more honest than perhaps we give them credit<br />
for.” Indeed, many students encouraged their tutors to be “truthful” so that they could set<br />
learning goals for the rest of the year.<br />

Nevertheless, a number of issues also emerged from the interviews. Most of them are clearly<br />
training issues which need to be addressed as soon as possible:<br />

1. The checklist is criterion-referenced and each student is to be judged against the can-do<br />
statements. However, at least one tutor not only considered whether the student had the stated<br />
ability or had had difficulty but also tried to rank his students. For example, in the case of the<br />
writing item “Can produce grammatically correct text” he ticked that a student had this<br />
ability not because she performed well in this area but because he wanted to distinguish her<br />
from another good student who did not.<br />

2. During the consultation and piloting phase (see Section 3.1, above), the tutors specifically<br />
requested a column entitled “we have no evidence” to cover cases where students did not<br />
demonstrate a particular ability. The purpose of this column was discussed extensively at the<br />
time, yet during the implementation of the checklist at least one tutor agonised over using<br />
the “no evidence” column, worrying that it would imply that the student did not have that<br />
language ability.<br />

3. The exit assessment checklist includes a summary judgement on the final page. This was<br />
included at the request of the course tutors (see Section 3.1, above). However, the interviews<br />
revealed that the route by which the tutors arrived at their summary judgements differed. One<br />
tutor said that she had a global sense of whether a student had made satisfactory progress or<br />
not and based her judgement on that rather than on the distribution of ticks across the report.<br />
Another tutor based her judgement on the number of ticks the student received in the “has<br />
had some difficulties” column as well as her judgement of the gravity of these ticks. This last<br />
practice is particularly interesting for it introduces a level of detail not explicit in the report<br />
form. This has implications for the relationship between the detailed checklist and the<br />
summary section of the report form and is again an issue to be dealt with in training.<br />

Though there is still much to be done to help tutors adjust to the new reporting system and to<br />
orientate new users of the checklist, the remaining concern relates to how the tutors applied the<br />
“can-do” statements. It is important to remember that the tutors had discussed their<br />
interpretations of items during the consultation and piloting stage (described earlier) and were<br />
therefore familiar with the checklist. Indeed, they had contributed to its wording. Yet, as Lumley<br />
(2002) points out, when making their judgements all raters have to reconcile the rating scale (in<br />
this case the can-do statements) and their observation and interpretation of student<br />
performances. This proved to be an issue for the tutors we interviewed. For instance, one<br />
tutor commented on the word “satisfactory” in the statement “can take satisfactory notes.” Since<br />
notes are generally for personal use, this tutor wondered whether she was the best judge of<br />
whether a student’s notes were “satisfactory” for the student’s own purposes. Much can be done<br />
to discuss with the course tutors the behaviours that constitute evidence of a particular ability (as<br />
well as the behaviours that suggest difficulty). However, the tutor is still the ultimate arbitrator<br />
and, as Lumley (2002) argues, it is not possible to cover all eventualities during rater training.


5. Planning future directions<br />

In this paper, we have presented the rationale for a new assessment instrument and the process<br />
we went through to tailor the instrument to our needs and to investigate its validity. Our work so<br />
far has identified two areas for immediate action:<br />

1. Integrating continuous assessment with final assessment: Our review of the instruments we use<br />
for continuous assessment (Appendix C) clearly shows that there is a need to review and rationalise<br />
our system, so that each formative judgement contributes to the summative checklist. This should<br />
also provide our students with sufficient opportunities to demonstrate whether they have mastered<br />
the abilities in the final checklist. This will, of course, have an impact upon syllabus design.<br />

2. Training the tutors: Despite our involvement of course tutors in the development of the checklist,<br />
it is clear that they still diverged in their interpretation and implementation of the instrument. This<br />
problem could be exacerbated in cases where tutors are teaching on the course for the first time. We<br />