Channel Coding 1 - Communications Engineering

Channel Coding 1 - Communications Engineering Channel Coding 1 - Communications Engineering

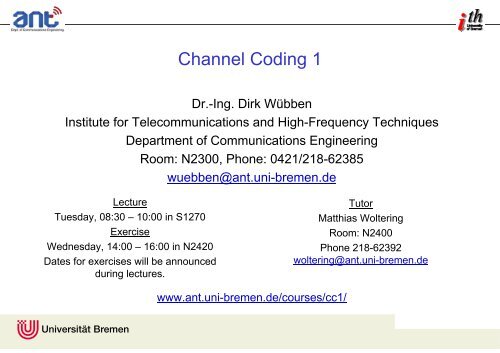



<strong>Channel</strong> <strong>Coding</strong> 1<br />

Dr.-Ing. Dirk Wübben<br />

Institute for Telecommunications and High-Frequency Techniques<br />

Department of <strong>Communications</strong> <strong>Engineering</strong><br />

Room: N2300, Phone: 0421/218-62385<br />

wuebben@ant.uni-bremen.de<br />

Lecture<br />

Tuesday, 08:30 – 10:00 in S1270<br />

Exercise<br />

Wednesday, 14:00 – 16:00 in N2420<br />

Dates for exercises will be announced<br />

during lectures.<br />

www.ant.uni-bremen.de/courses/cc1/<br />

Tutor<br />

Matthias Woltering<br />

Room: N2400<br />

Phone 218-62392<br />

woltering@ant.uni-bremen.de

Cehannl Ciodng 1<br />

Dr.-Ing. Drik Webbün<br />

Iuttitnse for Toliteuemmancoincs and Hgih-Fueecqrny Tequecinhs<br />

Dmpeteanrt of Ccninoomumtias Eiinennegrg<br />

Room: N2300, Phnoe: 0241/821-62538<br />

wuebben@ant.uni-bremen.de<br />

Lrtecue<br />

Tduaesy, 08:30 – 10:00 in S1270<br />

Exsricee<br />

Wedasnedy, 14:00 – 16:00 in N2420<br />

Daets for ercesxeis wlil be<br />

acounennd duinrg lteuecrs.<br />

Toutr<br />

Mttahias Wletirong<br />

Room: N2400<br />

Phone 218-62392<br />

woltering@ant.uni-bremen.de<br />

www.buchstaben-vertauschen.de<br />

www.ant.uni-bremen.de/courses/cc1/<br />

2

Preliminaries<br />

Master students:<br />

<strong>Channel</strong> <strong>Coding</strong> I and <strong>Channel</strong> <strong>Coding</strong> II are elective courses<br />

Written examination (alternatively oral exam with written part) at the end of each semester<br />

Diplom students<br />

Channel coding as a compulsory elective subject, oral exam with a written part<br />

Optionally three hours per week (exam after 1 semester)<br />

or six hours per week (exam after 2 semesters)<br />

Documents<br />

Script Kanalcodierung I/II by Kühn & Wübben (in German), these slides and the exercise tasks<br />

are available online at http://www.ant.uni-bremen.de/courses/cc1/<br />

Exercises<br />

Take place on Wednesday, 14:00-16:00 in Room N2420<br />

Dates will be arranged in the lesson and announced by mailing list<br />

cc_at_ant.uni-bremen.de<br />

Contain theoretical analysis and tasks to be solved in Matlab<br />

3

Selected Literature<br />

Books on <strong>Channel</strong> <strong>Coding</strong><br />

A. Neubauer, J. Freudenberger, V. Kühn: <strong>Coding</strong> Theory: Algorithms, Architectures and<br />

Applications, Wiley<br />

R.E. Blahut, Algebraic Codes for Data Transmission, Cambridge University Press, 2003<br />

W.C. Huffman, V. Pless, Fundamentals of Error-Correcting Codes, Cambridge, 2003<br />

S. Lin, D.J. Costello, Error Control <strong>Coding</strong>: Fundamentals and Applications, Prentice-Hall, 2004<br />

J.C. Moreira, P.G. Farrell: Essentials of Error-Control <strong>Coding</strong>, Wiley, 2006<br />

R.H. Morelos-Zaragoza: The Art of Error Correcting <strong>Coding</strong>, Wiley, 2nd Edition, 2006<br />

S.B. Wicker, Error Control Systems for Digital <strong>Communications</strong> and Storage, Prentice-Hall, 1995<br />

B. Friedrichs, Kanalcodierung, Springer Verlag, 1996<br />

M. Bossert, Kanalcodierung, B.G. Teubner Verlag, 1998<br />

J. Huber, Trelliscodierung, Springer Verlag, 1992<br />

Books on Information Theory<br />

T. M. Cover, J. A. Thomas, Elements of Information Theory, Wiley, 1991<br />

R.G. Gallager, Information Theory and Reliable Communication, Wiley, 1968<br />

R. McEliece: The Theory of Information and <strong>Coding</strong>, Cambridge, 2004<br />

R. Johannesson, Informationstheorie - Grundlagen der (Tele-)Kommunikation, Addison-Wesley,<br />

1992<br />

4

Selected Literature<br />

General Books on Digital Communication<br />

J. Proakis, Digital <strong>Communications</strong>, McGraw-Hill, 2001<br />

J.B. Anderson, Digital Transmission <strong>Engineering</strong>, IEEE Press, 2005<br />

B. Sklar, Digital <strong>Communications</strong>, Fundamentals and Applications, Prentice-Hall, 2003<br />

contains 3 chapters about channel coding<br />

V. Kühn, Wireless communications over MIMO <strong>Channel</strong>s: Applications to CDMA and Multiple<br />

Antenna Systems, John Wiley & Sons, 2006<br />

contains parts of the script<br />

K.D. Kammeyer, Nachrichtenübertragung, B.G. Teubner, 4th Edition, 2008<br />

K.D. Kammeyer, V. Kühn, MATLAB in der Nachrichtentechnik, Schlembach, 2001<br />

chapters about channel coding and exercises<br />

Internet Resources<br />

Lecture notes, Online books, technical publications, introductions to Matlab, …<br />

http://www.ant.uni-bremen.de/courses/cc1/<br />

• Further material (within university net): http://www.ant.uni-bremen.de/misc/ccscripts/<br />

Google, Yahoo, …<br />

5

Claude Elwood Shannon: A mathematical theory of<br />

communication, Bell System Technical Journal, Vol. 27,<br />

pp. 379-423 and 623-656, 1948<br />

“The fundamental problem of communication is<br />

that of reproducing at one point either exactly or<br />

approximately a message selected at another<br />

point.”<br />

To solve that task, he created a new branch of applied mathematics:<br />

information theory and/or coding theory<br />

Examples for source – transmission – sink combinations<br />

Mobile telephone – wireless channel – base station<br />

Modem – twisted pair telephone channel – internet provider<br />

…<br />

6

Outline <strong>Channel</strong> <strong>Coding</strong> I<br />

1. Introduction<br />

Declarations and definitions, general principle of channel coding<br />

Structure of digital communication systems<br />

2. Introduction to Information Theory<br />

Probabilities, measure of information<br />

Shannon's channel capacity for different channels<br />

3. Linear Block Codes<br />

Properties of block codes and general decoding principles<br />

Bounds on error rate performance<br />

Representation of block codes with generator and parity check matrices<br />

Cyclic block codes (CRC-Code, Reed-Solomon and BCH codes)<br />

4. Convolutional Codes<br />

Structure, algebraic and graphical presentation<br />

Distance properties and error rate performance<br />

Optimal decoding with Viterbi algorithm<br />

7

Outline <strong>Channel</strong> <strong>Coding</strong> II<br />

1. Concatenated Codes<br />

Serial Concatenation & Parallel Concatenation (Turbo Codes)<br />

Iterative Decoding with Soft-In/Soft-Out decoding algorithms<br />

EXIT-Charts, Bitinterleaved Coded Modulation<br />

Low Density Parity Check Codes (LDPC)<br />

2. Trelliscoded Modulation (TCM)<br />

Motivation by information theory<br />

TCM of Ungerböck, pragmatic approach by Viterbi, Multilevel codes<br />

Distance properties and error rate performance<br />

Applications (data transmission via modems)<br />

3. Adaptive Error Control<br />

Automatic Repeat Request (ARQ)<br />

Performance for perfect and disturbed feedback channel<br />

Hybrid FEC/ARQ schemes<br />

8

Chapter 1. Introduction<br />

Basics about <strong>Channel</strong> <strong>Coding</strong><br />

General declarations and different areas of coding<br />

Basic idea and applications of channel coding<br />

Structure of digital communication systems<br />

Discrete <strong>Channel</strong><br />

Statistical description<br />

Base band and band pass transmission<br />

AWGN and fading channel<br />

Discrete Memoryless <strong>Channel</strong> (DMC)<br />

Binary Symmetric <strong>Channel</strong> (BSC and BSEC)<br />

Examples for simple Error Correction Codes<br />

Single Parity Check (SPC), Repetition Code, Hamming Code<br />

9

Important terms:<br />

General Declarations<br />

Message: amount of data or symbols transmitted by the source<br />

Information: part of the message that is new for the sink<br />

Redundancy: difference of message and information, which is unknown to the sink<br />

Message = Information + Redundancy<br />

Irrelevance: information that is not of importance to the sink<br />

Equivocation: information not stemming from the source of interest<br />

A message is also transmitted in a distinct amount of time<br />

Message flow: amount of message per time<br />

Information flow: amount of information per time<br />

Transinformation: amount of error-free information per time transmitted from the source to the sink<br />

10

Three Main Areas of <strong>Coding</strong><br />

Source coding (entropy coding)<br />

Compression of the information stream so that no significant information is lost, enabling a<br />

perfect reconstruction of the information<br />

Thus, by eliminating superfluous and uncontrolled redundancy the load on the transmission<br />

system is reduced (e.g. JPEG, MPEG, ZIP)<br />

Entropy defines the minimum amount of necessary information<br />

<strong>Channel</strong> coding (error-control coding)<br />

Encoder adds redundancy (additional bits) to information bits in order to detect or even<br />

correct transmission errors at the receiver<br />

Cryptography<br />

The information is encrypted to make it unreadable to unauthorized persons or to avoid<br />

falsification or deception during transmission<br />

Decryption is only possible by knowing the encryption key<br />

Claude E. Shannon (1948): A Mathematical Theory of Communication<br />

http://www.ant.uni-bremen.de/misc/ccscripts/<br />

11

Basic Principles of <strong>Channel</strong> <strong>Coding</strong><br />

Forward Error Correction (FEC)<br />

Added redundancy is used to correct transmission errors at the receiver<br />

<strong>Channel</strong> condition affects the quality of data transmission<br />

errors remain after decoding if the error-correction capability of the code is exceeded<br />

No feedback channel is required<br />

varying reliability, constant bit throughput<br />

Automatic Repeat Request (ARQ)<br />

Small amount of redundancy is added to detect transmission errors<br />

retransmission of data in case of a detected error<br />

feedback channel is required<br />

<strong>Channel</strong> condition affects the throughput<br />

constant reliability, but varying throughput<br />

Hybrid FEC/ARQ: Combination to use advantages of both schemes<br />

Chapter 3 of<br />

<strong>Channel</strong> <strong>Coding</strong> II<br />

12

Basic Idea of <strong>Channel</strong> <strong>Coding</strong><br />

Sequence of information symbols $u_i$ is grouped into blocks of length $k$; each block is encoded separately<br />

$$\underbrace{u_0\, u_1 \cdots u_{k-1}}_{\text{block}}\ \underbrace{u_k\, u_{k+1} \cdots u_{2k-1}}_{\text{block}}\ \underbrace{u_{2k}\, u_{2k+1} \cdots u_{3k-1}}_{\text{block}} \cdots$$

[Block diagram: $\mathbf{u}$ (length $k$) → channel encoder → $\mathbf{x}$ (length $n$)]<br />

Information vector $\mathbf{u}$ of length $k$: $\mathbf{u} = [u_0\, u_1 \dots u_{k-1}]$<br />

Elements $u_i$ stem from a finite alphabet of size $q$: $u_i \in \{0, 1, 2, \dots, q-1\}$ (binary code for $q = 2$)<br />

<strong>Channel</strong> <strong>Coding</strong><br />

Create a code vector $\mathbf{x} = [x_0\, x_1 \dots x_{n-1}]$ of length $n > k$ with elements $x_i \in \{0, 1, 2, \dots, q-1\}$ for the information vector $\mathbf{u}$ by a bijective function (i.e. $\mathbf{u} \leftrightarrow \mathbf{x}$)<br />

The code $\Gamma$ is the set of all code words $\mathbf{x}$, i.e. $\mathbf{x} \in \Gamma$ for all possible $\mathbf{u}$<br />

$q^k$ different information vectors $\mathbf{u}$ and $q^n$ different vectors $\mathbf{x}$ exist<br />

Due to the bijective mapping from $\mathbf{u}$ to $\mathbf{x}$, only $q^k < q^n$ vectors $\mathbf{x}$ are used<br />

• Code is a subset<br />

• Coder is the mapper<br />

Challenge: Find a k-dimensional subset of an n-dimensional space such that the minimum distance between the elements of the subset is maximized → this affects the probability of detecting errors<br />

Code rate $R_c$ equals the ratio of the lengths of the uncoded and the coded sequence and describes the required expansion of the signal bandwidth<br />

$$R_c = \frac{k}{n}$$

13

Visualizing Distance Properties with Code Cube<br />

$q = 2$, $n = 3$, $\mathbf{x} = [x_0\, x_1\, x_2]$; each corner of the cube (000, 001, ..., 111) is marked either as a code word or as no code word<br />

[Figure: three code cubes, one per row of the following table]<br />

| $d_{\min}$ | Code rate $R_c$ | Error correction | Error detection |
|---|---|---|---|
| 1 | 1 | none | none |
| 2 | 2/3 | none | single error |
| 3 | 1/3 | single error | 2 errors |

14
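The relation between the chosen subset of cube corners, the minimum distance and the code rate can be checked numerically. The following is a minimal sketch, not part of the original slides; the concrete code word sets (all eight corners, the four even-parity corners, and the two corners 000 and 111) are assumptions chosen to match the three cubes, and all function names are hypothetical.

```python
import math
from itertools import combinations


def hamming_distance(a, b):
    """Number of positions in which two equal-length words differ."""
    return sum(x != y for x, y in zip(a, b))


def minimum_distance(code):
    """Smallest Hamming distance between any two distinct code words."""
    return min(hamming_distance(a, b) for a, b in combinations(code, 2))


def code_rate(code, q=2):
    """R_c = k / n with k = log_q(number of code words)."""
    k = math.log(len(code), q) if q != 2 else math.log2(len(code))
    return k / len(code[0])


def capabilities(code):
    """d_min together with guaranteed detection and correction capability."""
    d_min = minimum_distance(code)
    return {
        "d_min": d_min,
        "detectable": d_min - 1,           # errors always detected
        "correctable": (d_min - 1) // 2,   # errors always corrected
    }


# Assumed code word sets for the three cubes (q = 2, n = 3).
uncoded = ["000", "001", "010", "011", "100", "101", "110", "111"]
single_parity = ["000", "011", "101", "110"]
repetition = ["000", "111"]

for name, code in [("uncoded", uncoded),
                   ("single parity check", single_parity),
                   ("repetition", repetition)]:
    print(name, capabilities(code), "R_c =", round(code_rate(code), 3))
```

Here "detectable" and "correctable" follow the usual bounds $d_{\min} - 1$ and $\lfloor (d_{\min} - 1)/2 \rfloor$.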

Applications of <strong>Channel</strong> <strong>Coding</strong><br />

Importance of channel coding increased with digital communications<br />

First use for deep space communications:<br />

AWGN channel, no bandwidth restrictions, only few receivers (costs negligible)<br />

Examples: Viking (Mars), Voyager (Jupiter, Saturn), Galileo (Jupiter), ...<br />

Digital mass storage<br />

Compact Disc (CD), Digital Versatile Disc (DVD), Digital Audio Tape (DAT), hard disc, …<br />

Digital wireless communications:<br />

GSM, UMTS, LTE, LTE-A, WLAN (Hiperlan, IEEE 802.11), ...<br />

Digital wired communications<br />

Modem transmission (V.90, ...), ISDN, Digital Subscriber Line (DSL), …<br />

Digital broadcasting<br />

Digital Audio Broadcasting (DAB), Digital Video Broadcasting (DVB)<br />

Depending on the system (transmission parameters) different channel coding schemes<br />

are used<br />

15

Structure of Digital Transmission System<br />

[Block diagram: analog source → source encoder → $\mathbf{u}$; analog source and source encoder together form the digital source]<br />

• Source transmits signal d(t) (e.g. analog speech signal)<br />

• Source coding samples, quantizes and compresses analog signal<br />

• Digital source: comprises analog source and source coding, delivers digital data vector $\mathbf{u} = [u_0\, u_1 \dots u_{k-1}]$ of length $k$<br />

16

Structure of Digital Transmission System<br />

[Block diagram: digital source (analog source → source encoder) → $\mathbf{u}$ → channel encoder → $\mathbf{x}$]<br />

• <strong>Channel</strong> encoder adds redundancy to $\mathbf{u}$ so that errors in $\mathbf{x} = [x_0\, x_1 \dots x_{n-1}]$ can be detected or even corrected at the receiver<br />

• <strong>Channel</strong> encoder may consist of several constituent codes<br />

• Code rate: R c = k / n<br />

17

Structure of Digital Transmission System<br />

[Block diagram, transmitter side: digital source (analog source → source encoder)]<br />

• Modulator maps discrete vector x onto analog waveform<br />

and moves it into the transmission band<br />

• Physical channel represents transmission medium<br />

– Multipath propagation → intersymbol interference (ISI)<br />

– Time-varying fading, i.e. deep fades in the complex envelope<br />

– Additive noise<br />

• Demodulator: Moves signal back into baseband and<br />

performs lowpass filtering, sampling, quantization<br />

[Block diagram: $\mathbf{u}$ → channel encoder → $\mathbf{x}$ → modulator → physical channel → demodulator → $\mathbf{y}$; modulator, physical channel and demodulator form the discrete channel]<br />

• Discrete channel: comprises analog part of<br />

modulator, physical channel and analog part<br />

of demodulator<br />

• $\mathcal{A}_\text{in}$: input alphabet of discrete channel<br />

• $\mathcal{A}_\text{out}$: output alphabet of discrete channel<br />

18

Structure of Digital Transmission System<br />

[Block diagram, transmitter side: digital source (analog source → source encoder)]<br />

<strong>Channel</strong> decoder:<br />

• Estimation of u on basis of received vector y<br />

• $\mathbf{y}$ need not consist of hard-quantized values {0,1}<br />

• Since encoder may consist of several parts,<br />

decoder may also consist of several modules<br />

[Block diagram: $\mathbf{u}$ → channel encoder → $\mathbf{x}$ → discrete channel (modulator, physical channel, demodulator) → $\mathbf{y}$ → channel decoder → $\hat{\mathbf{u}}$]<br />

19

Structure of Digital Transmission System<br />

[Block diagram: digital source (analog source → source encoder) → $\mathbf{u}$ → channel encoder → $\mathbf{x}$ → discrete channel (modulator, physical channel, demodulator) → $\mathbf{y}$ → channel decoder → $\hat{\mathbf{u}}$ → source decoder → sink; a feedback channel connects the receiver side with the transmitter side]<br />

Citation of Jim Massey:<br />

“The purpose of the modulation system is to create a good discrete channel from the<br />

modulator input to the demodulator output, and the purpose of the coding system is to<br />

transmit the information bits reliably through this discrete channel at the highest practicable<br />

rate.”<br />

20

Overview of Data Transmission System<br />

[Block diagram: digital source (source → source encoder) → channel encoder → modulator → physical channel → demodulator → channel decoder → digital sink (source decoder → sink)]<br />

Source transmits signals (e.g. speech)<br />

Source encoder samples, quantizes and<br />

compresses analog signal<br />

<strong>Channel</strong> encoder adds redundancy to<br />

enable error detection or correction @ Rx<br />

Modulator maps discrete symbols onto<br />

analog waveform and moves it into the<br />

transmission frequency band<br />

[Diagram labels: digital transmission between channel encoder and channel decoder; analogue transmission between modulator and demodulator; modulator, physical channel and demodulator form the discrete channel]<br />

Physical channel represents transmission<br />

medium: multipath propagation, time varying<br />

fading, additive noise, …<br />

Demodulator: moves signal back into baseband<br />

and performs lowpass filtering, sampling,<br />

quantization<br />

<strong>Channel</strong> decoder: estimation of the info sequence from the received code sequence → error correction<br />

Source decoder: Reconstruction of analog signal<br />

21

Input and Output Alphabet of Discrete <strong>Channel</strong><br />

[Block diagram: $x_i \in \mathcal{A}_\text{in}$ → discrete channel → $y_i \in \mathcal{A}_\text{out}$]<br />

$\mathbf{x} = [x_0\, x_1 \dots x_{n-1}]$, $\mathbf{y} = [y_0\, y_1 \dots y_{n-1}]$<br />

Discrete channel comprises the analog parts of modulator and demodulator as well as the physical transmission medium<br />

Discrete input alphabet $\mathcal{A}_\text{in} = \{X_0, \dots, X_{|\mathcal{A}_\text{in}|-1}\}$, $x_i = X_\nu$<br />

Discrete output alphabet $\mathcal{A}_\text{out} = \{Y_0, \dots, Y_{|\mathcal{A}_\text{out}|-1}\}$, $y_i = Y_\mu$<br />

Common restrictions in this lecture<br />

Binary input alphabet (BPSK): $\mathcal{A}_\text{in} = \{-1, +1\}$ (corresponds to bits {1, 0}); $\Pr\{X_\nu\} = 0.5$<br />

Output alphabet depends on quantization (q-bit soft decision)<br />

• No quantization ($q \to \infty$): $\mathcal{A}_\text{out} = \mathbb{R}$<br />

• Hard decision (1-bit): $\mathcal{A}_\text{out} = \mathcal{A}_\text{in}$ → reliability information is lost<br />

Cardinality $|\mathcal{A}|$: number of elements<br />

22

Stochastic Description of Discrete <strong>Channel</strong><br />

Properties of the discrete channel are described by the transition probabilities (conditional probabilities)<br />

$$\Pr\{y_i = Y_\mu \mid x_i = X_\nu\} = \Pr\{Y_\mu \mid X_\nu\}$$

i.e. the probability that the symbol $y_i = Y_\mu$ is received when the symbol $x_i = X_\nu$ was transmitted<br />

Description by transition diagram<br />

[Transition diagram: inputs $X_0, X_1, \dots, X_{|\mathcal{A}_\text{in}|-1}$ on the left, outputs $Y_0, Y_1, \dots, Y_{|\mathcal{A}_\text{out}|-1}$ on the right, edges labeled with transition probabilities such as $\Pr\{Y_0 \mid X_0\}$ and $\Pr\{Y_1 \mid X_0\}$]<br />

General relations (restriction to discrete output alphabet):<br />

Probabilities: $0 \le \Pr\{X_\nu\}, \Pr\{Y_\mu\} \le 1$; $\Pr\{X_\nu\}$ is the a-priori probability<br />

Joint probability of event $(X_\nu, Y_\mu)$: $\Pr\{X_\nu, Y_\mu\} = \Pr\{Y_\mu \mid X_\nu\} \cdot \Pr\{X_\nu\} = \Pr\{X_\nu \mid Y_\mu\} \cdot \Pr\{Y_\mu\}$<br />

Transition probabilities (conditional probabilities): $\Pr\{Y_\mu \mid X_\nu\}$<br />

23

Stochastic Description of Discrete <strong>Channel</strong><br />

General probability relations<br />

Completeness: $\sum_i \Pr\{a_i\} = 1$ and $\sum_i \sum_j \Pr\{a_i, b_j\} = 1$<br />

Marginal probability: $\Pr\{a_i\} = \sum_j \Pr\{a_i, b_j\}$<br />

Applied to the discrete channel:<br />

$$\sum_{X_\nu \in \mathcal{A}_\text{in}} \Pr\{X_\nu\} = \sum_{Y_\mu \in \mathcal{A}_\text{out}} \Pr\{Y_\mu\} = 1$$

$$\Pr\{Y_\mu\} = \sum_{X_\nu \in \mathcal{A}_\text{in}} \Pr\{X_\nu, Y_\mu\}, \qquad \Pr\{X_\nu\} = \sum_{Y_\mu \in \mathcal{A}_\text{out}} \Pr\{X_\nu, Y_\mu\}$$

$$\sum_{X_\nu \in \mathcal{A}_\text{in}} \sum_{Y_\mu \in \mathcal{A}_\text{out}} \Pr\{X_\nu, Y_\mu\} = 1$$

A-posteriori probabilities ("after the observation"): probability that $X_\nu$ was transmitted when $Y_\mu$ is received, i.e. the information about $x$ after observing $y$<br />

$$\Pr\{X_\nu \mid Y_\mu\} = \frac{\Pr\{X_\nu, Y_\mu\}}{\Pr\{Y_\mu\}}$$

For statistically independent elements ($y$ gives no information about $x$)<br />

$$\Pr\{X_\nu, Y_\mu\} = \Pr\{X_\nu\} \cdot \Pr\{Y_\mu\}$$

$$\Pr\{X_\nu \mid Y_\mu\} = \frac{\Pr\{X_\nu, Y_\mu\}}{\Pr\{Y_\mu\}} = \frac{\Pr\{X_\nu\} \cdot \Pr\{Y_\mu\}}{\Pr\{Y_\mu\}} = \Pr\{X_\nu\}$$

24

Bayes Rule<br />

Conditional probability of the event $b$ given the occurrence of event $a$<br />

$$\Pr\{b \mid a\} = \frac{\Pr\{a, b\}}{\Pr\{a\}} = \Pr\{a \mid b\} \cdot \frac{\Pr\{b\}}{\Pr\{a\}}$$

Relation of a-posteriori probabilities $\Pr\{X_\nu \mid Y_\mu\}$ and transition probabilities $\Pr\{Y_\mu \mid X_\nu\}$<br />

$$\Pr\{X_\nu \mid Y_\mu\} = \Pr\{Y_\mu \mid X_\nu\} \cdot \frac{\Pr\{X_\nu\}}{\Pr\{Y_\mu\}}$$

Attention<br />

$$\sum_{X_\nu \in \mathcal{A}_\text{in}} \Pr\{X_\nu \mid Y_\mu\} = \sum_{X_\nu \in \mathcal{A}_\text{in}} \frac{\Pr\{X_\nu, Y_\mu\}}{\Pr\{Y_\mu\}} = \frac{\Pr\{Y_\mu\}}{\Pr\{Y_\mu\}} = 1$$

"for each receive symbol $Y_\mu$, a symbol $X_\nu \in \mathcal{A}_\text{in}$ was transmitted with probability one"<br />

and<br />

$$\sum_{Y_\mu \in \mathcal{A}_\text{out}} \Pr\{Y_\mu \mid X_\nu\} = 1$$

"for each transmitted $X_\nu \in \mathcal{A}_\text{in}$, a symbol of $\mathcal{A}_\text{out}$ is received with probability one"<br />

but in general<br />

$$\sum_{Y_\mu \in \mathcal{A}_\text{out}} \Pr\{X_\nu \mid Y_\mu\} = \sum_{Y_\mu \in \mathcal{A}_\text{out}} \frac{\Pr\{X_\nu, Y_\mu\}}{\Pr\{Y_\mu\}} \ne 1$$

25

Example<br />

Discrete channel with alphabets $\mathcal{A}_\text{in} = \mathcal{A}_\text{out} = \{0, 1\}$<br />

Signal values $X_0 = Y_0 = 0$ and $X_1 = Y_1 = 1$ with probabilities $\Pr\{X_0\} = \Pr\{X_1\} = 0.5$<br />

Transition probabilities $\Pr\{Y_0 \mid X_0\} = 0.9$ and $\Pr\{Y_1 \mid X_1\} = 0.8$<br />

Due to completeness, the remaining transition probabilities are $\Pr\{Y_1 \mid X_0\} = 0.1$ and $\Pr\{Y_0 \mid X_1\} = 0.2$<br />

[Transition diagram: $X_0 \to Y_0$ with 0.9, $X_0 \to Y_1$ with 0.1, $X_1 \to Y_0$ with 0.2, $X_1 \to Y_1$ with 0.8]<br />

Transition probabilities $\Pr\{Y_\mu \mid X_\nu\}$:

| $\Pr\{Y \mid X\}$ | $Y_0$ | $Y_1$ | $\Sigma$ |
|---|---|---|---|
| $X_0$ | 0.9 | 0.1 | 1 |
| $X_1$ | 0.2 | 0.8 | 1 |
| $\Sigma$ | 1.1 | 0.9 | |

Joint probabilities: $\Pr\{Y_\mu, X_\nu\} = \Pr\{Y_\mu \mid X_\nu\} \cdot \Pr\{X_\nu\}$

| $\Pr\{Y, X\}$ | $Y_0$ | $Y_1$ | $\Sigma$ |
|---|---|---|---|
| $X_0$ | 0.45 | 0.05 | 0.5 |
| $X_1$ | 0.1 | 0.4 | 0.5 |
| $\Sigma$ | 0.55 | 0.45 | 1.0 |

A-posteriori probabilities: $\Pr\{X_\nu \mid Y_\mu\} = \Pr\{Y_\mu, X_\nu\} / \Pr\{Y_\mu\}$

| $\Pr\{X \mid Y\}$ | $Y_0$ | $Y_1$ |
|---|---|---|
| $X_0$ | 0.818 | 0.111 |
| $X_1$ | 0.182 | 0.889 |
| $\Sigma$ | 1 | 1 |

26<br />
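The numbers of this example can be reproduced with a few lines of code. The following is a minimal sketch, not part of the original slides; the dictionary layout and the function names are hypothetical choices for illustration.

```python
# A-priori probabilities Pr{X} and transition probabilities Pr{Y | X}
# taken from the example above.
prior = {"X0": 0.5, "X1": 0.5}
transition = {
    "X0": {"Y0": 0.9, "Y1": 0.1},
    "X1": {"Y0": 0.2, "Y1": 0.8},
}


def joint_probabilities(prior, transition):
    """Pr{X, Y} = Pr{Y | X} * Pr{X} for every pair (X, Y)."""
    return {
        (x, y): transition[x][y] * prior[x]
        for x in prior
        for y in transition[x]
    }


def output_probabilities(joint):
    """Marginal Pr{Y} = sum over X of Pr{X, Y}."""
    marginal = {}
    for (_, y), p in joint.items():
        marginal[y] = marginal.get(y, 0.0) + p
    return marginal


def posterior_probabilities(joint, marginal):
    """A-posteriori Pr{X | Y} = Pr{X, Y} / Pr{Y} (Bayes rule)."""
    return {(x, y): p / marginal[y] for (x, y), p in joint.items()}


joint = joint_probabilities(prior, transition)
marginal = output_probabilities(joint)
posterior = posterior_probabilities(joint, marginal)

for (x, y), p in sorted(posterior.items()):
    print(f"Pr{{{x} | {y}}} = {p:.3f}")
```

Summing the a-posteriori probabilities over $X$ for a fixed $Y$ gives one, whereas summing them over $Y$ for a fixed $X$ generally does not.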


Continuous Output Alphabet<br />

Continuous output alphabet, if no quantization takes place at the receiver<br />

not practicable, because not realizable on digital systems<br />

but interesting from an information-theoretic point of view<br />

Discrete probabilities $\Pr\{Y_\mu\}$ become a probability density function $p_y(\vartheta)$<br />

Other relations are still valid<br />

Examples:<br />

$$\Pr\{X_\nu\} = \int_{\mathcal{A}_\text{out}} p_y(\vartheta, X_\nu)\, d\vartheta, \qquad \int_{\mathcal{A}_\text{out}} p_y(\vartheta \mid X_\nu)\, d\vartheta = 1$$

$$\Pr\{Y_\mu\} = \int_{Y_\mu^-}^{Y_\mu^+} p_y(\vartheta)\, d\vartheta$$

with quantization borders $Y_\mu^-$, $Y_\mu^+$ for $Y_\mu$<br />

27

Baseband Transmission<br />

[Block diagram: $x_i$ → transmit filter $g_T(t)$ → $x(t)$ → channel, additive noise $n(t)$ → receive filter $g_R(t)$ → sampling at $iT_s$ → $y_i$]<br />

Time-continuous, band-limited signal $x(t)$<br />

$$x(t) = \sum_i x_i \cdot g_T(t - iT_s)$$

Average energy of each (real) transmit symbol: $E_s$; average power per period: $\sigma_X^2 = E_s / T_s$<br />

Noise with spectral density (of real noise) $\Phi_{NN}(f) = N_0/2$; power $\sigma_N^2 = 2B \cdot N_0/2 = N_0 / (2T_s)$<br />

Sampling: $f_A = 1/T_s = 2B$<br />

Signal to Noise Ratio (after matched filtering)<br />

$$S/N = \frac{\sigma_X^2}{\sigma_N^2} = \frac{2B \cdot E_s}{2B \cdot N_0/2} = \frac{E_s / T_s}{N_0 / (2T_s)} = \frac{E_s}{N_0/2}$$

[Figure: power spectral densities $\Phi_{XX}(f)$ and $\Phi_{NN}(f)$ over $f$, bandwidth $2B$]<br />

28

Bandpass Transmission<br />

[Block diagram: $x_i$ → $g_T(t)$ → $x(t)$ → multiplication with $\sqrt{2} \cdot e^{j\omega_0 t}$ → Re{·} → $x_\text{BP}(t)$ → channel, additive noise $n_\text{BP}(t)$ → $y_\text{BP}(t)$ → analytic signal → multiplication with $\frac{1}{\sqrt{2}} \cdot e^{-j\omega_0 t}$ → $g_R(t)$ → sampling → $y_i$]<br />

Transmit the real part of the complex signal shifted to a carrier frequency $f_0$<br />

$$x_\text{BP}(t) = \sqrt{2} \cdot \text{Re}\{x(t) \cdot e^{j\omega_0 t}\} = \sqrt{2} \cdot [x'(t) \cos(\omega_0 t) - x''(t) \sin(\omega_0 t)]$$

with $x(t) = x'(t) + j x''(t)$ the complex envelope of the data signal<br />

Re{·} results in two spectra around $-f_0$ and $f_0$<br />

Received signal<br />

$$y_\text{BP}(t) = x_\text{BP}(t) * h_\text{BP}(t) + n_\text{BP}(t)$$

Transformation to baseband<br />

Analytic signal obtained by Hilbert transformation $\mathcal{H}\{\cdot\}$ (suppresses $f < 0$, doubles spectrum for $f > 0$)<br />

$$y_\text{BP}^+(t) = y_\text{BP}(t) + j \cdot \mathcal{H}\{y_\text{BP}(t)\}$$

Low pass signal<br />

$$y(t) = \frac{1}{\sqrt{2}} \cdot y_\text{BP}^+(t) \cdot e^{-j\omega_0 t}$$

29

Equivalent Baseband Representation<br />

[Figure: power spectral densities $\Phi_{X_\text{BP} X_\text{BP}}(f)$ and $\Phi_{N_\text{BP} N_\text{BP}}(f)$ with bands of width $B$ around $-f_0$ and $f_0$, and $\Phi_{XX}(f)$, $\Phi_{NN}(f)$ of the equivalent baseband signals]<br />

Equivalent received signal, where $[\cdot]^+$ denotes the analytic signal<br />

$$y(t) = \frac{1}{\sqrt{2}} \cdot [x_\text{BP}(t) * h_\text{BP}(t) + n_\text{BP}(t)]^+ \cdot e^{-j\omega_0 t} = x(t) * h(t) + n(t)$$

Signal-to-noise ratio of the complex baseband signal<br />

$$S/N = \frac{\sigma_X^2}{\sigma_N^2} = \frac{E_s}{N_0}$$

In this semester, only real signals are investigated (BPSK)<br />

Signal is only given in the real part<br />

Only the real part of noise is of interest<br />

$$S/N = \frac{\sigma_{X'}^2}{\sigma_{N'}^2} = \frac{E_s}{N_0/2}$$

30

Probability Density Functions for AWGN <strong>Channel</strong><br />

[Block diagram: $y_i = x_i + n_i$]<br />

Noise density<br />

$$p_n(\vartheta) = \frac{1}{\sqrt{2\pi\sigma_N^2}} \cdot e^{-\frac{\vartheta^2}{2\sigma_N^2}}$$

Conditional density of the channel output<br />

$$p_{y|x}(y \mid X_\nu) = \frac{1}{\sqrt{2\pi\sigma_N^2}} \cdot \exp\left(-\frac{(y - X_\nu)^2}{2\sigma_N^2}\right)$$

[Figures: conditional densities $p_{y|x}(\vartheta \mid -1)$, $p_{y|x}(\vartheta \mid +1)$ and output density $p_y(\vartheta)$ over $-4 \le \vartheta \le 4$ for signal-to-noise ratios $E_s/N_0 = 2$ dB and $E_s/N_0 = 6$ dB]<br />

31

Error Probability of AWGN <strong>Channel</strong><br />

Signal-to-Noise-Ratio S/N<br />

$$\frac{S}{N} = \frac{E_s / T_s}{N_0 / (2T_s)} = \frac{E_s}{N_0/2}$$

$E_s$: symbol energy, $N_0/2$: noise density, transmit symbols $x_i \in \{-\sqrt{E_s/T_s}, +\sqrt{E_s/T_s}\}$<br />

Error Probability for antipodal modulation<br />

$$P_e = \Pr\{\text{error} \mid x_i = -1\} = \int_0^\infty p_{y|x}\left(\vartheta \mid -\sqrt{E_s/T_s}\right) d\vartheta = \int_0^\infty p_n\left(\vartheta + \sqrt{E_s/T_s}\right) d\vartheta$$

$$\phantom{P_e} = \Pr\{\text{error} \mid x_i = +1\} = \int_{-\infty}^0 p_{y|x}\left(\vartheta \mid +\sqrt{E_s/T_s}\right) d\vartheta = \int_{-\infty}^0 p_n\left(\vartheta - \sqrt{E_s/T_s}\right) d\vartheta$$

$$P_e = \frac{1}{\sqrt{2\pi\sigma_N^2}} \int_0^\infty \exp\left(-\frac{\left(\vartheta + \sqrt{E_s/T_s}\right)^2}{2\sigma_N^2}\right) d\vartheta$$

32
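The error probability for antipodal transmission over the AWGN channel can also be estimated by simulation. The following is a minimal Monte Carlo sketch, not part of the original slides; it assumes the normalization $E_s = 1$ and $T_s = 1$ (so that $\sigma_N^2 = N_0/2$), hard decision at threshold 0, and uses the closed-form result $P_e = \frac{1}{2}\,\text{erfc}(\sqrt{E_s/N_0})$ as reference. Function names, sample size and seed are hypothetical choices.

```python
import math
import random


def simulate_bpsk_awgn(es_n0_db, num_symbols=200_000, seed=1):
    """Estimate the symbol error rate of BPSK with hard decision over AWGN."""
    rng = random.Random(seed)
    es_n0 = 10 ** (es_n0_db / 10)
    # Assumption: Es = 1 and Ts = 1, hence sigma_N^2 = N0 / 2 = 1 / (2 Es/N0).
    sigma_n = math.sqrt(1.0 / (2.0 * es_n0))
    errors = 0
    for _ in range(num_symbols):
        x = rng.choice((-1.0, 1.0))          # equiprobable antipodal symbols
        y = x + rng.gauss(0.0, sigma_n)      # additive white Gaussian noise
        decision = 1.0 if y >= 0.0 else -1.0 # hard decision at threshold 0
        errors += decision != x
    return errors / num_symbols


def theoretical_error_probability(es_n0_db):
    """Closed form P_e = 0.5 * erfc(sqrt(Es/N0))."""
    es_n0 = 10 ** (es_n0_db / 10)
    return 0.5 * math.erfc(math.sqrt(es_n0))


for snr_db in (2.0, 6.0):
    print(snr_db, "dB:",
          simulate_bpsk_awgn(snr_db),
          theoretical_error_probability(snr_db))
```

For small error probabilities the number of simulated symbols has to grow accordingly, otherwise too few error events are observed for a reliable estimate.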

Error Probability of AWGN <strong>Channel</strong><br />

With $\sigma_N^2 = N_0 / (2T_s)$ the error probability becomes<br />

$$P_e = \frac{1}{\sqrt{2\pi\sigma_N^2}} \int_0^\infty \exp\left(-\frac{\left(\vartheta + \sqrt{E_s/T_s}\right)^2}{2\sigma_N^2}\right) d\vartheta = \frac{1}{\sqrt{\pi N_0/T_s}} \int_0^\infty \exp\left(-\frac{\left(\vartheta + \sqrt{E_s/T_s}\right)^2}{N_0/T_s}\right) d\vartheta$$

Using the substitution $\xi = \left(\vartheta + \sqrt{E_s/T_s}\right) / \sqrt{N_0/T_s}$ with $d\vartheta = \sqrt{N_0/T_s}\, d\xi$<br />

$$P_e = \frac{1}{\sqrt{\pi}} \int_{\sqrt{E_s/N_0}}^\infty e^{-\xi^2} d\xi = \frac{1}{2}\, \text{erfc}\left(\sqrt{\frac{E_s}{N_0}}\right)$$

or $\xi = \left(\vartheta + \sqrt{E_s/T_s}\right) / \sqrt{N_0/(2T_s)}$ with $d\vartheta = \sqrt{N_0/(2T_s)}\, d\xi$<br />

$$P_e = \frac{1}{\sqrt{2\pi}} \int_{\sqrt{2E_s/N_0}}^\infty e^{-\xi^2/2} d\xi = Q\left(\sqrt{\frac{2E_s}{N_0}}\right)$$

with error function complement<br />

$$\text{erfc}(\alpha) = 1 - \text{erf}(\alpha) = \frac{2}{\sqrt{\pi}} \int_\alpha^\infty e^{-\xi^2} d\xi$$

and with Q-function<br />

$$Q(\alpha) = \frac{1}{\sqrt{2\pi}} \int_\alpha^\infty e^{-\xi^2/2} d\xi = \frac{1}{2}\, \text{erfc}\left(\frac{\alpha}{\sqrt{2}}\right)$$

33
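Both closed-form expressions for $P_e$ describe the same quantity, which is easy to verify numerically. The following is a minimal sketch, not part of the original slides; it relies only on `math.erfc` from the Python standard library, and the helper names are hypothetical.

```python
import math


def q_function(alpha):
    """Q(alpha) = 0.5 * erfc(alpha / sqrt(2))."""
    return 0.5 * math.erfc(alpha / math.sqrt(2.0))


def error_probability_erfc(es_n0):
    """P_e = 0.5 * erfc(sqrt(Es/N0)), Es/N0 given as linear ratio."""
    return 0.5 * math.erfc(math.sqrt(es_n0))


def error_probability_q(es_n0):
    """P_e = Q(sqrt(2 Es/N0)), Es/N0 given as linear ratio."""
    return q_function(math.sqrt(2.0 * es_n0))


def db_to_linear(value_db):
    """Convert a ratio in dB to a linear ratio."""
    return 10 ** (value_db / 10)


for snr_db in (0, 2, 4, 6, 8, 10):
    es_n0 = db_to_linear(snr_db)
    print(f"{snr_db:2d} dB  erfc form: {error_probability_erfc(es_n0):.3e}"
          f"  Q form: {error_probability_q(es_n0):.3e}")
```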

Error Function and Error Function Complement<br />

Error Function<br />

$$\text{erf}(\alpha) = \frac{2}{\sqrt{\pi}} \int_0^\alpha e^{-\xi^2} d\xi$$

Error Function Complement<br />

$$\text{erfc}(\alpha) = 1 - \text{erf}(\alpha) = \frac{2}{\sqrt{\pi}} \int_\alpha^\infty e^{-\xi^2} d\xi$$

Limits<br />

$$-1 \le \text{erf}(\alpha) \le 1, \qquad 0 \le \text{erfc}(\alpha) \le 2$$

[Figure: $\text{erf}(\alpha)$ and $\text{erfc}(\alpha)$ plotted over $-3 \le \alpha \le 3$]<br />

34

Probability Density Function for Frequency Nonselective Rayleigh<br />

Fading <strong>Channel</strong><br />

Mobile communication channel is affected by fading (complex envelope of the receive signal varies in time) → magnitude $|\alpha|$ is Rayleigh distributed<br />

[Block diagram: $r_i = \alpha_i \cdot x_i$, $y_i = r_i + n_i$]<br />

$$p_{|\alpha|}(\xi) = \begin{cases} \dfrac{2\xi}{\sigma_s^2} \cdot \exp\left(-\dfrac{\xi^2}{\sigma_s^2}\right) & \text{for } \xi \ge 0 \\ 0 & \text{else} \end{cases}$$

[Figures: probability density functions of $|\alpha_i|$ (over 0 to 4), of $r_i$ and of $y_i$ (over $-4$ to 4)]<br />

35

Bit Error Rates for AWGN and Flat Rayleigh <strong>Channel</strong> (BPSK modulation)<br />

[Figure: bit error rate $P_b$ ($10^0$ down to $10^{-6}$) over $E_s/N_0$ in dB (0 to 30) for the AWGN and the Rayleigh channel, with an annotated gap of 17 dB between the two curves]<br />

AWGN channel:<br />

$$P_b = \frac{1}{2}\, \text{erfc}\left(\sqrt{E_s/N_0}\right) = Q\left(\sqrt{2 E_s/N_0}\right)$$

Flat Rayleigh fading channel:<br />

$$P_b = \frac{1}{2} \left(1 - \sqrt{\frac{E_s/N_0}{1 + E_s/N_0}}\right)$$

<strong>Channel</strong> coding for fading channels essential (time diversity)<br />

36
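The gap between the two curves can be quantified by solving both bit error rate expressions for the required $E_s/N_0$. The following is a minimal sketch, not part of the original slides; the target bit error rate of $10^{-3}$ is an assumption (the slide does not state where the annotated gap is read off), and the bisection helper is a hypothetical illustration.

```python
import math


def ber_awgn(es_n0):
    """BPSK over AWGN: P_b = 0.5 * erfc(sqrt(Es/N0)), linear Es/N0."""
    return 0.5 * math.erfc(math.sqrt(es_n0))


def ber_rayleigh(es_n0):
    """BPSK over flat Rayleigh fading: P_b = 0.5 * (1 - sqrt(g / (1 + g)))."""
    return 0.5 * (1.0 - math.sqrt(es_n0 / (1.0 + es_n0)))


def required_snr_db(ber_function, target_ber, low_db=-10.0, high_db=60.0):
    """Bisection on the SNR in dB; both BER curves decrease monotonically."""
    for _ in range(100):
        mid_db = 0.5 * (low_db + high_db)
        if ber_function(10 ** (mid_db / 10)) > target_ber:
            low_db = mid_db    # BER still too high, more SNR needed
        else:
            high_db = mid_db
    return 0.5 * (low_db + high_db)


target = 1e-3  # assumed target bit error rate
snr_awgn = required_snr_db(ber_awgn, target)
snr_rayleigh = required_snr_db(ber_rayleigh, target)
print(f"AWGN:     {snr_awgn:.2f} dB")
print(f"Rayleigh: {snr_rayleigh:.2f} dB")
print(f"Gap:      {snr_rayleigh - snr_awgn:.2f} dB")
```

Under this assumption the gap comes out at roughly 17 dB, and it keeps growing for lower target error rates because the Rayleigh curve decays only inversely with $E_s/N_0$.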

Rice Fading <strong>Channel</strong><br />

If a line-of-sight connection exists, the real part of $\alpha$ is non-central Gaussian distributed<br />

Rice factor $K$ determines the power ratio between the line-of-sight path and the scattered paths<br />

$K = 0$: Rayleigh fading channel<br />

$K \to \infty$: AWGN channel (no fading)<br />

[Block diagram: $\alpha_i = \sqrt{\frac{K}{1+K}} + \sqrt{\frac{1}{1+K}} \cdot \alpha_i'$, $y_i = \alpha_i \cdot x_i + n_i$]<br />

Coefficient $\alpha_i'$ has Rayleigh distributed magnitude with average power 1<br />

Relation between total average power and variance: $P = (1 + K) \cdot \sigma^2$<br />

Magnitude is Rician distributed<br />

$$p_{|\alpha|}(\xi) = \begin{cases} \dfrac{2\xi}{\sigma^2} \cdot \exp\left(-\dfrac{\xi^2}{\sigma^2} - K\right) \cdot I_0\left(2\xi \sqrt{\dfrac{K}{\sigma^2}}\right) & \text{for } \xi \ge 0 \\ 0 & \text{else} \end{cases}$$

37
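The role of the Rice factor K can be illustrated with a small simulation. This is a sketch under stated assumptions: the coefficient is modelled as a fixed line-of-sight part with power K/(1+K) plus a scattered complex Gaussian part with power 1/(1+K), so that the total average power is 1. The function names, sample count, and seed are illustrative choices.<br />

```python
import math
import random

def rice_coefficient(k_factor: float, rng: random.Random) -> complex:
    """Draw one fading coefficient: LOS part plus scaled scattered part."""
    # Scattered part alpha': complex Gaussian, variance 1/2 per dimension,
    # so its magnitude is Rayleigh distributed with average power 1.
    scattered = complex(rng.gauss(0.0, math.sqrt(0.5)), rng.gauss(0.0, math.sqrt(0.5)))
    los = math.sqrt(k_factor / (1.0 + k_factor))
    return los + math.sqrt(1.0 / (1.0 + k_factor)) * scattered

def average_power(k_factor: float, num: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of E{|alpha|^2} for a given Rice factor."""
    rng = random.Random(seed)
    return sum(abs(rice_coefficient(k_factor, rng)) ** 2 for _ in range(num)) / num

for k in (0.0, 1.0, 10.0):
    print(f"K = {k:4.1f}: estimated average power = {average_power(k):.3f}")
```

For K = 0 only the scattered part remains (Rayleigh fading); for very large K the coefficient approaches the constant 1, which corresponds to the AWGN channel without fading.<br />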

Discrete Memoryless <strong>Channel</strong> (DMC)<br />

Memoryless: $y_i$ depends on $x_i$ but not on $x_{i-\tau}$ for $\tau \ne 0$: $\Pr\{y_i \mid x_i\} = \Pr\{y_i = Y_\mu \mid x_i = X_\nu\}$<br />

Transition probabilities of a discrete memoryless channel (DMC)<br />

$\Pr\{\mathbf{y} \mid \mathbf{x}\} = \Pr\{y_0, y_1, \ldots, y_{n-1} \mid x_0, x_1, \ldots, x_{n-1}\} = \prod_{i=0}^{n-1} \Pr\{y_i \mid x_i\}$<br />

Probability that exactly m errors occur at specific positions in a sequence of length n:<br />

$\Pr\{m \text{ bits of } n \text{ incorrect}\} = P_e^m\,(1 - P_e)^{n-m}$ (specific positions)<br />

Probability that exactly m errors occur anywhere in a sequence of length n:<br />

$\Pr\{m \text{ errors in a sequence of length } n\} = \binom{n}{m}\, P_e^m\,(1 - P_e)^{n-m}$ (arbitrary positions)<br />

with $\binom{n}{m} = \dfrac{n!}{m!\,(n-m)!}$ giving the number of possibilities to choose m elements out of n different elements without regard to order (combinations)<br />
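The two error probabilities differ only by the binomial coefficient. A minimal sketch follows; the function names and the values n = 7 and $P_e$ = 0.1 are illustrative assumptions.<br />

```python
from math import comb

def prob_specific_positions(n: int, m: int, pe: float) -> float:
    """Probability of m errors at m given positions and no errors elsewhere."""
    return pe ** m * (1.0 - pe) ** (n - m)

def prob_any_positions(n: int, m: int, pe: float) -> float:
    """Probability of exactly m errors anywhere in a sequence of length n."""
    return comb(n, m) * prob_specific_positions(n, m, pe)

n, pe = 7, 0.1  # assumed example values
for m in range(n + 1):
    print(f"m = {m}: specific = {prob_specific_positions(n, m, pe):.3e}, "
          f"any = {prob_any_positions(n, m, pe):.3e}")
```

Summing the "any position" probabilities over m = 0 … n gives 1, since exactly one error count must occur.<br />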

Binary Symmetric <strong>Channel</strong> (BSC)<br />

Binary input with hard decision at the receiver results in an equivalent binary channel<br />

Symmetric: the transmission error probability $P_e$ is independent of the transmitted symbol<br />

$\Pr\{Y_0 \mid X_1\} = \Pr\{Y_1 \mid X_0\} = P_e = \frac{1}{2}\,\mathrm{erfc}\left(\sqrt{E_s/N_0}\right)$<br />

Probability that a sequence of length n is received correctly:<br />

$\Pr\{\mathbf{x} = \mathbf{y}\} = \Pr\{x_0 = y_0, x_1 = y_1, \ldots, x_{n-1} = y_{n-1}\} = \prod_{i=0}^{n-1} \Pr\{x_i = y_i\} = (1 - P_e)^n$<br />

Probability of an incorrect sequence (at least one error):<br />

$\Pr\{\mathbf{x} \ne \mathbf{y}\} = 1 - \Pr\{\mathbf{x} = \mathbf{y}\} = 1 - (1 - P_e)^n \approx n P_e$ for $n P_e \ll 1$<br />

BSC models BPSK transmission over AWGN with hard decision<br />

System equation $\mathbf{y} = \mathbf{x} \oplus \mathbf{e}$ (with modulo-2 sum and $e_i \in \{0,1\}$)<br />

[Figure: BSC transition diagram, $X_0 \to Y_0$ and $X_1 \to Y_1$ with probability $1 - P_e$, $X_0 \to Y_1$ and $X_1 \to Y_0$ with probability $P_e$; block diagrams with input $x_i$, disturbance $n_i$ or $e_i$, and output $y_i$]<br />
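The system equation and the sequence error probability can be sketched in a few lines. The function names below are hypothetical, and the block length and error probability in the usage lines are assumed example values.<br />

```python
import random

def bsc(x, pe: float, rng: random.Random):
    """Pass a bit sequence through a BSC: y_i = x_i XOR e_i, Pr{e_i = 1} = pe."""
    return [xi ^ (1 if rng.random() < pe else 0) for xi in x]

def prob_sequence_error(n: int, pe: float) -> float:
    """Probability of at least one error in a sequence of length n."""
    return 1.0 - (1.0 - pe) ** n

rng = random.Random(0)
print(bsc([0, 1, 1, 0, 1, 0, 0], 0.1, rng))

n, pe = 10, 1e-4  # assumed example values with n * pe much smaller than 1
print(f"exact: {prob_sequence_error(n, pe):.6e}   approximation n*Pe: {n * pe:.6e}")
```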

Binary Symmetric Erasure <strong>Channel</strong> (BSEC)<br />

Instead of making wrong hard decisions with high probability, it is often favorable to erase unreliable bits<br />

In order to describe “erased” symbols, a third output symbol is required<br />

For BPSK we use zero, i.e. $\mathcal{A}_{\text{out}} = \{-1, 0, +1\}$<br />

$P_e$ denotes the probability of a wrong decision<br />

$P_q$ denotes the probability of an erasure<br />

$\Pr\{y \mid x\} = \begin{cases} 1 - P_e - P_q & \text{for } y = x \\ P_q & \text{for } y = \text{?} \\ P_e & \text{for } y \ne x \end{cases}$<br />

[Transmission diagram: $X_0 \to Y_0$ and $X_1 \to Y_1$ with probability $1 - P_e - P_q$; $X_0 \to Y_2$ and $X_1 \to Y_2$ (erasure) with probability $P_q$; $X_0 \to Y_1$ and $X_1 \to Y_0$ with probability $P_e$]<br />

Binary Erasure <strong>Channel</strong> (BEC): $P_e = 0$<br />

[Transmission diagram: $X_0 \to Y_0$ and $X_1 \to Y_1$ with probability $1 - P_q$; $X_0 \to Y_2$ and $X_1 \to Y_2$ (erasure) with probability $P_q$]<br />
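The transition probabilities of the BSEC can be written as a small function together with a sampler. This is only a sketch: bits are represented as 0 and 1 and the erasure as the string "?", which is a representation chosen here rather than the BPSK alphabet used on the slide; all names are hypothetical.<br />

```python
import random

ERASURE = "?"  # assumed marker for the third output symbol

def bsec_transition(y, x: int, pe: float, pq: float) -> float:
    """Pr{y | x} for binary input x and output y in {0, 1, ERASURE}."""
    if y == ERASURE:
        return pq
    return 1.0 - pe - pq if y == x else pe

def bsec(x, pe: float, pq: float, rng: random.Random):
    """Send a bit sequence through the BSEC; setting pe = 0 gives the BEC."""
    out = []
    for xi in x:
        r = rng.random()
        if r < pq:
            out.append(ERASURE)   # erased symbol
        elif r < pq + pe:
            out.append(1 - xi)    # wrong decision
        else:
            out.append(xi)        # correct decision
    return out

rng = random.Random(0)
print(bsec([0, 1, 1, 0, 1, 0, 0, 1], pe=0.05, pq=0.2, rng=rng))
```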

Example: Single Parity Check Code (SPC)<br />

Code word $\mathbf{x} = [x_0\ x_1\ \ldots\ x_{n-1}]$ contains the information word $\mathbf{u} = [u_0\ u_1\ \ldots\ u_{k-1}]$ and one additional parity bit p (i.e., n = k+1)<br />

$p = u_0 \oplus \cdots \oplus u_{k-1} = \bigoplus_{i=0}^{k-1} u_i$<br />

Each information word u is mapped onto one code word x (bijective mapping)<br />

Example: k = 3, n = 4, binary digits {0,1}:<br />

$\mathbf{x} = [x_0\ x_1\ x_2\ x_3] = [\mathbf{u}\ p] = [u_0\ u_1\ u_2\ p]$<br />

How many information words u of length k = 3 exist? $2^k = 2^3 = 8$<br />

How many binary words of length n = 4 exist? $2^n = 2^4 = 16$<br />

Code table: $2^3 = 8$ possible code words (out of $2^4 = 16$ words)<br />

u = 000 → x = 000 0<br />

u = 001 → x = 001 1<br />

u = 010 → x = 010 1<br />

u = 011 → x = 011 0<br />

u = 100 → x = 100 1<br />

u = 101 → x = 101 0<br />

u = 110 → x = 110 0<br />

u = 111 → x = 111 1<br />

Even parity under modulo-2 sum, i.e. the sum of all elements of a valid code word x is always 0<br />

Set of all code words: {0000, 0011, 0101, 0110, 1001, 1010, 1100, 1111}<br />

e.g., y = [0001] is not a valid code word, so a transmission error occurred<br />

Code enables error detection (all error patterns of odd weight) but no error correction<br />
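The single parity check code is small enough to enumerate completely. The following sketch (function names are illustrative) builds the code table for k = 3 and shows that a single bit error is detected while a double error is not.<br />

```python
from itertools import product

def spc_encode(u):
    """Append one parity bit so that the modulo-2 sum of the code word is 0."""
    parity = 0
    for bit in u:
        parity ^= bit
    return list(u) + [parity]

def spc_check(y) -> bool:
    """Return True if y has even parity, i.e. y is a valid code word."""
    s = 0
    for bit in y:
        s ^= bit
    return s == 0

codebook = [spc_encode(u) for u in product((0, 1), repeat=3)]
for x in codebook:
    print("".join(map(str, x)))

print(spc_check([0, 0, 0, 1]))  # one flipped bit: parity violated, error detected
print(spc_check([0, 0, 1, 1]))  # two flipped bits of 0000: again a valid code word
```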

Example: Repetition Code<br />

Code word $\mathbf{x} = [x_0\ x_1\ \ldots\ x_{n-1}]$ contains n repetitions of the information word $\mathbf{u} = [u_0]$<br />

Set of all code words for n = 4: {0000, 1111}<br />

Transmission over BSC with $P_e = 0.1$ yields the receive vector $\mathbf{y} = [y_0\ y_1\ \ldots\ y_{n-1}]$<br />

Maximum Likelihood Decoding:<br />

$\hat{u}_0 = \arg\max_{u_0 \in \{0,1\}} \Pr\{\mathbf{y} \mid u_0\} = \arg\max_{\mathbf{x}} \Pr\{\mathbf{y} \mid \mathbf{x}\}$, $\hat{\mathbf{u}} = [\hat{u}_0]$<br />

Majority Decoder for odd n:<br />

$\hat{u}_0 = \begin{cases} 0 & \text{for } z < n/2 \\ 1 & \text{for } z > n/2 \end{cases}$ with $z = \sum_{i=0}^{n-1} y_i$<br />

Error rate performance for n = 3 and n = 5:<br />

$P_{\text{err},\,n=3} = \Pr\{2 \text{ errors}\} + \Pr\{3 \text{ errors}\} = \binom{3}{2}(1 - P_e)\,P_e^2 + \binom{3}{3} P_e^3 = 3 \cdot 0.9 \cdot 0.01 + 1 \cdot 0.001 = 0.028$<br />

$P_{\text{err},\,n=5} = \binom{5}{3}(1 - P_e)^2 P_e^3 + \binom{5}{4}(1 - P_e)\,P_e^4 + \binom{5}{5} P_e^5 \approx 0.0086$<br />

Lower error probability, but larger transmission bandwidth required<br />
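The majority decoder and the resulting error rates can be reproduced with a short calculation. This is a minimal sketch for odd n; the function names are illustrative.<br />

```python
from math import comb

def majority_decode(y) -> int:
    """Decide for 1 if more than half of the received bits are 1 (odd n)."""
    z = sum(y)
    return 1 if z > len(y) / 2 else 0

def repetition_error_rate(n: int, pe: float) -> float:
    """Probability that more than n/2 of the n transmitted bits are flipped."""
    return sum(comb(n, m) * pe ** m * (1.0 - pe) ** (n - m)
               for m in range((n + 1) // 2, n + 1))

print(majority_decode([1, 0, 1]))
for n in (3, 5):
    print(f"n = {n}: P_err = {repetition_error_rate(n, 0.1):.5f}")
```

For $P_e$ = 0.1 this gives 0.028 for n = 3 and 0.00856 for n = 5, matching the values above.<br />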

Example: Systematic (7,4)-Hamming Code<br />

Codeword x = g(u) consists of the information word u and the parity word p<br />

$\mathbf{x} = [x_0\ x_1\ x_2\ x_3\ x_4\ x_5\ x_6] = [\mathbf{u}\ \mathbf{p}] = [u_0\ u_1\ u_2\ u_3\ p_0\ p_1\ p_2]$<br />

Parity bits:<br />

$p_0 = u_1 \oplus u_2 \oplus u_3$<br />

$p_1 = u_0 \oplus u_2 \oplus u_3$<br />

$p_2 = u_0 \oplus u_1 \oplus u_3$<br />

$2^4 = 16$ possible code words (out of $2^7 = 128$)<br />

u = 0000 → x = 0000 000<br />

u = 0001 → x = 0001 111<br />

u = 0010 → x = 0010 110<br />

u = 0011 → x = 0011 001<br />

u = 0100 → x = 0100 101<br />

u = 0101 → x = 0101 010<br />

u = 0110 → x = 0110 011<br />

u = 0111 → x = 0111 100<br />

u = 1000 → x = 1000 011<br />

u = 1001 → x = 1001 100<br />

u = 1010 → x = 1010 101<br />

u = 1011 → x = 1011 010<br />

u = 1100 → x = 1100 110<br />

u = 1101 → x = 1101 001<br />

u = 1110 → x = 1110 000<br />

u = 1111 → x = 1111 111<br />

Even parity under modulo-2 sum, i.e., the sum within each circle is 0<br />

Example: Information word u = [1 0 0 0] generates code word x = [1 0 0 0 0 1 1], for which all parity rules are fulfilled<br />

[Figure: three overlapping circles, one per parity bit $p_0$, $p_1$, $p_2$, each containing the information bits of its parity rule, shown for x = [1 0 0 0 0 1 1]]<br />
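The systematic encoder follows directly from the three parity rules that the code table implies ($p_0$ checks $u_1, u_2, u_3$; $p_1$ checks $u_0, u_2, u_3$; $p_2$ checks $u_0, u_1, u_3$). The sketch below regenerates the table; the function name is an illustrative choice.<br />

```python
from itertools import product

def hamming74_encode(u):
    """Systematic (7,4) Hamming encoder: x = [u0 u1 u2 u3 p0 p1 p2]."""
    u0, u1, u2, u3 = u
    p0 = u1 ^ u2 ^ u3
    p1 = u0 ^ u2 ^ u3
    p2 = u0 ^ u1 ^ u3
    return [u0, u1, u2, u3, p0, p1, p2]

# Enumerate all 2^4 = 16 information words and print the code table.
codebook = {u: hamming74_encode(u) for u in product((0, 1), repeat=4)}
for u, x in codebook.items():
    print("".join(map(str, u)), "->", "".join(map(str, x[:4])), "".join(map(str, x[4:])))
```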

Basic Approach for Decoding the (7,4)-Hamming Code<br />

Maximum Likelihood Decoding: Find the information word u whose encoding (code word) x = g(u) differs from the received vector y in the fewest bits<br />

Example 1: Error vector e = [0 1 0 0 0 0 0] flips the second bit, leading to the receive vector y = [1 1 0 0 0 1 1]<br />

Parity is not even in two circles (syndrome $\mathbf{s} = [s_0\ s_1\ s_2] = [1\ 0\ 1]$)<br />

Task: Find the smallest set of flipped bits that can cause this violation of the parity rules<br />

Question: Is there a unique bit that lies inside all unhappy circles and outside the happy circles? If so, the flipping of that bit would account for the observed syndrome<br />

Yes, if bit $y_1$ is flipped, all parity rules are valid again, so decoding was successful<br />

[Figure: parity circles for y = [1 1 0 0 0 1 1] with syndrome bits $s_0 = 1$, $s_1 = 0$, $s_2 = 1$]<br />

Example 2: y = [1 0 0 0 0 1 0]<br />

Only one parity check is violated<br />

Only $y_6$ lies inside this unhappy circle but outside the happy circles<br />

Flip $y_6$ (i.e. $p_2$)<br />

Example 3: y = [1 0 0 1 0 1 1]<br />

All checks are violated, only $y_3$ lies inside all three circles<br />

$y_3$ (i.e. $u_3$) is the suspected bit<br />

Example 4: y = [1 0 0 1 0 0 1]<br />

Two errors occurred (bits $y_3$ and $y_5$)<br />

Syndrome s = [1 0 1] indicates single bit error $y_1$<br />

Optimal decoding flips $y_1$ (i.e. $u_1$), leading to a decoding result with 3 errors<br />

[Figure: parity circles for Examples 2 to 4]<br />
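The circle argument amounts to a syndrome lookup: each single-bit error position produces its own pattern of violated parity checks. The sketch below assumes the parity rules $p_0 = u_1 \oplus u_2 \oplus u_3$, $p_1 = u_0 \oplus u_2 \oplus u_3$, $p_2 = u_0 \oplus u_1 \oplus u_3$ and zero-based bit positions; the names `syndrome`, `SINGLE_ERROR`, and `decode` are illustrative.<br />

```python
def syndrome(y):
    """One syndrome bit per parity circle; 1 marks a violated (unhappy) circle."""
    y0, y1, y2, y3, y4, y5, y6 = y
    return (y1 ^ y2 ^ y3 ^ y4, y0 ^ y2 ^ y3 ^ y5, y0 ^ y1 ^ y3 ^ y6)

# Map each nonzero syndrome to the single bit position that explains it.
SINGLE_ERROR = {syndrome([1 if j == i else 0 for j in range(7)]): i for i in range(7)}

def decode(y):
    """Flip the single bit indicated by the syndrome; return (u_hat, syndrome)."""
    s = syndrome(y)
    y = list(y)
    if s != (0, 0, 0):
        y[SINGLE_ERROR[s]] ^= 1
    return y[:4], s

examples = {
    1: [1, 1, 0, 0, 0, 1, 1],
    2: [1, 0, 0, 0, 0, 1, 0],
    3: [1, 0, 0, 1, 0, 1, 1],
    4: [1, 0, 0, 1, 0, 0, 1],
}
for number, y in examples.items():
    u_hat, s = decode(y)
    print(f"Example {number}: syndrome = {s}, decoded u = {u_hat}")
```

Examples 1 to 3 recover u = [1 0 0 0]. In Example 4 the two errors produce the same syndrome as a single error in $y_1$, so the decoder outputs a wrong information word.<br />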

Error Rate Performance for BSC with P e = 0.1<br />

With increasing n the BER decreases, but so does the code rate $R_c$ (throughput)<br />

[Figure: BER $P_b$ ($10^0$ down to $10^{-8}$) versus code rate $R_c = k/n$ (0 to 1). R(n): repetition codes (RPC) for n = 1, 3, 5, 9, 13, 17, 21, 25, 29. H(n,k): Hamming codes H(7,4), H(15,11), H(31,26), H(63,57), H(127,120). B(n,k): BCH codes B(63,7), B(127,15), B(127,22), B(127,36), B(255,29), B(255,37), B(255,45), B(255,47), B(511,67), B(511,76). Regions are labeled "achievable" and "not achievable", with an arrow toward "more useful codes"]<br />

Trade-off between BER and rate<br />

What points ($R_c$, $P_b$) are achievable?<br />

Shannon: The maximum rate $R_c$ at which communication is possible with arbitrarily small $P_b$ is called the capacity of the channel<br />

Capacity of BSC with $P_e = 0.1$: $C = 1 + P_e \log_2 P_e + (1 - P_e) \log_2 (1 - P_e) \approx 0.53$ bit<br />

With $R_c > 0.53$ no reliable communication is possible for $P_e = 0.1$!<br />
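The capacity value can be checked numerically and compared with the rates of some of the codes from the figure. This is a minimal sketch; the function name and the selection of codes are illustrative choices.<br />

```python
from math import log2

def bsc_capacity(pe: float) -> float:
    """C = 1 + Pe*log2(Pe) + (1 - Pe)*log2(1 - Pe), in bit per channel use."""
    if pe in (0.0, 1.0):
        return 1.0  # limiting value, since p*log2(p) tends to 0 for p -> 0
    return 1.0 + pe * log2(pe) + (1.0 - pe) * log2(1.0 - pe)

capacity = bsc_capacity(0.1)
print(f"C = {capacity:.3f} bit")

# Code rates Rc = k/n of a few codes named in the figure
for name, n, k in (("R(3)", 3, 1), ("H(7,4)", 7, 4), ("B(127,36)", 127, 36)):
    rate = k / n
    side = "below" if rate < capacity else "above"
    print(f"{name}: Rc = {rate:.3f} ({side} capacity)")
```

A rate below capacity only means that reliable transmission at that rate is possible in principle with a suitable code; it says nothing about the BER of the particular code listed.<br />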